But you are forced to run the p-cores at their max power right?!

Of course not. That's why I talked about core-scheduling and dialing in the optimal blend of clock speeds for a given power target and number of threads.

That's why this statement makes sense to you?!

My point about comparing efficiency at peak utilization was to show that the P-cores' efficiency becomes worse than the worst of the E-cores', past a certain point.

Much worse. If you focus only on the overlap, you can easily lose sight of that.

On the x264 workload, that threshold seems to be the P-cores running at 4.4 GHz. You can see that in this graph, based on where they draw equal on the Y-axis:

Yes you are not wrong as long as you use enough qualifiers, that's what I already proposed you do.

Complex data defies simple explanations. Nuances matter.

The picture is fairly complex, but not surprisingly so. We know the efficiency of just about every core in existence falls off a cliff once you push clock speeds high enough, so it's almost a given that there will be a crossover point. However, nobody is saying you have to run every core in the CPU at the same clock speed or power level. The OS driver that decides what clock speeds to run the different cores at needs to balance the performance demands (i.e. how many threads are running) against the efficiency curves for each of the cores.
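To make the crossover idea concrete, here's a toy sketch of it. Everything in it is an assumption on my part: the cubic power model, the relative IPC values, the wattage constants, and the 4.0 GHz E-core ceiling are all invented, and none of it comes from the measured data. It only shows the mechanism: once the P-core's perf/W curve is falling, there is some clock where it drops below the E-core's perf/W at the E-core's top clock.

```python
# Toy model only: every constant below is invented for illustration,
# not taken from any measurement.

def power_watts(freq_ghz, static_w, k):
    """Assumed power model: fixed static draw plus a cubic dynamic term."""
    return static_w + k * freq_ghz ** 3


def efficiency(freq_ghz, ipc, static_w, k):
    """Relative work per joule: (ipc * frequency) / power."""
    return ipc * freq_ghz / power_watts(freq_ghz, static_w, k)


# Hypothetical core parameters (assumptions, not real silicon).
P_CORE = {"ipc": 1.0, "static_w": 0.5, "k": 0.12}
E_CORE = {"ipc": 0.6, "static_w": 0.2, "k": 0.08}
E_CORE_MAX_GHZ = 4.0  # assumed E-core ceiling


def crossover_ghz(lo_tenths=20, hi_tenths=55):
    """Scan P-core clocks in 0.1 GHz steps and return the first one whose
    efficiency is below the E-core's efficiency at its maximum clock.
    Returns None if no such clock exists in the scanned range."""
    e_at_max = efficiency(E_CORE_MAX_GHZ, **E_CORE)
    for tenths in range(lo_tenths, hi_tenths + 1):
        f = tenths / 10
        if efficiency(f, **P_CORE) < e_at_max:
            return f
    return None


print(crossover_ghz())
```

Where the crossover lands is purely a property of the made-up constants. Swap in real per-core curves and the same scan would find the real threshold.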

You can also operate both types of cores at their sweet-spot, imagine that, crazy right?!

That was just a thought experiment. In reality, a user expects a certain level of responsiveness or throughput, is running a certain number of threads, and is prepared for a certain amount of power/heat to be dissipated. If we take the simplistic case of a performance-oriented scheduler, it will nearly always push the cores past their "sweet spot" in order to maximize performance. That's often not a matter of simply running any of the cores at max speed/power, unless the number of threads is low or the power limit (and cooling capacity) is very high.
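Here's a rough sketch of that simplistic performance-oriented policy, under loud assumptions: one busy thread per core, an invented cubic power model, invented constants, and a greedy loop that is my own stand-in rather than how any real OS or firmware governor works. It hands out 0.1 GHz steps to whichever core buys the most performance per extra watt until the package budget is used up.

```python
# Toy model only: all constants and the greedy policy are assumptions.
STEP_GHZ = 0.1

P_CORE = {"ipc": 1.0, "static_w": 0.5, "k": 0.12, "f_min": 1.0, "f_max": 5.5}
E_CORE = {"ipc": 0.6, "static_w": 0.2, "k": 0.08, "f_min": 1.0, "f_max": 4.0}


def power_watts(core, freq_ghz):
    """Assumed power model: static draw plus a cubic dynamic term."""
    return core["static_w"] + core["k"] * freq_ghz ** 3


def total_power(cores, freqs):
    return sum(power_watts(c, f) for c, f in zip(cores, freqs))


def allocate_clocks(cores, budget_w):
    """Greedily raise clocks, one step at a time, always picking the core
    with the best marginal performance per extra watt that still fits."""
    freqs = [c["f_min"] for c in cores]
    used = total_power(cores, freqs)
    while True:
        best = None  # (gain, index, new_freq, extra_watts)
        for i, core in enumerate(cores):
            new_f = round(freqs[i] + STEP_GHZ, 1)
            if new_f > core["f_max"]:
                continue  # this core is already at its ceiling
            extra = power_watts(core, new_f) - power_watts(core, freqs[i])
            if used + extra > budget_w:
                continue  # this step would blow the power budget
            gain = core["ipc"] * STEP_GHZ / extra
            if best is None or gain > best[0]:
                best = (gain, i, new_f, extra)
        if best is None:
            return freqs  # no step fits anymore
        _, i, new_f, extra = best
        freqs[i] = new_f
        used += extra


# Few threads, generous budget: the cores can simply run flat out.
few_threads = allocate_clocks([P_CORE] * 2, budget_w=60.0)
# Many threads, tight budget: the budget runs out well before any ceiling.
many_threads = allocate_clocks([P_CORE] * 2 + [E_CORE] * 8, budget_w=15.0)
print(few_threads)
print(many_threads)
```

In this toy setup, the two-thread case ends with both P-cores at their ceiling, while the ten-thread case stops with every core below its maximum clock because the budget is exhausted first.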

When you are accusing people of cherry-picking you shouldn't be cherry-picking yourself...or I guess this is more of a goal post shifting.

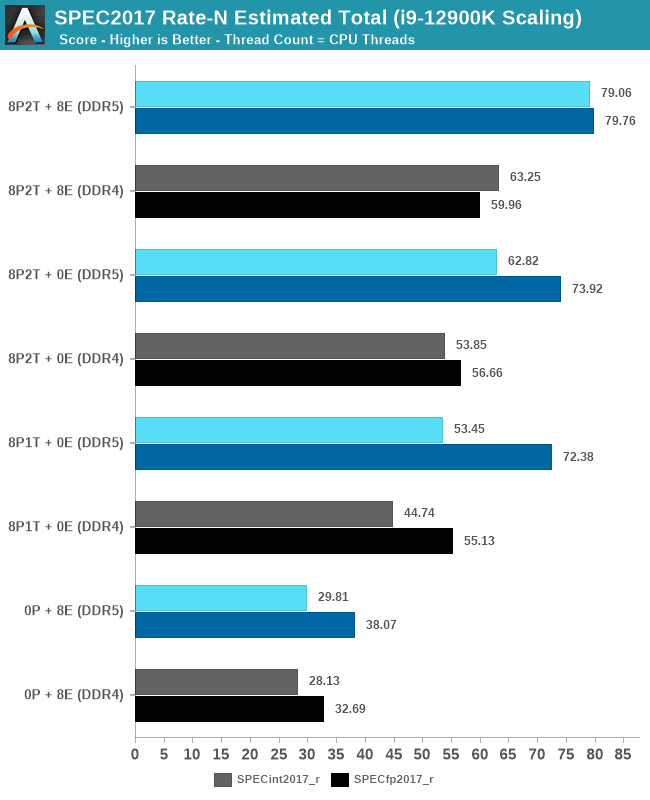

I'm not. I just included a dataset you omitted. I've never shifted goalposts, either. My contention is the same as it always was: E-cores are a more energy-efficient way to scale performance.

The same is not better, there is a whole range of clocks that the e-cores are not more power-efficient which is what you claimed.

The problem is that you're taking this raw data outside the context of how it's used. In practice, you're not running both P-cores and E-cores at the same frequency, which is the only way that graph shows parity. In practice, you're usually running the P-cores at significantly higher frequency than the E-cores, which is why adding E-cores to the mix helps improve the overall efficiency of the solution.

As I said, core scheduling is complex and this is merely raw data.

The e-cores are only more power-efficient if you add a ton of qualifiers, but then you can do the same with the p-cores.

That's a false equivalence. The precise interpretation of the data matters. The tagline of Chips & Cheese is: "The Devil is in the Details", which is why they do such in-depth micro-benchmarking, mixed with extensive analysis. Even when I don't agree with their analysis, the data is still very useful.

For the same reason they do it on the desktop, the fewer expensive p-cores they need to use per sold unit increases the money they make per unit and also allows them to make more units to make even more money.

Using twice as many p-cores in a product for example would cut the amount of product they can make in half.

Not to agree with you, but just to explore this line of reasoning: you're saying Intel intentionally builds a mobile product with worse battery-life, just to save money?

At certain price-points, I can see the argument. However, it doesn't hold up when we're talking about even their premium, low-power models. Let's look at some examples, like the i7-1365U, which seems to be their highest-end U-series CPU and has 8 E-cores + 2 P-cores.

If what you're saying is right, you should be able to point to some Intel mobile CPU with the same or lower power-budget and more P-cores.