> Show me one benchmark that shows that happening because for the last forever years every single benchmark is with the clocks running pedal to the metal, either with unlimited power or with a certain power target but always with all cores running as fast as the power allows.

Maybe it would help if we work an example. So, let's consider an all-core integer workload, similar to the 7zip case measured here:

For the CPU, consider the i9-12900, once it reaches the 65 W point in its operating envelope.

So, for optimal throughput, you'd want to schedule:

8x E-cores @ 3.1 GHz, consuming 27.86 W and providing 40.50 MB/s of throughput

8x P-cores @ 2.5 GHz, consuming 29.38 W and providing 40.82 MB/s of throughput

---

Total: 57.24 W; 81.32 MB/s.

If you just ran the 8 P-cores flat out, then you'd have to go with about 56 W for them, which achieves about 56 MB/s of throughput. That's only about 69% of the performance attained with the 8 + 8 combination.

If we consider a hypothetical CPU with 12 P-cores, then you could hit about 54 W at 69 MB/s of throughput. That's still only about 85% of the 8 + 8 combination considered above.
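To make the arithmetic above easy to re-check, here is a minimal sketch that adds up the quoted per-cluster figures and expresses the P-core-only alternatives as a percentage of the 8 + 8 result. The variable names and the helper `relative_throughput` are made up for illustration; the numbers are just the ones quoted in this post.

```python
# (watts, MB/s) per 8-core cluster, as quoted above for the i9-12900.
# The dictionary layout is illustrative only.
clusters = {
    "8x E-cores @ 3.1 GHz": (27.86, 40.50),
    "8x P-cores @ 2.5 GHz": (29.38, 40.82),
}

hybrid_watts = sum(watts for watts, _ in clusters.values())
hybrid_mbps = sum(mbps for _, mbps in clusters.values())

# P-core-only alternatives quoted above, (watts, MB/s), both approximate.
alternatives = {
    "8x P-cores flat out": (56.0, 56.0),
    "12x P-cores (hypothetical)": (54.0, 69.0),
}


def relative_throughput(alt_mbps, baseline_mbps):
    """Return alt throughput as a percentage of the baseline throughput."""
    return 100.0 * alt_mbps / baseline_mbps


print(f"8 + 8: {hybrid_watts:.2f} W, {hybrid_mbps:.2f} MB/s")
for name, (watts, mbps) in alternatives.items():
    pct = relative_throughput(mbps, hybrid_mbps)
    print(f"{name}: {watts:.0f} W, {mbps:.0f} MB/s, {pct:.0f}% of 8 + 8")
```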

> how is the battery life at very low power where the e-cores are the most efficient?

If you're saying they're only more efficient at 1.1 GHz, then realistically nobody uses those frequencies. So, either you're wrong or Intel is wrong.

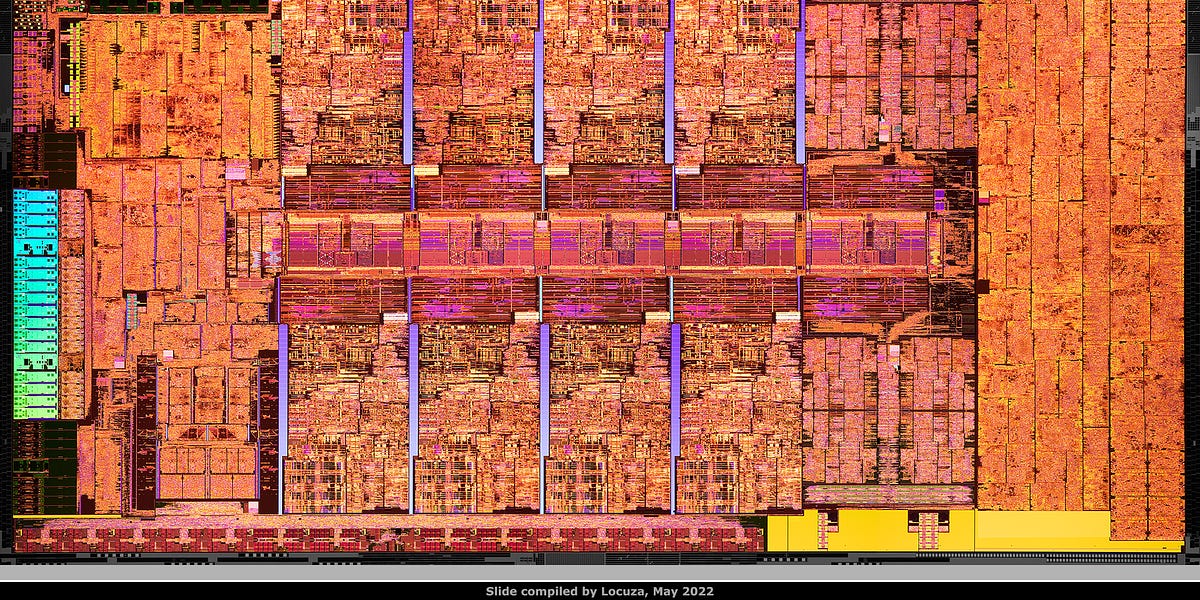

> Look at this picture, if you can allow your CPU to use more than 90W and unless you have to run every core in your CPU at full power all the time then you can replace every single e-core with the equal amount of p-cores and make those replacement p-cores use the same amount of power as the e-cores do now and you would get higher performance for the same amount of total power,

Where do you get 90 W?

Given that example, you could have a 16 P-core CPU that uses about 70 W @ 2.5 GHz and is the same speed as a 16 E-core CPU. That seems pointless, to me. If that's as fast as you're going to run those P-cores, then save money and go with 16x E-cores, instead.

Anyway, it's unrealistic to just make an arbitrarily large CPU. You should really compare against something that's approximately the same cost (i.e. area). So, that would mean about 10 P-cores.

Working with a 95 W package power budget, if we allow 10 W for uncore, then here's the optimal 8 + 8 configuration:

8x E-cores @ 27.9 W -> 40.5 MB/s

8x P-cores @ 56.0 W -> 56.0 MB/s

---

Total: 83.9 W; 96.5 MB/s

Using 10 P-cores, you'd get only 76.25 MB/s in 85 W. Even with 12 P-cores, I get only 84 MB/s.
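The same comparison can be sketched in a few lines: check that the 8 + 8 configuration fits in the core budget left after uncore, then work out how far the P-core-only options fall short. All names here are illustrative, and the throughput figures are simply the ones quoted above.

```python
PACKAGE_BUDGET_W = 95.0  # package power budget used in this example
UNCORE_W = 10.0          # allowance for uncore, as assumed above
core_budget_w = PACKAGE_BUDGET_W - UNCORE_W

# 8 + 8 configuration: E-core cluster plus P-core cluster.
hybrid_w = 27.9 + 56.0
hybrid_mbps = 40.5 + 56.0

# P-core-only throughput figures quoted above (MB/s).
p_only_mbps = {"10x P-cores": 76.25, "12x P-cores": 84.0}

fits_budget = hybrid_w <= core_budget_w

# Percentage by which each P-core-only option trails the 8 + 8 result.
shortfall_pct = {
    name: 100.0 * (1.0 - mbps / hybrid_mbps)
    for name, mbps in p_only_mbps.items()
}

print(f"core budget: {core_budget_w:.0f} W, 8 + 8 uses {hybrid_w:.1f} W")
for name, pct in shortfall_pct.items():
    print(f"{name}: {pct:.0f}% slower than 8 + 8")
```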

> unless you are forced to use your desktop PC at below 90W but still with all its cores enabled for some reason

Oh, you mean like the i9-12900 (65 W)?

Intel® Core™ i9-12900 Processor (30M Cache, up to 5.10 GHz) - Product Specifications | Intel


I actually have one of these in my work PC. It's a Dell compact desktop and that's the fastest CPU we could configure it with.
