My reflection, based on a few reviews, is that with RT off there seems to be very little actual difference in image quality between the "Low" and "Ultra" settings.

In TH's examples I can see differences between the pictures, but I'm unable to tell which picture uses which setting.

In the examples provided by SweClockers the only notable difference is in the leaves of a palm tree.

So as far as I'm concerned there doesn't seem to be any reason to use settings above Medium (unless also using RT).

It's a standard procedure for driver development.

Video card drivers come in two types: the generic driver, meant to be "one size fits all", and optimized application-specific drivers that supersede the generic one when applicable.

Nvidia has many employees in driver development and therefore excels at creating optimized drivers for most games. As a result, it also doesn't spend that much effort on the generic driver. The resources for development aren't infinite though, so older architectures also get less attention when it comes to optimizing for new games.

AMD has significantly fewer developers and therefore spends more resources on its generic drivers, while not being that good with game-specific optimizations.

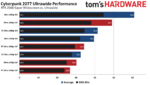

The result of this is clearly visible in the test results here: just compare the performance of GTX 1060 vs RX 580 at 1080p. These two cards were essentially equal in performance at launch, but in this game, where I expect both to pretty much rely on their generic drivers, the AMD card is nearly twice as fast!

As I've noted elsewhere, a lot of the differences in performance also tend to be thanks to DX12. AMD's GCN architecture with its asynchronous compute engines is simply better at handling generic DX12 code. Extracting good performance from Pascal GPUs in DX12 requires a lot of fine tuning on the developer side, and there's not nearly as much that can be done with drivers. My understanding (from talking with AMD and Nvidia over the years) is that with DX12 games, a lot of the time it's Nvidia looking for things that the developers have done poorly and working with them to fix the code.

With DX11, it's easier to do wholesale shader replacement -- so if a game does a graphics effect that isn't really written efficiently, the drivers can have a precompiled optimized version, detect the game, and replace the generic shader code with optimized code. The whole point of a low-level API is to give developers direct access to the hardware, which means the devs can seriously screw things up. Look at Total War: Warhammer 2 with its DX12 (still beta!) code, which runs far worse on Nvidia's Pascal GPUs than on AMD's GCN hardware. Actually, I think at one point even AMD's newer architectures performed better in DX11 mode. Shadow of the Tomb Raider, Metro Exodus, Borderlands 3, and many other games that can run in DX12 or DX11 modes still run better in DX11 on Nvidia (except sometimes at lower resolutions and quality settings -- so 1080p medium might be faster in DX12 on RTX 2080 and above, but 1080p ultra can still favor DX11 mode). SotTR and Metro are even Nvidia-promoted games -- Nvidia worked hard to improve DX12 performance, since DXR requires DX12. But at 1440p ultra, you'll get 5-10% higher fps in DX11 mode last I checked (and of course can't enable DXR).

The 2080 Super vs. 3060 Ti isn't really surprising when you're running with RT Ultra preset and DLSS. Nvidia put quite a bit more effort into optimizing the RT and Tensor cores on Ampere. So 3060 Ti has 38 RT cores that are theoretically about 70 percent faster per core than Turing RT cores. 2080 Super has 48 RT cores. 38 * 1.7 ≈ 65 Turing-equivalent RT cores, and 65 / 48 ≈ 1.35. That's potentially up to 35 percent faster in RT code. Tensor cores are a similar story: 3060 Ti has 152 Tensor cores, but each is twice as powerful as a Turing Tensor core, and with sparsity is up to four times as powerful. So 152 Ampere Tensor cores with sparsity are roughly equal to 608 Turing Tensor cores, and the 2080 Super only has 384 Tensor cores. In non-RT performance, the 2080 Super is much closer to the 3060 Ti, but even in our review of the 3060 Ti we found it was on average 5 percent faster than 2080 Super in traditional rasterization performance. In ray tracing games, the average was still only about 5%, but Control for example has the 3060 Ti leading by 12-15%, and in Boundary it's also around 10% faster. So in Cyberpunk seeing a 15% lead isn't impossible.
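For anyone who wants to check the back-of-the-envelope math above, here is a rough Python sketch. The core counts and per-core multipliers are just the theoretical figures quoted in this post, not measurements, and the helper name `turing_equivalent` is made up for illustration.

```python
# Core counts and per-core scaling factors as quoted in the post above.
# These are theoretical peak estimates, not benchmark results.
RT_CORES_3060TI = 38
RT_CORES_2080S = 48
AMPERE_RT_PER_CORE = 1.7        # ~70 percent faster per RT core than Turing

TENSOR_CORES_3060TI = 152
TENSOR_CORES_2080S = 384
AMPERE_TENSOR_DENSE = 2.0       # twice a Turing Tensor core
AMPERE_TENSOR_SPARSE = 4.0      # up to four times with sparsity


def turing_equivalent(cores: int, per_core_speedup: float) -> float:
    """Express a number of Ampere cores as an equivalent count of Turing cores."""
    return cores * per_core_speedup


# RT: 38 * 1.7 = 64.6 Turing-equivalent cores vs. 48 on the 2080 Super.
rt_equiv = turing_equivalent(RT_CORES_3060TI, AMPERE_RT_PER_CORE)
rt_ratio = rt_equiv / RT_CORES_2080S

# Tensor: 152 * 4 = 608 with sparsity, 152 * 2 = 304 without.
tensor_sparse_equiv = turing_equivalent(TENSOR_CORES_3060TI, AMPERE_TENSOR_SPARSE)
tensor_dense_equiv = turing_equivalent(TENSOR_CORES_3060TI, AMPERE_TENSOR_DENSE)
tensor_sparse_ratio = tensor_sparse_equiv / TENSOR_CORES_2080S
tensor_dense_ratio = tensor_dense_equiv / TENSOR_CORES_2080S

print(f"RT: {rt_equiv:.1f} Turing-equivalent cores, ratio {rt_ratio:.2f}")
print(f"Tensor (sparse): {tensor_sparse_equiv:.0f}, ratio {tensor_sparse_ratio:.2f}")
print(f"Tensor (dense):  {tensor_dense_equiv:.0f}, ratio {tensor_dense_ratio:.2f}")
```

Note that by these same numbers the Tensor advantage only shows up with sparsity: at the plain 2x figure, 304 Turing-equivalent cores is below the 2080 Super's 384. These are ceilings on paper, so real-game gaps like the 5-15% mentioned above will be smaller.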

And yes, Nvidia worked extensively with CDPR to optimize Cyberpunk 2077 for Ampere GPUs as much as it could. It probably put plenty of effort into Turing as well, so that every RTX card plays the game quite well, but was it equivalent effort? Almost certainly not. This is the biggest game of the year, by far -- possibly the biggest game launch of the decade. This is supposed to be the game to move RT into the "must have" category. While I like the end result in terms of visuals, I don't think it gets there. I'm still surprised at how good the game looks even at low or medium settings, though outdoor areas don't necessarily convey the enhancements you can get from RT as well as some indoor areas. But if your GPU can't handle RT Ultra, you can still enjoy the game at medium settings -- bugs and all.