13900k

TechPowerUp Editor's Choice

Guru3D Recommended

PCMag Editors' Choice, Best of the Year 2022. Note: AMD doesn't get Best of the Year.

12900k

Guru3D Recommended. Note that most AMD CPUs are recommended as well. Only the 7800X3D is rated higher; the 5800X3D is recommended.

5 star review out of 5

PCMag Editors' Choice, Best of the Year 2021. Note: AMD doesn't get Best of the Year.

AnandTech

7950x3d spot the award

Ryzen 9 7900X3D spot the award

7900/7700 spot the award

Burnout issues

5800X3D review spot the award

Award. This was after the 11900K but before the 5800X3D and 12900K. The 11900K was not a good CPU release from Intel.

I really had to look for that award. The only AMD CPU that came close to Intel for game performance was the 5800X3D, and that got no award. Cherry-picking, are we?

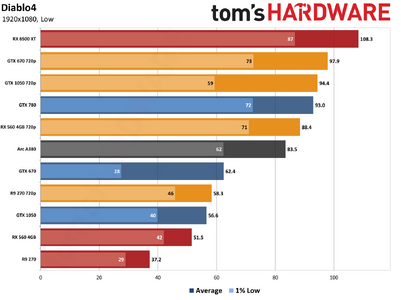

The other problem with AnandTech's CPU testing is that most of those gaming tests are fundamentally useless. 360p Low? Nonsense! It has almost no bearing on the way people actually play games. The low resolution means texture sizes and cache hits are radically different than at 1080p! The same applies to 1440p and 4K Low: Minimum quality settings fundamentally change what the games are doing. There are plenty of games where a big chunk of the FPS drop in going from medium to ultra settings comes from all the additional geometry and stuff that's added to the rendering process.

So of the four tests that Ian ran for the games, three don't actually convey practical data. And in the one test that is useful (1080p max), there's far less of a difference between the CPUs. Focusing there, here are the numbers comparing AMD's 5900X (usually the fastest) versus the i7-10700K (which is basically equal to an i9-9900K):

Chernobylite: 3% lead

Civilization VI: 51% lead (highly questionable benchmark, given how much of an outlier this is)

Deus Ex Mankind Divided: 1.5% loss

Final Fantasy XIV: 11% lead

Final Fantasy XV: 5% loss

World of Tanks: 0.5% loss

Borderlands 3: 3% lead

F1 2019: 0.6% loss

Far Cry 5: 8% lead

Gears Tactics: 10% lead

Grand Theft Auto V: 5% lead

Red Dead Redemption 2: 1% loss

Strange Brigade: 1% loss

So, outside of Civ6, it wasn't a massive change in performance. And I often wonder about some of the results from Ian, since he's usually focused on automated testing and that can cause anomalies that he wouldn't notice because he's not actually watching the screen and testing the games.
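To put a rough number on "outside of Civ6, it wasn't a massive change," here is a quick sketch that averages the 1080p max results listed above, with and without the Civilization VI outlier. The percentages are copied from the list (positive means a 5900X lead, negative a loss). The helper name `average_lead` is made up for illustration, and a plain arithmetic mean of percentages is a simplification, not how any review site actually scores CPUs.

```python
from statistics import mean

# Percent lead (+) or loss (-) of the 5900X vs. the i7-10700K at 1080p max,
# copied from the list above.
leads = {
    "Chernobylite": 3.0,
    "Civilization VI": 51.0,
    "Deus Ex Mankind Divided": -1.5,
    "Final Fantasy XIV": 11.0,
    "Final Fantasy XV": -5.0,
    "World of Tanks": -0.5,
    "Borderlands 3": 3.0,
    "F1 2019": -0.6,
    "Far Cry 5": 8.0,
    "Gears Tactics": 10.0,
    "Grand Theft Auto V": 5.0,
    "Red Dead Redemption 2": -1.0,
    "Strange Brigade": -1.0,
}


def average_lead(results, exclude=()):
    """Arithmetic mean of the percent leads, skipping any excluded games."""
    values = [pct for game, pct in results.items() if game not in exclude]
    return mean(values)


overall = average_lead(leads)
without_civ6 = average_lead(leads, exclude={"Civilization VI"})

print(f"All {len(leads)} games: {overall:.1f}% average lead")
print(f"Excluding Civilization VI: {without_civ6:.1f}% average lead")
```

With these inputs the average lead comes out to roughly 6.3% across all 13 games and roughly 2.5% once Civilization VI is dropped, so a single outlier accounts for more than half of the headline gap.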

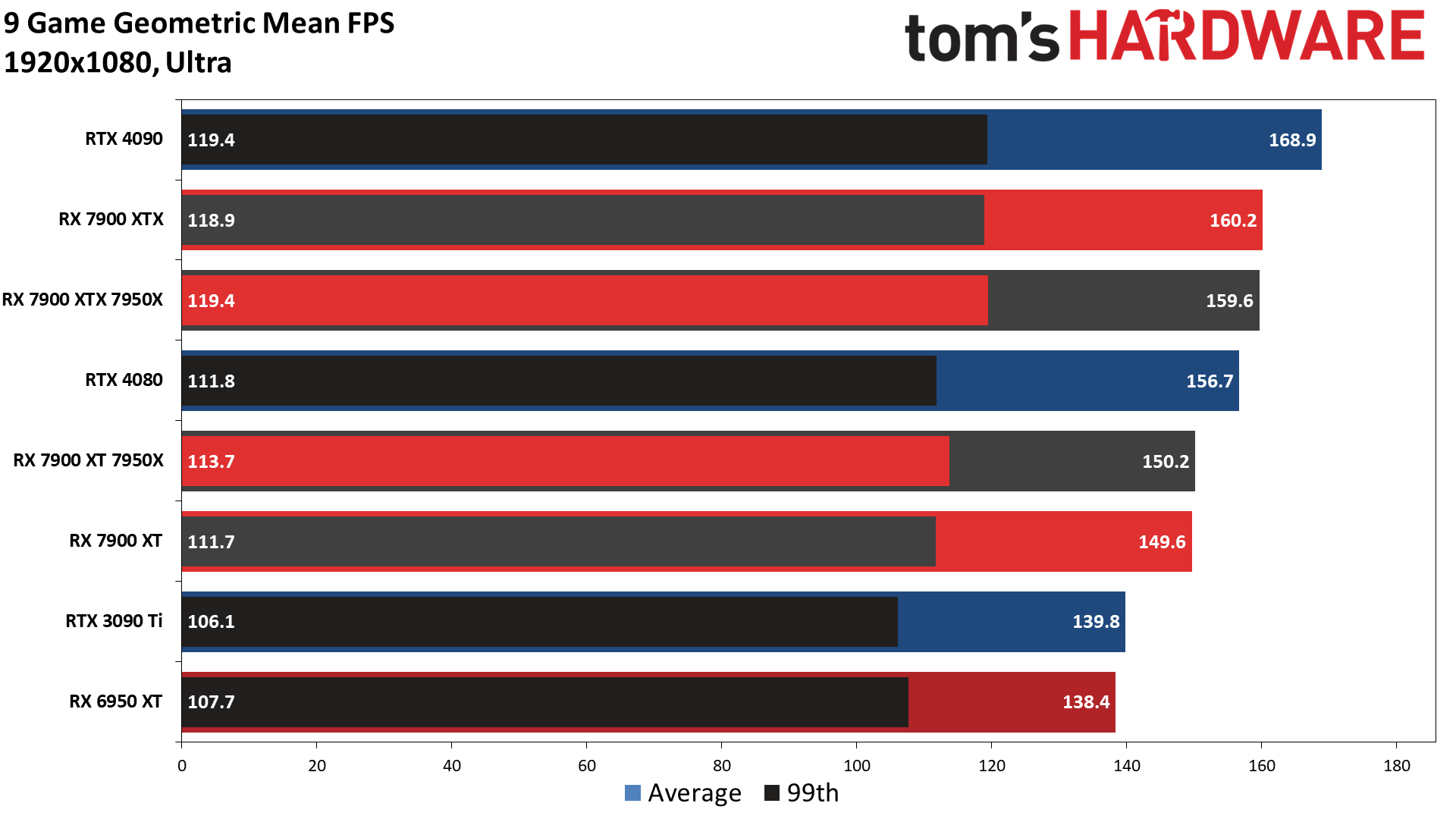

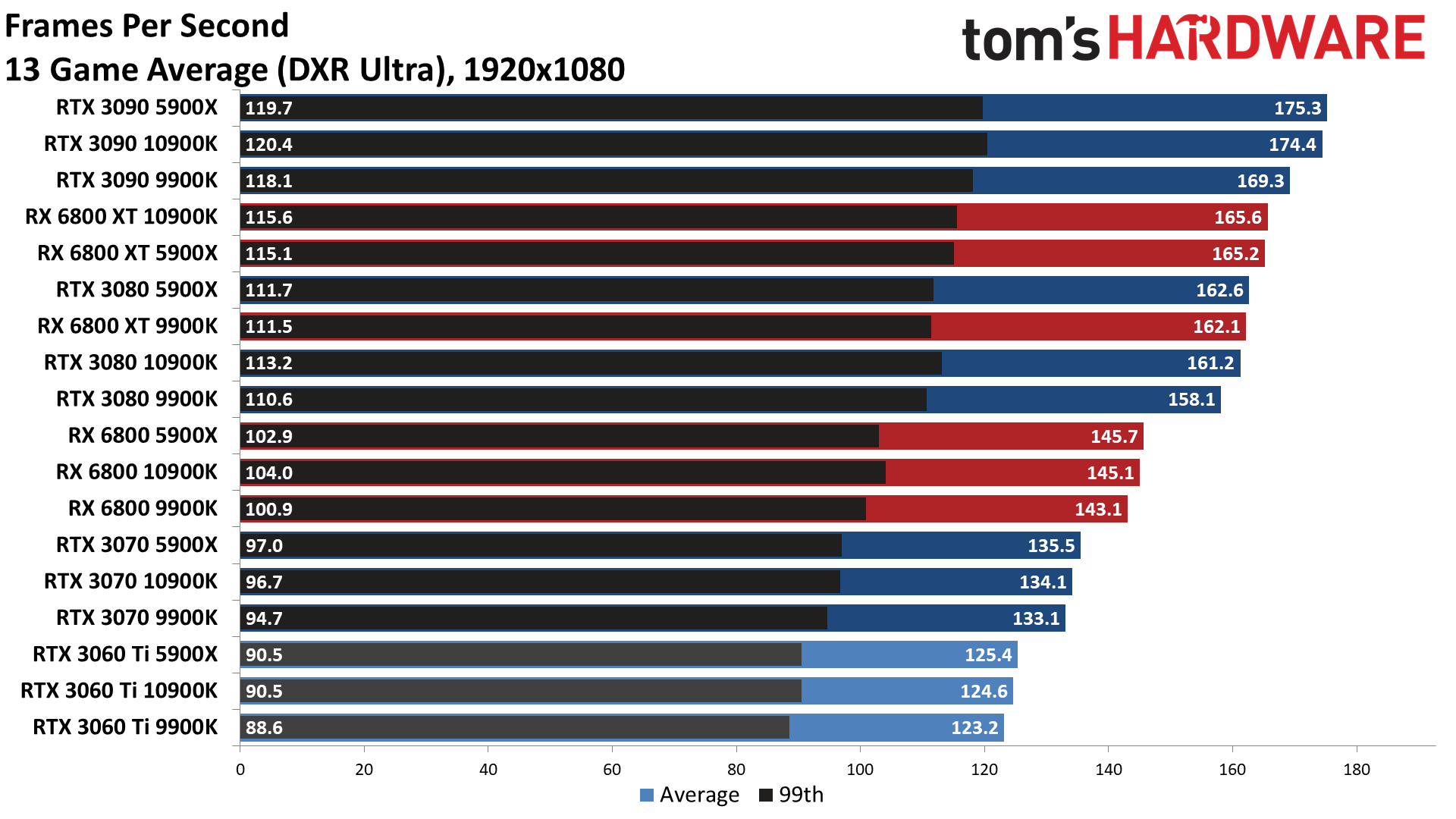

I also tested with XMP and DDR4-3600 memory, and when I checked Ryzen 9 5900X against Core i9-9900K and Core i9-10900K, the difference was minimal. As in, at 1080p Ultra (sometimes with DXR), here's what I measured back in January 2021:

The 5900X was 0.5% faster than the 10900K and 3.5% faster than the 9900K at 1080p with a 3090. With a 6800 XT, it was tied with the 10900K (fractionally slower, by 0.2%) and 1.9% faster than the 9900K. With a 3080, it was 0.9% faster than the 10900K and 2.8% faster than the 9900K. With an RX 6800, it was tied with the 10900K (0.4% faster) and 1.8% faster than the 9900K. The margins shrink to basically nothing once we drop below the RX 6800 level. Is that sufficient data?

When I considered that I had benchmarked over 60 GPUs on the 9900K, and that if I swapped to a new test system, I would need to retest everything, I decided to stick with the 9900K for another year. Then I skipped the 11900K as well. Only with a major change (12900K) did I decide to upgrade and retest, which I did at the start of 2022. It took months to get everything retested!

Then I swapped again because of the RTX 4090 ("too fast" for the 12900K). I'm still finishing the last few tests/retests with that one for the GPU hierarchy, several months later. (I tossed two months of testing when I realized I had VBS enabled.)

If you want to be an AMD or Intel or Nvidia fanboy, fine. But don't expect others to buy into your world view. They're all fine for various purposes. And I will still go on record with saying I've had far more issues and oddities using Ryzen PCs than with Intel PCs.

The first and second generation Ryzen motherboards and CPUs were, IMO, decidedly not awesome. I had two 2700X CPUs die, along with a Ryzen 7 1700. I also had about 10 X370/B350 motherboards die (seriously, by the time I was done with CPU testing a few years later, I only had two fully functional X370 boards left!), and three X470 boards died.

Do you know how many Intel CPUs and motherboards have died in that same timeframe? Not. One. Every CPU and mobo still worked when I switched from PC Gamer to Tom's Hardware. That's anecdotal evidence, but that experience has always left me a bit gun-shy of switching to an AMD system as my primary testbed.