jeremyj_83


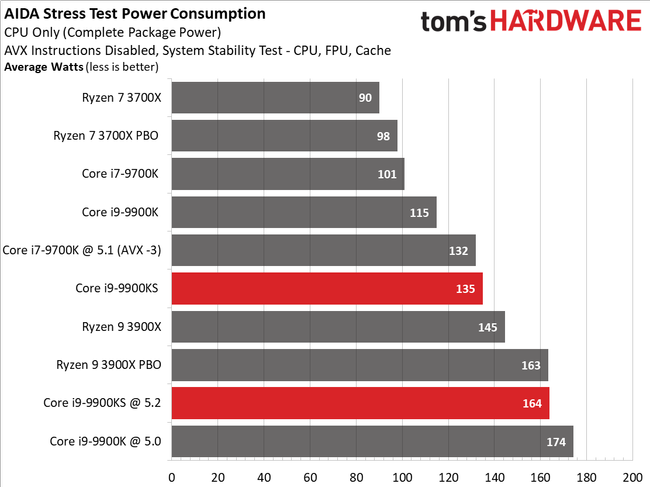

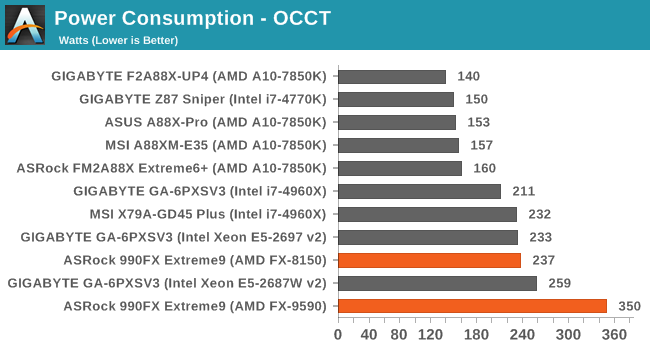

As I have said in a different thread, ExtremeTech is the only publication I have read that shows this. Tom's Hardware, AnandTech, KitGuru, etc. all show that the 9900K draws MORE power, even during extended tests. A lot of publications don't use Prime95 for power tests anymore because it acts like a power virus anyway. Therefore, a good conclusion from the ExtremeTech numbers would be that the 9900K is actually thermal throttling because it is drawing so much power.

It's nice that you only focused on the first part, but I had the second part there because it has meaning.

Also, the comparison I'm making is between the 9900K and the 3700X, both of which are 8-core/16-thread parts.

At default settings, with all 16 threads loaded, the 9900K only boosts to about 200 W for a short period and then drops to about 150 W for the rest of the run. That is a very different thing from a constant stress test.

But OK, sure: go ahead and overclock Zen 2 to 4.7 GHz all-core, run Prime95 on it, and prove to me that it will draw less power...

Directly from Tom's Hardware's review of the 3700X: "We're moving away from using AVX-based stress tests for our CPU power testing, though we will continue to use them for their intended purpose of validating overclocks. AVX-based stress testing utilities essentially act as a power virus that fully saturates the processor in a way that it will rarely, if ever, be used by a real application. Those utilities are useful for testing power delivery subsystems on motherboards, or to generate intense thermal loads for case testing, but they don't provide a performance measurement that can be used to quantify efficiency."

https://www.anandtech.com/show/14605/the-and-ryzen-3700x-3900x-review-raising-the-bar/19

AMD Ryzen 9 3900X and Ryzen 7 3700X Review: Zen 2 and 7nm Unleashed

AMD's Ryzen 3000 series promises more performance and value via the benefits of the 7nm process and Zen 2 microarchitecture.

AMD Ryzen 9 3900X & Ryzen 7 3700X ‘Zen 2’ CPU Review - KitGuru

www.kitguru.net

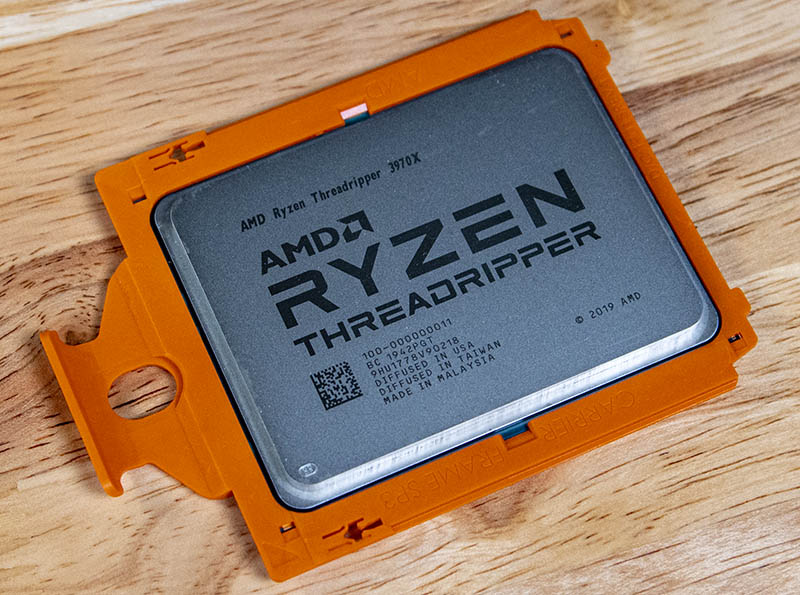

Heck, even the 3970X uses less power under load than the i9-10980XE, even though it has a much higher TDP and 14 more cores.

AMD Ryzen Threadripper 3970X Review 32 Cores of Madness

The AMD Ryzen Threadripper 3970X is a 32-core workstation CPU that offers an enormous amount of performance and PCIe bandwidth at a reasonable price