This is such a limited view of things. The RX 6950 XT is not "much faster" at raster. It's faster, yes. In some cases it's barely faster, in others it's 20% faster (one GPU tier), and in a select few (generally AMD-promoted) games, it might even be 35% faster.

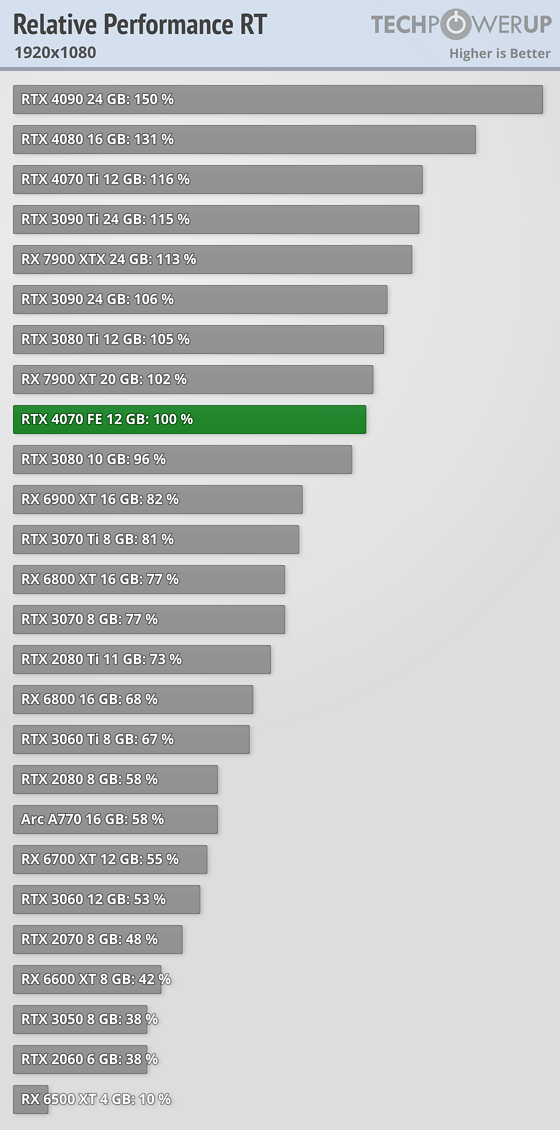

But it's slower in RT, yes. Provably so in virtually every case (except games that do very little with RT, like Dirt 5, Far Cry 6, Resident Evil Village...).

It's also slower in games that support DLSS and not FSR2. And it's potentially much slower in games that support DLSS 3 (though again, that's not quite as big a performance jump as Nvidia promotes).

And if you do anything with AI, you'd have to be heavily sponsored by AMD to think the 6950 XT is the best option. Getting most AI projects to run on AMD hardware right now is a pain. It's why so many of the projects don't even bother.

But let's go back to the RT and DLSS topic. Because Nvidia has tied RT to DLSS so heavily, most games that use significant RT effects also support DLSS. So if we're being real, it's not just potentially a bit slower, it's the difference between fully playable 1440p with DLSS Quality versus not playable unless you turn off RT (on the 6950 XT).

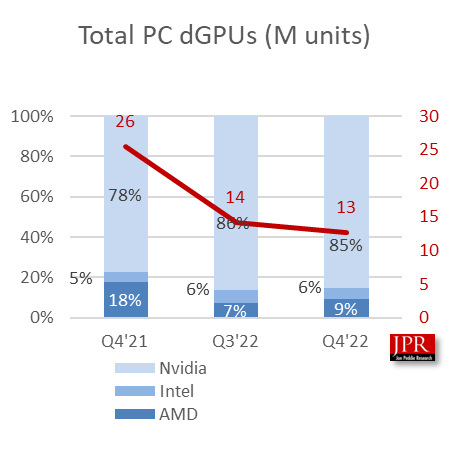

That right there is one of the biggest things in favor of Nvidia RTX 40-series right now, and even 30-series to a lesser degree. For better or worse, Nvidia has the market share to make things happen. It's pushing ray tracing and DLSS, because it knows it can win every time those are factored in. As a gamer, if I'm just playing games and not testing, I will generally turn on DLSS upscaling and Frame Generation if they're available. Even on an RTX 4090! Because again, being real, it makes games feel better (smoother) and that easily outweighs the potential loss in image fidelity or slight increase in latency (with Frame Generation).

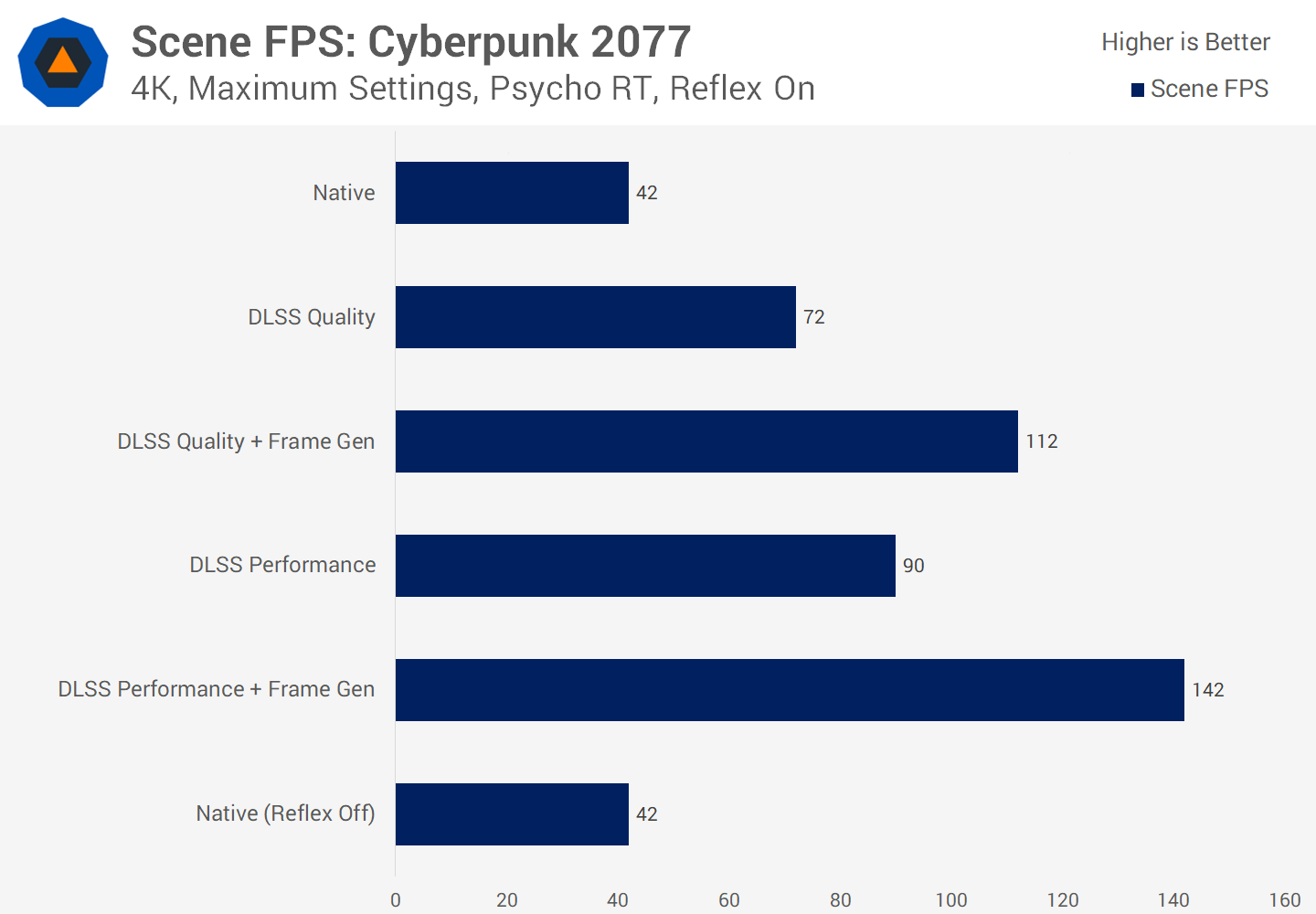

Also, it means you can do stuff like this:

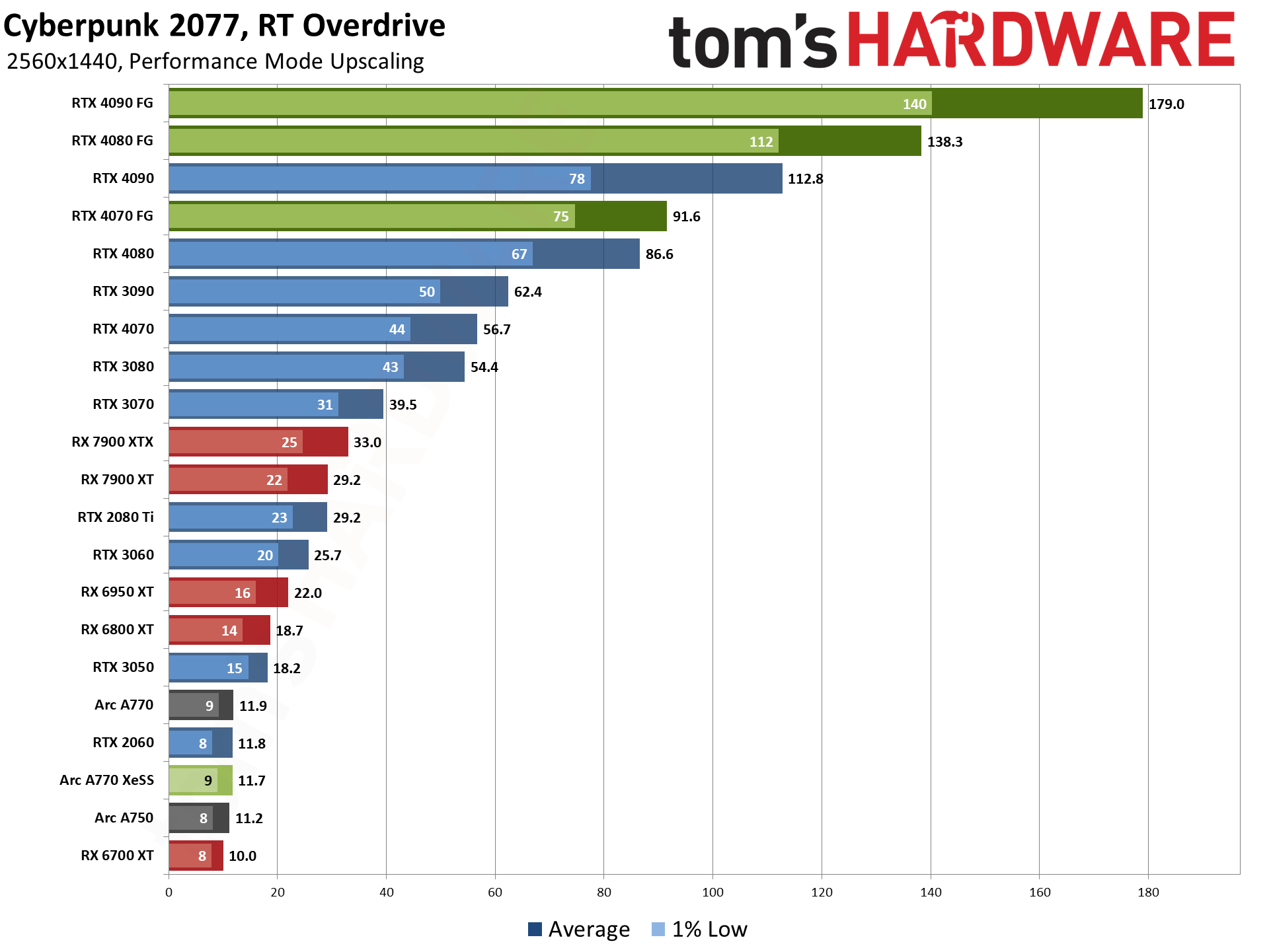

That's fully path traced, and while it doesn't make CP77 a better game, it does make it look better. 1440p with Quality or Balanced upscaling plus Frame Generation is very playable on an RTX 4070. The fastest AMD cards barely break 30 fps (with FSR2 Performance mode). That's the worst-case scenario for AMD, and while it's mostly limited to one game (unless you want to count Minecraft, Quake 2, or Portal RTX), I think we're going to see more experimentation with full ray tracing (aka "path tracing") in the coming years.

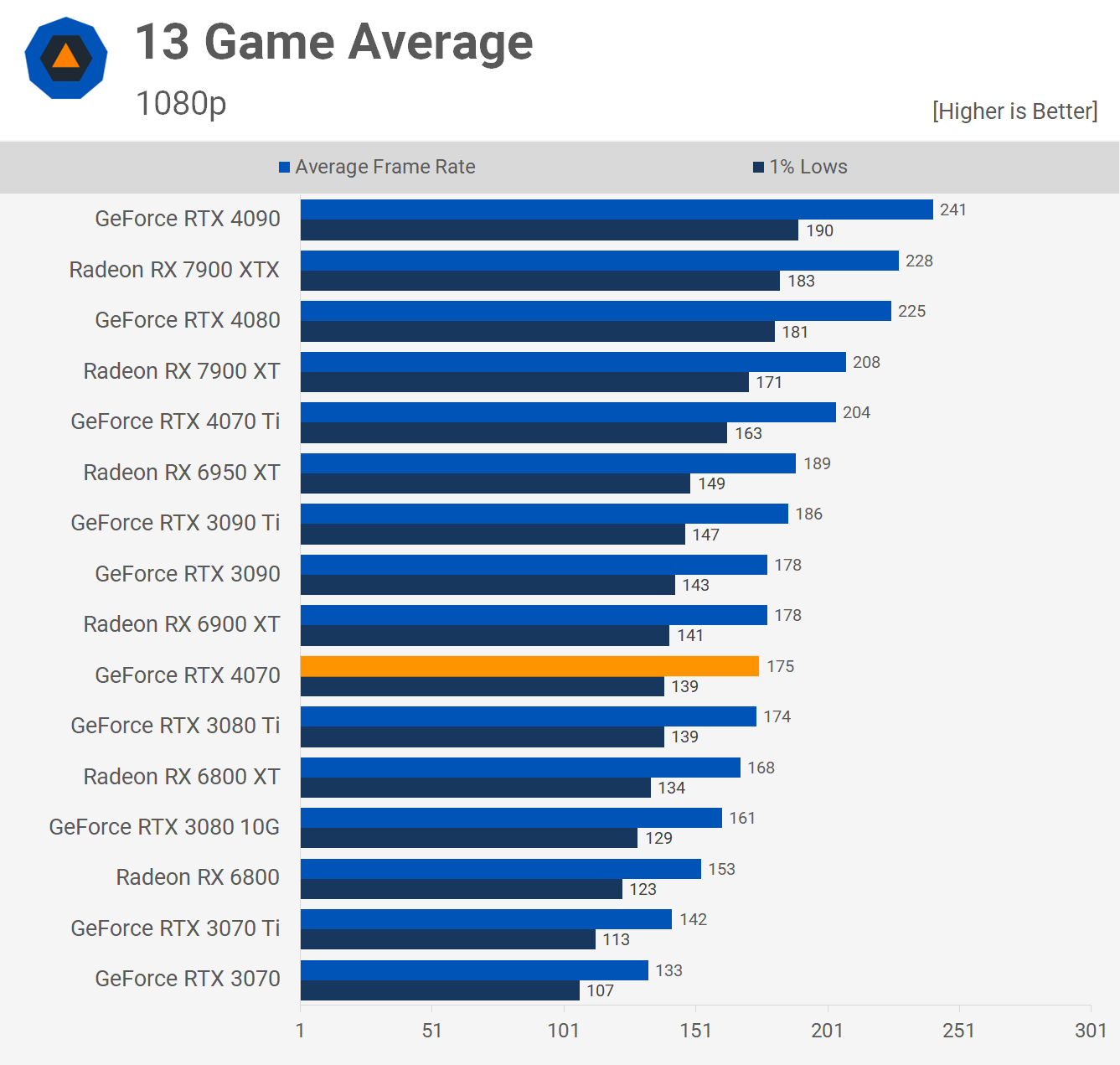

Would I sacrifice a bit of rasterization performance, dropping from ~113 fps on average to ~98 fps on average at 1440p, in order to potentially get to play around with future path tracing games? Yes, I would. And I think a lot of other gamers would as well. And the longer AMD downplays ray tracing and calls it unnecessary, the more it's going to fall behind until we reach the point where, for a lot of games, it actually will be necessary.
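To put that tradeoff in perspective, here's a quick back-of-the-envelope sketch using only the two rough 1440p averages quoted above (~113 fps and ~98 fps). The function names are just made up for illustration, and since the inputs are approximate, treat the output as ballpark figures rather than benchmark results.

```python
def percent_drop(before_fps, after_fps):
    """Relative loss in frame rate, as a percentage of the faster result."""
    return (before_fps - after_fps) / before_fps * 100.0


def frame_time_ms(fps):
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


# Approximate 1440p rasterization averages quoted in the post.
raster_fast = 113.0
raster_slow = 98.0

drop = percent_drop(raster_fast, raster_slow)
extra_ms = frame_time_ms(raster_slow) - frame_time_ms(raster_fast)

print(f"{drop:.1f}% fewer frames per second")
print(f"{extra_ms:.2f} ms more per frame")
```

That works out to roughly a 13% drop, or a bit over one extra millisecond per frame, which is less than the "one GPU tier" gap of about 20% mentioned earlier.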

People should stop making excuses for AMD's lack of RT performance and ask it to do better. Because more than any other graphics technology, ray tracing has the potential to truly change the way games look. We're not there yet (well, we sort of are in Cyberpunk 2077 Overdrive mode), but we're moving in that direction. If you want to hate Nvidia for pushing us there, feel free, but there's a reason CGI gets used so heavily in movies. Games are years away from Hollywood-quality CGI, but they continue to close the gap. I'm looking forward to seeing more truly next-gen graphics implementations, rather than minor improvements in rasterization techniques.