Please keep it to 1 thread.

UserBenchmark link: https://www.userbenchmark.com/UserRun/50058724

System specs:

Operating System

Windows 10 Home 64-bit

CPU

Ryzen 9 5900X

RAM

32.0GB Dual-Channel Unknown @ 1499MHz (22-21-21-50)

Motherboard

ASUSTeK COMPUTER INC. TUF GAMING X570-PRO (WI-FI) (AM4) 38 °C

Graphics

LC27G5xT (2560x1440@144Hz)

4095MB NVIDIA GeForce RTX 3080 Ti (EVGA) 58 °C

Storage

931GB Seagate ST1000DM003-1SB102 (SATA ) 34 °C

465GB Western Digital WDS500G1X0E-00AFY0 (Unknown (SSD))

I got an EVGA 3080 Ti and some new RAM yesterday. I've had my NVMe drive and CPU for a while, and I didn't have any problems playing games on my 2060 Super, but now my FPS is dropping way lower than it did with the 2060 Super. I don't know what's causing everything to underperform, but I need help bad!

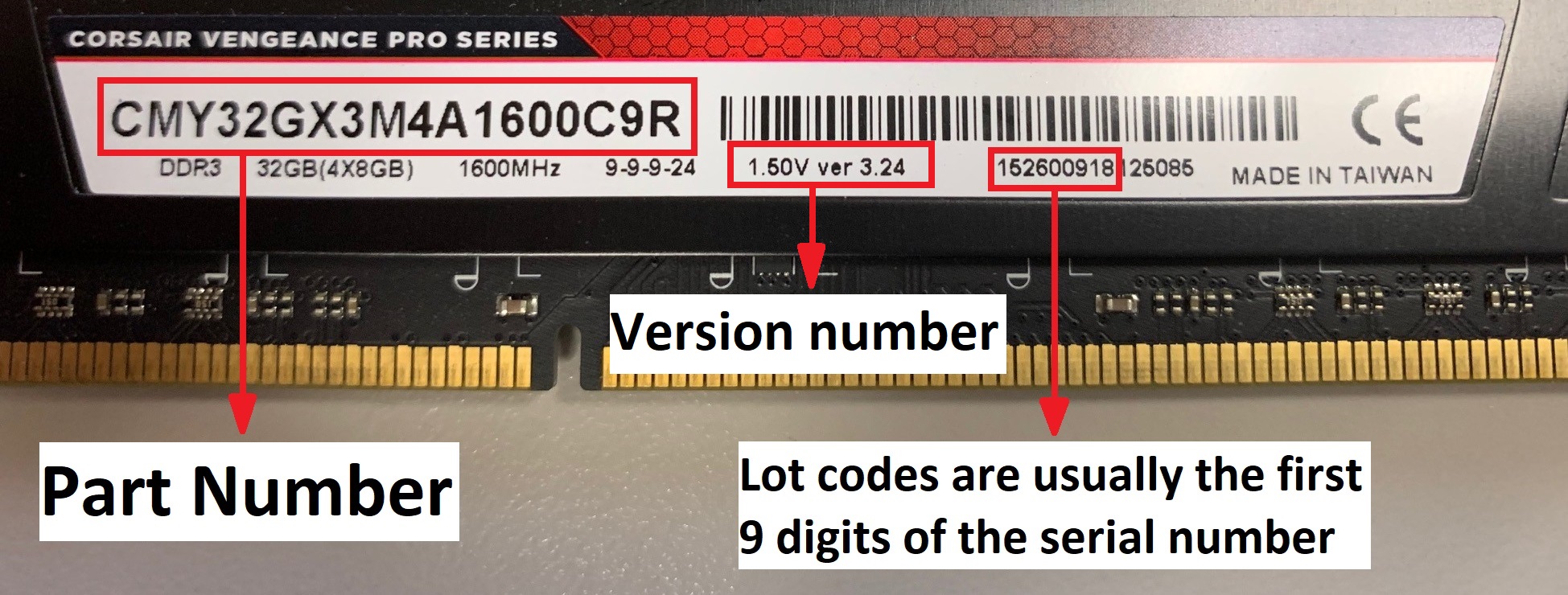

Also, I'm confused about why Speccy reads my RAM speed as 1499MHz while Task Manager says 3000MHz. Could that be a problem as well? Either way, I just need someone to help me make sense of all this, please.
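For reference, the two RAM readings are likely consistent rather than a fault. This is a hedged sketch of the usual arithmetic, assuming Speccy reports the actual memory clock and Task Manager reports the effective transfer rate of DDR (double data rate) memory, which moves data twice per clock cycle:

$$
f_{\text{effective}} = 2 \times f_{\text{clock}} = 2 \times 1499\ \text{MHz} = 2998\ \text{MT/s} \approx 3000\ \text{MT/s}
$$

If that assumption holds, both tools describe the same roughly 3000 MT/s setting, so the differing numbers are not by themselves a problem.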