A) Don't look at just the cpu alone. Actually, I think it's the opposite. The 9900K is $50 more, but you get 8 cores/16 threads vs. the 9700K's 8c/8t. If I personally used applications requiring more than six cores/threads, the 9900K would probably be the better choice for only $50.

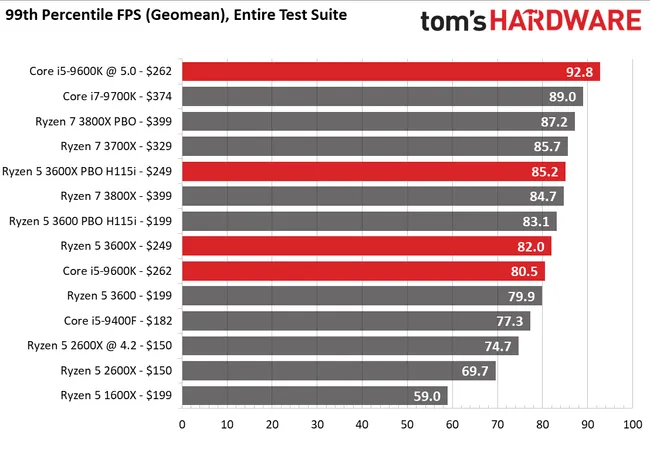

However, using my 9600K for internet, iHeartRadio, internet TV, gaming, and MS Office applications, the 5 GHz all-core OC 9600K performs all of those at the top of the charts.

From what TH has indicated, pairing a 5 GHz all-core OC 9600K with a 3080 would be pretty much golden for gaming.

I can see many people, who aren't enthusiasts, looking to pair a 9900K with their cheap H and B boards - even some of the cheapest Z boards are bad with this cpu - along with a Hyper 212-level air cooler, or some 120mm hybrid...

It's just gonna be a bad time for those not informed. The 9700K has far more flexibility with low-to-mid-range mobos and cheap coolers.

[Maybe enthusiast wasn't the right word, but I was referring to those who are well-informed on PC hardware - who already know the ups and downs of the 9900K. The Average Joe won't know jack about this cpu, beyond it being the best of its class at the time.]

B) The article was about 9900K and 9700K price drops.

I'm not EVEN getting involved in the above 9600K debacle. Nope 🤐