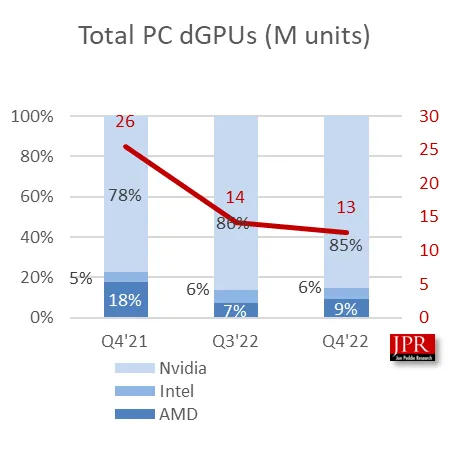

Increased revenue from Intel's AXG unit led to incorrect estimates of GPU unit shipments.

Intel Claimed 6 Percent of Discrete GPU Market in Q4: Read more


I mean, that's still a heck of a turnout for a first run at it. It can only get better from here.

> The fact Intel grabbed 6% of the consumer dGPU market is still insane.

As noted in our previous report, JPR data is based on dGPU sell-in to the channel and says nothing about dGPU sell-out to consumers and businesses that are actually using the dGPUs. I would still wager (heavily) that there are a lot of Arc GPUs sitting in large containers in warehouses rather than in PCs and laptops. Those will inevitably get moved out to consumers over the coming year, and I suspect Intel isn't ordering more Arc wafers from TSMC at this stage. Intel grabbed 6% of the sell-in market last quarter. That is not the same as 6% of the consumer GPU market.

> it does show again that while AMD positions itself as essentially a "value alternative" to Nvidia,

Is that AMD's positioning, or just how we're used to thinking of them?

Nvidia is definitely seen as the premium brand. So, it makes sense to me that when availability returned and prices dropped, people who might've "settled" for an AMD GPU were instead opting for Nvidia, even at a price premium.

> It could be exclusive features or it could be software stability (for me it's the latter),

People expecting to hold onto their GPU for a while might also really be starting to weight ray tracing performance more heavily.

> You all do know that this is not the number sold to consumers, right?

Yeah, that's basically what Jarred said in post #6.

> It could be exclusive features or it could be software stability (for me it's the latter); AMD needs to finally recognize that performance isn't what's holding them back.
>
> I use Adobe Rush for small video projects. I use DaVinci for larger projects.
>
> Both support Nvidia CUDA perfectly. On AMD GPUs they are a stuttering nightmare.
>
> I cannot even trust AMD to give me a bug-free render, let alone do it fast. With an AMD GPU I would have to rewatch every single video to make sure the render is clean. With Nvidia's CUDA I know it's clean.

Seems like a chicken-and-egg problem. As long as AMD has such issues, few people will use it with this software, and that means fewer bug reports and less incentive for the software vendor to do their part to make sure the AMD backend works well.

> reputable Jon Peddie Research,

Aha, calling Jon Peddie Research reputable is like calling a

> Whenever a company such as Intel manipulates its own stock price by exaggerating fake sales (and then employees sell at the rise), they should be forced to pay tax, shipping, etc. on every single sale they CLAIM they made on items that don't exist.
>
> Suddenly, manipulation by doubling/tripling claimed sales wouldn't be in Intel's best interests.
>
> Followed by a full audit of every single company employee that bought OR sold stock, since that's market manipulation AND insider trading.

They did state all the sales they made, not more and not less.

> Steam, on top of all the recurring weirdness (honestly, I've seen instances where no-longer-produced CPUs suddenly appeared with 2%+ of the install base), "multiple dips" users of internet cafes, so the same system is counted several times.

Steam HW Survey has had serious miscounting issues in the past, but AFAIK those are corrected now. Internet cafes were a big one, but that was fixed in May 2018 (https://steamcommunity.com/discussions/forum/0/1696046342855998716/). I follow the Steam data basically every month, and for GPUs I look at the API pages so that I can see more details on the hardware: the main video cards page cuts off anything below ~0.15%, while the API page goes down to ~0.01%. There have not been any massive, unexplainable fluctuations in a long time. Again, internet cafes were a big issue for a while, because they were all being counted multiple times (every different user that got surveyed), but now Steam has a way to detect that it's a shared PC and it only gets counted once.
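To make the double-counting problem concrete, here's a minimal Python sketch with made-up survey records. The `machine_id` field, the "GPU A"/"GPU B" labels, and the dedup-by-machine rule are all assumptions for illustration; it doesn't claim to reflect how Steam actually detects a shared PC.

```python
from collections import Counter

# Hypothetical survey responses: (machine_id, user_id, gpu).
# One café PC surveyed three times (once per user), one home PC surveyed once.
responses = [
    ("cafe-pc-1", "user-1", "GPU A"),
    ("cafe-pc-1", "user-2", "GPU A"),
    ("cafe-pc-1", "user-3", "GPU A"),
    ("home-pc-7", "user-4", "GPU B"),
]


def shares(counts):
    """Convert raw counts into fractional shares."""
    total = sum(counts.values())
    return {gpu: n / total for gpu, n in counts.items()}


def share_per_response(rows):
    """Every surveyed user counts, so a shared PC is counted once per user."""
    return shares(Counter(gpu for _, _, gpu in rows))


def share_per_machine(rows):
    """Each machine counts once, however many users were surveyed on it."""
    gpu_by_machine = {}
    for machine_id, _, gpu in rows:
        gpu_by_machine.setdefault(machine_id, gpu)
    return shares(Counter(gpu_by_machine.values()))


print(share_per_response(responses))  # {'GPU A': 0.75, 'GPU B': 0.25}
print(share_per_machine(responses))   # {'GPU A': 0.5, 'GPU B': 0.5}
```

With these invented rows, GPU A's share drops from 75% to 50% once the café PC is counted a single time, which is the kind of skew that per-user counting of shared machines produces.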

> Again, internet cafes were a big issue for a while, because they were all being counted multiple times (every different user that got surveyed), but now Steam has a way to detect that it's a shared PC and it only gets counted once.

What's funny about that is I'm not even sure it's the most relevant metric. What seems more important isn't the number of actual hardware units that exist in the wild, but how many hours of gameplay they end up seeing. Or, from a publisher's perspective, perhaps how many dollars are spent by the people using them.

> Steam doesn't even report what CPUs people are using. You've got this page that shows manufacturers sorted by clocks, and this page sorted by number of cores. There's no way to see, for example, how many people are using a Core i7-4770K or a Core i9-13900K, or a Ryzen 9 5900X versus a Threadripper 1920X.

Heh, Dhrystone MIPS or bust.

> A modern-day company using last-century-style manufacturing methods to stuff the channel with 1.1 million units without demand for them didn't add up with how the manufacturing world works today.

Except that how crazy supply chains have gotten, plus the whole "holiday rush" thing, might indeed mean that retailers, wholesalers, and PC builders were over-ordering in the fourth quarter of last year.

> At any rate, 1.1 million sounded off and it turned out it was.

So 1.1 million is unacceptably high and sounds "off," but 780K ("0.8 million" if we're rounding) isn't a problem? I mean, sure, it's enough for a 3% overall difference in channel market share, but I'm still wondering if Intel is actually anywhere near 3% total, never mind the JPR 6% figure.

Granted, a lot of Arc GPUs could be going into laptops in other markets around the world, but at least in the US, the number of people using Arc GPUs seems to be extremely small. I'd really love for Steam Hardware Survey to break Arc GPUs out from the "other" category to see if any of them are above 0.00%, but of course there's still the issue with knowing WTF Steam HW survey is sampling.