> Lifted power limits do not increase clocks, you can do actual overclocking with 230W.

Intel doesn't push its boost power curve to 230W for fun; it needs most of that power to actually do the boosting in the first place. With most modern CPUs, there isn't much overclocking headroom beyond the default boost behavior, and overriding the boost algorithm sometimes causes worse performance due to forfeiting the peak boost clocks.

News Intel Core i9-12900K and Core i5-12600K Review: Retaking the Gaming Crown


helper800

Judicious

> Because AMD was sitting on their thumbs for 7 years giving us 0% increments of performance "gen" to "gen" coming out with new generations of the exact same CPU clocked differently.
>
> I rather be milked and get 5% than to be milked and get 0%.

If you cannot tell the difference between an industry leader sitting on their hands for 9 years and AMD, who was literally going bankrupt, I do not know what to say.

TerryLaze

Titan

> If you cannot tell the difference between an industry leader sitting on their hands for 9 years and AMD who was literally going bankrupt I do not know what to say.

I can tell the difference. I can also tell that if Intel had done anything more than they did, AMD wouldn't just be literally going bankrupt, they would have literally gone bankrupt.

You can't play the poor card to protect AMD and also use it to attack intel.

If intel released their X hexa cores to desktop at $4-500 10 years ago then AMD wouldn't be here

TerryLaze

Titan

> Intel doesn't push its boost power curve to 230W for fun, it needs most of that power to actually do the boosting in the first place.

They don't though; PBP is 125W average and it's within 3% of using 230-240W constantly.

Link: Intel Core i9-12900K, i7-12700K & i5-12600K im Test: Leistung und Effizienz P- vs. E-Core (German; roughly "Intel Alder Lake tested: performance and efficiency, P- vs. E-core / How efficient is the hybrid architecture?")

> ...Nope. Intel's official stance is that overclocking and even just enabling XMP...

I am not talking about XMP. I am talking about the Performance Maximizer tool.

Link: Overclock Your CPU with Unlocked Intel® Processors

> ...I doubt there is much "overclocking" headroom left on a CPU...

KitGuru shows good overclocking headroom for the 12600K.

Link: Intel Core i5-12600K - Leo and the Superhero CPU! - YouTube

> I am not talking about XMP. I am talking about the Performance maximizer tool.
>
> Link: Overclock Your CPU with Unlocked Intel® Processors

https://www.intel.ca/content/www/ca...cessors/processor-utilities-and-programs.html

> Is the boxed processor warranty void if using Intel® Performance Maximizer?
>
> Yes. The standard warranty does not apply if the processor is operated beyond its specifications.

Exceed the specs for any reason in any way, even using Intel's own tools, and your warranty is at least technically void.


> It will "beat" 5800x by 4% average in 1080p with a 3090, lmao. How about 1440p, 4k or a lesser GPU?

If 4K gaming is all you care about then why not go with an even cheaper option? Also it shouldn't get beaten by a cheaper CPU in the first place. Either way 5800X is worse perf per dollar.

> Also why don't you pair your new Alder Lake with DDR4 so you can gimp yourself in the future and need to upgrade again for when DDR5 actually makes sense, right? Because now it will actually be much more expensive for little gain to do so... pfft.

5800X is also gimped to DDR4. At least 12600KF provides a DDR5 option if you need it. But 5800X is more expensive, gets beaten in both single and multithreaded workloads, doesn't support PCIe 5.0, doesn't support DDR5, doesn't have integrated Thunderbolt 4 support, doesn't have integrated WiFi 6E, doesn't have an upgrade path to any 16+ core CPU in future, and so on. Any reasonable person would find 5800X way overpriced for what it is.

> There are so many holes in these Alder Lake CPUs, that the Swiss cheese will be jealous on them...

WTF are you talking about? Are you OK?

> This barely a win Alder Lake has over Zen3 and is gonna be a laugh when Zen3D comes. Enjoy it while it lasts, it won't be for long.

Don't assume everyone is a fanboy like you are. If Zen 3D provides better perf/dollar and better features I will surely praise it. As far as I know the features will be similar to Zen 3 and so inferior to Alder Lake. What remains to be seen is the perf per dollar. If that is significantly better than Alder Lake then I will forgive the feature inferiority and gladly recommend it to everyone.

helper800

Judicious

> I can tell the difference, I can also tell that if intel would have done anything more then they did AMD wouldn't just be literally going bankrupt, they would have literally gone bankrupt.
>
> You can't play the poor card to protect AMD and also use it to attack intel.
>
> If intel released their X hexa cores to desktop at $4-500 10 years ago then AMD wouldn't be here ~~right now~~ for years now.

They did release a hexa-core CPU that was technically in the 500s. Intel stagnated because they were greedy and nothing more.

pdegan2814

Distinguished

The big winner in my opinion is the i5-12600K. If it's actually for sale at MSRP (something that's definitely not a given these days), it'll be an amazing value. AMD's power consumption numbers in those charts are still seriously impressive, though. Intel may have made great strides in that area, but AMD's still rockin'. And honestly, power consumption & heat are significant factors for me. But kudos to Intel for making this a genuine competition again. Can't wait to see what AMD comes up with to answer back.

RodroX

Dignified

> ......
>
> 5800X is also gimped to DDR4. At least 12600KF provides DDR5 option if you need it. But 5800X is more expensive, gets beaten in both single and multithreaded workloads, doesn't support PCIE5.0, doesn't support DDR5, doesn't have integrated thunderbolt 4 support, doesn't have integrated wifi 6E, doesn't have upgrade path to any 16+ core CPU in future, and so on. Any reasonable person would find 5800X way overpriced for what it is.
>
> ......

If you are gaming at 1080p, there's not much difference between any of these CPUs (12900K, 12600K, 5900X, 5800X, 5600X), not even if you are using an RTX 3090 or RX 6900 XT. Don't get me wrong, there's a difference, yes, but who cares once you are over 200 FPS? And that's if someone is really spending this amount of money to get such a high-end system to play something like Hitman 3 at 1080p (which is really the most favorable title I've seen so far).

Now if you play with a more modest card, let's say an RTX 3060 Ti, 3070 or 2080/Ti or RX 5700 XT or 6700, and/or at a higher resolution like 1440p, the difference gets even smaller.

So the only reasons (which are very valid reasons) to go with a 12600K/12700K right now are that you are building a new system, or you are upgrading from something way older/slower than the i7 8700K or the R5 3600 and you feel that old CPU is the limiting factor, and you don't mind using Windows 11 in its current "betaish" state.

As for the DDR5 option, yes, it is there, but it is very expensive for the performance it gives compared to a good DDR4 kit. It will get better of course, and the price will slowly go down, especially when AMD launches AM5 with DDR5 support too.

PCIe 5 is sorta useless altogether (for the time being and the not so near future too); we are still far away from saturating PCIe 4.

Thunderbolt: only a very limited number of people using Windows PCs really need Thunderbolt, and in fact I believe there are a very limited number of AM4 motherboards with it (there's at least one Asus).

WiFi 6E: I really don't see this as a deal breaker. It's nice to have but it's really not the end of the world. I'd rather use a nice Ethernet cable, without a doubt.

Upgrade path after the 5800X: you actually have 2 CPUs to upgrade to, the 5900X and 5950X. It may or may not be worth it depending on your use case, but they are there. And the 5950X is a 16-core / 32-thread CPU. And probably more if AMD launches the 3D V-Cache.

In any case, if I was able to build a new system (either for gaming or working) I would avoid the 12600K and get the 12700K instead. Or just wait and get a Core i5 12400 for even less money than all the CPUs named so far.

> PCIe 5 is sorta useless altogether (for the time being and the not so near future too), we are still far away from saturating PCIe 4.

If you wait until saturating the bus becomes an issue before moving forward to the next standard, you are usually screwed because by the time the old standard becomes quantifiably painful, you are already peaking close to the next generation up's peak.

As I have written multiple times before, 4GB GPUs would likely still be viable with 4.0x16 based on how much better the 4GB RX5500 performs on 4.0x8 vs 3.0x8. Unfortunately, there are no 4GB 4.0x16 GPUs to test that with.
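To put rough numbers on the 3.0x8 / 4.0x8 / 4.0x16 comparison, here is a minimal Python sketch of theoretical one-direction link bandwidth. It uses the published per-lane transfer rates (8, 16 and 32 GT/s for PCIe 3.0, 4.0 and 5.0) and 128b/130b encoding; the names `PCIE_GENERATIONS` and `link_bandwidth_gb_s` are made up for this example, and real-world throughput will be lower because of protocol overhead.

```python
# Per-lane transfer rate in GT/s and line-encoding efficiency per generation.
# PCIe 3.0, 4.0 and 5.0 all use 128b/130b encoding.
PCIE_GENERATIONS = {
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
}


def link_bandwidth_gb_s(generation, lanes):
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # GT/s * efficiency = usable Gbit/s per lane; divide by 8 for GB/s.
    return rate_gt_s * efficiency / 8 * lanes


if __name__ == "__main__":
    for generation, lanes in [("3.0", 8), ("4.0", 8), ("4.0", 16), ("5.0", 16)]:
        bandwidth = link_bandwidth_gb_s(generation, lanes)
        print(f"PCIe {generation} x{lanes}: {bandwidth:.2f} GB/s")
```

Each generation doubles the per-lane rate, so a 4.0x8 link has the same theoretical bandwidth as 3.0x16, and 4.0x16 would double that again.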

RodroX

Dignified

> If you wait until saturating the bus becomes an issue before moving forward to the next standard, you are usually screwed because by the time the old standard becomes quantifiably painful, you are already peaking close to the next generation up's peak.
>
> As I have written multiple times before, 4GB GPUs would likely still be viable with 4.0x16 based on how much better the 4GB RX5500 performs on 4.0x8 vs 3.0x8. Unfortunately, there are no 4GB 4.0x16 GPUs to test that with.

Of course, and I agree with you. But we just got PCIe 4.0 back in 2019 (well, actually some people got it; most people around the world, myself included, are still rocking a PCIe 3.0 motherboard without many drawbacks, none in my case). On the other hand, we had PCIe 3.0 and nothing else for how many years? (Sorry, I forgot when the first consumer mobos showed up with this, but I think it was around 2012, perhaps?)

As I wrote, it is useless for the time being. It's good to have it for "future proofing".

LeviTech

Commendable

> Fixed. Obviously that should have said "@ DDR5-4400..."

The i5 is wrong too.

> Of course, and I agree with you. But we just got PCIe 4.0 back in 2019 (well, actually some people got it; most people around the world, myself included, are still rocking a PCIe 3.0 motherboard without many drawbacks, none in my case). On the other hand, we had PCIe 3.0 and nothing else for how many years? (Sorry, I forgot when the first consumer mobos showed up with this, but I think it was around 2012, perhaps?)

Yes, Ivy Bridge was the first mainstream PCIe 3.0 CPU back in 2012.

As for 3.0x16 still being good enough today, the 4GB RX5500 closes 50-70% of the performance gap against its 8GB counterpart on 4.0x8 vs 3.0x8 in VRAM-bound scenarios, which leaves very little doubt in my mind that the gap could be much smaller still if it had full 4.0x16 for tapping into system memory to compensate for its VRAM deficit. This may not be something enthusiasts care about on their $1000+ GPUs with 8-24GB of VRAM, but it can make or break things for someone who refuses to pay $300+ for a GPU.

If AMD, Nvidia and Intel really want to launch "gaming-only" GPUs, the simplest way to guarantee they will only make their way into the hands of desperate gamers is to put only 4GB of VRAM on them (not enough to mine ETH) and use 4.0x16 to offset as much of the VRAM deficit as possible.

> If you are gaming at 1080p, there's not much difference between any of these CPUs (12900K, 12600K, 5900X, 5800X, 5600X), not even if you are using an RTX 3090 or RX 6900 XT. Don't get me wrong, there's a difference, yes, but who cares once you are over 200 FPS? And that's if someone is really spending this amount of money to get such a high-end system to play something like Hitman 3 at 1080p (which is really the most favorable title I've seen so far).
>
> Now if you play with a more modest card, let's say an RTX 3060 Ti, 3070 or 2080/Ti or RX 5700 XT or 6700, and/or at a higher resolution like 1440p, the difference gets even smaller.
>
> So the only reasons (which are very valid reasons) to go with a 12600K/12700K right now are that you are building a new system, or you are upgrading from something way older/slower than the i7 8700K or the R5 3600 and you feel that old CPU is the limiting factor, and you don't mind using Windows 11 in its current "betaish" state.

Most of your comment is not really against what I said. Who cares once you are over 200 FPS? Maybe someone with a high refresh rate monitor; others shouldn't care. I agree. Except that was not the point. The point is that being slower than an i5 doesn't justify the price premium just because it has a Ryzen "7" in its name. The price should come down for the 5800X to be competitive here, and I hope it will.

> As for the DDR5 option, yes, it is there, but it is very expensive for the performance it gives compared to a good DDR4 kit.

Again, that was not the point. If you read my previous comments and the comments I was replying to, some people are painting DDR5 as a drawback, as if it is a requirement. It is an option, not a drawback. Some people need the bandwidth; some people just want to future proof their system (for example, get an OKish DDR5 kit now and upgrade to fast DDR5 later when the price comes down, without swapping CPU and motherboard). They are happy to pay the premium. Others who don't see the value can easily opt for DDR4 instead. Again, it is an option, not a drawback.

> ...especially when AMD launches AM5 with DDR5 support too...

And DDR5 support hasn't been confirmed yet for AM5, or at least for Zen 4. I have seen leaks which suggest it may be Zen 5 when it will be supported. We'll see. But again, not the point. So I digress.

> PCIe 5 is sorta useless altogether (for the time being and the not so near future too); we are still far away from saturating PCIe 4.

Do not agree. It is about future proofing your system. You don't upgrade your system every year, I hope. Most people do it around every 5 years. What looks useless to you now will be essential down the road. Then you won't have to get a brand new system just for PCIe 5.0.

> Thunderbolt: only a very limited number of people using Windows PCs really need Thunderbolt, and in fact I believe there are a very limited number of AM4 motherboards with it (there's at least one Asus).

You may not use TB4 but plenty of people do. It is a deal breaker for many. AM4 motherboards rarely have it because it costs more to integrate it separately. Having it integrated in the CPU is a plus.

> WiFi 6E: I really don't see this as a deal breaker. It's nice to have but it's really not the end of the world. I'd rather use a nice Ethernet cable, without a doubt.

I am not saying WiFi 6E is a deal breaker. It is an option. Not everyone has an Ethernet connection right beside their PC desk. Some people don't like to run ugly wires through their room. Having the fastest WiFi integrated in the chip is awesome for some folks. Again, the point is that the CPU that lacks features shouldn't cost more just because it has a "7" in its name.

> Upgrade path after the 5800X: you actually have 2 CPUs to upgrade to, the 5900X and 5950X. It may or may not be worth it depending on your use case, but they are there. And the 5950X is a 16-core / 32-thread CPU. And probably more if AMD launches the 3D V-Cache.

In my comment I clearly said 16+ cores. Alder Lake has an upgrade path up to 24 cores, which is better than the upgrade path for the 5800X. All these issues wouldn't matter if the 5800X was cheaper than the 12600KF. But unfortunately it is not.

> In any case, if I was able to build a new system (either for gaming or working) I would avoid the 12600K and get the 12700K instead. Or just wait and get a Core i5 12400 for even less money than all the CPUs named so far.

It is a personal preference depending on what you need it for, how long you want to use it, and your budget. I personally would have gone for the 12700K as well.

brandonjclark

Distinguished

> Why? Everybody is on the power conscious trip right now, ...

LOL, trust me when I say that "everybody" is NOT.

> If 4k gaming is all you care about then why not go with an even cheaper option? Also it shouldn't get beaten by a cheaper CPU in the first place. Either way 5800X is worse perf per dollar.
>
> 5800X is also gimped to DDR4. At least 12600KF provides DDR5 option if you need it. But 5800X is more expensive, gets beaten in both single and multithreaded workloads, doesn't support PCIE5.0, doesn't support DDR5, doesn't have integrated thunderbolt 4 support, doesn't have integrated wifi 6E, doesn't have upgrade path to any 16+ core CPU in future, and so on. Any reasonable person would find 5800X way overpriced for what it is.
>
> WTF are you talking about? Are you OK?
>
> Don't assume everyone is a fanboy like you are. If Zen 3D provides better perf /dollar and better features I will surely praise it. As far as I know the features will be similar to Zen 3 and so inferior to Alder lake. What is remaining to see is the perf per dollar. If that is significantly better than Alder lake then I will forgive the feature inferiority and gladly recommend it to everyone.

1. The 5800X loses vs the 12600K by a 0-3% difference; are we really gonna do this? Is that really a win? I think anyone who has a 5800X now, or wants to upgrade to it, is laughing at Intel.

2. The price is only NOW better for Intel for the CPU itself; the platform is actually more expensive, so let's call it what it is. AMD will drop prices, so what are you gonna say then? Your price advantage will be gone and the 3% performance difference is as big as the margin of error. I'm talking gaming, not some synthetics...

3. 5800x is a 1 year old CPU, if you blame this on being gimped, let's blame Rocket Lake which is 6 months old and more gimped than the definition of gimped.

The truth is you either gimp yourself with DDR4 with Alder Lake to get (for now) better prices, or you pay a big premium over Zen3 to get a little more performance. Both are bad decisions. And the power efficiency is horrible on Alder Lake.

4. It was a figure of speech, clearly too much for you to understand.

The "holes" in the Swiss cheese are all the minuses Alder Lake has: much more expensive platform/or gimped platform, more power hungry, hotter, needs Win11, teething issues and early adopter beta tester of a new architecture (see Zen1), etc. Get it now?

5. I'll be a "fan" as much as AMD is good enough and does not do **** like intel does and did, for 7 years milking us and giving us +5% performance increments from gen to gen, forcing MB upgrades too often, etc.

6. These so-called inferior features are actually more of a drawback than an advantage right now: they are too new, unpolished, expensive, in beta, less available (DDR5) and make not much of a difference in performance. So for AMD, taking the smart road and only getting these 1 year later with Zen 4, when a lot of these issues will be gone or much less present, is the best option.

TerryLaze

Titan

> 6. These so-called inferior features are actually more of a drawback than an advantage right now: they are too new, unpolished, expensive, in beta, less available (DDR5) and make not much of a difference in performance. So for AMD, taking the smart road and only getting these 1 year later with Zen 4, when a lot of these issues will be gone or much less present, is the best option.

Sure, but if AMD has to reduce prices now then they are going to make less money for a whole year, and they aren't making that much to begin with.

Also, all of the shortages will continue through next year, meaning that making a CPU will become more expensive, more difficult and more time consuming because they will have to find all the components first.

And if they come out with these features in a year they will be one year late to the party; everybody that needs the features will already have them.

Also, Raptor Lake will come out in one year as well, and it will be based on a platform that already has a year of experience with all the new features, while the Zen platform will be as new (unpolished, expensive, in beta) as the Alder Lake platform is now.

It's neither a smart nor a dumb road for AMD, it's what they can do. If they had the means to bring out a new gen right now with all the new things, they would do it.

cc2onouui

Prominent

> Scientific and fair would be stock performance first, follow up with overclocking. Time is limited, games like to lock you out when you run them on too many different CPUs, and Windows 10 + Windows 11, DDR4 + DDR5, Stock + OC ends up being a LOT of permutations. PBO is good for about 2-5% more performance at best. It's not even remotely going to close the gap between 12600K and 5600X. It's okay for Intel to retake the gaming performance crown. The fact that AMD had the CPU lead for several years, at least in some respects, was a good run. Zen 4 might make a good counterattack. But Alder Lake is a very impressive set of architectural and software improvements and Intel deserves credit for that.

So you did know it's not fair. If 5% is not important to you, please don't make reviews any more (with due respect). It's all PBO or not at all. You are a judge and an advisor; do you prefer to be (actually, to stay) like the new AnandTech? I'm sorry for myself that every time I have to read between the lines to understand the performance, and sometimes there is nothing to read. You made the complaints look like fanboyism ("it's OK, AMD will do better with Zen 4, don't worry!!"). I give up. It doesn't matter which will do better; the answer is not here any more. It's not personal, but you did not do a good job and I'm only being fair. Keep the 5%.

Marvin Martian

Commendable

DDR5 and PCIE 5 and power consumption surely add cost to the system, so the price of the CPU doesn't come close to reflecting the actual cost of using this vs an AMD solution.

How about a more realistic performance / cost evaluation?


TerryLaze

Titan

> And the power efficiency is horrible on Alder Lake.

He is using MCE in the beginning and then he turns MCE off and thinks that means the mobo now uses Intel spec...

View: https://www.youtube.com/watch?v=m061p-B-IYo

picardfacepalm.gif

If that is how smart reviewers are then the viewers have no chance at all.

Power limits are called long duration (for PL1) and short duration (for PL2) package power limit on ASUS boards. If left at auto, the mobo will use whatever ASUS wanted them to be; you have to manually set them to Intel specs.

> DDR5 and PCIE 5 and power consumption surely add cost to the system, so the price of the CPU doesn't come close to reflecting the actual cost of using this vs an AMD solution.

Since the baseline layer count already had to increase from 4 to 6 in order to fully support PCIe 4.0, I doubt there are any major cost increases to motherboards specifically for PCIe 5.0 and DDR5. The DDR5 DIMMs are quite a bit more expensive but they'll likely hit price parity with DDR4 in two or three years as DDR5 achieves economies of scale while DDR4 gets discontinued.

Where there may be more significant cost increases at the lower-end is supporting that chunky 230W boost power as stock behavior. That will require much beefier baseline VRMs and likely another pair of power planes to comfortably handle those 200+A.

TerryLaze

Titan

> Where there may be more significant cost increases at the lower-end is supporting that chunky 230W boost power as stock behavior. That will require much beefier baseline VRMs and likely another pair of power planes to comfortably handle those 200+A.

The lower-end ones won't care and will just provide fewer watts. It's the same as the previous gen anyway, where lifted limits had the same or even higher power draw, so if they supported high power before there won't be any higher cost, and if they didn't in previous gens they won't with this one either.

> The lower-end ones won't care and will just provide less watts, it's the same as previous gen anyway where lifted limits had the same and even higher power draw

Except "power limits lifted" appears to be the stock behavior with Alder Lake (still boosts to 200+W continuous CPU power with power limits fully enforced), so the baseline requirements have effectively doubled.

TerryLaze

Titan

> Except "power limits lifted" appears to be the stock behavior with Alder Lake (still boosts to 200+W continuous CPU power with power limits fully enforced), so the baseline requirements have effectively doubled.

No it's not, reviewers just do this for ...reasons.

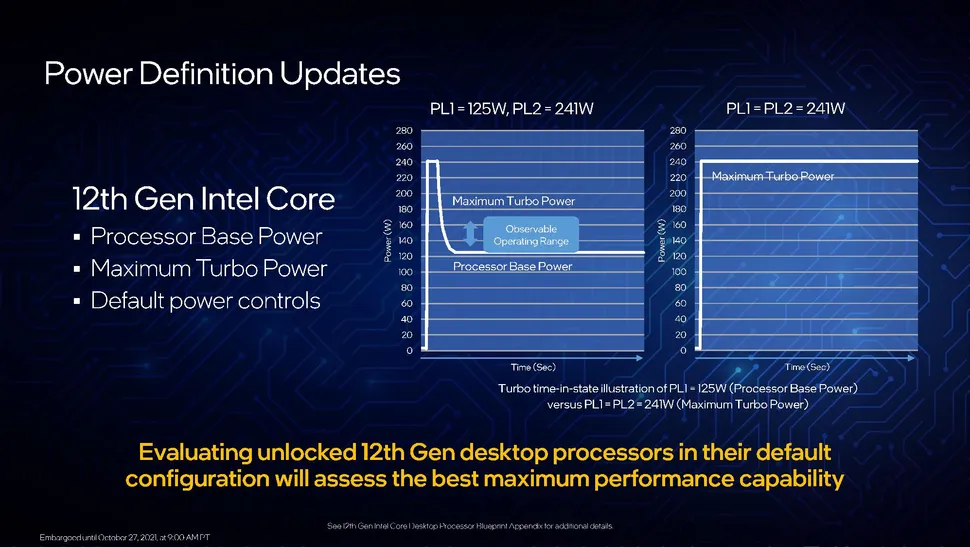

TDP (PBP) is still PL2 for x seconds and then PL1 (simplified; it's a whole song and dance to keep an average of 125W).

"Power limits lifted" is now Maximum Turbo Power and is PL1 = PL2 forever.

https://www.tomshardware.com/news/intel-alder-lake-specifications-price-benchmarks-release-date
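The "PL2 for x seconds, then PL1" behavior described above can be sketched as a toy Python model. This is only an illustration under stated assumptions, not Intel's actual algorithm: it assumes the package may draw up to PL2 as long as an exponentially weighted moving average of power (time constant `tau`) stays at or below PL1. The function name `simulate_package_power`, the 56-second `tau` and the 300W demand are placeholders chosen for the example; 125W and 241W stand in for the PBP and maximum turbo power figures being argued about.

```python
def simulate_package_power(pl1, pl2, tau, demand, seconds, dt=1.0):
    """Return per-step package power (W) under a simplified PL1/PL2 budget.

    Assumption: power is capped at PL2, and additionally capped so that an
    exponentially weighted moving average of power never exceeds PL1.
    """
    average = 0.0        # moving average of package power, starts from idle
    alpha = dt / tau     # weight of each new sample (assumes dt <= tau)
    trace = []
    for _ in range(int(seconds / dt)):
        # Largest power this step that keeps the updated average <= PL1.
        headroom = (pl1 - (1 - alpha) * average) / alpha
        power = min(demand, pl2, headroom)
        average = (1 - alpha) * average + alpha * power
        trace.append(power)
    return trace


if __name__ == "__main__":
    # "Spec" style limits: boost at PL2 first, then settle to PL1.
    limited = simulate_package_power(pl1=125, pl2=241, tau=56, demand=300, seconds=600)
    # "Limits lifted": PL1 = PL2, so the cap never drops.
    lifted = simulate_package_power(pl1=241, pl2=241, tau=56, demand=300, seconds=600)
    print(f"limited: first {limited[0]:.0f} W, last {limited[-1]:.0f} W")
    print(f"lifted:  first {lifted[0]:.0f} W, last {lifted[-1]:.0f} W")
```

In this model the enforced-limit run starts at PL2 and drops to PL1 once the average catches up, while the PL1 = PL2 run stays at the higher value indefinitely, which is the distinction the posts above are arguing over.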
