I may be a little off base here but I don't really see tiles on top of an interconnect fabric being a reversal of that trend at all.

You have to squint a little bit. However, we've had mass-market multi-chip packages going back at least as far as the Pentium Pro (1995), and the direction of travel was clearly towards monolithic dies.

Even with multi-core CPUs, some of the first examples we saw merely packed multiple dies into the same package (Pentium D). Integrating the cores on the same die came later.

Next to get integrated into a monolithic die were GPUs. Intel and AMD first had GPUs integrated into their motherboard chipsets. Then, in the first gen of "Core"-branded CPUs, Intel moved the GPU into the CPU package, but the two were still separate dies. Sandy Bridge was Intel's first gen that had them integrated together in a monolithic die.

Starting with the FPU, then the Northbridge, block after block got directly integrated into monolithic dies, sometimes with a half-way point as a discrete die in the CPU package. As far as trends go, you really can't miss it.

A monolithic die SOC has been facing scaling issues for some time now.

Don't get me wrong. I'm not arguing against chiplets or tiles. I know all the rationale behind this new shift. I just wanted to point out what a marked departure it signifies.

or at the very least get little to no gains for the expense of reinventing the wheel every time a new node comes out. Tiles allow reuse scenarios that will further increase integration.

Heh, one interesting downside Intel is facing is having to restrict the new instructions in their Arrow Lake P-cores and E-cores, because they decided to reuse the Meteor Lake SoC tile, which contains those Crestmont E-cores. Therefore, the other cores had to be held back to maintain ISA symmetry.

We are moving into an era where simply shrinking the node isn't going to increase performance.

Let's not get ahead of ourselves. Yes, SRAM scaling is an issue, and before that it was I/O scaling. However, logic density and perf/efficiency are still improving with newer nodes.

The big gains to be had are going to be task specialized cores,

Beyond AI and graphics, I'm skeptical about this.

AI driven predictive scheduling / core task routing.

That's largely orthogonal to the other stuff we're talking about.

In theory tiles could actually allow SOC memory and storage to move onto the package whereas monolithic die packages were simply too inflexible to do that.

I think you're confused. The whole discussion of monolithic vs. chiplets has nothing to do with on-package memory.

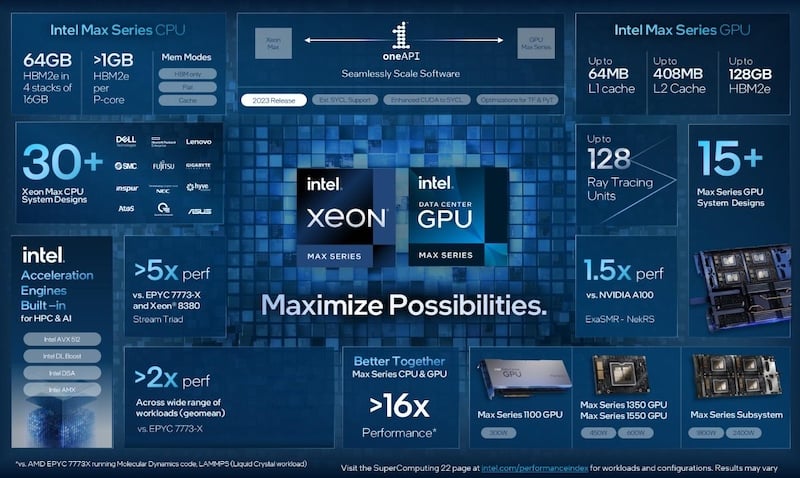

Apple still uses monolithic dies (except for the M-series Ultra) and they have on-package memory. GPUs largely remain monolithic, and have used on-package HBM for 8 years now. Around the time that started, Intel's Xeon Phi was also monolithic and incorporated 16 GB of MCDRAM.

As for storage, it's much less heat-tolerant (and more failure-prone) than DRAM or logic. So, it really doesn't make sense to bring it on-package (leaving aside some specialized embedded products).