I just wanted to bring this back to the fore to throw a little 'salt' on the great performance increase (over the Ryzen 5000 and 12th gen Intel) touted by Intel.

Many here know not to trust first-party reviews, whether from AMD, Intel, NVIDIA, or whoever, due to blatant inaccuracies or non-like-for-like performance comparisons. Well, Intel has done it again.

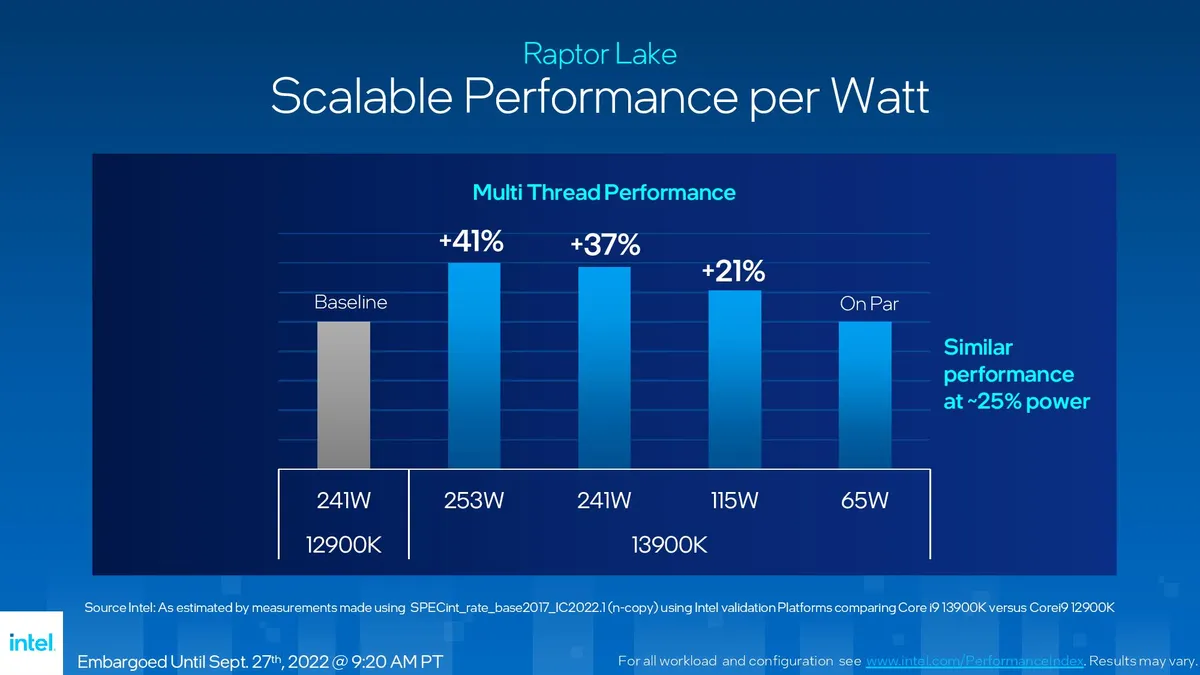

In their 'Leadership Gaming performance' slide (shown below), Intel shows a decent performance increase over their own 12th gen CPU and AMD CPUs. When you read the fine print, however, you'll see that they're pitting the 13900k with 5600MT/s RAM up against the 12900k with 4800MT/s RAM. Depending on the game, this can leave anywhere from 2-10% of performance on the table. The AMD system was also run with only 3200MT/s RAM, with no RAM timings listed, AND the Ryzen CPUs were limited to a PL of 105W (basically, PBO disabled).
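To put a rough number on how much the RAM mismatch alone can eat into a headline figure, here is a quick back-of-the-envelope sketch. The 15% claimed uplift is a made-up placeholder, not a number from Intel's slide, and I'm assuming the 2-10% range means "how much faster the older CPU would score with the same RAM". The function name is just something I picked for illustration.

```python
def like_for_like_uplift(claimed_uplift, baseline_ram_gain):
    """Estimate the uplift that remains once the baseline system
    is given the same RAM advantage as the new one.

    claimed_uplift:    headline gain as a fraction (0.15 = 15%)
    baseline_ram_gain: assumed gain the baseline would see from
                       matching RAM, as a fraction (0.02 to 0.10)
    """
    return (1 + claimed_uplift) / (1 + baseline_ram_gain) - 1


# Hypothetical headline number, NOT taken from the slide.
claimed = 0.15

# The 2-10% range quoted above for the RAM speed difference.
for ram_gain in (0.02, 0.10):
    adjusted = like_for_like_uplift(claimed, ram_gain)
    print(f"RAM handicap {ram_gain:.0%}: like-for-like uplift is about {adjusted:.1%}")
```

With those placeholder inputs, a claimed 15% shrinks to somewhere between about 12.7% and 4.5%, and that is before accounting for the 105W limit on the Ryzen side.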

Now, Intel (and many others) may be quick to point out that the RAM used is what was officially supported, and that's fine (I guess), but it is well known that Ryzen 5000 CPUs get a substantial boost from running memory up to 3600-3800MT/s. What about the 105W limit on the Ryzen CPUs though?! I guess AMD's auto-overclocking isn't supported by Intel, but Intel's auto-overclocking is?? Totally ridiculous! It turns the graph below into a completely meaningless slide.

So, if you're on an Intel 12th gen OR an AMD Ryzen 5000 CPU, just know that the 13th gen does NOT give as great a boost over the previous gen as Intel is making it seem. As always, wait for multiple reputable 3rd party reviews, from reviewers who actually take other factors out of the equation and test the actual generational CPU performance increase as we'll see it in the real world.

https://uploads.disquscdn.com/image...0383ad177aa624aa43ec1ed2e46639d21547dcaf1.jpg