Gon Freecss

aldaia:

Gon Freecss:

juanrga:

-Fran-:

In other less heated discussions (pun might be intended): https://techreport.com/news/33517/rumor-intel-partner-docs-add-to-eight-core-coffee-lake-cpu-chatter

Covfefe Lake "S" might come with an 8-core variant! What do you know, uh? Something, something, power delivery, years, design, something.

A company like Intel has a plan A and a couple of plan Bs in the pipeline in case plan A fails. It is not as if engineers designed, implemented, and validated an 8-core CFL in three months.

Also, I'm really interested in knowing why they didn't just backport Cannon Lake to 14nm++. They already had an 8-core design ready back in 2016.

The Cannon Lake backport to 14nm++ already exists, and it's called Coffee Lake 🙂

Coffee Lake is the third 14 nm process refinement following Broadwell, Skylake, and Kaby Lake, intended for desktop.

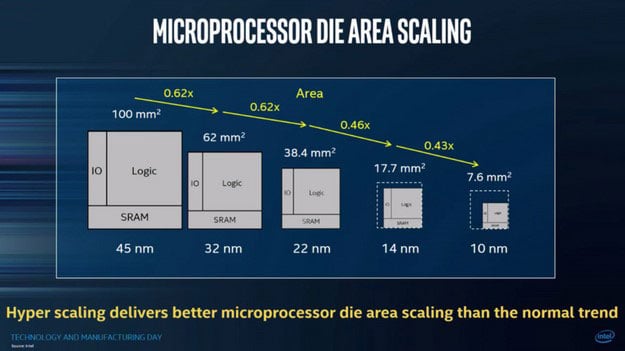

Cannon Lake is Intel's 10 nm die shrink following Broadwell, Skylake, and Kaby Lake, intended for mobile.

The upcoming Cascade Lake is the third (or maybe fourth) 14 nm process refinement following Broadwell, Skylake, and Kaby Lake, intended for servers and enthusiasts.

Skylake, Kaby Lake, Coffee Lake, Cannon Lake, and Cascade Lake all share the same microarchitecture.

Broadwell to Skylake was the last microarchitecture change.

Cannon Lake falls under the same microarchitecture umbrella as Skylake, but it's not identical: it has IPC improvements.