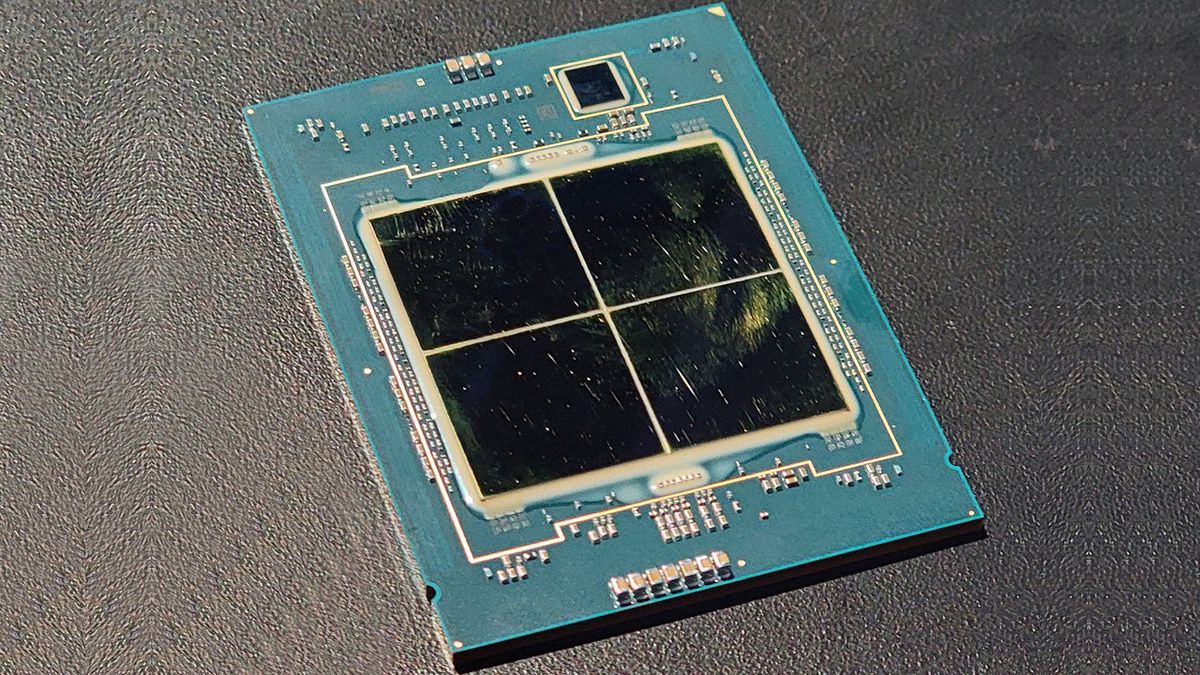

Extremely overclocked 56-core Intel Xeon CPU consumes an extreme amount of power.

Intel's Xeon W9-3495X Can Draw 1,900W of Power : Read more

33 watts per core. Is that bad? I am not an engineer so I'm not certain if that is good or bad. Server chips are meant to run much lower on clocks I know.

The fact alone that all of the cores are able to hit 5.5 GHz is a feat.

> 33 watts per core. Is that bad? I am not an engineer so I'm not certain if that is good or bad. Server chips are meant to run much lower on clocks I know.

Well, a Ryzen 9 7950X3D will draw about 10-15 W per core at 5.5 GHz. It's harder to tell with Intel's current offering due to the two sizes/speeds of cores, which sort of messes with the calculations. But judging by the power draw of the 13000 series, 33 W per big core is probably correct. So I'd say that's normal for Intel?

> 33 watts per core. Is that bad?

Yeah, that's the first thing I did. Actually, it's almost 34 W/core. And I was going to say I think they could go further.
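For reference, the per-core number being debated is just the article's 1,900 W headline figure divided by the chip's 56 cores. A minimal sketch of that arithmetic, assuming the whole 1,900 W is split evenly across the cores (it isn't, strictly, since the cache, fabric and memory controllers draw power too); the function name is made up for illustration:

```python
def watts_per_core(total_watts: float, cores: int) -> float:
    """Naive average: total power split evenly across all cores."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_watts / cores


# 1,900 W and 56 cores are the figures from the article.
print(f"{watts_per_core(1900, 56):.1f} W/core")  # 33.9 W/core
```

So "33 W" is rounding down a little and "almost 34 W" is the closer description.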

> That's actually really efficient considering the extreme ends of the spectrum we're inhabiting here.

No offense, but this feels like motivated reasoning. If you have more to say about your rationale, I'd be interested in hearing it.

> well, a ryzen r9 7950x3d will draw about 10-15W per core at 5.5ghz, it's harder to tell with intel's current offering due to the two sized/speed cores; which sort of messes with the calculations. but judging by the power draw of the 13000 series 33w/ big core is probably correct. so i'd say that's normal for intel?

It does use 10-15 W per core, but it doesn't run at 5.5 GHz; it barely reaches 5 GHz with all cores loaded.

The 13900K uses 32 W for a single-threaded load, although it runs higher clocks; the 7950X uses 43 W.

https://www.techpowerup.com/review/intel-core-i9-13900k/22.html

> The 13900k uses 32W for single thread load

That must be subtracting off idle power consumption. Otherwise, it's quite at odds with Anandtech's measurement for the i9-12900K, although both claim to be measuring CPU package power. In that TechPowerUp chart, they claim i9-12900K's single-threaded power is only 26 W, yet Anandtech got 78 W (or 71 W if you subtract off idle).

> That must be subtracting off idle power consumption. Otherwise, it's quite at odds with Anandtech's measurement for the i9-12900K, although both claim to be measuring CPU package power. In that TechPowerUp chart, they claim i9-12900K's single-threaded power is only 26 W, yet Anandtech got 78 W (or 71 W if you subtract off idle).

Quote from that Anand review:

> Because this is package power (the output for core power had some issues), this does include firing up the ring, the L3 cache, and the DRAM controller, but even if that makes 20% of the difference, we’re still looking at ~55-60 W enabled for a single core.

I didn't notice TechPowerUp saying anything about it, so I don't know if they are using core only or package power.

> Here's the single-threaded data from Toms' review of the i9-13900K:

So if it uses 46W for a power virus why is 33W for a normal workload so unbelievable?!
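To make the idle-subtraction point concrete, here is a rough sketch using the Anandtech numbers quoted above for the i9-12900K (78 W package power for a single-threaded load, 71 W after subtracting idle, so roughly 7 W of idle). The 20% non-core share is the hypothetical from the Anandtech quote; applying it to the idle-subtracted figure is an assumption on my part about how they land at ~55-60 W, and the helper name is invented:

```python
def estimated_core_watts(load_package_w: float,
                         idle_package_w: float,
                         non_core_share: float) -> float:
    """Package power under load, minus idle, minus an assumed share
    for the ring, L3 cache and DRAM controller."""
    active_w = load_package_w - idle_package_w
    return active_w * (1.0 - non_core_share)


# 78 W load and ~7 W idle are implied by the "78 W (or 71 W)" figures;
# 0.20 is the hypothetical share mentioned in the Anandtech quote.
print(f"{estimated_core_watts(78.0, 7.0, 0.20):.1f} W")  # 56.8 W
```

Whether a review reports the 78 W, the 71 W or something like the 57 W changes the headline number a lot, which is why it matters what each site is actually measuring.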

> My old Xeon idles at about 75 W, which gives 4.68 W per core, in a system with 8 DIMMs and a GTX 1650.
>
> The CPU's power is limited to 140 W, or 8.5 W per core. With this 1200 W power draw, it could run 141 cores. It's insane.

That's a little sloppy. Your per-core utilization is actually 8.75 W and that would extrapolate to 137 cores @ 1.2 kW package power.
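The two extrapolations above differ only in the per-core figure they start from. A quick sketch of the arithmetic, assuming the old Xeon has 16 cores (not stated in the thread, but it is what both 75 W / 4.68 W and 140 W / 8.75 W work out to) and taking the 1.2 kW budget from the posts as given:

```python
def cores_at_budget(budget_watts: float, watts_per_core: float) -> float:
    """How many cores fit in a power budget if power scaled linearly."""
    return budget_watts / watts_per_core


ASSUMED_CORES = 16  # assumption: inferred from the posted figures

per_core = 140 / ASSUMED_CORES
print(per_core)                              # 8.75
print(int(cores_at_budget(1200, per_core)))  # 137
print(int(cores_at_budget(1200, 8.5)))       # 141
```

Either way it is a back-of-the-envelope number: it treats the whole package limit as core power and assumes it scales linearly with core count.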

> I didn't notice techpowerup saying anything about it so I don't know if they are using core only or package power.

Right at the top of the page you linked, they state they're measuring:

> "voltage, current and power flowing through the 12-pin CPU power connector(s)"

> So if it uses 46W for a power virus why is 33W for a normal workload so unbelievable?!

Context is key. The article quoted the 1.9 kW figure for a Cinebench workload and the post you replied to was asking about the per-core utilization of that benchmark. It's not a good answer to the question if you cite data from a markedly less-stressful workload as though it's comparable.

> Heck, this is package power as well so core alone is lower than 46W and could even be around 33W.

Could be. If you're aware of any more relevant benchmarks, please share them.

> Right at the top of the page you linked, they state they're measuring:
>
> "voltage, current and power flowing through the 12-pin CPU power connector(s)"

Yes, so does that mean package power or individual core?

> Yes, so does that mean package power or individual core?

TechPowerUp and Anandtech both seem to be measuring package power.

> I will sell this CPU to get the 2696 v4; with 22 cores, maybe it can turbo to 3.0 on all cores.

That's great for scalable workloads, but I also care about lightly-threaded performance. For my needs, desktop CPUs strike a better balance.

> Any CPU that has the ability to consume 1900 watts of power is not impressive.

I'm impressed, in a way. I've heard references to mainframe CPUs using multiple kW of power, but I don't know if I've ever previously heard about an x86 CPU using kW of power, and this is using nearly 2!

> Compare it to the EPYC genoa,

No, this feat isn't about energy efficiency. By definition anything being overclocked is running well beyond its peak-efficiency point - not to mention LN2 overclocking!

> Efficiency should be the biggest consideration, and intel has never really been great with that,

For a lot of people & industry, efficiency is a major consideration. Just not 'leet gamerz or overclockers.

> remember, when you buy this Xeon, Intel still holds some features ransom until you pay them even more money!

Not this Xeon. It's from their Xeon W lineup, which I believe doesn't have any "Intel On Demand"-controlled features.

> Intel still holds some features ransom until you pay them even more money!

And do you know why this is possible?!

> everybody including servers and everything has moved on from using just CPU cores to do their work, you want to talk efficiency?! Everybody in the industry is using accelerators now which is why CPU core efficiency is much less important now.
>
> It's only still important for a very few fields that don't have any accelerators.

I think you're overstating your case, but I agree that purpose-built accelerators are part of the efficiency formula for many workloads. E-cores are another important part, as is in-package memory - both of which feature in current Intel products.