> They may worry about "price sensitivity" now, but what will it be 3-5 years down the line when game developers look at what GPUs their target audience is using and 60+% of it is using GTX2060s or worse? By pricing the entry level out of 50+% of the market's budget today, Nvidia and AMD are forcing future game developers to either forfeit half of their potential audience or continue targeting 5-10-year-old hardware.

Eh, Intel is supposedly planning Arrow Lake's tGPU to have up to 3.5x as many shaders as we have now. To feed it, they'll presumably have to add in-package DRAM. So, things could get interesting...

News Jacketless Jensen Promised "Inexpensive" GPUs Back in 2011

Page 4 - Seeking answers? Join the Tom's Hardware community: where nearly two million members share solutions and discuss the latest tech.


> This is going to be fun when AMD and Nvidia announce their next round of driver support cuts for still widely used GPUs like the RX480 and GTX10xx that are still competitive

The 480 is 13 years old, which raises a few questions.

- How long exactly do you believe NVidia and AMD should be obligated to modify drivers for antiquated GPUs to accommodate the newest games and OSes?

- How do you expect those companies to cover those development costs, other than by pricing them into current-gen GPUs?

The 480 runs at 1/15 the speed of Nvidia's latest-gen. Anyone who honestly can't afford to upgrade a 13-year-old board is someone who can't afford to purchase new games and OSes, meaning they'll be just fine continuing with their old drivers.

-Fran-


> The 480 is 13 years old, which raises a few questions.
>
> - How long exactly do you believe NVidia and AMD should be obligated to modify drivers for antiquated GPUs to accommodate the newest games and OSes?
> - How do you expect those companies to cover those development costs, other than by pricing them into current-gen GPUs?
>
> The 480 runs 1/15 the speed of NVidia's latest-gen. Anyone who honestly can't afford to upgrade a 13-year old board is someone who can't afford to purchase new games and OSes, meaning they'll be just fine continuing with their old drivers.

Just a clarification: you're thinking of the GTX480, Fermi. The RX480 is Polaris.

RX480 launch:

| Release date | 29 June 2016; 6 years ago |
|---|---|

GTX480 launch:

| Release date | April 12, 2010; 12 years ago |
|---|---|

AMD still supports the Polaris siblings and nVidia dropped anything before Maxwell not long ago:

"Nvidia announced in April 2018 that Fermi will move to legacy driver support status and be maintained until January 2019."

https://en.wikipedia.org/wiki/GeForce_400_series

Regards.

sitehostplus


> In today's dollars, that's $600, and yet today, $600 will buy you a GPU about 250 times as powerful as that 3Dfx Voodoo. Thank you, Dr. Huang.

Meanwhile, every other component in that same computer actually costs the same or less than it did back then.

CPUs, 30% cheaper.

Hard drives? Memory? It's a freaking joke what you can buy for the same price today vs back then.

Motherboards? More features for the same money you paid back then. Back then, motherboards didn't have integrated Ethernet, Wi-Fi, Bluetooth, premium sound, and barebones graphics. Show me one motherboard that doesn't have those features today.

Meanwhile, GPUs have actually risen in price from $500 to $1,600. And if you think that's cool, ask yourself one question: Why is it that you can buy an Xbox, PlayStation, or Nintendo with virtually the same graphics capability for 1/3 of the price of the graphics card in the PC?

Something is rotten in Denmark, and it's not the cheese.

> Meanwhile, every other component in that same computer actually costs the same or less than it did back then.
>
> CPUs, 30% cheaper.
>
> Hard drives? Memory? It's a freaking joke what you can buy for the same price today vs back then.
>
> Motherboards? More features for the same money you paid back then. Back then, motherboards didn't have integrated Ethernet, Wi-Fi, Bluetooth, premium sound, and barebones graphics. Show me one motherboard that doesn't have those features today.

Are you being sarcastic?

In 2011, a top-end mainstream CPU cost about $300, or half what a top-end mainstream CPU currently goes for.

Of course, memory is cheaper per-GB, but my sense is that people usually spend similar amounts and just get more GB.

Motherboards typically did have integrated Ethernet and sound. 2011 was the year Sandy Bridge came out, and most boards supporting it had a pass-through for its iGPU.

> Why is it that you can buy an Xbox, PlayStation, or Nintendo with virtually the same graphics capability for 1/3 of the price of the graphics card in the PC?

No, the graphics capability of a PS5 is about 2/3rds that of an RTX 4060. The RTX 4090 you're comparing it to is like 10x as powerful.

> Motherboards? More features for the same money you paid back then. Back then, motherboards didn't have integrated Ethernet, Wi-Fi, Bluetooth, premium sound, and barebones graphics. Show me one motherboard that doesn't have those features today.

On-board sound and Ethernet have been pretty much standard since about 2000. Integrated graphics, albeit in the chipset, has been an option for about as long too. Most modern motherboards are available in both WiFi/BT and non-WiFi/BT variants. I would have gladly bought the non-WiFi version of my motherboard to save $20, since I have zero use for it, but it wasn't available yet.

sitehostplus


> Are you being sarcastic?
>
> In 2011, a top-end mainstream CPU cost about $300, or half what a top-end mainstream CPU currently goes for.
>
> Of course, memory is cheaper per-GB, but my sense is that people usually spend similar amounts and just get more GB.
>
> Motherboards typically did have integrated Ethernet and sound. 2011 was the year Sandy Bridge came out, and most boards supporting it had a pass-through for its iGPU.
>
> No, the graphics capability of a PS5 is about 2/3rds that of an RTX 4060. The RTX 4090 you're comparing it to is like 10x as powerful.

1. No, I'm not being sarcastic. When AMD first launched its top-of-the-line 1 GHz Athlon, it actually cost about $1,000. Here's the article explaining it.

2. In the year 2000, you were lucky to get a motherboard with Ethernet and built-in hard drive controllers. Today, they include almost everything besides a CPU, memory, mass storage, and a power supply.

3. Yeah, I think I put my foot in my mouth on that one. But seriously, graphics cards have gone in the exact opposite direction of everything else. Had there been actual competition, they would have put more into R&D and they would have found ways to keep prices down. What is going on now is because there is no competition to Nvidia.

> 2. In the year 2000, you were lucky to get a motherboard with Ethernet and built-in hard drive controllers. Today, they include almost everything besides a CPU, memory, mass storage, and a power supply.

Huh? I bought a P3-650 and the cheap motherboard I got for it back in 2000 had 100TX Ethernet, sound, and all of the basic IO on-board, though the old AC97 audio chips usually sucked and it wasn't until my Core 2 Duo that on-board audio got good enough for me to no longer bother with audio cards. On-board Gigabit Ethernet became mainstream around 2006.

You have to go all the way back to the earlier days of the 486 to find boards without on-board drive controllers. Later-day 486 boards started using super-IO chips from NatSemi and others to consolidate common IOs. Some modern motherboards still use such super-IO chips for legacy stuff like serial, parallel, PC-speaker, PS/2, etc. ports.

> No, the graphics capability of a PS5 is about 2/3rds that of an RTX 4060. The RTX 4090 you're comparing it to is like 10x as powerful.

That is the bare hardware level, but the nature of how consoles operate and the simple fact that the games are coded specifically for them makes them effectively much more powerful than their hardware would suggest.

Just look at modern games like The Last of Us Part I. You need a 4060 Ti to match what a PS5 can create (4K, high quality, 60fps). While PC gamers can surpass a console's performance now, doing so costs way more than a console for the GPU alone.

> Nvidia CEO idealistically talks about affordable GPUs at Stanford, back in 2011. Have Nvidia's priorities changed or do we need to look at the bigger picture?
>
> Jacketless Jensen Promised "Inexpensive" GPUs Back in 2011

them leather jackets are not cheap!!

> Why'd this 5 month old thread get bumped???

Probably because the site recommended an old article and someone decided to throw their $0.02 at it without looking at the date. Got me a couple of times.

> 1. No, I'm not being sarcastic. When AMD first launched its top-of-the-line 1 GHz Athlon, it actually cost about $1,000. Here's the article explaining it.

That article is dated 2000. The one you're commenting on quotes a talk he gave in 2011 - it's right in the title of this comment thread! That's not a small difference!

> seriously, graphics cards have gone in the exact opposite direction of everything else.

No, not really. CPUs have gotten about twice as expensive in the past 6 years or so. I'm sure prices for other components, like HDDs and motherboards, have gone up too, at least if you look at the long-term trends and not just what they've been for the past 6 months or so.

The only way anything gets cheaper is if you price it per unit of capacity or compute. Storage, memory, etc. are cheaper per GB. But, if that's how you look at it, GPUs have actually done really well.

| Year-Month | GPU | GFLOPS | MSRP | GFLOPS/$ |
|---|---|---|---|---|
| 2013-11 | GTX 780 Ti | 5046 | $699 | 7.22 |
| 2015-06 | GTX 980 Ti | 6054 | $649 | 9.33 |
| 2017-03 | GTX 1080 Ti | 11340 | $699 | 16.22 |
| 2018-09 | RTX 2080 Ti | 13448 | $999 | 13.46 |
| 2020-09 | RTX 3090 | 35580 | $1,499 | 23.74 |
| 2022-10 | RTX 4090 | 82600 | $1,599 | 51.66 |

In less than a decade, Nvidia is giving you about 7.16x as much GFLOPS/$ and all you can do is complain!

; )
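For anyone who wants to check the arithmetic, here is a minimal Python sketch that recomputes the GFLOPS/$ column and the overall ratio. The numbers are copied straight from the table; the names `FLAGSHIPS`, `gflops_per_dollar`, and `value` are just illustrative, and nothing here adjusts MSRP for inflation.

```python
# Rows copied from the table above: (launch, GPU, peak GFLOPS, launch MSRP in USD).
FLAGSHIPS = [
    ("2013-11", "GTX 780 Ti", 5046, 699),
    ("2015-06", "GTX 980 Ti", 6054, 649),
    ("2017-03", "GTX 1080 Ti", 11340, 699),
    ("2018-09", "RTX 2080 Ti", 13448, 999),
    ("2020-09", "RTX 3090", 35580, 1499),
    ("2022-10", "RTX 4090", 82600, 1599),
]


def gflops_per_dollar(gflops: float, msrp: float) -> float:
    """Peak throughput per dollar of launch MSRP (not inflation-adjusted)."""
    return gflops / msrp


# Illustrative lookup: GPU name -> GFLOPS/$.
value = {name: gflops_per_dollar(g, p) for _, name, g, p in FLAGSHIPS}

# Improvement from the first row of the table to the last.
overall = value["RTX 4090"] / value["GTX 780 Ti"]

for launch, name, _, _ in FLAGSHIPS:
    print(f"{launch}  {name:<12} {value[name]:6.2f} GFLOPS/$")
print(f"780 Ti -> 4090: {overall:.2f}x")
```

Run as-is, it should reproduce the table's last column and the roughly 7.16x figure. It also makes the one regression easy to spot: the RTX 2080 Ti delivered fewer GFLOPS/$ than the GTX 1080 Ti before it.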

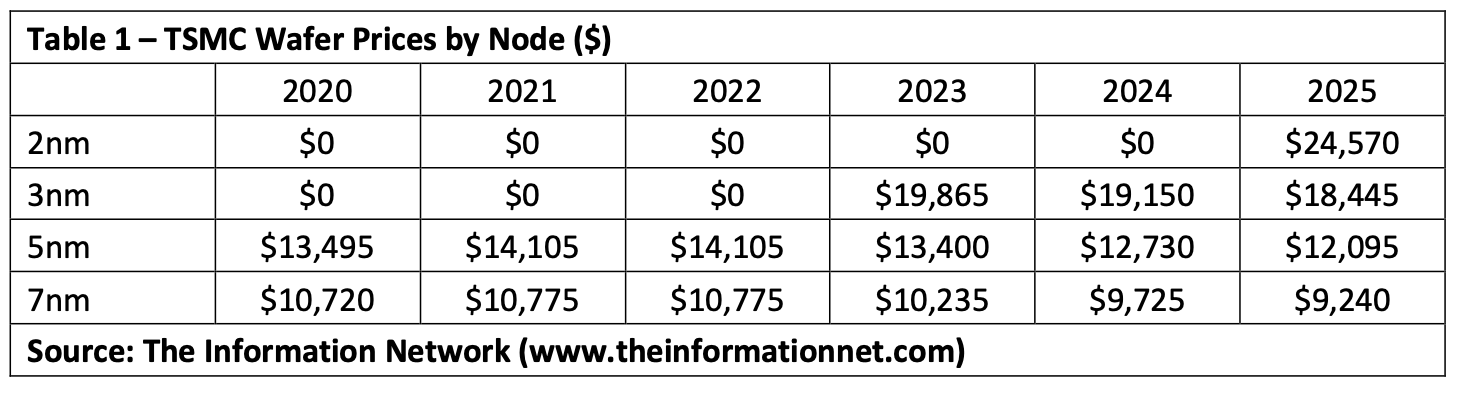

> Had there been actual competition, they would have put more into R&D and they would have found ways to keep prices down.

Oh, there's been plenty pumped into R&D. The main problem is that fab nodes are getting more expensive. You can't really get around that.

Also, Nvidia didn't really help the cause of raster gaming by adding deep learning and ray tracing hardware into its GPUs. AMD added ray tracing to RDNA2 and now they've added matrix-multiply hardware to RDNA3, further decreasing the raster performance-density of their GPUs. And I'm sure the increasing number and complexity of video codecs people expect their GPUs to accelerate hasn't helped, either!

All of that stuff is only adding cost to your hardware, if you're not using it. Even if you do use it, maybe you think it's free? Then again, I'm sure Nvidia has sold quite a lot of GPUs on the backs of their Deep Learning prowess, with students convincing their parents they need a powerful GPU for school. That additional volume should help them amortize NRE costs.

Anyway, the trend of ever more expensive fab nodes is only going to get worse. I think we collectively failed to imagine what it would look like when Moore's Law ends - it's not like hitting a wall, but rather just spending ever increasing amounts of money to chase ever-diminishing returns.

> What is going on now is because there is no competition to Nvidia.

Only at the very top end. Below that, AMD and even Intel are able to find price points where they're competitive.


> That is the bare hardware level, but the nature of how consoles operate and the simple fact that the games are coded specifically for them makes them effectively much more powerful than their hardware would suggest.
>
> Just look at modern games like The Last of Us Part I. You need a 4060 Ti to match what a PS5 can create (4K, high quality, 60fps). While PC gamers can surpass a console's performance now, doing so costs way more than a console for the GPU alone.

Yes, I actually agree with you about that. A key selling point of consoles has long been that they provide a fixed optimization target for developers, making it much more likely that games will be tuned to run well on them.

That's not really how it came up, though. It wasn't a comment about the value a console represents to consumers, but rather a claim about their raw performance that I took issue with.

> Why'd this 5 month old thread get bumped???

I'll say this: most of us seem to have been triggered by the subject of GPU-pricing and probably didn't even notice what the article was really about!

To summarize: someone found a video of a 2011 talk where Jensen was describing Nvidia's founding mission.

“We started a company and the business plan basically read something like this. We are going to take technology that is only available only in the most expensive workstations. We’re going… to try and reinvent the technology and make it inexpensive. ... the killer app was video games.”

So, the time period he's talking about is about 30 years ago, since Nvidia was founded in 1993. And the 3D hardware he's contrasting against is like SGI workstations costing $50k and up. Back in '93, you'd be talking about at least an Indigo workstation with Elan Graphics:

SGI Indigo - Wikipedia

Or, if you had deep pockets (i.e. government, military) and were messing about with simulators or VR, maybe an Onyx RealityEngine:

With people spending so big on 3D hardware, it's understandable why it might seem daft to try and make a low-cost version for lowly video gamers. This was before development had even started on the Nintendo 64. 1993 was the same year Star Fox launched on the SNES and Virtua Fighter hit the arcades, so they were really at the leading edge of the 3D gaming revolution.

BTW, every Star Fox cartridge contained a SuperFX chip, which was basically a custom RISC CPU. I think I remember reading that, at the time, it was thought to have the highest production volume of any RISC CPU ever made.


> Probably because the site recommended an old article and someone decided to throw their $0.02 at it without looking at the date. Got me a couple of times.

Yeah, MetalScythe did it with some gibberish.

> To summarize: someone found a video of a 2011 talk where Jensen was describing Nvidia's founding mission.
>
> “We started a company and the business plan basically read something like this. We are going to take technology that is only available only in the most expensive workstations. We’re going… to try and reinvent the technology and make it inexpensive. ... the killer app was video games.”
>
> So, the time period he's talking about is about 30 years ago, since Nvidia was founded in 1993. And the 3D hardware he's contrasting against is like SGI workstations costing $50k and up.
>
> ...

So while things seem bad, Nvidia is still achieving their mission.

Ahh, a hit to my nostalgia. I would rent that game often. Now I play it every now and then on the PC.
