You don't understand. At all.

GPUs take a given data set and transform it, then grab the next data set and transform it. It's massively parallel processing, and how it works is actually rather simple, so to speak.
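To make that concrete, here's a toy sketch in Python of the "same transform over every element" pattern. The `shade` function and the pixel values are made up for illustration; a real GPU runs this kind of per-element work across thousands of hardware lanes at once rather than in a loop.

```python
def shade(pixel):
    """Hypothetical per-pixel transform: brighten by 10%, clamp to 255."""
    return min(255, pixel * 11 // 10)


# One "data set" (a tiny made-up frame). Every element gets the same
# operation and no element depends on any other, which is what makes
# the work easy to spread across many parallel units.
frame = [0, 100, 200, 250]
shaded = [shade(p) for p in frame]

print(shaded)
```

Because no pixel depends on its neighbours here, there's nothing to predict and no pipeline to stall, which is the contrast with CPU workloads.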

CPUs are different. They do all sorts of different computations and tasks. To understand increases in IPC, you need to understand how pipelines are built and how branch prediction works to keep the pipeline full. This doesn't really happen the same way with GPUs.

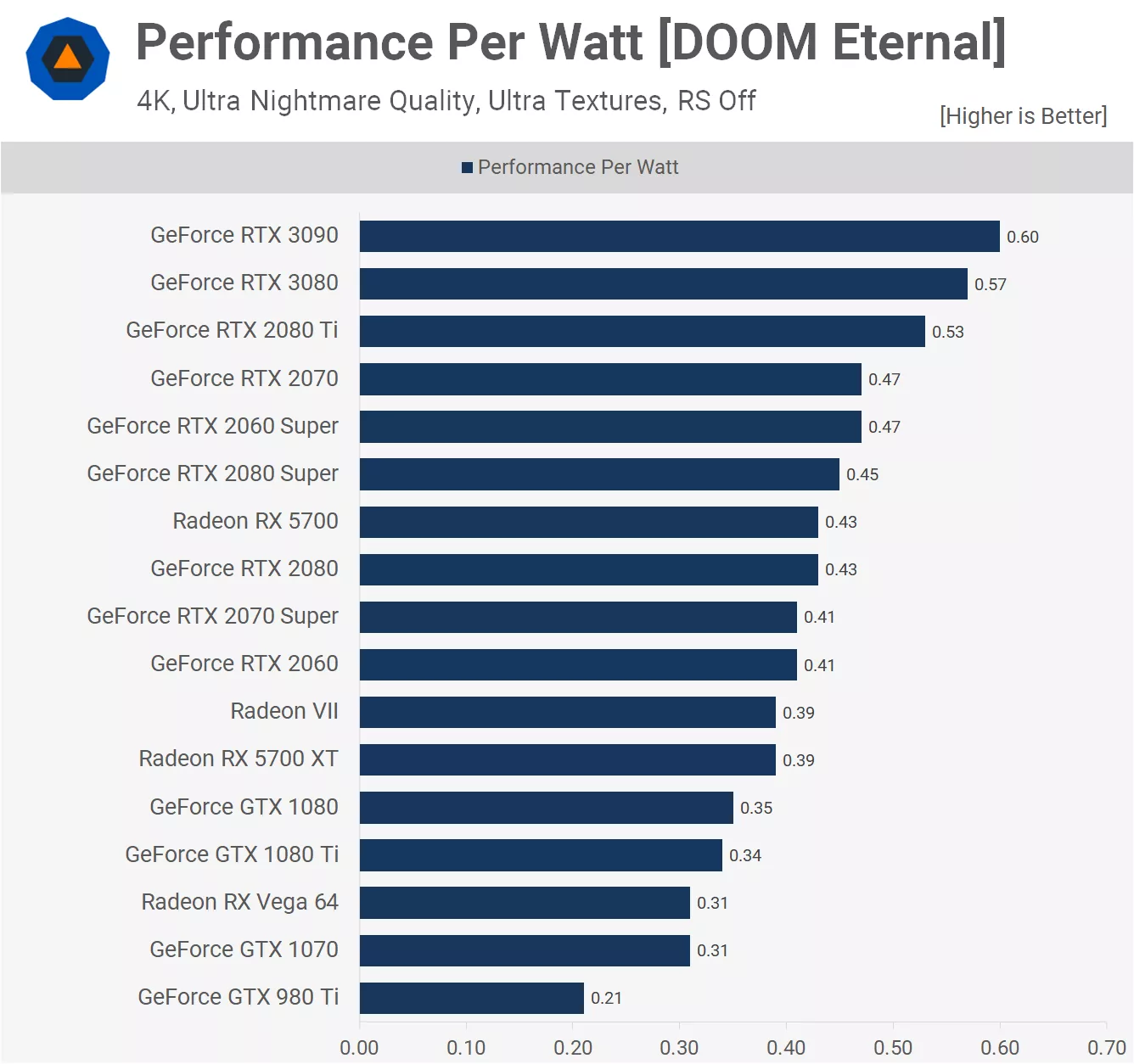

For GPUs, it takes a certain number of transistors, running at a certain speed, to produce output at a specified resolution and FPS. Power draw is a function of what's needed to run that number of transistors at a given clock speed, at a voltage that keeps errors from happening due to under- or overvoltage. **

Therefore, if you want a higher resolution at the same FPS, or higher FPS at the same resolution, you're increasing either the transistor count or the clock speed, and either way it REQUIRES a higher power draw. A GTX 1080 just can't do any meaningful 4K resolution, as it doesn't have the transistor count, nor could you increase the clock speed to what's required without liquid nitrogen.
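A rough way to see this is the textbook dynamic power approximation, P ≈ C · V² · f. The sketch below is only a back-of-the-envelope model on the same node: it assumes switched capacitance grows in proportion to transistor count, ignores leakage, and the scenario numbers are hypothetical, not measurements of any real card.

```python
def relative_dynamic_power(transistor_scale, clock_scale, voltage_scale=1.0):
    """Dynamic power relative to a baseline chip on the same node.

    Uses the approximation P ~ C * V^2 * f and assumes (a simplification)
    that switched capacitance C scales with the number of transistors.
    Leakage power is ignored.
    """
    return transistor_scale * voltage_scale ** 2 * clock_scale


# Hypothetical scenario 1: double the transistors, same clock and voltage.
wider = relative_dynamic_power(transistor_scale=2.0, clock_scale=1.0)

# Hypothetical scenario 2: same transistors, 1.5x clock, and 10% more
# voltage to keep the chip stable at that clock.
faster = relative_dynamic_power(
    transistor_scale=1.0, clock_scale=1.5, voltage_scale=1.1
)

print(f"2x transistors, same clock: {wider:.2f}x power")
print(f"1.5x clock at 1.1x voltage: {faster:.2f}x power")
```

Either knob pushes power up, and the clock route gets worse quickly because the voltage term is squared.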

We're in the 4K gaming era now, and we have been for at least a couple of years. The amount of power needed for that is going to be higher because the transistor count demands it for any given FPS. If you are still running 1080p, then you won't need anything more than a 4050 when it comes out. If you're at 1440p, then a 4060 is probably all you will need. Either way, the power usage will be lower than what the previous generations needed for the same performance.

**Edit: Given the same node. A smaller node will require less power, as mentioned in my first post.