Nvidia GeForce GTX 1000 Series (Pascal) MegaThread: FAQ and Resources

simon12

Splendid

Looks like 480 vs 1060 in price/performance will depend on what deal you can get on which card at the time you buy. When everything is priced as it should be, the 4GB 480 is the winner and the FE 1060 is the biggest ripoff. I would expect some crazy discounts on all R9 cards and 900-series cards soon, if not already.

17seconds

Champion

This is pretty cool. It's the lowest recommendation from them I have seen.

Here is Guru3D's power supply recommendation:

GeForce GTX 1060 - On your average system the card requires you to have a 350~400 Watts power supply unit.

http://www.guru3d.com/articles_pages/geforce_gtx_1060_review,8.html

U6b36ef

Distinguished

17seconds :

Oh by the way Melenie, nice rig! Looks like it belongs in the museum of modern art.

@melenie, yeah it does look stunning. However I can't actually make out how it is a PC. I can see roughly the motherboard, and where the liquid cooling fits over the CPU. The PSU at the bottom. Not the graphics card though; no idea where that is. It doesn't appear to be in the regular place for a GPU slot.

However I guess it's just because there is flashy tech going on. I have never seen cooling like that before. Another photo would probably show more clearly what's going on.

renz496

Champion

U6b36ef :

It's quite shocking that almost two months after launch, there are virtually no custom cards for sale. Even the FE's are in limited stock.

If the chip is so hard to produce I wonder whether Nvidia will even keep going with it. Or I am worrying too much and there will be loads of Pascal cards around very soon.

Math Geek :

page 1 has a link to that nowinstock site that seems fairly accurate. but overall, stocks are still very low considering how long they have been out now.

I consider this the norm when a card launches together with a new node shrink. The last time we had a node shrink was in 2012. After the 600 and 7000 series launched, we got used to seeing new cards hard-launch together with custom models on day one.

renz496

Champion

king3pj :

Anyone have an explanation for why the 1070/1080 went with a single 8 pin PCIe cable when the 970 had two 6 pin cables? I personally like the single 8 pin better just because it allows me to have a cleaner case with one less cable coming out of my PSU.

From what I read it sounds like two 6 pin cables and one 8 pin cable provide the exact same 150W. On top of that GPUs are able to draw another 75W from the motherboard.

It looks like the 970 had a TDP of 145W while the 1070 has a TDP of 150W. Since they are pretty much identical in terms of TDP and two 6 pin cables are the same as one 8 pin cable why would Nvidia choose two 6 pin cables for the GTX 970 and one 8 pin cable for the 1070? To me it seems like the 970 should have been a single 8 pin cable card as well.

The two 6-pin layout comes from older designs. I think it is mostly there for old power supplies. In the past the 8-pin was not common; sometimes even a good PSU supplied two 6-pin cables but completely lacked an 8-pin one. As time goes on, more and more power supplies provide an 8-pin cable, so I think GPU makers realized it is probably better to just use one 8-pin for simplicity now.
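For anyone who wants to check the arithmetic behind the connector question, here is a rough sketch in Python. It assumes the usual per-connector limits (75 W from the slot, 75 W per 6-pin, 150 W per 8-pin) and uses the TDP figures quoted above; the function name `board_power_limit` is just made up for illustration.

```python
# Assumed in-spec limits per power source, in watts.
SLOT_W = 75        # PCIe x16 slot on the motherboard
SIX_PIN_W = 75     # one 6-pin PCIe cable
EIGHT_PIN_W = 150  # one 8-pin PCIe cable


def board_power_limit(six_pin=0, eight_pin=0):
    """Total in-spec power for a card: slot plus auxiliary cables."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


# (TDP quoted in the thread, in-spec limit for the connector layout)
cards = {
    "GTX 970 (2x 6-pin)": (145, board_power_limit(six_pin=2)),
    "GTX 1070 (1x 8-pin)": (150, board_power_limit(eight_pin=1)),
}

for name, (tdp, limit) in cards.items():
    print(f"{name}: limit {limit} W, TDP {tdp} W, headroom {limit - tdp} W")
```

Both layouts come out to the same 225 W ceiling, so on paper the choice between them is about cabling and PSU compatibility rather than available power.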

renz496

Champion

king3pj :

Math Geek :

Warmacblu :

I'm not sure if the Tomb Raider patch has been benchmarked yet.

here is one review i ran across http://www.overclock3d.net/reviews/gpu_displays/rise_of_the_tomb_raider_directx_12_performance_update/1

sorry forgot to link any yesterday

though for some reason they did not include a new 1070/80 card ???

Yeah, this doesn't do much good for Nvidia users because the patch notes say that Async Compute was only enabled for Pascal and GCN 1.1 cards. Showing 980 Ti results when nothing was changed for those cards is kind of pointless. Still it's nice to see the improvement in AMD cards.

What I would really like to see are comparisons with Async on and off for the 1060, 1070, and 1080. The 1060 compared to the RX 480 would be interesting too.

Even for AMD, async is not enabled for GCN 1.0, despite those cards also having ACEs. What reason do the devs have for not implementing it on those cards? This is one reason why I have my doubts when we ask developers to do more stuff. Actually I already saw a glimpse of this with Mantle. Take Sniper Elite 3 for example: the DX11 version of the game has CF support from AMD, but for the Mantle version Rebellion decided not to support multi-GPU at all. Usually we consider multi-GPU support that arrives two or three months later as late. Now look at the new Tomb Raider: multi-GPU support in DX12 only arrived after seven months, lol, once the responsibility was pushed onto the game developer.

renz496

Champion

U6b36ef :

17seconds :

The GTX 1080 is sitting right in the middle of the photo with that flat shiny plate covering the water cooling.

I thought it might be that. However I can't figure out why the GPU is not at 90° to the motherboard.

That is the Thermaltake P5. The case was built so you can show off the beauty of your hardware. They provide a PCIe riser so the GPU can be arranged like that.

detroitwillfall

Reputable

im stoked on this! http://www.tomshardware.com/news/asus-gtx-1060-models-nvidia,32281.html

also on asus site https://www.asus.com/Graphics-Cards/ROG-STRIX-GTX1060-O6G-GAMING/

Design1stcode2nd

Distinguished

I've seen a number of reviews showing 1080p, 1440p and 4K. Are there any for ultrawide, 3440 x 1440? I'm figuring I'll need a 1070 to be able to handle that res for a couple of years.

king3pj

Distinguished

Design1stcode2nd :

I've seen a number of reviews showing 1080p, 1440p and 4K. Are there any for ultrawide, 3440 x 1440? I'm figuring I'll need a 1070 to be able to handle that res for a couple of years.

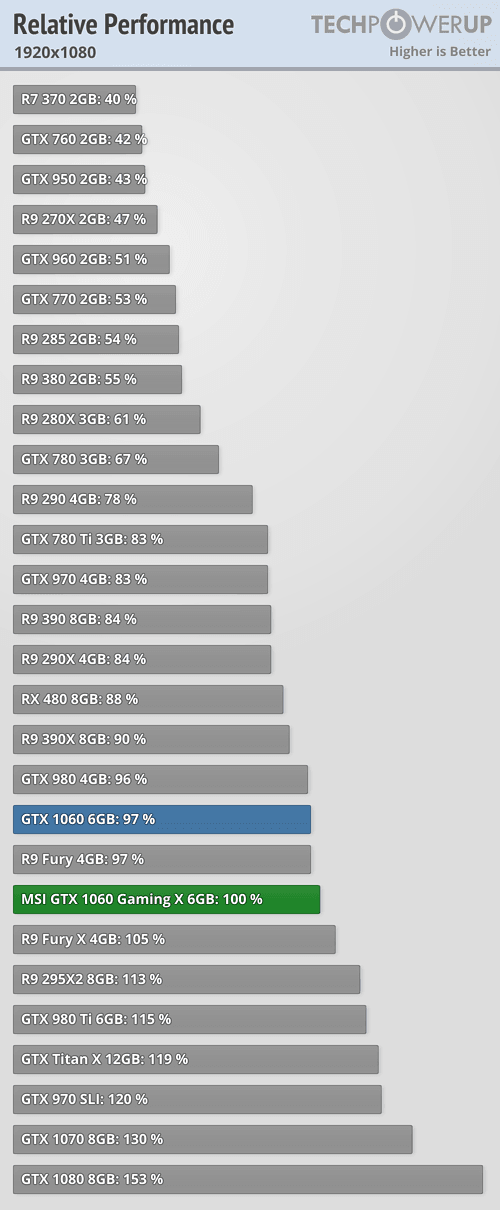

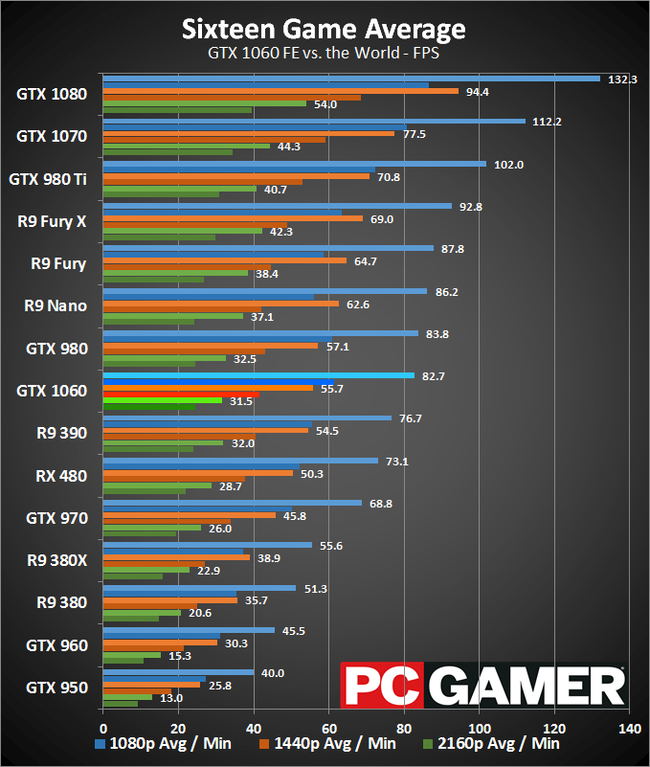

Since the 1070 is what I would recommend for standard 1440p (2560x1440) I would say it's the minimum I would want for ultrawide 1440p (3440x1440). A 1060 is an option but considering that it averages 55 FPS in PC Gamer's benchmarks for standard 1440p I think it would struggle with ultrawide 1440p unless you are willing to turn settings down in quite a few games or play at 30 FPS.

I'm very happy with my Gigabyte G1 1070 on my 1440p monitor and I think it would get the job done for you at ultrawide 1440p. Of course if you have piles of cash lying around a 1080's extra 20% of performance might be worth it for ultrawide. For me that extra 20% was not worth the extra $200.
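To put a rough number on the jump from standard to ultrawide 1440p, here is a quick pixel-count sketch in Python. The 55 FPS figure is the 1060 average mentioned above; the idea that FPS falls in proportion to pixel count is only a back-of-the-envelope assumption for GPU-bound games, not a benchmark result.

```python
def pixels(width, height):
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


standard_1440p = pixels(2560, 1440)
ultrawide_1440p = pixels(3440, 1440)

# How many times more pixels ultrawide pushes than standard 1440p.
ratio = ultrawide_1440p / standard_1440p

# Assumption: frame rate scales inversely with pixel count.
fps_1060_standard = 55
fps_1060_ultrawide_estimate = fps_1060_standard / ratio

print(f"Ultrawide renders {ratio:.2f}x the pixels of standard 1440p")
print(f"Estimated 1060 average at ultrawide: {fps_1060_ultrawide_estimate:.0f} FPS")
```

Ultrawide works out to about a third more pixels per frame, which is why a card that is borderline at 2560x1440 would likely need lowered settings at 3440x1440.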

Design1stcode2nd

Distinguished

Seeing as the monitor will be $700 and 1070s are about $450, the extra stretch to a 1080 isn't doable. I figured a 1070 would be the minimum and just wanted some confirmation, thanks.

-Fran-

Glorious

I have mixed feelings about the 1060... The 480 4GB is way better value at its price point than the 3GB model and of course the 6GB version plus thinking down the road, but I can't deny for pre-DX12 stuff it is great value.

Also, from what I've read so far, the 1060 drops the ball with Async enabled. I'm willing to think the 980 is a better buy if it can be found at that price point (~$300) taking all things into account. That is, if the power consumption is not an issue.

I think I need more benchies. I also missed DOOM from Tom's review. I think that game deserves some close look. I've been monitoring it while playing and I have to say, it's well coded.

Cheers!

Mousemonkey

Titan

-Fran- :

I have mixed feelings about the 1060... The 480 4GB is way better value at its price point than the 3GB model and of course the 6GB version plus thinking down the road, but I can't deny for pre-DX12 stuff it is great value.

Also, from what I've read so far, the 1060 drops the ball with Async enabled. I'm willing to think the 980 is a better buy if it can be found at that price point (~$300) taking all things into account. That is, if the power consumption is not an issue.

I think I need more benchies. I also missed DOOM from Tom's review. I think that game deserves some close look. I've been monitoring it while playing and I have to say, it's well coded.

Cheers!

http://www.guru3d.com/articles_pages/geforce_gtx_1060_review,1.html

It's the only one I've seen so far.

17seconds

Champion

We need to see some non-AMD DirectX 12 games before jumping to too many conclusions (although it seems like that ship has already left the dock). There is no doubt that AMD has better Async Compute hardware, but the advantage is hard to gauge in long-time AMD titles like AotS and Hitman. Are we seeing Async or vendor specific optimizations? These game engines massively favored AMD for years, long before anyone even heard of DirectX 12.

With DOOM and Vulkan, FormatC stated in the review comments that the game has only been optimized for AMD and that the Nvidia pathway was still pending. He felt that testing Vulkan at this stage was misleading and a waste of time.

Also, with Async Compute, I think a lot of people are lumping Pascal in with Maxwell and forgetting that Pascal has new preemption hardware. This was shown in some of the new Time Spy benchmarks where Pascal showed good gains in DirectX 12, while Maxwell had none.

detroitwillfall :

im stoked on this! http://www.tomshardware.com/news/asus-gtx-1060-models-nvidia,32281.html

also on asus site https://www.asus.com/Graphics-Cards/ROG-STRIX-GTX1060-O6G-GAMING/

how did you find that card on the asus site? i looked all over the place and don't see it anywhere. the link works but how did you get to it? there should be more 1060 cards but i don't see them on the site at all. even using the search feature for the site turns up nothing.

-Fran-

Glorious

Thanks MM!

Yeah, it is in line with what I was expecting using OGL. A ~10% difference favoring the 1060 sounds about right to me. I hope someone uses Vulkan.

@17seconds: Async was part of the DX12 API. AMD and nVidia had time to implement it according to spec. And since MANTLE was used as Vulkan's base, it is natural for AMD to have an advantage there, but you do see nVidia having gains as well. It is a weird discussion to delve into, since nVidia has had a lot of time to create their own "ASync" engine that shows perfectly fine in Pascal.

Plus, "Are we seeing Async or vendor specific optimizations?". That question is kind of weird. Like I said, ASync is part of the DX12 API, not something AMD developed in secret and just showed to the world with DOOM or Ashes.

And testing DOOM is not a waste of time. I disagree with that statement. There are no OGL games in the mix, so adding DOOM would be welcome. Even if you're not using Vulkan, you're leaving OGL out of the picture completely. And I just noticed that! I'm baffled I didn't notice it before and said something to be honest. They used to have RAGE in the benchies.

Cheers!

EDIT: Added comment.

-Fran-

Glorious

For those in the UK: http://www.ebuyer.com/nvidia/geforce-1060

Some are listed as pre-order, but if you're fast enough, you might be able to buy one. They are really expensive though...

Cheers!

gaming x in stock for $429 CAD http://www.ncix.com/detail/msi-geforce-gtx-1060-twin-89-133514.htm

https://www.overclockers.co.uk/pc-components/graphics-cards/nvidia/geforce-gtx-1060 overclockers has a bunch of different models in stock right now as well.

newegg showing a few in stock as well http://www.newegg.com/videocards/PromotionStore/ID-1170?Tpk=GTX%201060

this is probably why no sli for the 1060 http://www.gamersnexus.net/guides/2519-gtx-1060-sli-benchmark-in-ashes-multi-gpu
