News Nvidia GeForce RTX 3080 Founders Edition Hands On and Unboxing


Names of dinosaurs would be better this time around.

Just change the names:

Aidivn HForce TheBigOne

Aidivn HForce NumberTwo

Aidivn HForce CheaperthanNumberTwo

Aidivn HForce AffordableOne

HyperMatrix

Distinguished

That depends on what fps you're talking about.

150 to 155 fps? Meh. 30 to 35 fps? That would be significant.
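To put rough numbers on that, frame time is just 1000 ms divided by fps. A quick sketch (the helper name `frame_time_ms` is hypothetical, used only for illustration):

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Compare the same +5 fps gain at a high and a low starting frame rate.
for low, high in [(150, 155), (30, 35)]:
    saved_ms = frame_time_ms(low) - frame_time_ms(high)
    gain_pct = (high - low) / low * 100
    print(f"{low} -> {high} fps: +{gain_pct:.1f}%, {saved_ms:.2f} ms less per frame")
```

The same 5 fps gain works out to about 3% and roughly 0.2 ms per frame at 150 fps, but about 17% and nearly 5 ms per frame at 30 fps.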

As InvalidError points out, it's mostly the 4GB 5500 XT cards that saw large improvements in performance from PCIe Gen4, because the lack of VRAM leads to situations where more data has to go over the PCIe bus ... plus it's limited to an x8 link width. I don't think anyone has shown games where Gen3 vs. Gen4 x16 slots result in more than a 1-2% improvement, and even then that's only if you're not fully GPU bottlenecked.

I think it'll start to become significantly more important once Direct Storage API (or RTX IO, based on Nvidia branding) is put into the mix. Because all of a sudden you have games loading/dumping a ton more data on the fly. Won't be much of an issue for this generation since Direct Storage will be out as a preview build next year, and will take time before it's integrated into game design.

> I think it'll start to become significantly more important once Direct Storage API (or RTX IO, based on Nvidia branding) is put into the mix.

Shouldn't be much of an issue for GPUs, since NVMe at 4.0 x4 is 8GB/s, only half of 3.0 x16's 16GB/s.

The only thing that will truly stress 4.0x16 is the GPU needing to use system RAM for supplemental storage. The rest of the time, gains will be the usual 0-4% from reduced PCIe round-trip latency.
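For reference, the link figures quoted above can be reproduced from the per-lane transfer rates. This is only a rough sketch of theoretical one-direction peaks: the 8 and 16 GT/s rates and the 128b/130b encoding are the published PCIe Gen3 and Gen4 values, the function name `pcie_gb_per_s` is made up for illustration, and real-world throughput is lower once protocol overhead is counted.

```python
# Per-lane signalling rate in gigatransfers per second (published PCIe values).
GT_PER_S = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding: 128 payload bits per 130 sent.
ENCODING = 128 / 130


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction peak in GB/s, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


for gen, lanes in [(4, 4), (3, 8), (3, 16), (4, 16)]:
    print(f"PCIe {gen}.0 x{lanes}: {pcie_gb_per_s(gen, lanes):.2f} GB/s")
```

The results land just under the rounded 8GB/s and 16GB/s figures (about 7.88 and 15.75 GB/s), and Gen3 x8 comes out the same as Gen4 x4.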

darknate

Distinguished

> I am! But obviously anyone buying this card hopefully isn't focused on 1080p gaming. Even the 2080 Ti was basically overkill at 1080p (unless you enable ray tracing). As for PCIe Gen3 vs. Gen4, I'm planning on testing with Ryzen 9 3900X and Gen4, but that's a different platform and CPU than i9-9900K and Gen3. It might make a difference at 4K, though. Maybe? Like, when you're GPU limited at 4K the added PCIe bandwidth might be useful. Next week ... start the 7 day countdown to launch day!

Wouldn't being able to limit the PCIe slot in the BIOS balance the tests on a Ryzen platform?

hawkwindeb

Distinguished

When you do the benchmarks with an RTX 3080 (and later when you get the 3070 and 3060), please consider using 1440p 144Hz with G-SYNC (such as on the ViewSonic XG2700QG), at both reasonable-looking, typical mid-high settings (read: balancing performance vs. eye candy) and ultra settings, with the i5-10600K and 16GB of memory (XMP-3600) on a mid-range to high-end Z490 board at stock (with a great-performing air or AIO cooler). Then OC it.

The i5-10600K has been touted as a great gaming CPU, but I haven't seen it in your latest benchmarking (besides when you tested this CPU against other CPUs).

Please also run the above with the i9-10900K, 9900K (Z390), etc., so folks with current mid-range to high-end processors can see performance, and not just high-end. Please add other popular games to the above, such as ARMA 3, Insurgency Sandstorm, and DCS A-10C, for folks who have been playing these games that have been around for a while. Some of these games typically have lower-than-desirable FPS. Some people have been waiting for the next-gen GPUs to upgrade or replace their gaming rigs with faster, newer CPUs, memory, etc., but want some justification with the games they play most of the time. I'm one of them.

We want to see just how CPU and/or GPU bottlenecked these games are with different combinations, to help justify upgrading or replacing current gaming rigs. Also, add the new Microsoft Flight Simulator to your testing for the same reason: on an i5-10600K, will it be CPU and/or GPU bottlenecked with an RTX 3080 using the settings listed above?

Thanks


> When you do the benchmarks with an RTX 3080 (and later when you get the 3070 and 3060), please consider using 1440p 144Hz with G-SYNC

Why? Using whatever-sync introduces a handful of extra variables to test repeatability that you don't have to worry about with vsync off.

nofanneeded

Respectable

> Forget Crysis Remastered. Everyone just wants to see an MSFS2020 benchmark.

Why? M$ F$ 2020 is not CPU or GPU bound, it is DirectX 11 bound. Until M$ and the company they worked with get their act together, no need to worry about it. Now, seeing if one of the new CPUs with built-in graphics can handle Crysis Remastered would be nice, and comparing it to a system with the new GPUs - sweet.

Yes, I am one of those people who would like to see GTX 10#0 vs RTX 20#0 vs RTX 30#0, and how the bus slots (PCIe Gen3 vs. Gen4) affect things, with a good mix of CPUs of course.

> Now seeing if one of the new CPUs with graphics built in can handle Crysis Remastered will be nice, and compare it to a system with the new GPUs - sweet.

Will it run Crysis Remastered on "Will it run Crysis?" settings?

alan.campbell99

Honorable

"I'm still not at all convinced this was necessary or even a good idea, but it's here now and we get to live with it."

Even after moving from PC Gamer, Jarred Walton remains The Man.


spongiemaster

Admirable

> No USB-C on this one?

Virtual Link has been discontinued.

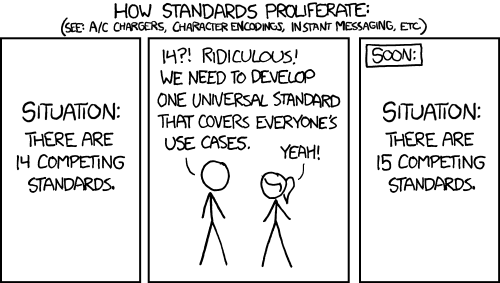

> Virtual Link has been discontinued.

Alt-mode sickness. Pushing 50 different standards down a single plug is madness and asking for trouble. Now we have a "universal" standard where some ports and cables are more universal than others, so you have to keep tabs on which non-universal 'universal' port goes with which non-universal 'universal' cable to get things to work right.

GenericUser

Distinguished

> Alt-mode sickness. Pushing 50 different standards down a single plug is madness and asking for trouble. Now we have a "universal" standard where some ports and cables are more universal than others so you have to keep tabs on which non-universal 'universal' port goes with which non-universal 'universal' cable to get things to work right.

hawkwindeb

Distinguished

> Why? Using whatever-sync introduces a handful of extra variables to test repeatability that you don't have to worry about with vsync off.

Agreed - leave out the sync part, but I would still love to see the rest tested. (and maybe also leave out the MS FS2020 portion of my request until it's using DX12, if ever)

Thanks
