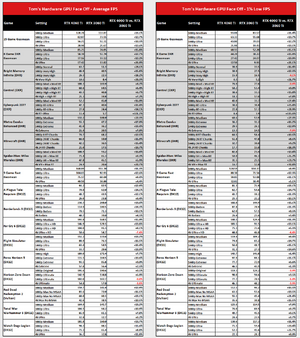

Or potentially the people saying, unequivocally, that "it's slower than the RTX 3060 Ti at 1440p and 4K" are the biased ones. This isn't all directed at you, but at the whole zeitgeist that's trying to make things out to be worse than they perhaps really are. Anyway... here's the data comparing the RTX 4060 Ti and RTX 3060 Ti, again:

View attachment 255

Based on those results, there is almost no case to be made for saying the RTX 3060 Ti is universally faster at 1440p. I'm not saying there are NO games where it would be faster, but in general, the 4060 Ti is clearly going to win out at 1440p in any test suite that's reasonably comprehensive. Also note that anything less than about 3% in the above table can't really be considered "faster" for either GPU. It's a tie.

What about at 4K? There are more games where the additional memory bandwidth of the RTX 3060 Ti helps it come out ahead at 4K. And we can probably toss the Cyberpunk 2077 4K results for the 3060 Ti as being old/wrong. It happens. (A game patch, drivers, or some other factor may have improved performance on the 4060 Ti; I need to retest the 3060 Ti.) But using the sample data of 15 games? Yes, the RTX 4060 Ti will still generally beat the RTX 3060 Ti. Anyone pushing a stronger narrative about it being slower might not be doing it for the right reasons, IMO.

Furthermore, the cases where the 4060 Ti loses don't matter. Why? Because 4K generally doesn't matter on either of these cards. Even if we discount the DXR results, because they're not even remotely viable on either GPU, we get rasterization performance of 40.5 fps on the 4060 Ti versus 38.8 on the 3060 Ti. That's pretty close to a tie, yes, but the 4060 Ti has four clear "wins" (10% or more faster) while the 3060 Ti has only two "barely" wins.
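To put a number on that "pretty close to a tie," here is a minimal Python sketch of the arithmetic. The 3% tie margin and the 10% "clear win" threshold come from this post; the function names and the "A"/"B" labels are just made up for illustration.

```python
def lead_percent(fast_fps: float, slow_fps: float) -> float:
    """How much faster the first value is than the second, in percent."""
    return (fast_fps / slow_fps - 1.0) * 100.0


def verdict(fps_a: float, fps_b: float,
            tie_margin: float = 3.0, clear_win: float = 10.0) -> str:
    """Label a head-to-head result using the thresholds from the post."""
    if fps_a >= fps_b:
        winner, lead = "A", lead_percent(fps_a, fps_b)
    else:
        winner, lead = "B", lead_percent(fps_b, fps_a)
    if lead < tie_margin:
        return "tie"
    size = "clear win" if lead >= clear_win else "narrow win"
    return f"{size} for {winner}"


# 4K rasterization averages quoted above: 4060 Ti (A) vs 3060 Ti (B).
raster_4k = lead_percent(40.5, 38.8)
print(f"4060 Ti lead at 4K rasterization: {raster_4k:.1f}%")
print(verdict(40.5, 38.8))
```

So the average lead sits above the 3% noise margin but well short of the 10% mark, which is why the per-game win counts matter more than the average here.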

Based on this, I still stand by the review and score and feel that I would rather have an RTX 4060 Ti with typically better performance, AI improvements, and the potential to use DLSS 3, than to have an RTX 3060 Ti with potentially slightly better performance at 4K in scenarios where it often doesn't matter. (RTX 3060 Ti doing 15 fps versus RTX 4060 Ti doing 10 fps as an example would have the older card "winning" by 50%, but it's a meaningless win because both are far too slow.)
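The 15 fps versus 10 fps example can be sketched the same way. Note that the 30 fps floor below is an assumed cutoff for "playable," not a number from the review; swap in whatever floor you prefer.

```python
PLAYABLE_FPS = 30.0  # assumption: a hypothetical "playable" floor


def meaningful_lead(fps_a: float, fps_b: float,
                    floor: float = PLAYABLE_FPS) -> tuple[float, bool]:
    """Return (lead of the faster card in percent, whether the win matters).

    A win only "matters" here if the faster card actually reaches the floor.
    """
    fast, slow = max(fps_a, fps_b), min(fps_a, fps_b)
    lead = (fast / slow - 1.0) * 100.0
    return lead, fast >= floor


lead, matters = meaningful_lead(15.0, 10.0)
print(f"{lead:.0f}% lead, meaningful: {matters}")
```

A big percentage with both cards under the floor is still a loss for anyone trying to actually play the game.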

Of course there's also "bias" built into my selection of games. Brand new games may be more demanding — and they may also be horrendously buggy. (See: Star Wars Jedi: Survivor, Last of Us Part 1, Resident Evil 4 Remake, and Lord of the Rings: Gollum for examples of "buggy" / "badly coded.") However, I have a long-established test suite and a reasonably wide selection of games. I didn't just add some random new game that might have horrible performance/bugs for the sake of this review.

(Yes, some places basically did that. For the record, I disagree with the use of Hogwarts Legacy, Last of Us Part 1, Star Wars Jedi: Survivor, and Resident Evil 4 Remake in a current GPU test suite. All of them were and are questionable due to bugs and other concerns. Go ahead and test those games, like I tested Gollum, but don't forget to point out that they're outliers and feel broken on a technical level.)

Finally, as I've pointed out elsewhere, the best fix for the RTX 4060 Ti and RTX 3060 Ti and other 8GB GPUs running out of memory is... well, there are a few options. First, don't play new/demanding games at 4K on 8GB cards, because 4K can use significantly more VRAM.

Second, turn down the texture settings one notch. Maxing out at 1K textures instead of 2K textures will prevent a GPU with 8GB from running out of VRAM on nearly any reasonably designed game. And by that, I mean a game made for current hardware, which includes consoles! Also, show me a game where the difference between 1K and 2K textures, at 1440p, is even remotely significant. It basically doesn't happen, outside of bad coding, because most objects use less than 1K resolution textures, and even if you're at 1440p and do get a 2K texture, it won't really look much better. A 2X upscaling of a texture is not a problem. 4X or more? Yes, that's a problem, but not 2X or less.
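The resolution and texture-size arithmetic behind those two suggestions is easy to check. This sketch assumes "1K" and "2K" mean square 1024 and 2048 textures, and that a block-compressed format at 1 byte per texel (BC7, for example) is in use. Actual games mix formats and sizes, so treat the per-texture figures as illustrative only.

```python
def pixels(width: int, height: int) -> int:
    """Pixel count of a render resolution."""
    return width * height


def texture_bytes(size: int, bytes_per_texel: float = 1.0,
                  mipmaps: bool = True) -> float:
    """Approximate memory for one square texture, optionally with a full mip chain."""
    total_texels = 0
    level = size
    while level >= 1:
        total_texels += level * level
        if not mipmaps:
            break
        level //= 2  # each mip level halves width and height
    return total_texels * bytes_per_texel


MIB = 1024 * 1024
print("4K vs 1440p pixels:", pixels(3840, 2160) / pixels(2560, 1440))
print("4K vs 1080p pixels:", pixels(3840, 2160) / pixels(1920, 1080))
print(f"2K texture: {texture_bytes(2048) / MIB:.2f} MiB")
print(f"1K texture: {texture_bytes(1024) / MIB:.2f} MiB")
```

Each 2K texture costs roughly four times what its 1K version does, which is why a single notch on the texture slider frees up so much memory.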

Back to the console comment I just made: until we have consoles with more memory devoted to textures and GPU stuff, we're not likely to see games truly need more than 10GB of VRAM, and 8GB is close enough that it would rarely matter (i.e. if you turn down the texture quality one notch). This is especially true for any cross-platform games. Xbox Series X has 10GB dedicated to the GPU and 6GB for the CPU, with the CPU side handling OS, game logic, etc. PlayStation 5 isn't as clear on how much is really for the GPU versus the whole system, but it doesn't really matter because the Xbox Series X already drew a line in the sand: Don't use more than 10GB of data for textures and GPU related stuff. Now, PS5-exclusive ports might conceivably use 12-13GB of VRAM, but that's a worst-case scenario. Dropping texture quality one step meanwhile normally cuts VRAM requirements by at least a third (i.e. back to <8GB).
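As a rough sanity check on that "at least a third" figure, assume one texture notch halves each texture's width and height (so texture memory drops to a quarter) and that everything else in VRAM stays the same. Both are simplifying assumptions, and the 9GB total and 50% texture share in the example are hypothetical numbers, not measurements.

```python
def vram_after_texture_drop(total_gb: float, texture_share: float,
                            texture_scale: float = 0.25) -> float:
    """VRAM use after one texture notch, if only texture memory shrinks."""
    textures = total_gb * texture_share
    other = total_gb - textures
    return other + textures * texture_scale


def share_needed_for_saving(saving: float, texture_scale: float = 0.25) -> float:
    """Texture share of VRAM required for the drop to save `saving` of the total."""
    return saving / (1.0 - texture_scale)


after = vram_after_texture_drop(9.0, 0.5)  # hypothetical 9GB game, half of it textures
print(f"9.0 GB -> {after:.3f} GB")
print(f"Texture share needed to save a third: {share_needed_for_saving(1 / 3):.0%}")
```

Under those assumptions, textures would need to make up a bit under half of total VRAM use for one notch to save a third.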

When things do go wrong with the above, however, the answer is almost universally that the game in question needs better coding, particularly for the PC side of things. Someone (bit_user) earlier mentioned the potential to use tiled resources. Low-level APIs do support this, but it's almost never used, because it adds complexity and can cause stutters. That's basically what I heard from Nvidia technical people when I was asking questions for the "Why does 4K use so much VRAM" article. I don't have any reason to doubt them.

Tiled textures (or only loading the part of a texture that's needed "on demand") might sound interesting, but often in practice you end up with something like a game loading part of a texture for one frame, but then the very next frame (or at most a few frames later), the viewport has changed enough that other parts of the texture are now in view. So if a game engine tries to optimize VRAM use by only loading in part of a texture, it can end up having to do that for every part of every texture over a relatively short time. And again, determining what's in VRAM and what's paged out is more complex, so there are performance implications that can make things messy.
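A toy simulation shows how quickly that churn adds up. Everything here is hypothetical: an 8x8 grid of tiles for one texture, a 4x4-tile visible window that pans diagonally and then back, and a strict budget where only currently visible tiles stay resident. Real engines keep caches and prefetch, so this is a worst-case sketch, not how any particular engine or API behaves.

```python
def visible_tiles(x: int, y: int, window: int) -> set[tuple[int, int]]:
    """Tiles covered by a window x window view whose top-left tile is (x, y)."""
    return {(col, row)
            for col in range(x, x + window)
            for row in range(y, y + window)}


def tile_uploads(path: list[tuple[int, int]], window: int) -> list[int]:
    """Count tiles that must be uploaded each frame along a camera path."""
    resident: set[tuple[int, int]] = set()
    uploads = []
    for x, y in path:
        needed = visible_tiles(x, y, window)
        uploads.append(len(needed - resident))
        resident = needed  # strict budget: evict everything that left the view
    return uploads


GRID, WINDOW = 8, 4
offsets = list(range(GRID - WINDOW + 1))                    # 0..4
path = [(i, i) for i in offsets + offsets[-2::-1]]          # pan out, then back
uploads = tile_uploads(path, WINDOW)
print("Uploads per frame:", uploads)
print(f"Total uploads: {sum(uploads)} tiles vs {GRID * GRID} in the whole texture")
```

In nine frames the toy engine uploads more tiles than the texture contains, and it has to track residency every frame to do it.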

id Software's Rage (idTech 5 engine) actually did do something like this ("megatextures") and was quite good at it. But when you look at how many games licensed idTech 5 (or idTech 6 / 7), it's a very small number. I don't know if this ever changed, but back in the day when Unreal Engine was the upstart, the word on the street among game developers was that id's engines ran faster but were far more difficult to understand / modify, while Unreal Engine was slower but easier to understand. You only need to look at how many games now use Unreal Engine to see how that played out. (Epic also radically changed its licensing costs/royalties, which in turn boosted use of UE4 and later.)

We'll see more games push VRAM use beyond 8GB in the coming years. Will those games truly need 16GB, though, or are they just pushing the limits because "bigger is better"? I'd venture it will be 99% the latter. Even now, there are relatively few games that won't run reasonably well on a GTX 970 at 1080p medium. That's a nine-year-old GPU. We're a way off from 8GB becoming the new 4GB in my book, because there are diminishing returns. For example:

View attachment 256

View attachment 257

View attachment 258

Which of those is running maxed out "epic" settings, which dropped shadows and textures to "high," and which has shadows and textures at "medium"? Even flipping through the uncompressed PNG files, I wouldn't be able to tell you for certain if I hadn't taken those screenshots.

Put another way, a "high-end" GPU from late 2014 that cost $330 at the time of launch still performs roughly on par with a budget $170 GPU from 2019 (GTX 1650). 2GB of VRAM is now basically dead (certain games won't really even try to run, at all, without seriously degraded settings). It's been dead for maybe three years. 4GB is now nearing the end of usability; it's not dead yet, but it's not getting better and it's not going for a walk anytime soon. 6GB is "fine" for budget GPUs but that's about as far as I'd take it. 8GB is fine for mainstream GPUs and will likely handle 1080p and medium (or higher, depending on the game) settings for at least another five years.

Anyway, back to the heart of the matter. My testing and experience with games indicate that:

- RTX 4060 Ti in general beats the RTX 3060 Ti. Period. If you only play a few games and one of those happens to be in the category of favoring the RTX 3060 Ti at 1440p, fine, but those games are the exception rather than the rule. And that's without DLSS3, SER, OMM, or DMM.

- Playing games at settings and resolutions that work poorly on an 8GB GPU, like the 4060 Ti, is the wrong approach. Most reasonably optimized games look almost as good running ~high settings rather than ~ultra and won't exceed 8GB of VRAM use, even at 1440p. (And this really isn't a 4K card.)

- Caveat to the previous point: There are definitely games that have been poorly coded that will exceed 8GB VRAM use even at medium to high settings. (See: Gollum.) Any current test suite that has a large number of games where 8GB at 1080p is truly a problem has probably intentionally selected games to try to prove the point that "8GB VRAM GPUs are dead!"

- 8GB will eventually be a problem with more games, even at medium/high settings. When will that happen, on popular games that aren't ridiculed as being poorly coded? Probably not in significant numbers until the 4060 Ti has been superseded by the 5060 Ti, 6060 Ti, and maybe even 7060 Ti.

- Is the RTX 4060 Ti a 3-star or a 3.5-star GPU? If it's a 3-star, then the RX 7600 also becomes a 3-star. I definitely feel, in retrospect, that I overscored the RTX 4070 at 4 stars. That's largely thanks to being under a time crunch to get things done, and ultimately one "point" (half-star) higher or lower isn't a huge deal to me. There are many days where I'd be happier not having to assign a score at all. The text explains things; you can read it and decide, by your own internal ranking, what a GPU deserves. Because that's what happens anyway.