Somewhat tempting (in the context of upgrading to 4K gaming). But then again, rumours on the street have it that RDNA 4 will arrive later this year with a sub-$600 GPU whose (rasterization) performance will come close to that of the RTX 4080 Super.

Such a GPU may well make use of PCIe 5.0 lanes, which would not be an issue for my MB. It cost me a bit more, as Tom's Hardware keeps reminding us when talking about AM5, but it already supports enough PCIe 5.0 lanes for an NVMe SSD as well, while Intel's latest still offers only 16 PCIe 5.0 lanes. So basically, money saved, as I won't need a second MB upgrade.

Not counting on the rumours. But I suppose by now I may as well hold out, and also check the Ryzen 9xxx offerings, apparently starting in April.

At $330, the ASRock Challenger RX 6700 XT (12GB VRAM) is a great value. Its performance is about equal to the 4060 Ti 16GB, which starts at $100 more. I got one last year and get 85 FPS in Red Dead Redemption 2 (no FSR 2) at 3440x1440 on the highest settings. In Borderlands 3 I get 82 FPS at the same settings and resolution, also without FSR. Note that the 7600 XT costs the same as the 6700 XT. The only advantage the 7600 XT has is 4GB more VRAM, yet it is still about 10% slower on average than the 6700 XT.

Yeah, I can't complain either. The 6700 XT is plenty good for 1440p gaming. And there is a bit more choice among 1440p monitors if you want an inexpensive one with 120+ Hz.

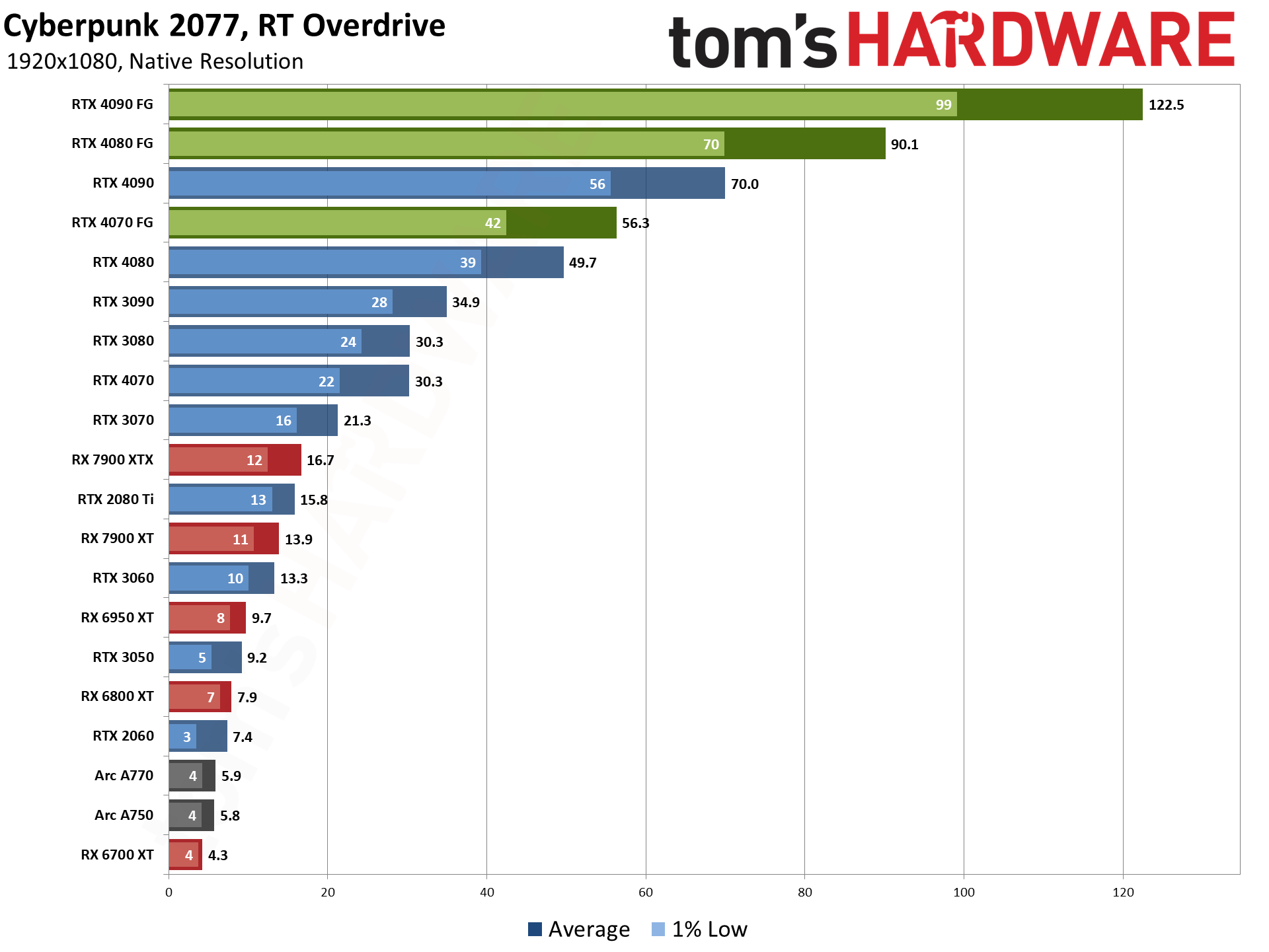

The ray-tracing performance as such isn't great. But in games such as Metro Exodus, there are other things to focus on than sightseeing all the time.

In any case, if one isn't determined to go "4K gaming, and nothing else", there are plenty of options for a rig (even if the top GPUs come at a high price point, they are way over the top for, e.g., 1080p gaming these days).