To be fair, that video unpacks what Nvidia has done, which certainly seems shady. This is not to say FrameView is wrong, bad, or providing false information, though. For MFG, Nvidia claims to have made some optimizations to/for MsBetweenDisplayChange; however, they've not contributed this to PresentMon. That would mean there's no way to independently verify results from FrameView.

PresentMon had already moved past using MsBetweenPresents and MsBetweenDisplayChange, which is what allowed GN to figure out roughly what iteration FrameView was likely based on. According to Nvidia, this supposedly isn't sufficient for MFG results. Meanwhile, PresentMon appears to properly support AMD/Intel FG and has added new features for identifying generated frames.
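For anyone who wants to see how the two columns can tell different stories, here's a rough sketch in Python. It assumes a PresentMon-style CSV with MsBetweenPresents and MsBetweenDisplayChange columns. The sample numbers are invented for illustration (presents arriving in bursts while the display updates evenly), not real capture data, and `summarize` is just a hypothetical helper name. Skipping rows where the value is 0 is my own assumption for frames that never reached the display.

```python
import csv
import io

# Invented sample: presents arrive in bursts, display changes are evenly paced.
SAMPLE = """MsBetweenPresents,MsBetweenDisplayChange
0.5,10.0
19.5,10.0
0.5,10.0
19.5,10.0
"""

def summarize(csv_text, column):
    """Return (average FPS, worst frame time in ms) for one frame-time column."""
    times = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        value = float(row[column])
        if value > 0:  # assumption: 0 marks a frame that was never displayed
            times.append(value)
    avg_fps = 1000.0 * len(times) / sum(times)
    return avg_fps, max(times)

print(summarize(SAMPLE, "MsBetweenPresents"))       # (100.0, 19.5)
print(summarize(SAMPLE, "MsBetweenDisplayChange"))  # (100.0, 10.0)
```

Both columns give the same average FPS here, but the worst-case frame time is very different, which is why the choice of metric matters once generated frames are involved.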

Again, I'm not saying there's something wrong with FrameView, but this is bad behavior on the part of Nvidia. Of course, this also seems to be par for the course when it comes to Nvidia and the open source community.

I do wonder about PresentMon vs OCAT vs FrameView. Intel created (and open sourced) PresentMon back in the day. It was usable but also severely lacking in features for a long time. When OCAT came out, I switched to that as it was more easily usable. Then Nvidia did FrameView and integrated support for its PCAT and I was sold — real in-line power data from every gaming benchmark I run! Intel has talked about doing a similar power capture device with a branch of PresentMon, and that would be fine by me as well. Really, I just want something that works.

Anyway, back to my thought: Intel "owns" the original PresentMon. If Nvidia were to come along and submit a bunch of changes to support some feature it wants, would people be happy or would they just reject the changes? Probably a bit of both, but the main owner of the GitHub repository has final say, right? So it's probably a lot easier and less politically charged for Nvidia to fork off PresentMon and then do everything it wants and not worry about it.

AMD did that with OCAT. CapFrameX did it as well. So did Nvidia with FrameView. There must be a good reason these alternatives aren't just submitting direct code changes to PresentMon itself, right?

Now, there's also potentially stuff going on in Nvidia's code that it wants to keep secret. I have no idea. But the company does have a penchant for not open sourcing a lot of stuff. And I really wish it would, because FrameView isn't a shining pillar of UI either. It works, but you can't change the font size, you can't change the text color, you can't put a transparent but dark background on it to make the green text readable, you can only choose between two shortcut keys, etc. There are a lot of things missing from FrameView. Not that I'd have the time to try and fix them, but it would be nice to have someone that would fix these things.

For years, basically since FrameView first came out, there have been requests to Nvidia to allow a different font color and a different font size. I have no idea why it doesn't just get fixed. Possibly it's just something that only gets maintained by one person in their spare time. I just wish something else supported the PCAT. (I'm not aware of any support in the base PresentMon, but maybe I'm wrong on that?)

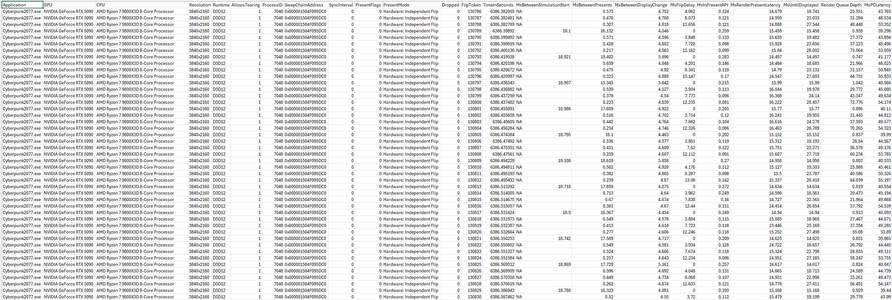

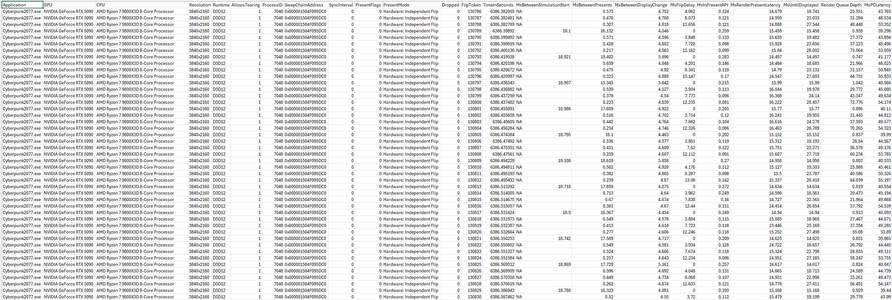

And now of course we need support for MsBetweenDisplayChange or a similar metric, because with the new MFG stuff you get garbage data in MsBetweenPresents. Like this: