nofanneeded


Both the 3090 and this picture have 2 fans.

I thought the leaked design had one large fan only?


I have an EVGA SuperNOVA 750 G3 80+ Gold, so do you think I'll be okay? No risk of burning it out or significantly shortening its life?

It will work, don't worry. It will just be a little noisy, because the PSU fan will run at max speed; that's the only downside. Also, you should check the wattage on the 12V rail, not the total wattage.
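The 12V-rail advice comes down to P = V × I: a rail's amp rating times 12 V gives the watts it can supply, and the GPU and CPU draw nearly all of their power from 12V. Here is a minimal sketch under those assumptions. The helper names and component loads are hypothetical; only the 748 W figure comes from this thread.

```python
# Minimal sketch: compare the expected 12 V load against the PSU's 12 V rail rating.
# Helper names and component loads are hypothetical placeholders, not measurements.

RAIL_VOLTAGE_V = 12.0


def rail_watts(amps: float, volts: float = RAIL_VOLTAGE_V) -> float:
    """Convert a rail's current rating (amps) into watts using P = V * I."""
    return volts * amps


def fits_on_12v(loads_12v_w: dict[str, float], rail_capacity_w: float) -> bool:
    """True if the summed 12 V loads stay within the rail's capacity."""
    return sum(loads_12v_w.values()) <= rail_capacity_w


# 748 W is the 12 V figure quoted in this thread for the EVGA SuperNOVA 750 G3.
capacity_w = 748.0
loads_w = {"GPU": 350.0, "CPU": 125.0, "fans/drives": 30.0}  # hypothetical loads

print(rail_watts(50))                    # 600.0
print(fits_on_12v(loads_w, capacity_w))  # True
```

Checking the 12V figure rather than the label wattage matters because the label total also counts the 3.3V and 5V rails, which modern GPUs and CPUs barely use.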


One of the best on the market.

The GOOD:

- amazing voltage regulation
- better-than-excellent ripple control
- smooth power-on transient behavior
- fully modular
- lots of connectors
- semi-fanless mode can be disabled

The BAD:

- HAHAHAHA!

Score: 9.8/10

There are two fans, one exposed on the top of the card and the other on the bottom, at opposite ends of the card.


https://www.tomshardware.com/news/nvidia-geforce-rtx-3090-caught-on-camera

When I saw the power connector my immediate thought was, "Well, that's clearly going to break." And I'm not sure why you "couldn't do that with 6- or 8-pin connectors," aside from it probably being a bad idea.

That's definitely the 3090 cooler. The short PCB that allows air to be pulled from below the card and exhaust it above is about as innovative as we have seen in quite a while.

This is clearly not the 3090; it has two fans, not one.

What if he/someone else has an overclocked 10900K?

400W GPU, 200+W CPU, 60W for MB/USB/fans, etc. That's very close to the upper limit of the PSU. That's probably why 850W might become the norm with that card.
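The arithmetic in that reply can be sketched as a simple power budget. The figures are the poster's estimates, not measurements, and since the CPU is "200+W" the total is a floor. The `load_percent` helper is hypothetical, added only for illustration.

```python
# Rough power budget using the estimates from the reply above (not measured values).
budget_w = {
    "GPU": 400,
    "CPU (overclocked 10900K)": 200,  # "200+W", so this is a lower bound
    "MB/USB/fans etc.": 60,
}
total_w = sum(budget_w.values())


def load_percent(draw_w: float, rating_w: float) -> float:
    """Share of the PSU's rated output that the estimated draw would use."""
    return 100 * draw_w / rating_w


print(f"Estimated draw: {total_w} W")  # Estimated draw: 660 W
for rating_w in (750, 850):
    print(f"{rating_w} W PSU: {load_percent(total_w, rating_w):.1f}% load, "
          f"{rating_w - total_w} W headroom")
# 750 W PSU: 88.0% load, 90 W headroom
# 850 W PSU: 77.6% load, 190 W headroom
```

This is why an 850W unit looks attractive here: the same build drops from about 88% to under 78% of the rated output.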


That's a very good power supply, and it can deliver 748 watts on the 12V rail. You will be not only okay, you will be super fine, and don't worry about burnouts. Even if you overclock, it will run STRONG!

Plus, it comes with a 10-year warranty, which means it is guaranteed to work for 10 years delivering 750 watts 24/7.

Here, read this about your power supply:

https://www.jonnyguru.com/blog/2016/12/26/evga-supernova-750-g3-power-supply/6/

I do have a 10700K, but I don't intend to OC. Think I'll be safe if I went with the 3090?

100% fine! Even if you slightly OC both of them. Efficiency would drop slightly, which is alright, but it's not a good idea to run very close to the max limits, even though PSUs are rated for continuous operation. Personally, I wouldn't run a PSU above 75% load for long durations. I remember reading a post where a person got a 1200-watt CM Platinum PSU, mined at 1150 watts, and the PSU failed within a year. But that might be a one-off, extreme case.
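The 75% figure is that poster's personal rule of thumb, not a manufacturer specification. Taking it as an assumption, here is a minimal sketch that checks a sustained draw against it and works out the smallest PSU rating that keeps you under it. All function names are hypothetical.

```python
# Minimal sketch of the "don't run above ~75% for long periods" rule of thumb.
# The 0.75 limit is the poster's personal guideline, not a PSU spec.
MAX_SUSTAINED_LOAD = 0.75


def load_fraction(draw_w: float, rated_w: float) -> float:
    """Fraction of the PSU's rated output being used."""
    return draw_w / rated_w


def within_guideline(draw_w: float, rated_w: float,
                     limit: float = MAX_SUSTAINED_LOAD) -> bool:
    """True if the sustained draw stays at or below the chosen limit."""
    return load_fraction(draw_w, rated_w) <= limit


def min_psu_rating(draw_w: float, limit: float = MAX_SUSTAINED_LOAD) -> float:
    """Smallest rated wattage that keeps draw_w at or below the limit."""
    return draw_w / limit


# Mining example from the post: 1150 W sustained on a 1200 W unit.
print(f"{load_fraction(1150, 1200):.1%}")  # 95.8%
print(within_guideline(1150, 1200))        # False
print(min_psu_rating(600))                 # 800.0
```

By this yardstick the mining rig in that anecdote ran at roughly 96% of its rating around the clock, far past the comfort zone the poster describes.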

One major reason the GTX 690 suffered was that it, like all x90 cards before it, was a dual-GPU card at a time when game developers were starting to ignore SLI/CrossFire. It was essentially two GTX 680s on a single board. The then-emerging, now-common deferred rendering techniques are largely incompatible with the traditional ways of doing multi-GPU.

Game developers chose not to waste time supporting SLI, since less than 1% of gamers would benefit from it. When you couldn't use SLI, the card offered performance similar to a single GTX 680.

Mmmmm, who doesn't love blowing hot exhaust air right into their CPU intake? Totally what I've always wanted.

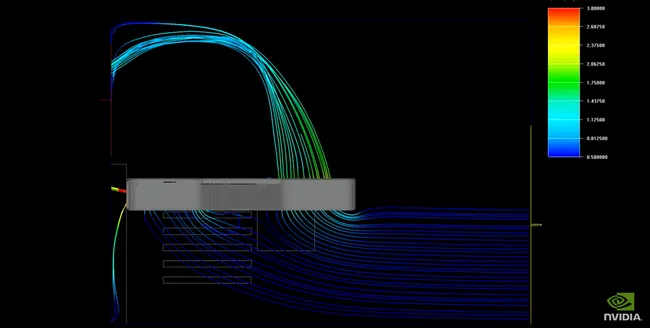

There wasn't much, and they didn't address it directly, but they did show a picture of the airflow, which this article even screen-captured for you.

That's definitely the 3090 cooler. The short PCB that allows air to be pulled from below the card and exhaust it above is about as innovative as we have seen in quite a while. This would be quite beneficial for multi card setups where the top cards are often starved for air. They also show a render of the card right at the end of the clip.

I love how this screenshot of a fluid-dynamics render doesn't simulate the hot air from the front fan getting pulled directly into the CPU air cooler...

Probably because the colors in that picture indicate air speed, not temperature. Not sure why Nvidia would waste their time faking a simulation just to put a still shot of it in a promotional video.