Someone correct me if I'm wrong... but aren't the X3D chips pretty irrelevant if you are gaming in 4K? I've seen many people in various threads say they are irrelevant at 4K... and every X3D benchmark video shows 1080p performance.

Asking because I game in 4K, which is all on the GPU... so I would assume the answer is yes? I remember 1080p HD coming out around 2007... but I don't remember the last time I actually used that resolution on my PC.

That being said... gonna keep on trucking with my 9950X and 4090 until the 5090 is readily available at MSRP.

If you are always gaming at native 4K at high or ultra graphical settings, then you likely won't notice the impact of a faster CPU. But in reality, even if you have an RTX 4090 or even a 5090, you will end up running DLSS at some point. That means you are not rendering at native 4K, and the CPU may therefore become the bottleneck.
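The bottleneck argument in the reply about DLSS can be shown with a toy model. It assumes (a) the delivered frame rate is simply the lower of what the CPU and the GPU can each sustain, and (b) the GPU-bound frame rate scales inversely with the number of rendered pixels. Both are simplifications, and every number below is a hypothetical placeholder, not a benchmark result. The point is only to show why two CPUs can look identical at native 4K yet separate once the internal render resolution drops to 1080p.

```python
# Toy bottleneck model: delivered FPS is capped by the slower of CPU and GPU.
# All numbers are hypothetical placeholders, NOT measured benchmark data.

PIXELS_4K = 3840 * 2160


def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Frame rate when the CPU can prepare cpu_fps frames per second and the
    GPU can render gpu_fps frames per second at the chosen resolution."""
    return min(cpu_fps, gpu_fps)


def gpu_fps_at(pixels: int, gpu_fps_at_4k: float) -> float:
    """Crude assumption: GPU throughput scales inversely with pixel count."""
    return gpu_fps_at_4k * PIXELS_4K / pixels


if __name__ == "__main__":
    # Hypothetical CPUs that can each feed this many frames per second.
    cpus = {"slower CPU": 120.0, "faster CPU": 160.0}
    gpu_fps_native_4k = 70.0  # hypothetical GPU-bound frame rate at native 4K
    for label, pixels in [("native 4K", PIXELS_4K), ("1080p internal", 1920 * 1080)]:
        gpu_fps = gpu_fps_at(pixels, gpu_fps_native_4k)
        results = {name: delivered_fps(cpu, gpu_fps) for name, cpu in cpus.items()}
        print(label, results)
```

With these made-up inputs both CPUs deliver the same frame rate at native 4K (the GPU is the limit), while at a 1080p internal resolution the faster CPU pulls ahead.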

News Nvidia warns of gaming GPU shortage this quarter, recovery in early 2025 — Chipmaker rakes in record profits as net income soars by 109% YoY

Page 2 - Seeking answers? Join the Tom's Hardware community: where nearly two million members share solutions and discuss the latest tech.

Yeah, I saw that mentioned somewhere recently. Not enough info though, IMO, and it still doesn't answer the question. Why is a resolution that's coming up on 20 years old still relevant?

It certainly isn't because of visual quality. Must be the GPU prices, because I'd bet those same people surveyed are all running 4K panels in their living rooms.

As someone who still uses a 24" 1080p 144Hz monitor, I think there are a few reasons:

1) PPI: if you don't use an ultrawide or extra-large display, the pixel density of 1080p is good enough for anything 24" or below. In a lot of places around the world, housing isn't as spacious as in the West, and when you also need room for decent speakers, or the desk isn't used solely for the gaming PC, you just can't fit a 21:9 4K monitor.

2) Cost/performance ratio: given how much raising the resolution hurts your FPS, even with frame generation, gaming at 1080p on ultra rather than at 1440p or 4K on medium to low settings actually gives the player more eye candy, and you don't die to stutters as easily.

Also, you can keep old hardware for one or two more generations while still gaming at ultra detail. With the crazy hardware prices since the COVID lockdown era, that is a big plus: not everyone has that much disposable income, and fewer still will spend most of it on PC hardware.

Not a HUB subscriber... about as useful as Jayz2cents IMO. I did glance at the article though, and I'm definitely a "casual 60 fps" gamer (4K). I also grinned at the part about people questioning whether 1080p benchmarks are relevant... because that's something I've always wondered as well.

Why are we still seeing 1080p benchmarks in 2024? Must be for those hardcore FPS junkies wanting their max fps, because for me 1080p gaming is about as visually exciting as watching a movie on DVD.

To each their own.

For the hardware specs most reviewers use, 1080p doesn't sound like a reasonable resolution. But as I mentioned above, 1080p is becoming increasingly relevant because many games are so GPU-taxing that even the fastest GPU may not consistently stay above 60 FPS at native 4K. So you have to resort to DLSS, which renders at a lower resolution and upscales it back to 4K. For most gamers, a halo product like the RTX 4090 is not something they can afford or want to spend on, and as you move down the GPU lineup it gets worse quickly. So to run the latest AAA titles at 4K with, say, an RTX 4080 Super, you may need to lower the DLSS quality setting. In DLSS Performance mode at 4K, you are technically rendering at native 1080p and upscaling to 4K.
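The point in the reply about DLSS lowering the internal render resolution at 4K comes down to a per-axis scale factor for each quality mode. The sketch below assumes the commonly cited default factors (about 67% for Quality, 58% for Balanced, 50% for Performance, 33% for Ultra Performance); games and newer DLSS versions can use different values, so treat these as assumptions rather than a spec. Under them, a 1080p internal resolution at 4K output corresponds to Performance mode, while Balanced renders somewhat above 1080p.

```python
from fractions import Fraction

# Per-axis render scale factors commonly cited for DLSS Super Resolution modes.
# Assumed defaults for illustration only; individual games may override them.
DLSS_SCALE = {
    "quality": Fraction(2, 3),
    "balanced": Fraction(58, 100),
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Approximate internal render resolution for a given output resolution."""
    scale = DLSS_SCALE[mode]
    # Fractions keep the multiplication exact; round() then returns an int.
    return round(out_w * scale), round(out_h * scale)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        print(f"4K output, {mode}: renders at about {w}x{h}")
```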

If you have a 9950X, then you don't need to worry about it; you're good.

I am 100% certain that at the beginning of 2024, Nvidia fully intended to launch the RTX 50-series this fall. But plans have to be flexible, and sacrificing tens of billions in GB200 orders to make several billion in the gaming sector just doesn't make any sense at a business level.

AD102 production has long since halted, I'm sure. AD103? Probably halted around the same time. I'd wager only AD104 and the smaller dies are still being produced, and even that is probably winding down now to make room for the lower-tier Blackwell GPUs in the spring/summer timeframe.

The issue with big Blackwell was between the CoWoS layer, the interconnects, and the actual dies. AFAIK, they do not need a CoWoS layer for consumer cards, which will use single chips and GDDR.

aberkae

Distinguished

If the 5090 really delivers at least a 50% performance gain, then from my outlook we can expect the same performance deltas to show up one resolution step higher: 720p data for 1080p gaming, 1080p data for 1440p gaming, and 1440p data for 4K gaming.
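aberkae's rule of thumb shifts benchmark data down by one resolution step. For a sense of how large a "step" is in GPU load, the sketch below just counts pixels for the usual 16:9 resolutions. It says nothing about CPU limits, and frame rate rarely scales exactly with pixel count, so treat it as a rough yardstick only: each step is roughly 1.8x to 2.25x the pixels.

```python
from fractions import Fraction

# Common 16:9 resolutions, in the order used by the rule of thumb above.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels rendered per frame at a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def step_ratios() -> list[tuple[str, str, Fraction]]:
    """Exact pixel-count ratio between each resolution and the next step up."""
    names = list(RESOLUTIONS)
    return [
        (lower, higher, Fraction(pixel_count(higher), pixel_count(lower)))
        for lower, higher in zip(names, names[1:])
    ]


if __name__ == "__main__":
    for lower, higher, ratio in step_ratios():
        print(f"{lower} -> {higher}: {float(ratio):.2f}x the pixels")
```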

thisisaname

Distinguished

It's time we begin boycotting NVIDIA and supporting their competitors, AMD and Intel; may glory go to them!

I'm not so sure AMD is much better value when AMD prices its cards similarly to NVIDIA.

P.Amini

Reputable

Competitive players and esports players don't care about how realistic or immersive a game is or how good or exciting it looks; they get excited when they win, and they use every tool and technique they can to win. Not only do they sacrifice visuals, they sacrifice sound too: they kill the bass (which is an important factor for immersion in the heat of a battle) to better discern the direction of footsteps or shots.

Right, but if a bunch of GB200 dies got wasted because of CoWoS issues, those dies have to be replaced. And even if the number isn't huge, Nvidia is trying to crank out as many GB200-based accelerators as possible, as fast as possible. Anything that accelerates that is more important than gaming.

TeamRed2024

Upstanding

If you don't understand why nothing higher than 1080p is relevant in a CPU benchmark/review, then you don't understand benchmarking/reviews. In a CPU review, if you're testing resolutions that are GPU-limited, then you're not actually testing the CPU.

I get all that... what I don't understand is what has been mentioned upthread... the fact that 1080p is still relevant in gaming. For me it isn't... but according to Steam surveys it is.

Maybe it's just me... I embrace technology and move forward. I have a 2024 phone... 2024 motorcycle... 2022 car... 2024 CPU and related hardware with a 2022 GPU... a 4K TV in every room and on my desktop.. with my laptop being 1440p only because I couldn't get 4K from Alienware.

I don't use DVDs... 1080p resolutions... MySpace... etc... etc... The early 2000's called me a long time ago telling me they wanted them back. 🤣

That's what I was thinking... the only thing it could be. OMG I need 8000 FPS to win! I like to win as much as the next guy... but turning off sound and downgrading to 1080p isn't necessary in the MMOs, RPGs, ARPGs... flight/racing sims... etc. that I play.

I've drifted off topic enough so gonna close my end of this relevancy discussion.

TeamRed2024

Upstanding

I haven't used DLSS yet... my list of titles is pretty short though. Diablo 4 and BG3 are the games I've been grinding in 2024, and I've made sure DLSS was off.

We'll see what happens with MSFS2024.

spongiemaster

Dignified

The majority of those Steam systems are going to be laptops. 1080p testing with an X3D CPU and a 4090 has no applicable value to those users. It's the same reason 720p had a large presence on Steam for a while despite no one using 720p monitors.

Yup. It's also why far less demanding games are the most popular on Steam. You can run Counter-Strike 2 on a laptop with integrated graphics.

8086

Distinguished

As long as the RX 8800 XT delivers on the rumours, it's my next card. That means 7900 XT/XTX raster performance, 50% faster RT than the 7900 XTX, lower power than the 7900 XT, 16GB, $599 max. Oh, and it had better support FSR4 when it's available.

The intent of the boycott isn't about performance gains but the fact that Nvidia is scalping its customers and using its monopoly against us. GPU prices are insane, and their margins are often 10-30x.

I don't think anything has a 30X margin, not even close. The physical hardware for an H200 might only cost $1,500, and it sells for $30-45K or whatever. But the time spent designing the hardware and software is a huge part of the cost.

I'm not saying Nvidia isn't making some amazing profits, but compared to all its costs it's probably a 3X to 4X margin, and much of that has been on the data center side recently. The expense of designing and releasing the Ada architecture probably works out to around a 1.5-2X profit margin. But the retail price also has to support the graphics card manufacturers and distributors.

The design is done once and gets spread over the millions of units they are selling.

The software is a running cost that should be spread over tens of millions of sales.

They are doing at least 10x.

thestryker

Judicious

They've been reporting gross margins of around 75% lately, while data center (their highest-margin parts) makes up almost 90% of their revenue. Even if we include software development, that's right in the 3-4x range.
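thestryker's step from a ~75% gross margin to "3-4x" follows from the definition of gross margin, (revenue minus cost of goods sold) divided by revenue, which makes revenue equal to 1 / (1 - margin) times cost of goods sold. Gross margin leaves out operating expenses such as R&D, which is why the post allows for software development pulling the figure toward the lower end. The sketch also shows that a 10x multiple on this measure would require a 90% gross margin. The function name is just for illustration.

```python
from fractions import Fraction


def revenue_to_cost_multiple(gross_margin: Fraction) -> Fraction:
    """Revenue divided by cost of goods sold (COGS) for a given gross margin.

    Gross margin = (revenue - COGS) / revenue, so COGS = (1 - margin) * revenue
    and revenue / COGS = 1 / (1 - margin).
    """
    if not 0 <= gross_margin < 1:
        raise ValueError("gross margin must be in [0, 1)")
    return 1 / (1 - gross_margin)


if __name__ == "__main__":
    for pct in (60, 75, 90):
        multiple = revenue_to_cost_multiple(Fraction(pct, 100))
        print(f"{pct}% gross margin -> {float(multiple):.1f}x revenue over COGS")
```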

You don't just push a few buttons and have everything work. Even if the core architecture is the same, design, testing, and validation need to happen on every single part. It takes time and money.

If you want to say that Nvidia's stock is incredibly overvalued with a market cap of ~$3.39 trillion, yes, I'd say that's 10X to 30X too high. LOL. But the earnings reports are pretty straightforward.

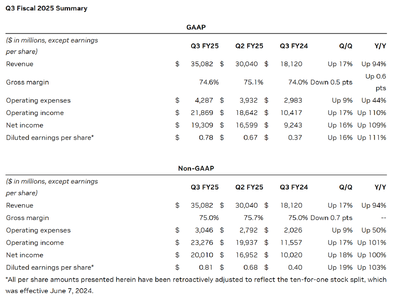

That shows $35 billion in revenue and $20 billion in net income (give or take, depending on GAAP vs. non-GAAP). So in effect, Nvidia spent $15 billion to bring in $35 billion. A year earlier, it spent $8 billion and brought in $18 billion. So both expenses and revenue nearly doubled over the past year.

Either way, that works out to more than 2X and less than 3X overall. And yes, that includes bonuses and a bunch of other stuff, and you can argue the "real" numbers should show a margin of maybe up to 4X. But 10X? Not a chance. 10X would mean Nvidia spent $3.5 billion to make $35 billion, which it absolutely could not do right now. Just the data centers and main HQ building are going to cost billions, I suspect.
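The back-of-the-envelope math in this post can be reproduced with its own rounded figures, in billions of US dollars. Two assumptions: "spending" is simply revenue minus net income (lumping together cost of goods, operating expenses, and taxes), and the prior-year net income of $10 billion is inferred from the post's "$8 billion spent, $18 billion earned". The helper names are hypothetical.

```python
from fractions import Fraction


def revenue_per_dollar_spent(revenue: Fraction, net_income: Fraction) -> Fraction:
    """Revenue divided by total spending, where spending = revenue - net income."""
    spending = revenue - net_income
    if spending <= 0:
        raise ValueError("net income must be smaller than revenue")
    return revenue / spending


def spending_for_multiple(revenue: Fraction, multiple: Fraction) -> Fraction:
    """Total spending that would yield the given revenue/spending multiple."""
    return revenue / multiple


if __name__ == "__main__":
    # Rounded figures quoted in the post, in billions of US dollars.
    this_year = revenue_per_dollar_spent(Fraction(35), Fraction(20))  # 35 / 15
    last_year = revenue_per_dollar_spent(Fraction(18), Fraction(10))  # 18 / 8
    needed = spending_for_multiple(Fraction(35), Fraction(10))
    print(f"this year: {float(this_year):.2f}x")
    print(f"last year: {float(last_year):.2f}x")
    print(f"spending that would give 10x: ${float(needed):.1f}B")
```

Both years land between 2X and 3X, and a 10X multiple on $35 billion would leave only $3.5 billion for all spending.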

TeamRed2024

Upstanding

Insane when compared to the $699 I paid for the 1080 Ti back in 2017... sure. People complained back then too though.

Nvidia cards are the best... and I don't mind paying for the best. I also make upwards of $250k per year... so anyone reading this that may work at McD's for 1/5 that amount I wouldn't expect to agree with me.

Factoring in tax, I make about the same as you, maybe 10% less, but I still don't like paying 2 grand for a piece of gaming tech that will be outdated two years later... There is so much more to enjoy in life with that budget. As a 30-year gamer, I would rather spend it on cameras, speakers, cars, and travel with family... but not on a GPU for its own sake.

valthuer

Notable

So basically, one monthly salary of yours should pretty much cover the next 7-8 Nvidia flagship cards.

Neat!😎

TeamRed2024

Upstanding

which will be outdated 2 years later...

I just got 7 years out of a 1080 Ti. Something tells me that the 4090 will be relevant for longer than that.

Something like that. It's all about priorities. Since I can't afford the latest and greatest $5M homes in the area I decided to splurge on my GPU.

Space.com is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.