kerberos_20

Champion

Config file is editable, cvar can be added, just make the file read-only so the game won't overwrite it.

This is completely missing from my file oO

What's going on?

But you should probably limit the texture pool if you don't have plenty of VRAM.
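A minimal Python sketch of the "add the cvar, then make the file read-only" trick. It assumes an Unreal-style `.ini` file (UTF-8 text) with cvars listed under a `[SystemSettings]` section. `r.Streaming.PoolSize` is Unreal Engine's texture streaming pool size in MB; whether this game reads it from that file, which file to edit, and the helper names `set_cvar` and `apply_texture_pool_limit` are all assumptions for illustration.

```python
import os
import stat
from pathlib import Path


def set_cvar(ini_text: str, section: str, key: str, value: str) -> str:
    """Return ini_text with key=value set under [section], adding either if missing."""
    lines = ini_text.splitlines()
    header = f"[{section}]"
    new_line = f"{key}={value}"
    start = next((i for i, line in enumerate(lines) if line.strip() == header), None)
    if start is None:
        # Section not present: append it, separated by a blank line.
        if lines and lines[-1].strip():
            lines.append("")
        lines += [header, new_line]
        return "\n".join(lines) + "\n"
    # The section runs until the next [header] line or the end of the file.
    end = next((i for i in range(start + 1, len(lines))
                if lines[i].strip().startswith("[")), len(lines))
    while end > start + 1 and not lines[end - 1].strip():
        end -= 1  # keep trailing blank lines after the section's last entry
    for i in range(start + 1, end):
        if lines[i].split("=", 1)[0].strip() == key:
            lines[i] = new_line  # overwrite the existing value
            break
    else:
        lines.insert(end, new_line)  # append as the section's last entry
    return "\n".join(lines) + "\n"


def make_read_only(path: Path) -> None:
    """Clear every write bit; on Windows this sets the file's read-only attribute."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))


def apply_texture_pool_limit(path: Path, pool_mb: int) -> None:
    """Hypothetical helper: write r.Streaming.PoolSize (MB) into [SystemSettings], then lock the file."""
    text = ""
    if path.exists():
        # Restore the owner's write bit in case an earlier run locked the file.
        os.chmod(path, os.stat(path).st_mode | stat.S_IWUSR)
        text = path.read_text(encoding="utf-8")
    text = set_cvar(text, "SystemSettings", "r.Streaming.PoolSize", str(pool_mb))
    path.write_text(text, encoding="utf-8")
    make_read_only(path)
```

Usage would be something like `apply_texture_pool_limit(Path("Engine.ini"), 3072)`, where both the path and the 3072 MB value are placeholders to adjust to your own install and VRAM. Plain line handling is used instead of `configparser` because Unreal config files can repeat keys, which `configparser` rejects by default.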

I know it's editable. You made it sound like your game itself added that, though, not you.


Interesting. And weird. My 12700K definitely behaves differently. Is this another instance of optimization for AMD only, maybe? That doesn't make much sense, though... but I do notice that only your lower cores (CCD1?) are used. So there does seem to be some parking going on.

No two PCs are the same, even if they have the exact same parts. So this forum is full of people posting because they watched a video and can't match that performance on their PC. It happens.

Just thought I'd mention that, as sometimes you just have to be happy with what you get.

He doesn't know that changing in-game graphics settings on the fly causes a CPU/GPU usage bug; the game is missing a "some settings changed, game needs to be restarted" popup.

4090 is at ~50%.

In the top video the 4090 is around 50% with the CPU around 35%.

Both videos show this issue.

Nvidia to the rescue again? For the many folks who are always complaining about price and power consumption, this just makes the case for a next-gen console!

Pre-release versions of 'Star Wars Jedi: Survivor' have revealed detrimental performance issues with the PC version. High-end GPUs like the RTX 4090 and 3080 Ti are only managing 35-50 frames per second on average.

Pre-Launch 'Jedi: Survivor' Eats 21GB of VRAM, Struggles on RTX 4090

Your trick does indeed work, and there is a reason for it: it shows how the render engine's configuration, which is based on the display driver, is messed up, and that is something they have to fix. Since it's all based on Unreal Engine, I had a look at several different config files, but the programmers at Respawn changed a whole lot, so it's best to wait for patches.

Installed that 3GB patch they released today. Now my game keeps crashing, often directly after loading into the save. Thanks, EA -.-

That sucks... I didn't play yesterday, so I'll update when I get home and see what happens.

It looks like the reason, after the initial issue was resolved by repairing the game files, was a certain meditation circle... that's so weird. But at least I have been playing for an hour now without issues.

I'm not sure what the COUNTLESS replies you've made on this topic are implying. Are you saying you have the most powerful gaming PC ever conceived and this game requires that extreme level of performance? Or are you stating that everybody else's PC is incapable of playing this game? Or is it that everyone is lying when they say they're having issues with the game?

In the top video the 4090 is around 50% with the CPU around 35%.

Mine ran with the 4090 at 70-80% and the CPU around 20%... which is exactly what is shown in the bottom video with the same CPU/GPU.

I personally didn't have a problem with it, but all the YouTube trolls are telling me that I don't know what bad performance looks like... 🤣 🤣 🤣

I mean... if a smooth 60 fps in 4K Ultra with RT on with a frametime around 13ms is bad performance then I guess my system is guilty. 😉
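Frame time and frame rate are reciprocals, which gives a quick way to sanity-check figures like these:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{\text{fps}}, \qquad \frac{1000\ \text{ms}}{60} \approx 16.7\ \text{ms}, \qquad \frac{1000\ \text{ms}}{13\ \text{ms}} \approx 76.9\ \text{fps}
$$

So a steady 60 fps means a new frame every 16.7 ms. If the ~13 ms reading is the time actually spent producing each frame under a 60 fps cap (an assumption about what the overlay reports), that would leave roughly 3.7 ms of headroom per frame.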

That video you put up has a major problem.

The game doesn't use enough cores, but I don't know if fixing that would help reduce memory usage.

More games are coming that use more VRAM as devs move from the PS4, with 8 GB, to the PS5, with up to 16 GB.

Usage needs to be reduced in some respects to run on consoles, as they only have 16 GB of RAM.

A quick benchmark on my gaming PC with a 3080 FE and 3900X of the new Star Wars: Jedi Survivor game at 4K with FSR 2 and ray tracing enabled. The game is poorly optimised and, due to a big CPU bottleneck, there is no improvement to my framerate from lowering the settings beyond those shown in the video (my optimised settings).

Settings recommendations are therefore impossible, really, although you can disable ray tracing to claw back some performance. Basically, Star Wars Jedi: Survivor is not utilising the hardware presented to it at all in a meaningful way.
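The "no improvement from lowering settings" observation fits a simple pipelining model in which the CPU prepares the next frame while the GPU renders the current one, so the slower stage sets the frame rate. A Python sketch of that simplified model; the per-frame millisecond costs are made-up numbers, not measurements from the video:

```python
def effective_fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when CPU and GPU work overlap: the slower stage sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def bottleneck(cpu_ms: float, gpu_ms: float) -> str:
    """Which stage the other one ends up waiting on."""
    return "CPU" if cpu_ms >= gpu_ms else "GPU"


# Hypothetical costs: CPU time per frame stays fixed, lower presets only cut GPU time.
CPU_MS = 25.0
for preset, gpu_ms in [("Epic", 30.0), ("High", 22.0), ("Medium", 15.0), ("Low", 10.0)]:
    fps = effective_fps(CPU_MS, gpu_ms)
    print(f"{preset:>6}: {fps:5.1f} fps ({bottleneck(CPU_MS, gpu_ms)}-bound)")
```

With the CPU fixed at 25 ms, the Epic preset is GPU-bound at about 33 fps, and every cheaper preset lands at 40 fps, because only the GPU side got faster.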

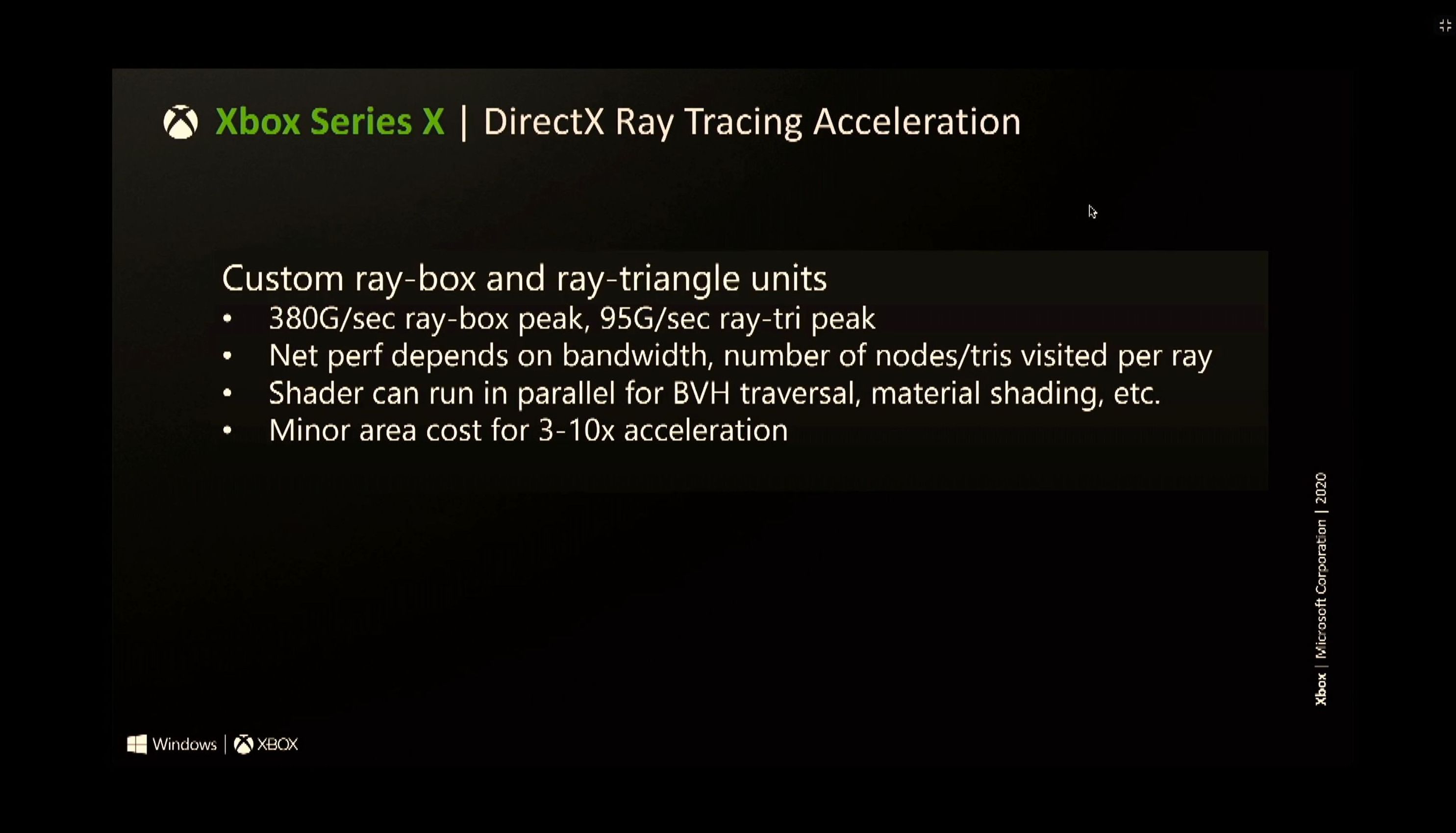

What do gamers think? Well...

In short, Star Wars Jedi: Survivor is essentially ignoring the fact that CPUs have entered the many-core era. With higher settings it is even more disastrous: with ray tracing active, more smaller cores are tasked with maintaining RT's BVH structures, but ultimately performance drops still further, to the point where I've observed CPU-limited scenes on a 12900K that just about exceed 30fps. On a mid-range CPU like the Ryzen 5 3600, for example, it is even more catastrophic.
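One way to read "ignoring the many-core era" is Amdahl's law (a standard model, not something the article itself invokes): if only a fraction of each frame's CPU work runs in parallel, adding cores quickly stops helping. A Python sketch; the parallel fractions below are hypothetical and not measured for this game:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over one core when only parallel_fraction of the work scales (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0 or cores < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and cores >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical workloads: a half-serial game loop vs. well-threaded engine work.
for p in (0.5, 0.9):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (4, 8, 16))
    print(f"parallel fraction {p:.0%} -> {row}")
```

With half the work serial, going from 8 to 16 cores moves the speedup only from about 1.78x to 1.88x, and it can never exceed 2x no matter how many cores the CPU has.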

| RT Feature | RTX GPU | GTX GPU |
|---|---|---|
| BVH Creation | No | No |
| BVH Traversal | Yes | No |
| Ray-Gen | Yes | No |
| Ray Batching | Yes (Partial) | No |
| Ray/Triangle Intersection | Yes | No |
| Shading | Yes (Legacy GPU Shading techniques) | Yes |
| Denoising | No (Potential to use Tensor cores) | No |
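The table marks BVH creation "No" for both GPU types, and the quote above has CPU cores maintaining the BVH. As a minimal sketch of what building a BVH involves, here is a top-down median-split build in Python. This is a textbook strategy chosen for brevity, not Respawn's or Unreal Engine's implementation, and the names `BVHNode` and `build_bvh` are hypothetical:

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


@dataclass
class BVHNode:
    lo: Vec3  # minimum corner of the axis-aligned bounding box
    hi: Vec3  # maximum corner of the axis-aligned bounding box
    tris: List[int] = field(default_factory=list)  # triangle indices, leaves only
    left: Optional["BVHNode"] = None
    right: Optional["BVHNode"] = None


def bounds(tris: List[Triangle], ids: List[int]) -> Tuple[Vec3, Vec3]:
    """Axis-aligned box enclosing every vertex of the selected triangles."""
    pts = [v for i in ids for v in tris[i]]
    lo = tuple(min(p[a] for p in pts) for a in range(3))
    hi = tuple(max(p[a] for p in pts) for a in range(3))
    return lo, hi


def centroid(tri: Triangle) -> Vec3:
    return tuple(sum(v[a] for v in tri) / 3.0 for a in range(3))


def build_bvh(tris: List[Triangle], ids: Optional[List[int]] = None,
              leaf_size: int = 2) -> BVHNode:
    """Top-down build: split at the median centroid along the longest box axis."""
    if ids is None:
        ids = list(range(len(tris)))
    if not ids:
        raise ValueError("need at least one triangle")
    lo, hi = bounds(tris, ids)
    if len(ids) <= leaf_size:
        return BVHNode(lo, hi, tris=list(ids))
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    ordered = sorted(ids, key=lambda i: centroid(tris[i])[axis])
    mid = len(ordered) // 2
    return BVHNode(lo, hi,
                   left=build_bvh(tris, ordered[:mid], leaf_size),
                   right=build_bvh(tris, ordered[mid:], leaf_size))
```

Real engines typically use better split heuristics (such as the surface area heuristic) and refit or rebuild the tree as geometry moves; that per-frame upkeep is presumably the work the quote above is describing.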

[0023] The ray tracing pipeline operates in the following manner. A ray generation shader is executed. The ray generation shader sets up the data for a ray to test against and requests that the ray intersection test unit test the ray for intersection with triangles.

[0024] The ray intersection test unit traverses an acceleration structure...

...For triangles which are hit, the ray tracing pipeline triggers an execution of an any hit shader. Note that multiple triangles can be hit by a single ray.
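The excerpt describes three stages: a ray generation shader creates rays, an intersection unit tests them against triangles by traversing an acceleration structure, and an any-hit shader runs for every triangle a ray hits. A minimal CPU-side sketch of that control flow in Python, assuming an orthographic camera and a plain loop over triangles standing in for acceleration-structure traversal. The Möller-Trumbore test is a common textbook choice, not necessarily what the hardware in the patent uses, and all function names are hypothetical:

```python
from typing import Callable, Iterator, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def generate_rays(width: int, height: int) -> Iterator[Tuple[Vec3, Vec3]]:
    """Ray generation stage: one ray per pixel from an orthographic camera looking along +z."""
    for y in range(height):
        for x in range(width):
            yield ((x + 0.5) / width, (y + 0.5) / height, 0.0), (0.0, 0.0, 1.0)


def intersect(origin: Vec3, direction: Vec3, tri: Triangle,
              eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore ray/triangle test: hit distance t along the ray, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin


def trace(origin: Vec3, direction: Vec3, tris: List[Triangle],
          any_hit: Callable[[int, float], None]) -> Optional[Tuple[int, float]]:
    """Intersection stage: call any_hit for every triangle hit, return the closest hit."""
    closest: Optional[Tuple[int, float]] = None
    for i, tri in enumerate(tris):  # linear scan standing in for BVH traversal
        t = intersect(origin, direction, tri)
        if t is None:
            continue
        any_hit(i, t)  # a single ray can hit several triangles
        if closest is None or t < closest[1]:
            closest = (i, t)
    return closest
```

Calling `trace` for each ray yielded by `generate_rays` mirrors the excerpt's flow: generate a ray, test it for intersection, and run the any-hit callback once per triangle hit.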

Over on YouTube, PureDark has uploaded a video showcasing a modded version of the game running at much better framerates. They've implemented a DLSS Frame Generation mod, which, according to the video evidence, brought their game up from 45 to 90 actual fps. That's a marked improvement over what many are seeing, especially Steam's ticked-off comment section.

Modder doubles FPS in Star Wars Jedi: Survivor using DLSS 3

https://www.pcgamer.com/modder-doubles-fps-in-star-wars-jedi-survivor-using-dlss/

"I had a breakthrough and [am] now trying to replace FSR2 with DLSS, that would make the image look much better."

So a DLSS 3 and DLSS 2 mod is on the way. That's what AMD paid to keep out.