So this might be a bit of a stupid question but still...

So I have had my SSD for around 2 years. Recently I realized that I had never checked its health, and now that I have, I'm paranoid about it.

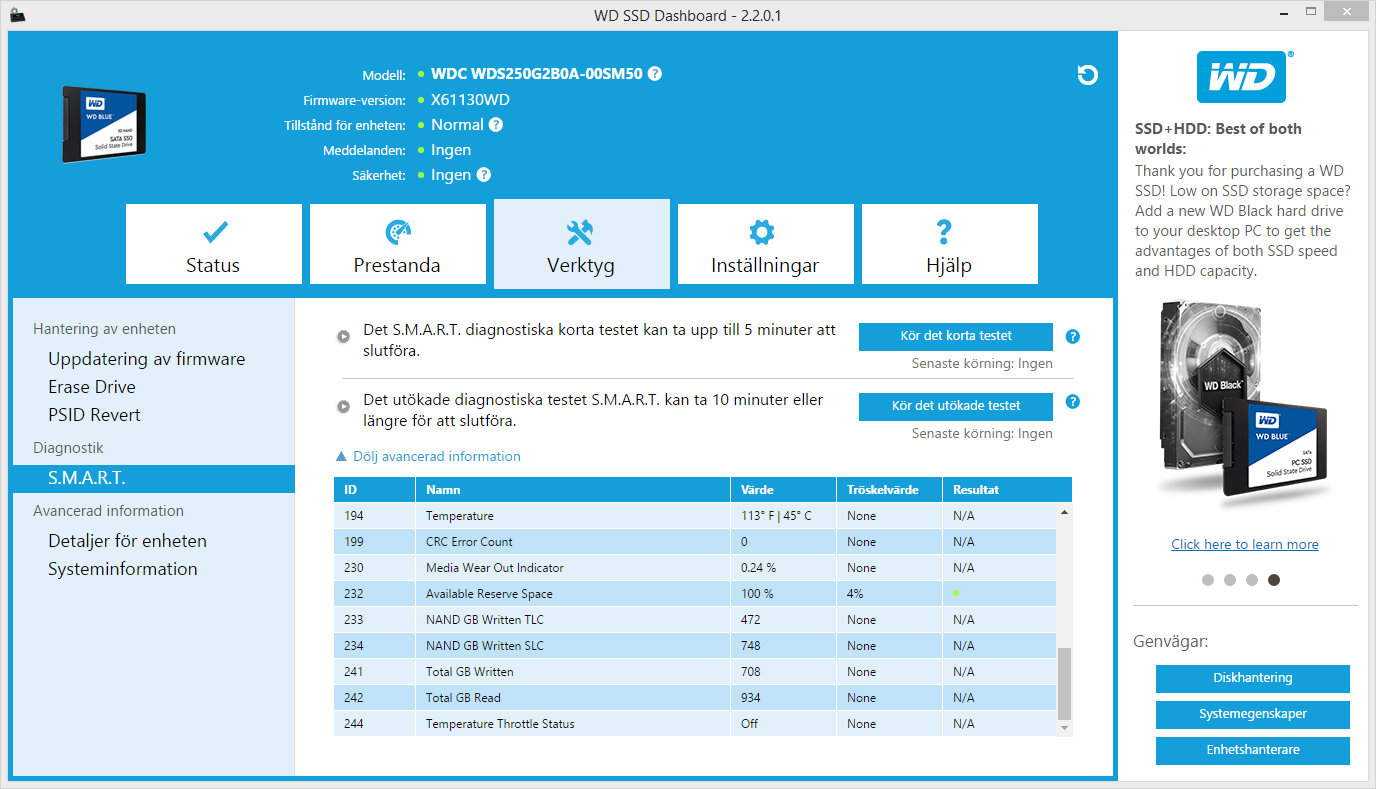

So I checked with HWInfo first and got 87%, but then CrystalDiskInfo showed 63%, and the total writes/reads all seem quite high.

So which one should I trust?

When will it be time to replace the drive so I don't lose any data, etc., considering my OS and some information is on it?

Is there any way to reduce the writes over time with any OS settings, so the drive's life is extended?

Thank you for answers in advance. Kind of paranoid. 😀

(I have a WD Green 240GB drive, by the way.)

CrystalDiskInfo result: https://imgur.com/q1iayPu

HWInfo result: https://imgur.com/RUnSzpx
