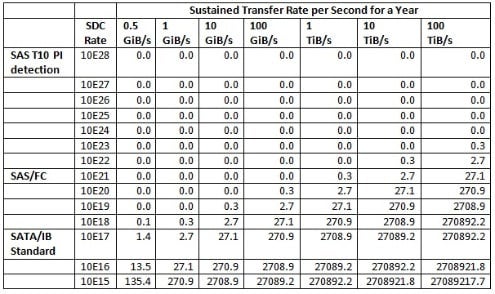

I've read some articles about Silent Data Corruption (SDC) across SATA channels.

Apparently there are some mechanisms in SATA HDDs to prevent (or at least reduce) the rate of SDC.

What is this mechanism, and can I rely on it?

Do I need to worry about SDC in a 100TB storage array for instance?

My intention is to build such an array and write to it only once, although I expect circumstances will arise where I'll need to do additional writing (so to be safe, I'd like to plan for 500TB of total writes, with the array being read-only from then on).

If SDC could be an issue for me, how would you suggest I overcome it?
