You sound like an audiophile trying to convince us we all need a $1300 HDMI cable to experience what the engineers intended to be the true 4k experience. No matter how much made-up technobabble (remember, that $1300 HDMI cable has: "Dielectric-Bias System (DBS US Pat # 7,126,055) Significantly Improves Audio Performance") you come up with to justify your stance, the general public isn't going to be able to tell the difference from cheaper mainstream options and won't care.

@spongiemaster @NightHawkRMX As the resident refresh rate celebrity: Blur Busters may write as "eagerly" as that silly HDMI marketing, which often has no basis in science, but we are 100% real science.

I'm in research papers such as an NVIDIA scientific paper (page 2) and a pursuit camera paper (co-author). More publicly, we write in an engaging, easy-to-read Popular Science format to bridge the gap between the mainstream and the boring science papers.

In Motion Contexts, Hz Can Matter More Than 4K vs 8K (as long as GtG is near 0)

Many people do not realize how big a difference high refresh rates can make in certain material, such as virtual reality, where they can mean the difference between nausea and no nausea. The theoretical Holodeck Turing Test (being unable to tell real life apart from VR) also requires retina refresh rates.

We are 100% based on real science. In various situations, the Hz differences we're talking about are bigger than 4K versus 8K. 240Hz vs 360Hz wouldn't qualify, but 240Hz vs 1000Hz has already been shown in the lab (for 95% of humans) to be a bigger difference than 4K vs 8K. For now, 8K 1000Hz is a pipe dream.

Slow pixel response and low resolution have long hidden the benefits of higher Hz. Faster pixel response and higher resolutions amplify the limitations of low Hz: when GtG is a tiny fraction of a refresh cycle, and when higher resolutions put more pixels per inch (making motion blur and stroboscopic stepping easier to see), the difference in appearance between stationary images and moving images grows.
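To illustrate the resolution point, here's a rough Python sketch. It assumes GtG=0, a sample-and-hold display, perfect eye tracking, and an object crossing one screen width per second; these are illustrative assumptions, not measurements, and the function name is just for this example. On the same-size screen the blur has the same physical width, but it spans more pixels at higher resolution, so it stands out more against an ever-sharper stationary image.

```python
# Sketch: the same physical motion covers more pixels at higher resolution.
# Assumptions (illustrative only): GtG = 0, sample-and-hold display,
# perfect eye tracking, object moving one full screen width per second.

RESOLUTIONS = {"1080p": 1920, "4K": 3840, "8K": 7680}  # horizontal pixel counts


def blur_pixels(width_px: int, screenwidths_per_sec: float, hz: float) -> float:
    """Eye-tracking motion blur in pixels: distance moved during one refresh cycle."""
    speed_px_per_sec = width_px * screenwidths_per_sec
    return speed_px_per_sec / hz


if __name__ == "__main__":
    for name, width in RESOLUTIONS.items():
        print(f"{name} at 240Hz: {blur_pixels(width, 1.0, 240):.0f} px of blur")
```

At 240Hz this works out to 8, 16 and 32 pixels of blur for 1080p, 4K and 8K respectively: same Hz, but the blur looks progressively worse relative to the pixel grid.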

There are multiple thresholds of human detections of display frequencies:

1. Flicker Detection: ~70Hz

Roughly 70Hz to 100Hz, varying from human to human. This is where direct detection of flicker stops.

Note: Some people may still get headaches beyond ~100 Hz even if the flicker is hard to detect, though; the threshold is very fuzzy and human-dependent. A few super-sensitive humans may see flicker beyond 100 Hz, while others stop seeing flicker well below 70 Hz. Not everybody's vision is identical, nor are their brains. Peripheral vision is also more sensitive, especially in brighter environments and where the flicker is a sharp squarewave duty cycle. Still, 70Hz-100Hz is an approximate range.

2. Single-Frame Identification: ~300Hz

The famous "Fighter Pilot study" that everybody cites as a human vision limitation: about 1/250sec to 1/300sec for a one-frame flash of an object. This figure assumes no brightness compensation (it doesn't account for the Talbot-Plateau law: a half-length flash needs double the brightness to be equally visible).
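A minimal sketch of that brightness compensation, assuming the simplified Talbot-Plateau-style rule that equal visibility needs a constant luminance × duration product. The function name and the nit values are hypothetical, for illustration only.

```python
def compensated_luminance(ref_nits: float, ref_duration_s: float,
                          new_duration_s: float) -> float:
    """Luminance a shorter (or longer) flash needs to look as bright as the reference.

    Assumes perceived brightness tracks luminance x duration (time-averaged light),
    so halving the flash duration requires doubling its luminance.
    """
    if ref_duration_s <= 0 or new_duration_s <= 0:
        raise ValueError("flash durations must be positive")
    return ref_nits * ref_duration_s / new_duration_s


if __name__ == "__main__":
    # Hypothetical example: a 1/250sec flash at 100 nits, shortened to 1/500sec.
    print(f"{compensated_luminance(100.0, 1 / 250, 1 / 500):.0f} nits needed")
```

Under this assumption, shortening a 100-nit 1/250sec flash to 1/500sec needs about 200 nits to be equally visible, which is why uncompensated single-frame thresholds understate what shorter flashes can convey.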

However, games aren't always single-frame. Everything is in motion, and there are other effects to consider, such as the ones below.

3. Latency Sync Effects: Beyond 1000 Hz

In augmented reality situations, where you need sync between the real world and virtual graphics, the virtual graphics will lag behind the real world. A higher refresh rate helps solve this. It also applies to sync between a physical finger and a virtual on-screen image. Here's a Microsoft Research video demonstrating 1000Hz:

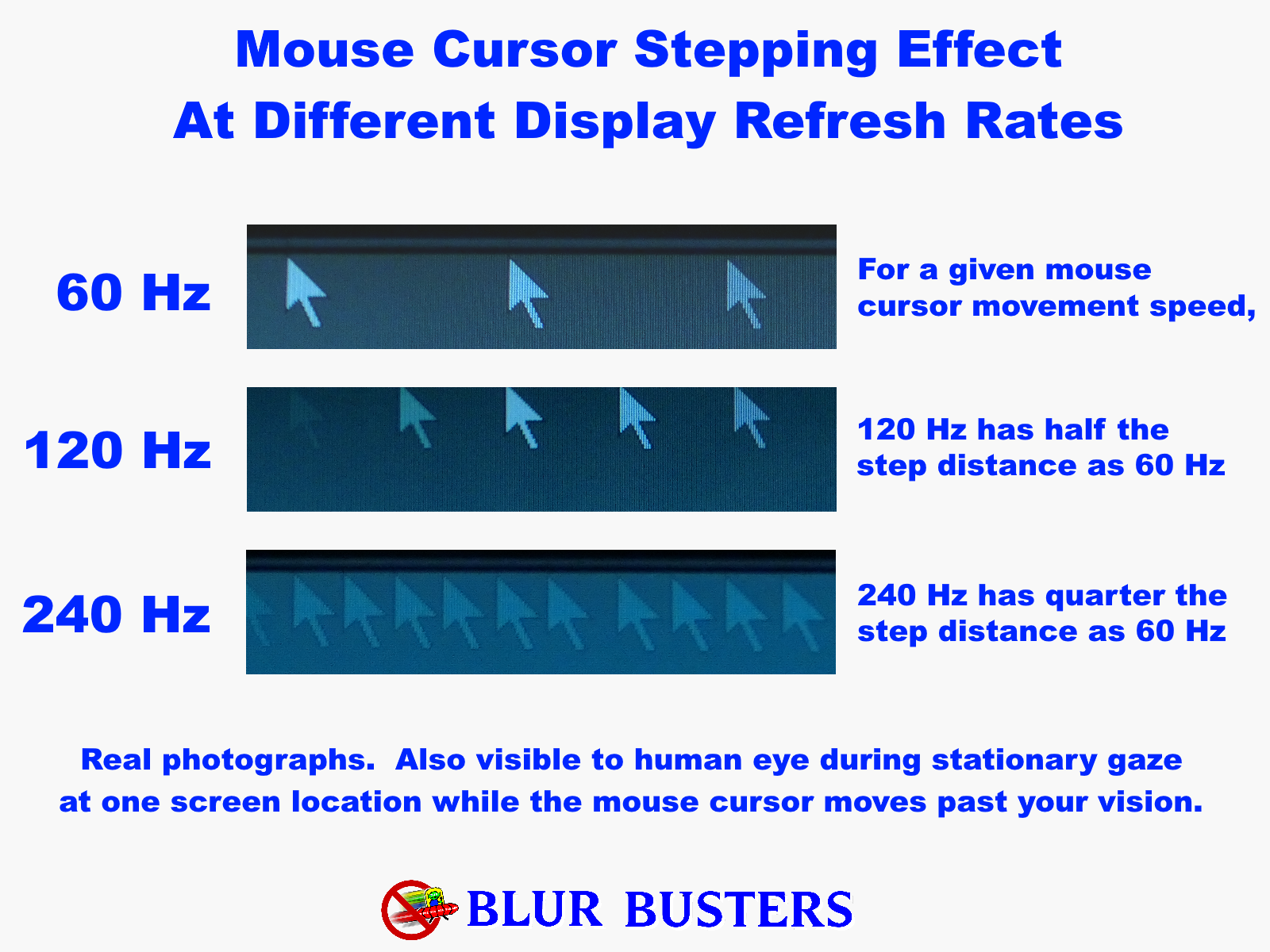
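A back-of-envelope sketch of the finger-sync problem. It assumes (hypothetically) a finger dragging at 500 mm/s and that the only delay is one full refresh cycle; real touch systems add input, processing, and GtG latency on top, so treat these numbers as rough illustrations only.

```python
def lag_mm(finger_speed_mm_per_s: float, hz: float) -> float:
    """Distance the finger moves during one refresh cycle (simplified lag model)."""
    return finger_speed_mm_per_s / hz


if __name__ == "__main__":
    FINGER_SPEED = 500.0  # mm/s, hypothetical dragging speed
    for hz in (60, 120, 240, 1000):
        # One refresh cycle of delay is the only latency modeled here.
        print(f"{hz:>4}Hz: image trails finger by ~{lag_mm(FINGER_SPEED, hz):.2f} mm")
```

In this simplified model, the gap shrinks from roughly 8.33 mm at 60Hz to 0.50 mm at 1000Hz, which is the direction the Microsoft Research demo shows.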

4. Stroboscopic Artifacts: Beyond 1000 Hz

This is the common mouse arrow effect, as seen at testufo.com/mousearrow, and it is also visible in games, as explained at blurbusters.com/stroboscopics. A mouse cursor moving one screen width per second on an 8K display would require 7680Hz in order to be a continuous blur without any stepping. The same applies to bright objects on dark backgrounds during mouseturns in FPS games, for example (see the screenshots at the link above).

This does not just apply to mice, but to any fast motion: panning, turning, scrolling. It is especially visible when there are both stationary and moving objects on the same screen, giving the eyes different targets to fixate on (which makes stroboscopic gaps easier to detect). Many scientific papers exist on the subject.

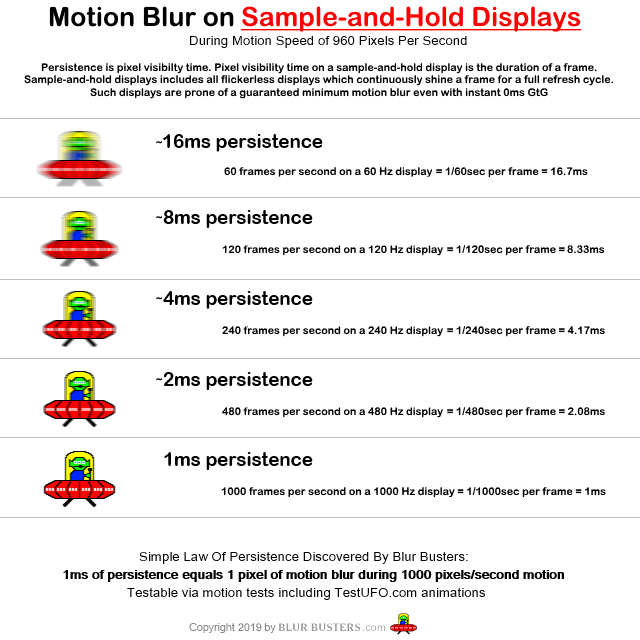
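The 7680Hz figure is simple arithmetic. Here's a small Python sketch, assuming a gap of at most 1 pixel between successive cursor positions is what reads as a continuous blur; the function names are just for illustration.

```python
import math


def step_pixels(speed_px_per_s: float, hz: float) -> float:
    """Gap between successive positions of a moving object, in pixels."""
    return speed_px_per_s / hz


def hz_for_continuous_motion(speed_px_per_s: float, max_step_px: float = 1.0) -> int:
    """Lowest whole refresh rate that keeps each step at or below max_step_px."""
    return math.ceil(speed_px_per_s / max_step_px)


if __name__ == "__main__":
    SPEED = 7680.0  # one 8K screen width per second, in pixels per second
    print(f"Gap at 240Hz: {step_pixels(SPEED, 240):.0f} px")
    print(f"Needed for 1px steps: {hz_for_continuous_motion(SPEED)}Hz")
```

At 240Hz the cursor jumps in 32-pixel steps, and getting down to 1-pixel steps needs 7680Hz, matching the figure above.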

5. Persistence blurring: Beyond 1000 Hz

See for yourself at testufo.com/eyetracking. Each refresh cycle is displayed statically for its full duration.

When you track a moving object with your eyes, your eyes are in a different position at the beginning and end of a frame's duration, since your eyes are in analog continuous pursuit. At an eye-tracking speed of 1000 pixels per second (along the screen plane), at 60Hz your eyes pursue 1/60th of that distance (about 16-17 pixels) during each frame, creating 16-17 pixels of eye-tracking-based motion blur on those static 1/60sec frames.
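The same arithmetic as a sketch, assuming GtG=0, a sample-and-hold display, and perfect eye tracking at 1000 pixels per second: each frame's persistence (1/Hz) times the tracking speed gives the blur width. Function names are illustrative.

```python
def persistence_ms(hz: float) -> float:
    """How long each static frame stays on screen on a sample-and-hold display."""
    return 1000.0 / hz


def blur_px(tracking_speed_px_per_s: float, hz: float) -> float:
    """Eye-tracking motion blur: pixels the eye travels while one frame is held."""
    return tracking_speed_px_per_s * persistence_ms(hz) / 1000.0


if __name__ == "__main__":
    for hz in (60, 120, 240, 360, 1000):
        print(f"{hz:>4}Hz: {persistence_ms(hz):5.2f} ms persistence, "
              f"{blur_px(1000.0, hz):5.2f} px of blur")
```

At 1000 pixels per second this gives about 16.67 px of blur at 60Hz and 1 px at 1000Hz, and each doubling of Hz (60Hz->120Hz) halves the blur.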

You can see this for yourself in an additional motion animation, and it's covered in many science papers written over the decades about something called the "Sample And Hold Effect", even at places like Microsoft Research.

In photography, a 1/1000sec sports shutter is clearly sharper than a 1/100sec shutter for fast action. There is a diminishing curve of returns too, but the motion blur equivalence is exact: a 1/1000sec sample-and-hold refresh cycle blurs like a 1/1000sec shutter (for GtG=0, using the full MPRT 100% measurement instead of the industry-standard MPRT 10%->90%).

You can even see this for yourself in motion tests such as testufo.com/eyetracking and testufo.com/persistence.

On a non-impulsed (sample-and-hold) display, doubling the frame rate halves motion blur. This assumes a display that doesn't use flicker, strobing, BFI, phosphor (CRT), or another temporal impulsing method to reduce motion blur. The closer you get to retina refresh rates, the more persistence-based motion blur disappears.

While 240Hz vs 360Hz is subtle, 240Hz vs 1000Hz is much more dramatic.

The jumps from 60Hz->144Hz and 144Hz->360Hz are more representative comparisons, and that's why ASUS' booth compared 144Hz vs 360Hz. You need to jump geometrically up the diminishing curve.
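To put numbers on the diminishing curve, here's a sketch comparing persistence for the pairs mentioned above, assuming a GtG=0 sample-and-hold display. The ratio is how many times less motion blur the higher Hz has; function names are illustrative.

```python
PAIRS = [(60, 144), (144, 360), (240, 360), (240, 1000)]


def persistence_ms(hz: float) -> float:
    """Frame visibility time on a sample-and-hold display, in milliseconds."""
    return 1000.0 / hz


def blur_reduction(low_hz: float, high_hz: float) -> float:
    """How many times less persistence blur the higher refresh rate has."""
    return persistence_ms(low_hz) / persistence_ms(high_hz)


if __name__ == "__main__":
    for low, high in PAIRS:
        print(f"{low}Hz -> {high}Hz: {persistence_ms(low):.2f} ms -> "
              f"{persistence_ms(high):.2f} ms ({blur_reduction(low, high):.2f}x less blur)")
```

240Hz->360Hz is only a 1.5x blur reduction, while 60Hz->144Hz and 144Hz->360Hz are about 2.4x and 2.5x, and 240Hz->1000Hz is about 4.2x. That is why the geometric jumps are the ones people notice.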

As long as GPU power is available for stratospheric frame rates in modern games (it will eventually be), the benefits are there, and they are more easily noticeable than 4K versus 8K in motion contexts. This is especially true if retina Hz (ultra high Hz) is combined with retina resolution (ultra high resolution).

Science-minded people who would like to understand this better should read the 1000Hz Journey article. Those who don't believe in benefits beyond X Hz tend to be mainly the anti-science people. It's not as simple as "eyes can only see X Hz", because a lot of variables interact with each other (e.g. higher resolutions amplify the limitations of a specific Hz when it comes to noticing blur or stroboscopics).

Yesterday, 1080p was a fortune. Today, 1080p is free. Formerly, many thought retina screens were a silly waste, but now they're in every smartphone. Higher Hz will follow the same path from bleeding edge to mainstream: 120Hz will already be mainstream in a few years (it's coming to the next iPhone and Galaxy).

Even if you hate the silliness of high Hz (much like people hated the silliness of 4K 15 years ago), it's coming anyway. It is worth understanding why it's useful, why it's coming (even browser scrolling benefits from high Hz), and why high Hz will be free in a decade or two (much like retina spatial resolution).