vmN :

We are basically agreeing, but my point is that the typical person would be streaming at 30 or 60 FPS. The work can be divided over many cores, that is true, but there will be a point where dividing it across more cores won't give any performance increase. If it weren't streaming, where you are 'limited' to a certain number of frames per second, then yes, it would be the most scalable process.

I never argued about PD being stronger than SB (or the other way around).

EDIT: You are comparing an SB i5 to PD, while I'm comparing a Haswell i5 to PD.

The TS article the above poster referred to, and the one you criticized, was fx8350 vs i5-2500K and i7-2600K, not vs Haswell.

The refresh rate has absolutely zero to do with how effectively you can divide up the work. The only things that matter are the smallest unit of division and whether the algorithm uses dynamic or static scaling. A static scaling model divides the total work into equal segments and then dispatches them to the worker threads. A dynamic model does some heuristics and history analysis to determine whether some areas of the work are more intensive than others, and then tries to divide up the work according to total processing need instead of equal unit distribution.
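To make the static/dynamic distinction concrete, here is a minimal sketch. It is not taken from any real encoder: the per-tile cost numbers are made up, and the "dynamic" side uses a simple greedy rule (heaviest tile to the least-loaded worker) as a stand-in for whatever heuristics an actual implementation would use.

```python
import heapq

def static_split(costs, workers):
    """Static scaling: cut the tile list into equal-sized contiguous segments."""
    n = len(costs)
    size = -(-n // workers)  # ceiling division
    return [costs[i:i + size] for i in range(0, n, size)]

def dynamic_split(costs, workers):
    """Dynamic scaling (illustrative greedy rule): hand each tile,
    heaviest first, to whichever worker currently has the least work."""
    heap = [(0, w) for w in range(workers)]  # (current load, worker id)
    buckets = [[] for _ in range(workers)]
    for cost in sorted(costs, reverse=True):
        load, w = heapq.heappop(heap)
        buckets[w].append(cost)
        heapq.heappush(heap, (load + cost, w))
    return buckets

def makespan(buckets):
    """Time until the busiest worker finishes."""
    return max(sum(b) for b in buckets)

# Hypothetical cost estimates: four expensive tiles, eight cheap ones.
costs = [9, 9, 9, 9, 1, 1, 1, 1, 1, 1, 1, 1]

print(makespan(static_split(costs, 4)))   # busiest worker under equal-count split
print(makespan(dynamic_split(costs, 4)))  # busiest worker under cost-aware split
```

With these made-up costs the equal-count split leaves one worker holding three of the expensive tiles, while the cost-aware split spreads them out. Neither result depends on the frame rate, only on how the work is cut up.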

Because streaming has real-time requirements, it uses the same model that VTC processors use: the compression quality is dynamically scaled based on past rendering times. If a frame is taking too long it gets dropped and the next frame is rendered at a lower Q value; if a frame is done early, the next frame is rendered with a slightly higher Q value. Some algorithms use a form of pre-rendering analysis to quickly determine optimum Q values so the frame gets done within the allocated time. In all cases the work is easily divided up amongst a near infinite number of targets because of how large the screen you're talking about is. Assuming 16x16 segments are used (256 pixels of work per unit), a 1080p frame (1920x1080) has 8,100 units of work to be distributed. If you use 32x32 segments (1,024 pixels) you get 2,025 units of work to be distributed. You can keep going bigger and bigger, but even at 512x512, which is f*cking huge, you still get roughly 8 units of work to be distributed. So having four to six additional cores of unused processing power (most games are two heavy cores plus 1~2 cores' worth of random extra threads) is easily a big boost for additional rendering.
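The arithmetic and the Q feedback loop above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the step size, the 1..100 Q range, and the convention that a higher Q means higher quality are all placeholders, not any specific encoder's API.

```python
import math

def work_units(width, height, tile):
    """Pixel-count estimate used above: total pixels / pixels per tile."""
    return (width * height) / (tile * tile)

def whole_tiles(width, height, tile):
    """Tile count when partial tiles at the frame edges are rounded up."""
    return math.ceil(width / tile) * math.ceil(height / tile)

def next_q(q, elapsed_ms, budget_ms, step=1, q_min=1, q_max=100):
    """Feedback rule (assumed form): lower Q after a late frame,
    raise it slightly after an early one, and clamp to the allowed range."""
    if elapsed_ms > budget_ms:
        q -= step
    elif elapsed_ms < budget_ms:
        q += step
    return max(q_min, min(q_max, q))

for tile in (16, 32, 512):
    print(tile, work_units(1920, 1080, tile), whole_tiles(1920, 1080, tile))

budget_ms = 1000 / 60  # time available per frame at 60 FPS
print(next_q(50, 20.0, budget_ms))  # frame ran late
print(next_q(50, 10.0, budget_ms))  # frame finished early
```

Since 1080 is not a multiple of 16, 32 or 512, a real tiler would round up at the frame edges, so the whole-tile counts come out a bit higher than the pixel-count estimate. Either way there are far more units of work than cores to run them on.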

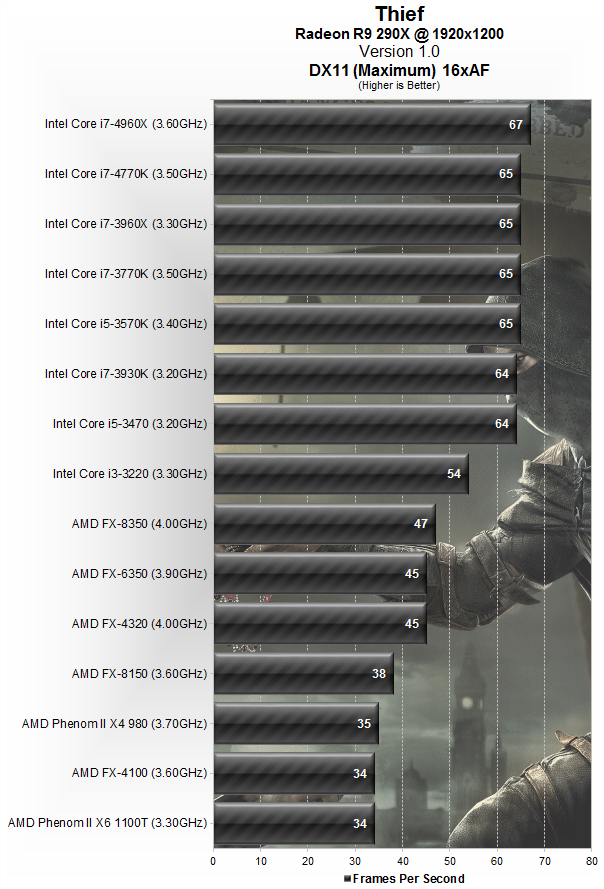

So what you experience is that, under normal play, the Intel i5 (any of them) renders higher FPS than the fx8350 because its advantage in single-thread performance accelerates those two heavy threads. Turn on the streaming software, enforce a static Q value with the algorithm set to render on four threads, and you'll see some interesting things happen. The fx8350 slows down only slightly, due to the 10~20% penalty it takes when both cores on a module are active. The i5 takes a big hit, as it has to share its four cores between the compression software and the active game. Most benchmarks we use today leave large portions of the CPU underutilized. This is evident when you see i3's scoring similarly to i5's (same clock rate) even though the i3 is, quite literally, half the CPU of an i5.