Will my i7 2600k running at stock speeds bottleneck a GTX 1080?

- Thread starter redness


Solution

redness :

Ecky :

Any CPU will bottleneck any GPU sometimes, but it probably won't matter, because a 2600K will still deliver an excellent experience in the vast majority of games.

Even at stock speed?

Yes.

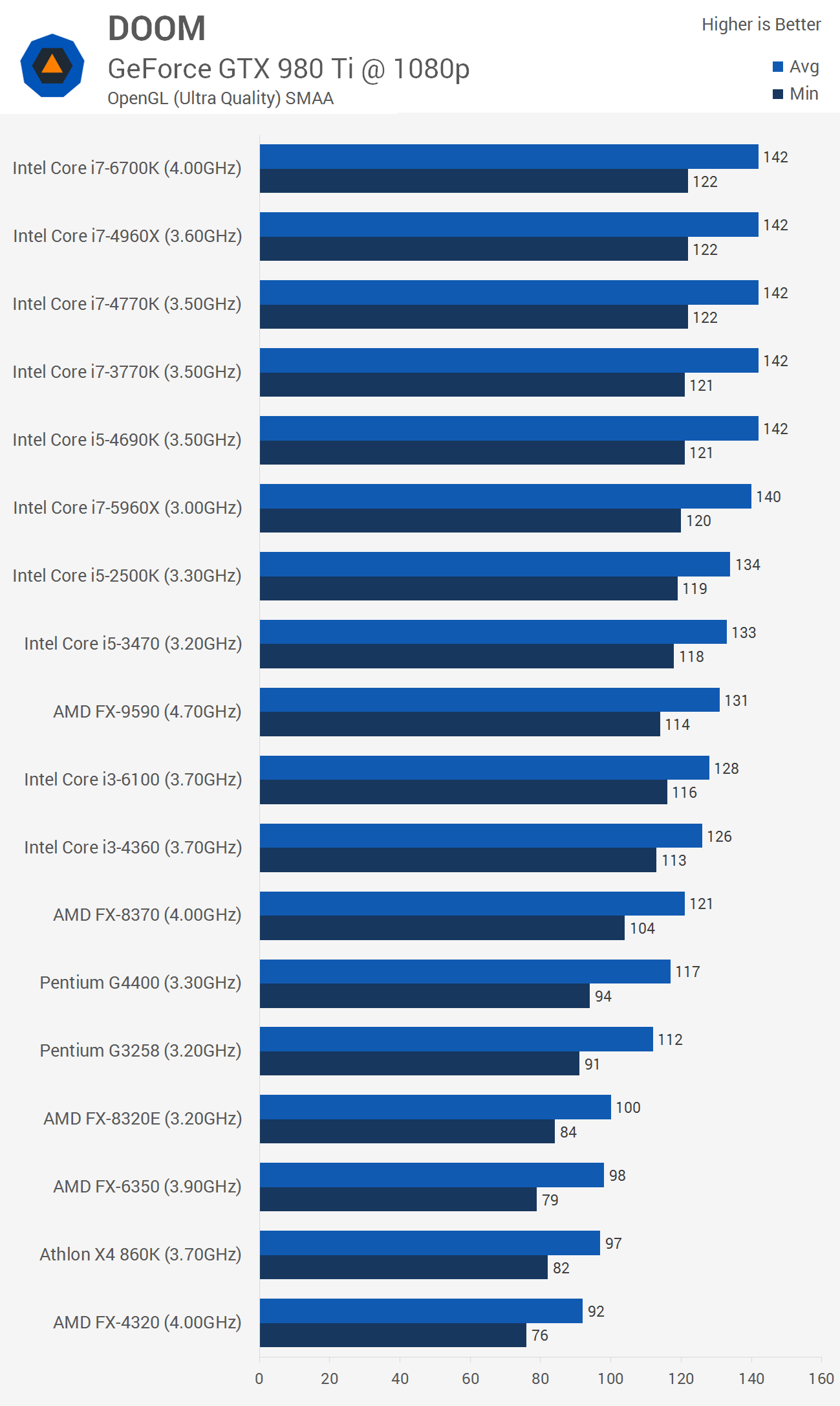

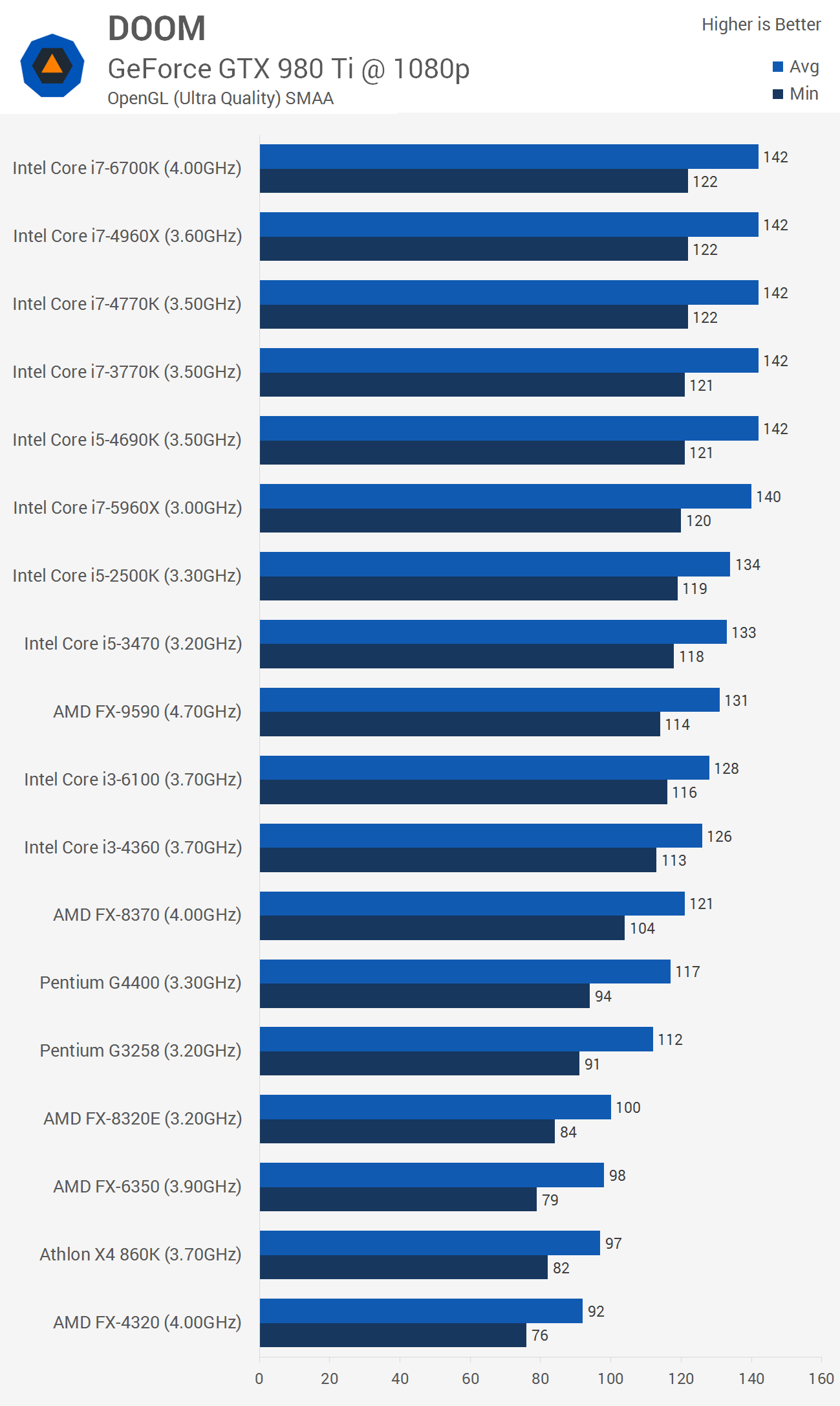

Here's an example from Doom:

Notice how all the i7's going back to the 3770k [the 2600k wasn't benchmarked, but likely performs the same] cap out at about 140 FPS? That's a GPU bottleneck; the GPU simply can't render frames any faster, even though the CPU can. So you can guesitmate, based on 3770k/2500k performance levels, that a 2600k could push at least 140 FPS in DOOM, and likely more.

Point being, pretty much every game benchmark review shows that Intel i7 CPUs...

I highly doubt you will have any bottleneck issue, especially if you're overclocking that CPU, so you should be fine with the GTX 1080. As far as heat goes, what's your typical ambient temp (room temp), and what is your budget for an aftermarket CPU cooler? (If you don't have one already.)

While I understand what you mean (I also live in a tropical climate), nothing you do on your PC will break it. The Sandy Bridge platform has enough fail-safe mechanisms to protect the CPU from damage, unless you somehow disable them. The motherboard, however, has none. So chances are, if anything breaks, it will be the motherboard.

To answer your question, yes, your CPU will bottleneck it. However, not so much that the 1080 gets outperformed by a card two or more tiers below it, even one paired with a far better CPU.

Ecky

Illustrious

rush21hit :

gamerk316 :

A stock 2600k is still fine; GPU will bottleneck before the CPU ever will.

Until you run 3x1080p with all guns blazing..

Not sure what you mean. More pixels = more GPU load, doing this you're likely to run into a GPU bottleneck first.
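To put rough numbers on "more pixels = more GPU load", here is a quick pixel count in Python. It assumes "3x1080p" means three 1920x1080 monitors side by side and uses 3840x2160 as a 4K reference point. GPU work doesn't scale perfectly with pixel count, so treat the ratios as a rough guide only.

```python
def pixels(width: int, height: int, screens: int = 1) -> int:
    """Total pixels the GPU has to render per frame across all screens."""
    return width * height * screens


# Assumption: "3x1080p" = three 1920x1080 monitors in a surround setup.
single_1080p = pixels(1920, 1080)             # 2,073,600 pixels
triple_1080p = pixels(1920, 1080, screens=3)  # 6,220,800 pixels
uhd_4k = pixels(3840, 2160)                   # 8,294,400 pixels

print(f"3x1080p vs 1x1080p: {triple_1080p / single_1080p:.1f}x the pixels")
print(f"3x1080p vs 4K:      {triple_1080p / uhd_4k:.0%} of the pixels")
```

Tripling the screen count triples the pixels per frame, which lands at 75% of a single 4K screen, so the extra load falls mostly on the GPU.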

A 2600K will bottleneck any video card sometimes, but in the vast majority of games it won't matter, because your framerate will still be plenty high.

Regarding overclocking, I wouldn't worry. Intel CPUs are notoriously hard to kill.

WildCard999 :

I highly doubt you will have any bottleneck issue, especially if you're overclocking that CPU, so you should be fine with the GTX 1080. As far as heat goes, what's your typical ambient temp (room temp), and what is your budget for an aftermarket CPU cooler? (If you don't have one already.)

I do have one. I have a 212x evo. You think I'll be safe to overclock now?

As for my ambient room temperature, I dunno. It's hot enough that my dog wants to get out of my room.

rush21hit :

To answer your question, yes, your CPU will bottleneck it. However, not so much that the 1080 gets outperformed by a card two or more tiers below it, even one paired with a far better CPU.

Oh nooooooo. I thought it would be fine 🙁

Ecky

Illustrious

Most people don't have a clear understanding of how CPU and GPU bottlenecks work, it seems. In simple terms:

In a given scene, there will be a certain amount of CPU power needed for a given framerate. I'll make up some numbers: your CPU may be able to deliver 90fps at stock clocks in a particular scene in a game. If you drop in a GTX 1080, at low settings, it may be able to deliver 200fps, so your CPU will be bottlenecking you at 90fps. However, if you crank up the graphical settings, the CPU will still be able to deliver 90fps, but the GPU may only deliver 50fps, so you're now "GPU bottlenecked". One is always bottlenecking the other, or you'd have infinite frames per second.
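Those made-up numbers fit a tiny model: the framerate you actually see is roughly whichever of the two limits is lower, and that component is the bottleneck. This is a simplification (real games vary frame to frame, and CPU and GPU work overlap), and the 90/200/50 fps figures are the same hypothetical values from the post.

```python
from __future__ import annotations


def effective_fps(cpu_fps: float, gpu_fps: float) -> tuple[float, str]:
    """Return the delivered framerate and which component limits it.

    Simplified model: every frame needs both CPU and GPU work, so the
    slower of the two sets the pace.
    """
    if cpu_fps < gpu_fps:
        return cpu_fps, "CPU"
    if gpu_fps < cpu_fps:
        return gpu_fps, "GPU"
    return cpu_fps, "balanced"


# Hypothetical numbers from the post: the CPU manages 90 fps in this scene.
low_settings = effective_fps(cpu_fps=90, gpu_fps=200)
high_settings = effective_fps(cpu_fps=90, gpu_fps=50)
print(low_settings)   # (90, 'CPU')
print(high_settings)  # (50, 'GPU')
```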

The amount of CPU and GPU power needed is constantly varying. The goal is to have enough of both to get the framerates you want, at the graphical settings you want. However, you can always reduce GPU load by lowering graphics, but you can't do anything about it if your CPU is limiting you to a framerate that's less than you want, other than overclock or replace the chip.
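The asymmetry described there can be sketched by sweeping graphics presets against a fixed CPU limit: lowering settings raises the GPU's ceiling, but the delivered framerate never climbs past the CPU's. The preset names, their fps values, and the overclocked CPU limit below are all hypothetical, chosen only to illustrate the point.

```python
from __future__ import annotations

# Hypothetical GPU-limited framerates for one scene at each graphics preset.
GPU_FPS_BY_PRESET = {"ultra": 50, "high": 75, "medium": 120, "low": 200}


def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    # Simplified model: the slower component sets the framerate.
    return min(cpu_fps, gpu_fps)


def sweep(cpu_fps: float) -> dict[str, float]:
    """Delivered fps at every preset for a given CPU limit."""
    return {preset: delivered_fps(cpu_fps, gpu)
            for preset, gpu in GPU_FPS_BY_PRESET.items()}


stock = sweep(cpu_fps=90)         # assumed CPU limit at stock clocks
overclocked = sweep(cpu_fps=110)  # assumed CPU limit after an overclock

for preset in GPU_FPS_BY_PRESET:
    print(f"{preset:>6}: stock {stock[preset]:>3} fps, "
          f"overclocked {overclocked[preset]:>3} fps")
```

Going from medium to low does nothing at stock clocks because both presets sit above the CPU's 90 fps; only raising the CPU limit moves those numbers, while ultra and high stay GPU-bound either way.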

Any CPU will bottleneck any GPU sometimes, but it probably won't matter, because a 2600K will still deliver an excellent experience in the vast majority of games.

You should be fine to overclock, just keep an eye on your temps. Here's a guide to overclocking that CPU.

http://www.tomshardware.com/forum/265056-29-2600k-2500k-overclocking-guide

Ecky

Illustrious

redness :

Ecky :

Any CPU will bottleneck any GPU sometimes, but it probably won't matter, because a 2600K will still deliver an excellent experience in the vast majority of games.

Even at stock speed?

I would imagine so, yes. In cases where it DOES bottleneck, you can increase your framerates with an overclock. It just depends on what your expectations are, and what game you're playing.

gamerk316

Glorious

redness :

Ecky :

Any CPU will bottleneck any GPU sometimes, but it probably won't matter, because a 2600K will still deliver an excellent experience in the vast majority of games.

Even at stock speed?

Yes.

Here's an example from Doom:

Notice how all the i7s going back to the 3770k [the 2600k wasn't benchmarked, but likely performs the same] cap out at about 140 FPS? That's a GPU bottleneck; the GPU simply can't render frames any faster, even though the CPU can. So you can guesstimate, based on 3770k/2500k performance levels, that a 2600k could push at least 140 FPS in DOOM, and likely more.
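The reasoning there (several different CPUs landing on the same framerate means the GPU is the cap, so each of those CPUs can manage at least about that much) can be sketched as below. The CPU names and fps values are placeholders, not the actual benchmark figures, and the 3% tolerance for "the same framerate" is an arbitrary choice.

```python
from __future__ import annotations


def gpu_plateau(results: dict[str, float],
                tolerance: float = 0.03) -> tuple[float, list[str]]:
    """Return the top framerate and every CPU within `tolerance` of it.

    If several different CPUs land on roughly the same top framerate, the
    GPU is the likely cap, so each of those CPUs can push at least about
    that many frames per second on its own.
    """
    top = max(results.values())
    capped = [cpu for cpu, fps in results.items()
              if fps >= top * (1 - tolerance)]
    return top, capped


# Placeholder fps results for hypothetical CPUs; NOT real benchmark data.
results = {"cpu_a": 141, "cpu_b": 140, "cpu_c": 139, "cpu_d": 112}
cap, capped_cpus = gpu_plateau(results)

if len(capped_cpus) > 1:
    print(f"Likely GPU cap near {cap} fps; CPUs at the cap: {capped_cpus}")
else:
    print("No plateau: the top result may just be the fastest CPU")
```

A single CPU at the top proves nothing on its own; the plateau only points at the GPU when several chips share it. A CPU missing from the chart (the 2600k here) can only be placed by analogy with a similar chip, as the post does with the 3770k.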

Point being, pretty much every game benchmark review shows that Intel i7 CPUs are not CPU bottlenecked, going back to the 2000 series. You might lose a handful of FPS compared to a newer architecture, but no more than that.

Space.com is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.