The Q6660 Inside

palladin9479:

blackkstar:

gamerk316:

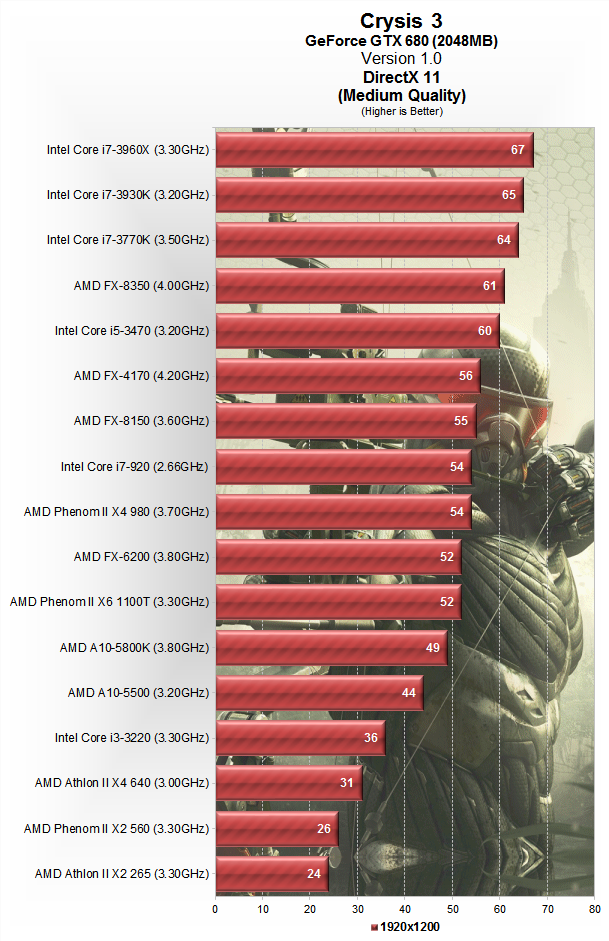

For the most part though (Crysis 3 aside, and I've made my points on that approach very clear), notice how AMD doesn't gain a performance lead. Why? Because the CPU isn't driving performance, and it hasn't been for some time now. I'd imagine a C2Q/PII could still pump out some surprising numbers if anyone was willing to test them...

I don't get your fixation with Crysis 3. They offloaded the work from one thing that was bottlenecking performance (the GPU) and placed it on something that wasn't bottlenecking (all those extra CPU cores that are sitting around doing nothing).

If you have a bottleneck, you move things away from what's bottlenecking and put them on things that aren't bottlenecking.

If you're being bottlenecked by the GPU, you don't keep giving the GPU more work and the rest of the system less; that's not an efficient use of system resources at all. Ideally you'd want the entire system at 100% utilization, as that would mean there were no specific bottlenecks and the system was running optimally.
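To make the bottleneck argument concrete, here is a toy model. It is an assumption, not how Crysis 3 or any real engine actually schedules work: if CPU and GPU work overlap in a pipeline, each frame takes as long as the slower stage. The function names and millisecond figures are hypothetical and chosen only to show when moving work off the GPU helps and when it backfires.

```python
# Toy pipelined-frame model (assumption): the CPU prepares frame N+1 while the
# GPU renders frame N, so the slower of the two stages sets the frame time.

def frame_time_ms(cpu_ms: float, gpu_ms: float) -> float:
    """Per-frame time when CPU and GPU stages fully overlap."""
    return max(cpu_ms, gpu_ms)


def offload_to_cpu(cpu_ms: float, gpu_ms: float,
                   gpu_work_ms: float, cpu_cost_ms: float) -> tuple[float, float]:
    """Move a task that costs gpu_work_ms on the GPU over to the CPU.

    cpu_cost_ms is the extra wall-clock time the task adds to the CPU stage
    (hypothetically after being spread across otherwise idle cores).
    Returns the new (cpu_ms, gpu_ms) pair.
    """
    return cpu_ms + cpu_cost_ms, gpu_ms - gpu_work_ms


def fps(frame_ms: float) -> float:
    """Frames per second for a given per-frame time in milliseconds."""
    return 1000.0 / frame_ms


if __name__ == "__main__":
    cpu, gpu = 10.0, 20.0                      # GPU-bound: 20 ms/frame, 50 fps
    print(frame_time_ms(cpu, gpu), fps(frame_time_ms(cpu, gpu)))

    good = offload_to_cpu(cpu, gpu, gpu_work_ms=4.0, cpu_cost_ms=6.0)
    print(good, frame_time_ms(*good))          # (16.0, 16.0) -> 16 ms/frame

    bad = offload_to_cpu(cpu, gpu, gpu_work_ms=4.0, cpu_cost_ms=15.0)
    print(bad, frame_time_ms(*bad))            # (25.0, 16.0) -> now CPU-bound
```

In this toy model, offloading only pays off while the receiving side has slack. Once the CPU stage becomes the longer one, the bottleneck has just moved.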

Yes, I realize you think you're some sort of awesome game developer and your mind is exploding because they did something non-traditional, but the bottom line is that they relieved the GPU of some work while the GPU is bottlenecking the system. That is what you want to do to maximize performance, since it frees up the GPU to do more work.

He's fixated on it because Crysis 3 is a game that totally broke his "games can't use more than 1~2 cores!" statement. Essentially, Gamer was arguing that the only performance that really mattered was single-thread performance, because programs are too hard to make multi-threaded.
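The single-thread vs. multi-thread argument is what Amdahl's law quantifies: if a fraction p of the work can run in parallel on n cores, the best-case speedup is 1 / ((1 - p) + p / n). A quick sketch follows. The parallel fractions are made-up illustrations, not measurements of Crysis 3 or any other game, and the formula ignores threading overhead.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup from Amdahl's law (ignores synchronization/threading overhead)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: half serial vs. well-threaded.
    for p in (0.5, 0.9):
        for n in (2, 4, 8):
            print(f"p={p:.1f} cores={n}: {amdahl_speedup(p, n):.2f}x")
```

With half the work serial, eight cores barely beat four (about 1.78x vs. 1.60x). At 90% parallel, eight cores give roughly 4.7x. So whether extra cores pay off depends on how much of the engine is actually parallelized.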

A while back I made the statement that the era of "all you need is a dual core" is officially over. Tek Syndicate also released some results that pretty much had the FX-8350 winning lots of high-end gaming benchmarks against the "favored CPUs", especially when you started live-streaming. They broke out of the sterile "clean room" environment, actually benchmarked the way we play, and got different results than the other sites. This was similar to what happened when BF3 was benchmarked in multiplayer on large maps versus single-player mode.

Basically, there are many things you can use additional processor resources for, and developers are starting to develop for that. Opinions and sports-team loyalty will not stop the progress of technology. That is what makes SR (Steamroller) exciting: AMD is getting over the initial R&D curve with its new uArch and is now tweaking it and tightening it down, lowering latencies, lowering power usage, and finding bottlenecks and inefficiencies and tightening them up.

+1 This is the end-all be-all answer to basically all the Intel fanboys on this thread and the reason for the thread in the first place.