8350rocks

Distinguished

i3s can't keep up with the FX 6300... they won't hold a candle to the 8350.

Comparison:

http://us.hardware.info/reviews/3314/23/amd-fx-8350--8320--6300-vishera-review-finally-good-enough-fx-8350-vs-i5-3550--fx-6300-vs-i3-3220

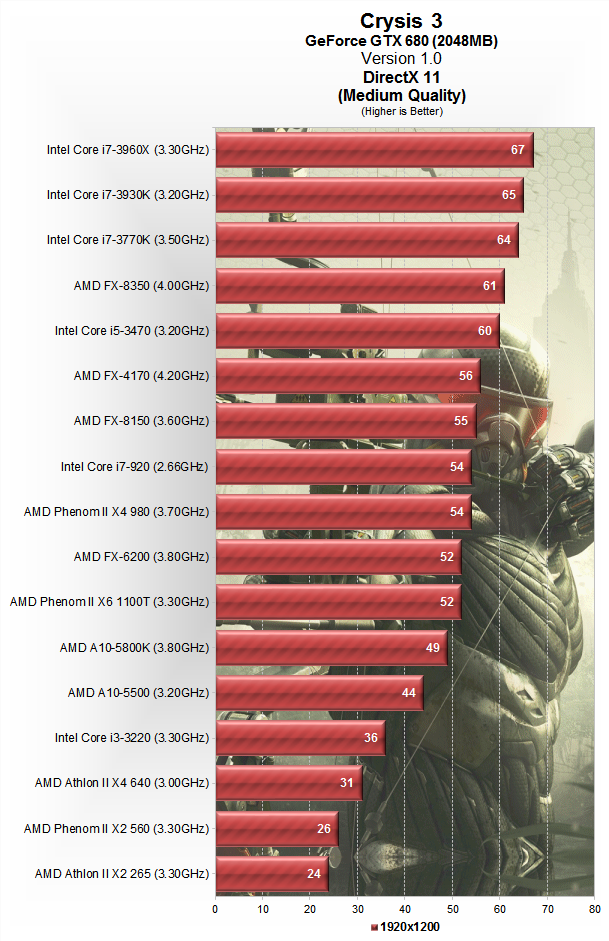

Crysis 3 FPS numbers... note that the i3 paired with a GTX 690 can barely break 30 FPS, and that isn't fine.

This shows core usage... the i3 is clearly a bottleneck, and the FX 4300 is at full usage but just barely not a bottleneck. The 8350, meanwhile, averages about 70-80% usage across all of its cores.

EDIT: If you account for the 2600K using Hyper-Threading and pulling resources from the physical cores, the average usage per core on the 2600K is 89%, which is about what you should expect to see across all 4 cores of a 3570K.

The 8350 averages 75.8% utilization across all 8 cores, which means it has headroom. The only Intel chip in the data above with similar or greater headroom is the 3970X. It does have more headroom than the 8350, at 50.5% utilization, but once you factor in Hyper-Threading, the margin is smaller than you might think.
