I disagree, in that it's a modern, 64-core server CPU with 8-way superscalar cores, 2x 256-bit vector FP, 8-channel DDR5, and PCIe 5.0. That should give you a ballpark idea of where performance is at. For a more detailed comparison, people can go find Graviton 3 benchmarks, or even run their own!

The power data, on the other hand, is less readily available. Here's my source on it, BTW:

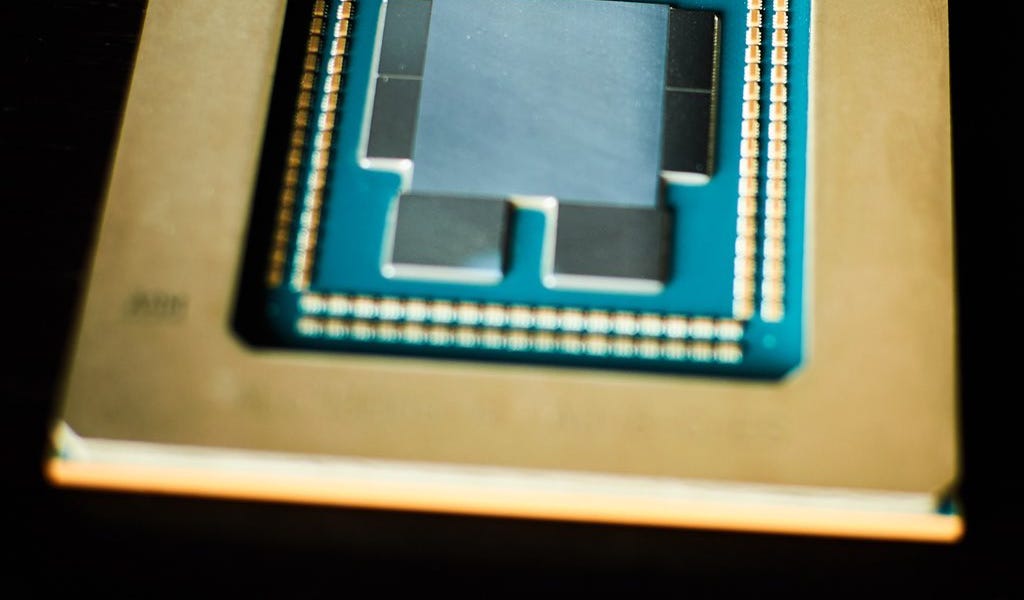

Shots are being fired at merchant silicon. Amazon's Graviton3 completely commoditizes generalized CPU compute while also bringing advanced packaging, PCIe 5.0, and DDR5 to the server market ~6 months before Intel and AMD. Server and rack level system architecture choices propel Graviton 3 to new...

www.semianalysis.com

While I'm at it, here are some benchmarks. If you want better ones, find them (or run them) yourself:

www.phoronix.com

There was a follow-on exploration of compiler tuning:

www.phoronix.com