Palladin is wrong and it looks like you haven't even understood his disingenuous arguments.

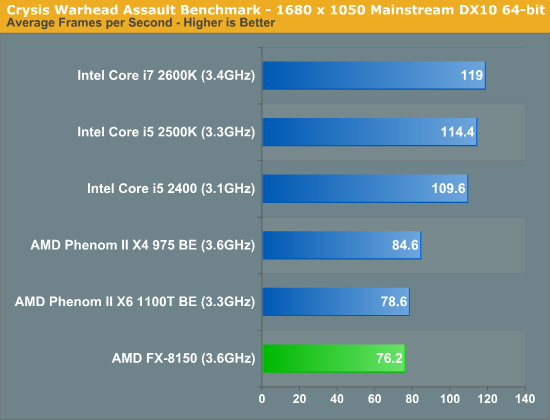

Bulldozer has a clock speed advantage over SB, it has a core advantage, it has pricing, thermals and energy use that one could live with, so where does it fall down against SB, other than clearly on IPC?

Well, I agree on the IPC disadvantage, but you're forgetting the process advantage Intel has. It's a ridiculously big advantage, so BD took its most painful kick right there: as a consequence, it draws more power at the clock speeds it needs to be at least "competitive" with the new approach, which brings the heat problems along with it. They can't clock it any higher, so at the end of the road it just trails everything on the market, including its own previous gen.

Just to add a little more to that: "low IPC" is itself the result of bad scheduling and cache on the design and construction side. Intel has an obvious advantage in process, and AMD bet on GF delivering a better 32nm after the Llano expedition... It wasn't good enough.

So, all in all, IPC is not the "only one": there's also process and, digging a little deeper into the IPC argument, some key problems in the construction itself. So palladin is not wrong in what he states, since that's actually how a CPU works.

The cache problems could be a bad design call or just a problem with the construction/process. The good thing is that both can be fixed down the road, which should show up as higher IPC (just a little, maybe), but the most important fix will be a mature 32nm process IMO (higher clocks, not stupidly high consumption).

It's all tangled up in the same/similar argument and hard to tell apart, but if you want to summarize the "BD fail", IPC alone is not enough IMO.
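To see why a clock advantage doesn't automatically cancel an IPC deficit, here's a rough back-of-the-envelope sketch. It only uses the basic relation that instructions per second is IPC times clock. The function name and every number in it are made up for illustration; they are not measurements of BD, SB, or any real chip.

```python
def single_thread_throughput(ipc: float, clock_ghz: float) -> float:
    """Billions of instructions per second for one thread: IPC x clock (GHz)."""
    return ipc * clock_ghz


# Hypothetical chips, NOT real benchmark data.
# Chip A: higher IPC, lower clock. Chip B: lower IPC, higher clock.
chip_a = single_thread_throughput(ipc=1.00, clock_ghz=3.4)
chip_b = single_thread_throughput(ipc=0.75, clock_ghz=4.2)

print(f"chip A: {chip_a:.2f} G instr/s")
print(f"chip B: {chip_b:.2f} G instr/s")
print("chip B ahead" if chip_b > chip_a else "chip A ahead")
```

With those invented numbers, chip B clocks roughly 24% higher but gives up 25% in IPC, so it still lands behind. And if the process won't let you push the clock any further without the power draw going through the roof, there is nothing left to close the gap with.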

but we are still limited by software not being able to use more cores effectively

Ugh, to be honest, I side with gamerk a little there... More cores won't solve what software design can't. Parallelism is not a trivial problem to solve, even more so when most programs have to be designed with parallelism in mind from the beginning (I'm thinking of current APIs and frameworks that are not well threaded).

At some point, you can thread stuff (thinking about a single program at a time) very well, but you'll always hit the "sync wall". For a lot of parallelism, you need to coordinate the threads and watch them for balance and overlap (what you have to watch depends on how you thread). That's an OS + programming problem. The more fine tuning you do and the more parallelism you want, the more difficult it gets for a CPU. Add to that everything else the computer is running on a daily basis, and the OS takes the ball with whatever scheduling approach it chooses (at least in Linux you can see what you want to use and how to use it).
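One common way to put a number on that "sync wall" is Amdahl's law: whatever part of a program has to run serially (waiting on locks, joining threads, and so on) caps the speedup no matter how many cores you add. A minimal sketch, assuming a program that is 80% parallelizable; that figure and the function name are invented for illustration, not taken from any real workload.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only part of the work can be threaded."""
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time on any core count;
    # only the parallel part is divided among the cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 80% of the run time can be threaded (hypothetical figure).
for cores in (1, 2, 4, 8, 16):
    print(f"{cores:2d} cores -> {amdahl_speedup(0.8, cores):.2f}x")
```

Under that assumption, 4 cores give 2.5x and 16 cores only about 4x, and the curve can never pass 5x because the serial 20% is always there. This is the ideal case too: it ignores the cost of the synchronization itself and whatever else the OS is scheduling at the same time.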

Balance is the key word here. Intel is sitting comfortably at 4 solid cores on the desktop at the moment, because the industry is not pushing for more than that. It will change down the road, and unfortunately AMD doesn't have the leverage to make it happen, but we'll get there eventually, hahaha.

Cheers!