And why is it that CPUs have lower memory bandwidth in the first place?

The main reason is that they simply don't need more, at the consumer tier. The number of memory channels starts to get a little silly with the bigger server CPUs, but the main issue for servers is capacity.

Once CPUs have on-package memory as mainstream, the memory can be whatever the manufacturer wants it to be. Had Apple wanted to, it could have gone 2-4xHBM3E.

Cost. There's a reason consumer GPUs use GDDR memory and not HBM. It's also the main reason Nvidia used LPDDR5X in Grace, rather than the HBM we might have expected.

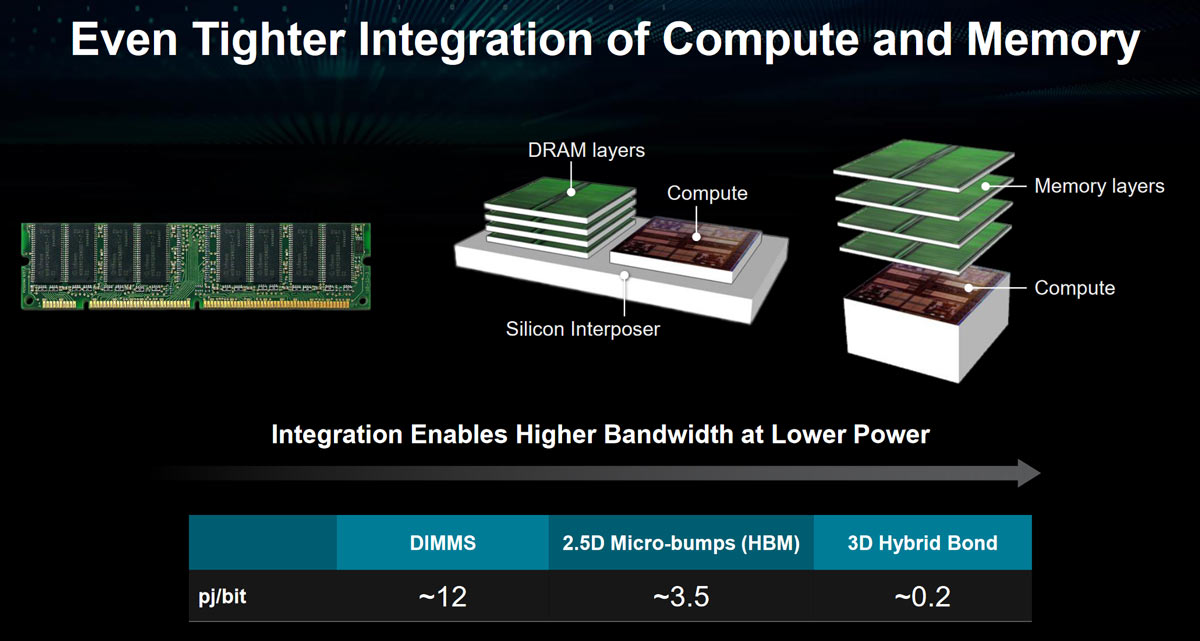

"Power efficiency and memory bandwidth are both critical components of data center CPUs. The NVIDIA Grace CPU Superchip uses up to 960 GB of server-class low-power DDR5X (LPDDR5X) memory with ECC. This design strikes the optimal balance of bandwidth, energy efficiency, capacity, and cost for large-scale AI and HPC workloads.

Compared to an eight-channel DDR5 design, the NVIDIA Grace CPU LPDDR5X memory subsystem provides up to 53% more bandwidth at one-eighth the power per gigabyte per second while being similar in cost. An HBM2e memory subsystem would have provided substantial memory bandwidth and good energy efficiency but at more than 3x the cost-per-gigabyte and only one-eighth the maximum capacity available with LPDDR5X.

The lower power consumption of LPDDR5X reduces the overall system power requirements and enables more resources to be put towards CPU cores. The compact form factor enables 2x the density of a typical DIMM-based design."

The bandwidth they get to their directly-connected LPDDR5X is only about 546 GB/s (32 channels, so presumably a 512-bit interface?). So the bandwidth tradeoff vs. HBM is real, and yet for reasons of capacity and cost they went with LPDDR5X.
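For what it's worth, the 546 GB/s figure does fall out of simple arithmetic if you assume 16-bit LPDDR5X channels running at 8533 MT/s. Neither number comes from the Nvidia quote above, so treat this as a back-of-the-envelope sketch rather than a confirmed spec; the function name is just for illustration.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak bandwidth in decimal GB/s for a bus width and data rate."""
    bytes_per_transfer = bus_width_bits / 8
    # bytes per transfer * mega-transfers per second = MB/s; divide by 1000 for GB/s
    return bytes_per_transfer * transfer_rate_mts / 1000


# Assumptions (not stated in the quoted Nvidia text):
CHANNELS = 32            # channel count guessed in the post
CHANNEL_WIDTH_BITS = 16  # assumed width of one LPDDR5X channel
DATA_RATE_MTS = 8533     # assumed LPDDR5X data rate in MT/s

bus_width = CHANNELS * CHANNEL_WIDTH_BITS
grace_bw = peak_bandwidth_gbs(bus_width, DATA_RATE_MTS)
print(f"{bus_width}-bit @ {DATA_RATE_MTS} MT/s -> {grace_bw:.0f} GB/s")
```

Under those assumptions the interface works out to 512 bits and roughly 546 GB/s, which lines up with the number above. It is a theoretical peak, not sustained throughput.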

Getting back to the premise of replacing dGPUs, I find it a little hard to swallow that we're going to put a 500+ W, $1.5k monster GPU + a 320 W, $800 gaming CPU + probably like $500 of HBM in a single package that can only be cooled with chilled water and that you have to completely toss out if any part of it breaks or you want to upgrade your memory, CPU, or GPU. That's why I think iGPUs will be limited to laptops and low-to-mid-range desktops. Or exotic server chips like AMD's MI300.

Like the dinosaurs that ruled the earth for millions of years, dGPUs are very good at what they do. It will similarly take an industry-smashing asteroid to make them go extinct.