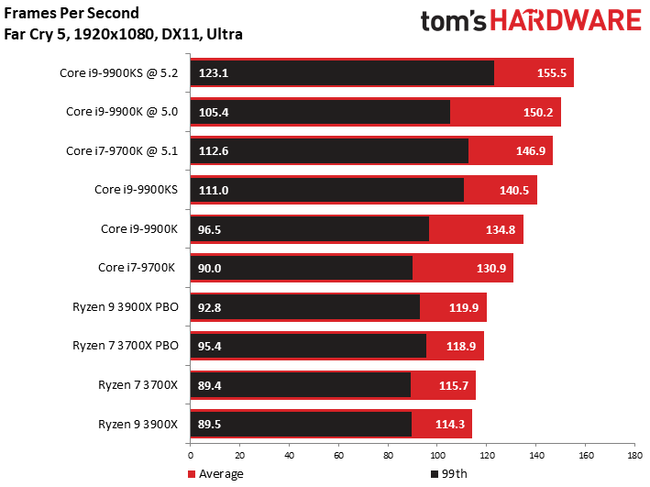

Intel does hold a slight edge in gaming at the very top end, but even then, the benefits of AMD CPUs outside of that easily outweigh such a slight lead. They have a better upgrade path too, as AMD promises existing motherboards will continue to work with new AMD chips in 2020.

What gaming edge would Intel have at the top end?

https://www.tomshardware.com/reviews/intel-core-i9-9900ks-special-edition-review/6

My 3800X, with the RAM overclocked to 3800MT/s CL15 and an overclocked RTX 2080, averages 174.910 FPS in Fraps. The cores are at stock; the only overclock is on the RAM side, with the Infinity Fabric (IF) at 1900MHz. My 3600MT/s 8-Pack B-die kit can't even do CL14. Basically, I get away with this OC because AMD's stock boost algorithm keeps it all stable. Hopefully forever.
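A quick check of the RAM numbers above, as a minimal sketch using the standard DDR arithmetic (not anything measured in this thread): DDR moves two transfers per clock, so 3800MT/s is a 1900MHz memory clock, which matches the 1900MHz IF for a 1:1 ratio. First-word latency in nanoseconds is CL × 2000 / (MT/s). The function names are just illustrative.

```python
def memory_clock_mhz(transfer_rate_mts: float) -> float:
    """DDR transfers twice per clock, so the real memory clock is half the MT/s rating."""
    return transfer_rate_mts / 2


def first_word_latency_ns(cas_latency: int, transfer_rate_mts: float) -> float:
    """CAS latency in nanoseconds: CL cycles at a clock period of 2000 / (MT/s) ns."""
    return cas_latency * 2000 / transfer_rate_mts


fclk_mhz = 1900  # Infinity Fabric clock quoted in the post
mclk = memory_clock_mhz(3800)
print(f"MCLK {mclk:.0f} MHz, FCLK {fclk_mhz} MHz, 1:1 = {mclk == fclk_mhz}")
print(f"3800MT/s CL15: {first_word_latency_ns(15, 3800):.2f} ns")
print(f"3600MT/s CL14: {first_word_latency_ns(14, 3600):.2f} ns")
```

On these numbers, CL15 at 3800MT/s works out to about 7.89 ns versus about 7.78 ns for CL14 at 3600MT/s, so the higher-clocked setting gives up roughly 0.1 ns of first-word latency while keeping the fabric at 1:1.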

For the benchmark, I started Fraps as close as possible to the moment the numbers began incrementing and stopped it as close as humanly possible to the moment they stopped.

The 9900K @ 5GHz with 3600MT/s RAM is paired with an RTX 2080 Ti. Its average is 176.3 FPS. All the CPU scores in that bar chart use the RTX 2080 Ti.

Intel has a 1.4 FPS lead with a faster GPU. Or I did something wrong.
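Putting the two averages side by side as a small sketch (the helper name is hypothetical; the numbers are the ones quoted above):

```python
def fps_lead(fps_a: float, fps_b: float) -> tuple[float, float]:
    """Return (absolute FPS difference, difference as a percentage of fps_b)."""
    delta = fps_a - fps_b
    return delta, 100 * delta / fps_b


intel_9900k_2080ti = 176.3  # 9900K @ 5GHz + RTX 2080 Ti (Tom's Hardware chart)
amd_3800x_2080 = 174.910    # 3800X RAM OC + RTX 2080 OC (Fraps average)
delta, pct = fps_lead(intel_9900k_2080ti, amd_3800x_2080)
print(f"Intel lead: {delta:.2f} FPS ({pct:.2f}%)")
```

That is under 1%, with a faster GPU on the Intel side, so the gap is small enough that manual Fraps start/stop timing could plausibly account for part of it.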

Here is my 3800X with an RTX 2080 vs. the mighty 9900KS with 3600MT/s RAM and an RTX 2080 Ti.

My 3800X OC and RTX 2080 OC net 147 FPS at 1080p Ultra, with nice 114 FPS lows.

World of Tanks EnCore RT

46115 / 166.66 = 276.70 FPS. Not sure about this one; I was expecting Intel to win hands down.
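The FPS figure above is simply the enCore RT result divided by 166.66. A minimal sketch reproducing that division; it treats the two numbers exactly as the post does and does not assume what 166.66 represents inside the benchmark (the function name is hypothetical):

```python
def average_fps(result: float, divisor: float) -> float:
    """Divide the benchmark result by the divisor used in the post, rounded to 2 decimal places."""
    return round(result / divisor, 2)


print(average_fps(46115, 166.66))
```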

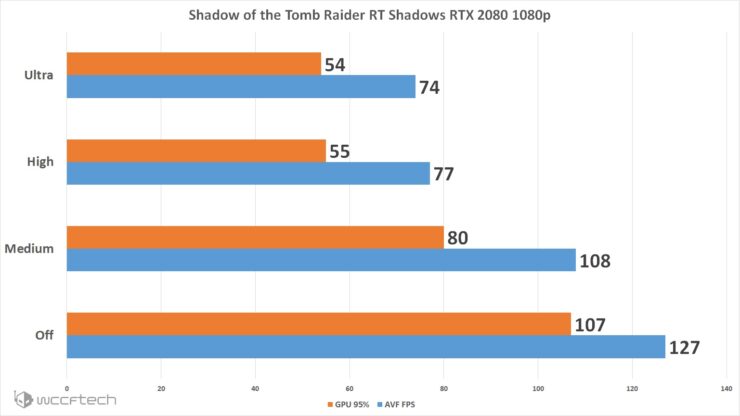

Shadow of the Tomb Raider

1080p

The 9900K system:

| Components | Z370 |
|---|---|
| CPU | Intel Core i9-9900K @ 5GHz |
| Memory | 16GB G.Skill Trident Z DDR4 3200 |
| Motherboard | EVGA Z370 Classified K |
| Storage | Crucial P1 1TB NVMe SSD |
| PSU | Cooler Master V1200 Platinum |

https://wccftech.com/shadow-of-the-tomb-raider-ray-traced-shadows-and-dlss-performance/

127 FPS @ 1080p

3800X OC with RTX 2080: 141 FPS

We have the final piece in Nvidia's Super puzzle. Coming in at the same $700 price point, the new GeForce RTX 2080 Super offers some performance increases,...

www.techspot.com

For testing we’ve used our usual gaming rig comprised of an Intel Core i9-9900K clocked at 5 GHz with 32GB of DDR4-3400 memory.

4K

3800X OC with RTX 2080: average 56 FPS, 95%: 50 FPS

Assassin's Creed Origins

https://www.gamersnexus.net/hwrevie...paste-delid-gaming-benchmarks-vs-2700x/page-4

3800X with RAM OC and RTX 2080: 132 FPS average, 78 FPS 0.1% low.

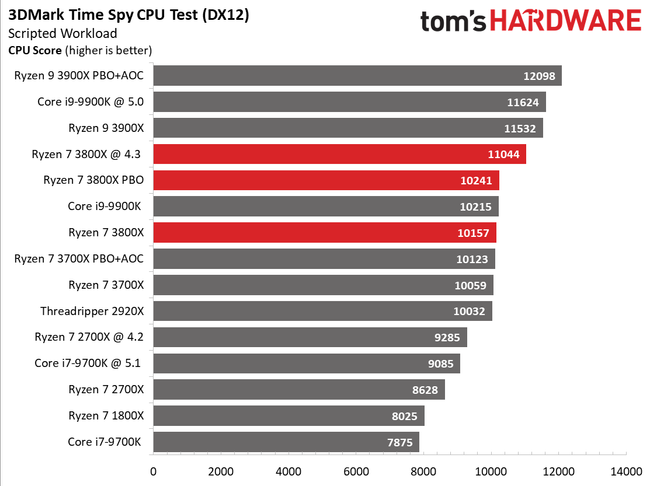

Time Spy CPU

https://www.tomshardware.com/uk/reviews/amd-ryzen-7-3800x-review,6226-2.html

9900K @ 5GHz with DDR4-3466

Fastest run, 3800X OC:

Best out of 15 runs; the 3800X's boost behaves oddly in 3DMark.

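| Run | Time Spy CPU score |
|---|---|
| 1 | 11508 |
| 2 | 11373 |
| 3 | 11373 |
| 4 | 11373 |
| 5 | 11573 |
| 6 | 11506 |
| 7 | 11526 |
| 8 | 11504 |
| 9 | 11503 |
| 10 | 11495 |
| 11 | 11510 |
| 12 | 11525 |
| 13 | 11379 |
| 14 | 11527 |
| 15 | 11361 |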
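A small sketch summarising the 15 Time Spy CPU runs listed above with Python's standard `statistics` module, to show how far the best run sits from a typical one:

```python
from statistics import mean, median, pstdev

# Time Spy CPU scores for the 15 runs listed above (3800X OC), in run order
runs = [11508, 11373, 11373, 11373, 11573, 11506, 11526, 11504,
        11503, 11495, 11510, 11525, 11379, 11527, 11361]

best = max(runs)
print(f"best {best} (run {runs.index(best) + 1}), worst {min(runs)}")
print(f"mean {mean(runs):.0f}, median {median(runs)}, spread {best - min(runs)}")
print(f"population std dev {pstdev(runs):.1f}")
```

On these numbers the best run (11573, run 5) is roughly 100 points above the mean of about 11469, which fits the point that the "best out of 15" figure is not a typical result.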

Dialing in the CPU.

If the air temperature rises to 21°C, scores drop to a maximum of 11400 in Time Spy CPU:

https://www.3dmark.com/spy/11936283

But if the temperature drops to 5°C, Time Spy CPU increases to a maximum of 11600:

https://www.3dmark.com/spy/11990955
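To put a rough number on that temperature sensitivity, here is a straight line through the two maxima quoted above (about 11600 at 5°C and 11400 at 21°C). This is only a two-point sketch that assumes a linear relationship; the post gives no other data points, and maxima from different sessions are not a controlled test. The function name is hypothetical.

```python
def points_per_degree(t1_c: float, score1: float, t2_c: float, score2: float) -> float:
    """Slope of the straight line through two (ambient temperature, score) points."""
    return (score2 - score1) / (t2_c - t1_c)


slope = points_per_degree(5, 11600, 21, 11400)
print(f"{slope:.1f} Time Spy CPU points per degree C")
```

A negative slope means the score falls as the room warms; here that is roughly 12 to 13 points per °C under the linear assumption.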

Highest score, at 6°C ambient:

https://www.3dmark.com/spy/12006927 (11611 Time Spy CPU). Time Spy's SystemInfo scan can cause issues with the score, and SystemInfo sometimes crashes. This is not the normal average either and should be treated as a rare event. Add a chiller to the water loop and this 'could' be the norm. The main takeaway is that ambient temperature doesn't affect game performance that much. The 3800X will happily hit 4.4GHz or more. It's also cheaper to overclock to 4.4GHz for a great Time Spy CPU score, or to use the EDC bug, than to buy a chiller.

Got to love 3DMark, where the 9900K gets faster as time goes on. This is a more likely 9900K overclock. Love how the stock 9900K is faster by 1000 points and all the overclocked 9900K CPUs are at 5GHz on all cores. 5.1GHz 9900KS CPUs run at 1.4V vcore.

| CPU | Core clock | AVX clock | Vcore | Bin |
|---|---|---|---|---|
| 9900K | 5.00GHz | 4.80GHz | 1.300V | Top 30% |
| 9900K | 5.10GHz | 4.90GHz | 1.312V | Top 5% |

https://siliconlottery.com/pages/statistics

Eight cores at 5GHz, 127W TDP - but is it worth it?

bit-tech.net

A stock 9900K performs the same as a stock 3800X, so this chart shows a big overclock.

Test system (bit-tech.net):

- Motherboard MSI MEG Z390 Ace

- Memory 16GB (2 x 8GB) Corsair Vengeance RGB Pro 3,466MHz DDR4

- Graphics card Nvidia RTX 2070 Super graphics card

- PSU Corsair RM850i

- SSD Samsung 970 Evo

- CPU cooler EKWB Phoenix MLC-240

- Operating system Windows 10 64-bit

See how the 9900K loses to the 3800X OC, while the 3800X equals the 9900KS at stock. Then the 9900KS pulls away with overclocking. Good luck getting a 9900KS, by the way.

Cinebench R20

Single Thread

Multi-Thread

With the 3800X you need good ventilation in the room; an open window works well. Scores can drop as low as 5140 if the room has no ventilation. Outside ambient had dropped to 6°C for this result.

These scores keep you on par with the stock 9900KS, and you beat it in a room with lower temperatures and/or good ventilation. Outside temperature was 16°C for that result.

CPU-Z

https://valid.x86.fr/5x63d4

Just imagine this system with an RTX 2080 Ti, given that I'm trading blows while the 3800X system has just an RTX 2080. It would be nice to bench one if only I could afford an RTX 2080 Ti. The AMD 3800X is the best 8-core gaming CPU (tongue in cheek, but also true to an extent), depending on the silicon lottery and the time you put in. A correctly overclocked 3800X system is no sloth in gaming performance. In games, only the more extreme 9900K/KS overclocks match a 3800X with a RAM overclock and very tight timings.

This is without adding the all-core 4.4GHz overclock on top. If I do, my Time Spy CPU score is ~11800, Cinebench R20 is well above 5300, and CPU-Z multi-core is ~6100.

With the EDC bug it's ~11600-11750 in Time Spy CPU and approx. 5300 in Cinebench R20.

Game scores don't change massively. It really isn't worth overclocking the cores manually for gaming. Maybe an RTX 2080 Ti would show a difference.

The EDC bug is better, but it has more effect on non-gaming benchmarks. If you use the EDC bug with Scalar x10 and Auto OC +200MHz, then things do change: you can hit 4.6GHz on all cores in game.

https://www.youtube.com/watch?v=4_zjsbavV7M

and I hope it will be competition between AMD, Intel, Nvidia and maybe even others down the road.

Really, at this point it's more of the same, which we all know.
