News AMD Zen 4 Ryzen 7000 Specs, Release Date Window, Benchmarks, and More

Page 4 - Seeking answers? Join the Tom's Hardware community: where nearly two million members share solutions and discuss the latest tech.

alceryes

Splendid

I have no intention of throwing gasoline on this discussion, but I just wanted to point out that there is evidence showing that e-cores (in their current iteration) are more of a detriment than a benefit to gaming. Maybe when games themselves become e-core aware (when they can offload something like physics to the e-cores) they can be a benefit, but we're not there yet.

If those p-cores are that good, then I guess that only proves that they are sufficient. Also, consider that my e-cores run at 30-40% during gaming and only around 5% when not gaming.

And what real-life negatives do they have in games? Like, actual, noticeable negatives, not measured ones. Last time I checked, everything pointed toward none.

I have no intention of throwing gasoline on this discussion, but I just wanted to point out that there is evidence showing that e-cores (in their current iteration) are more of a detriment than a benefit to gaming. Maybe when games themselves become e-core aware (when they can offload something like physics to the e-cores) they can be a benefit, but we're not there yet.

https://youtu.be/wqB96Bdsb4M

TerryLaze

Titan

Oh come on now, this only works because he starts with a dual core as a baseline... A DUAL CORE in 2022, with 8 e-cores.

I have no intention of throwing gasoline on this discussion, but I just wanted to point out that there is evidence showing that e-cores (in their current iteration) are more of a detriment than a benefit to gaming. Maybe when games themselves become e-core aware (when they can offload something like physics to the e-cores) they can be a benefit, but we're not there yet.

https://youtu.be/wqB96Bdsb4M

So chop off the cores that Windows wants to run the games on and, lo and behold, suddenly the games run worse... OMG, however did he manage to do that?!

The only thing this video shows is that the thread manager works well, at least with these games, keeping the games on the performance cores only.

Next up, take a 5950X and use only one core on each CCX to play a game, and see how well that goes.

Wolverine2349

Commendable

And what real life negatives do they have in games? Like, actual, noticeable negatives, not measured ones. Last time I checked, everything pointed towards none.

They have lots of real negatives if a game thread gets stuck on one. Ouch. If not, and they are avoided, it's not so bad.

But they drag the ring clock down so much, and a faster ring clock is great for gaming performance.

The e-waste cores stink and are not to be used for now.

The 12900K and 12700K should be thought of as excellent 8-core CPUs for gamers. They are nothing more than that.

Who even asked you? Also, stop replying to me; I have nothing more to say to you.

They have lots of real negatives if a game thread gets stuck on one. Ouch. If not, and they are avoided, it's not so bad.

But they drag the ring clock down so much, and a faster ring clock is great for gaming performance.

The e-waste cores stink and are not to be used for now.

The 12900K and 12700K should be thought of as excellent 8-core CPUs for gamers. They are nothing more than that.

Ikr? But it fuels the crap that people like the guy above love to throw around without ever really experiencing the CPUs at all.

Oh come on now, this only works because he starts with a dual core as a baseline... A DUAL CORE in 2022, with 8 e-cores. So chop off the cores that Windows wants to run the games on and, lo and behold, suddenly the games run worse... OMG, however did he manage to do that?! The only thing this video shows is that the thread manager works well, at least with these games, keeping the games on the performance cores only. Next up, take a 5950X and use only one core on each CCX to play a game, and see how well that goes.

TerryLaze

Titan

Meh, you get 20% higher latency inside the cache, if and when an e-core has to do something.

They have lots of real negatives if a game thread gets stuck on one. Ouch. If not, and they are avoided, it's not so bad.

But they drag the ring clock down so much, and a faster ring clock is great for gaming performance.

The e-waste cores stink and are not to be used for now.

The 12900K and 12700K should be thought of as excellent 8-core CPUs for gamers. They are nothing more than that.

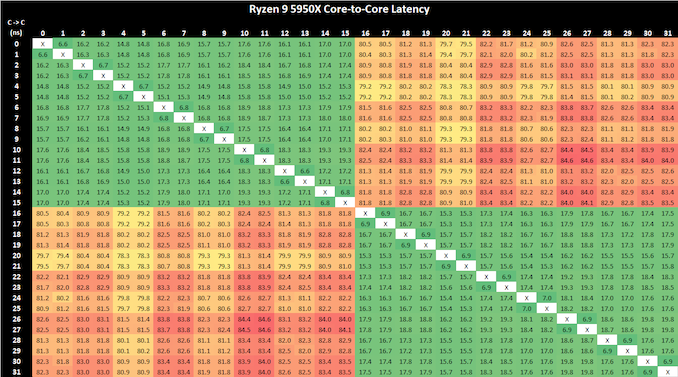

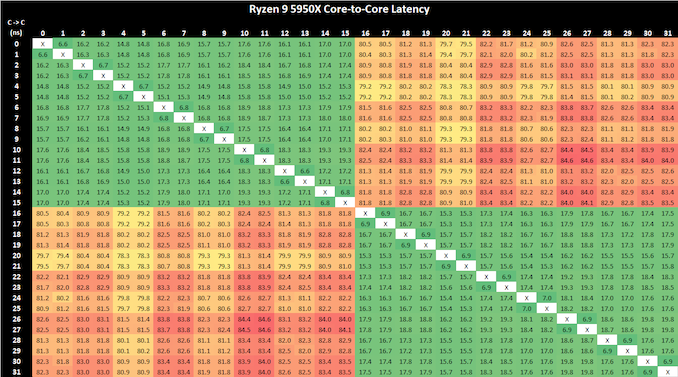

With the 5950X you get 400%+ higher latency if a game thread gets stuck on a core of the second CCX... super ouch!

https://www.anandtech.com/show/1621...e-review-5950x-5900x-5800x-and-5700x-tested/5

The 5950X is just as much only an 8-core CPU for gaming, because all the other cores add so much latency that you'd best shut them off completely.

Oh hey, wait! That's what even AMD said you should do.

Hey, look, they still tell you to do that in 2022 because they still haven't given a single flying duck about fixing it.

alceryes

Splendid

Not arguing real life, just the fact that several well-respected and knowledgeable reviewers and enthusiasts have shown that the e-cores currently do not help gaming performance at all and that, in some niche cases, they may actually be a detriment. Intel's 12th gen P-cores are spectacular - no arguments there.

And what real-life negatives do they have in games? Like, actual, noticeable negatives, not measured ones. Last time I checked, everything pointed toward none.

I also won't argue the known limitations of AMD's Ryzen 5000 series - this is a known and accepted occurrence, due to architecture limitations.

Wolverine2349

Commendable

Meh, you get 20% higher latency inside the cache, if and when an e-core has to do something.

With the 5950X you get 400%+ higher latency if a game thread gets stuck on a core of the second CCX... super ouch!

https://www.anandtech.com/show/1621...e-review-5950x-5900x-5800x-and-5700x-tested/5

The 5950X is just as much only an 8-core CPU for gaming, because all the other cores add so much latency that you'd best shut them off completely.

Oh hey, wait! That's what even AMD said you should do.

Hey, look, they still tell you to do that in 2022 because they still haven't given a single flying duck about fixing it.

So wrong on all fronts. The e-waste cores are absolute crap. Intel P cores rock!!

On Ryzen 9, if a thread gets stuck on another core, it is still a good core, even if it has to cross CCXs. Not ideal, but better than having to cross the slow, high-latency link to the crap e-core cluster on Intel Alder Lake CPUs, and worse yet, landing on a horrible core not capable of anything for gaming. At least AMD Zen 3 cores are still good overall, unlike those e-waste cores, even if a thread gets caught on the other CCX.

But best of all, how about 10-12 P-cores on a ring bus for the fastest communication, with no crossing of anything that carries a high latency penalty? Intel actually had that with Comet Lake and the 10-core Broadwell-E/Haswell-E counterparts. Too bad the IPC improvements stopped with Skylake-derived parts with more than 10 cores on a ring bus.

If Intel releases a 10-P-core Golden Cove or 12-P-core Raptor Cove chip on a ring bus that can clock 4.8 to 5 GHz all-core, they've got a buyer in me.

But oh no, they lock us to 8 good cores and just add more e-waste core clusters.

I'm sticking with AMD because, even if a thread crosses over to the other CCD via Infinity Fabric, at least the other cores are the same good cores, and AMD doesn't force the hybrid Big/Little crap on my PC. A game thread getting stuck on a core in the other CCX is nowhere near as big an issue, since Windows manages communication across CCXs well when the cores are all good, unlike a thread getting stuck on a crappy core while also crossing into horrific latency.

alceryes

Splendid

I admit this is a niche case, but let's flip the tables, eh...?

Oh come on now, this only works because he starts with a dual core as a baseline... A DUAL CORE in 2022, with 8 e-cores.

So chop off the cores that Windows wants to run the games on and, lo and behold, suddenly the games run worse... OMG, however did he manage to do that?!

The only thing this video shows is that the thread manager works well, at least with these games, keeping the games on the performance cores only.

Next up, take a 5950X and use only one core on each CCX to play a game, and see how well that goes.

Can you show me a reputable review from a known and respected enthusiast or reviewer that shows Intel's 12th gen e-cores giving some tangible benefit to gaming performance? Something like what Hardware Unboxed did, where they actually disabled P- and E-cores to show the differences. I'd love to see this kind of review but haven't found one yet.

Wolverine2349

Commendable

Not arguing real life, just the fact that several well-respected and knowledgeable reviewers and enthusiasts have shown that the e-cores currently do not help gaming performance at all and that, in some niche cases, they may actually be a detriment. Intel's 12th gen P-cores are spectacular - no arguments there.

I also won't argue the known limitations of AMD's Ryzen 5000 series - this is a known and accepted occurrence, due to architecture limitations.

Yes, so true, which makes it an absolute shame that Intel cannot or will not produce an Alder Lake CPU with more than 8 P-cores. That would be a gaming and productivity monster for workloads that need full-scale parallelism, without the hybrid-architecture crap and those e-cores, which are useless except in some limited productivity tasks where they only assist the already awesome P-cores as accelerators. More P-cores is the better option for lots of people. There is a market for both, and Intel needs to see that.

TerryLaze

Titan

That's not how that works: if one thread gets allocated to the second CCX, then it will have to do all of its cache access through the increased latency.

So wrong on all fronts. The e-waste cores are absolute crap. Intel P cores rock!!

On Ryzen 9, if a thread gets stuck on another core, it is still a good core, even if it has to cross CCXs. Not ideal, but better than having to cross the slow, high-latency link to the crap e-core cluster on Intel Alder Lake CPUs, and worse yet, landing on a horrible core not capable of anything for gaming. At least AMD Zen 3 cores are still good overall, unlike those e-waste cores, even if a thread gets caught on the other CCX.

But best of all, how about 10-12 P-cores on a ring bus for the fastest communication, with no crossing of anything that carries a high latency penalty? Intel actually had that with Comet Lake and the 10-core Broadwell-E/Haswell-E counterparts. Too bad the IPC improvements stopped with Skylake-derived parts with more than 10 cores on a ring bus.

If Intel releases a 10-P-core Golden Cove or 12-P-core Raptor Cove chip on a ring bus that can clock 4.8 to 5 GHz all-core, they've got a buyer in me.

But oh no, they lock us to 8 good cores and just add more e-waste core clusters.

I'm sticking with AMD because, even if a thread crosses over to the other CCD via Infinity Fabric, at least the other cores are the same good cores, and AMD doesn't force the hybrid Big/Little crap on my PC. A game thread getting stuck on a core in the other CCX is nowhere near as big an issue, since Windows manages communication across CCXs well when the cores are all good, unlike a thread getting stuck on a crappy core while also crossing into horrific latency.

Game threads are not one-and-done, where they load things once and then just work on that data; if that were the case, we would have games that are only a few MB in size.

Intel didn't call them g-cores (gaming cores); they are for efficiency and to take any background load that would otherwise fall to the p-cores.

I admit this is a niche case, but let's flip the tables, eh...?

Can you show me a reputable review from a known and respected enthusiast or reviewer that shows Intel's 12th gen e-cores giving some tangible benefit to gaming performance? Something like what Hardware Unboxed did, where they actually disabled P- and E-cores to show the differences. I'd love to see this kind of review but haven't found one yet.

They are not supposed to make gaming ALONE better; they are supposed to run background stuff that would otherwise slow down your game if the p-cores had to do everything.

-Fran-

Glorious

Don't eat all the marketing fluff that Intel and AMD push down your throat, though.

That's not how that works: if one thread gets allocated to the second CCX, then it will have to do all of its cache access through the increased latency.

Game threads are not one-and-done, where they load things once and then just work on that data; if that were the case, we would have games that are only a few MB in size.

Intel didn't call them g-cores (gaming cores); they are for efficiency and to take any background load that would otherwise fall to the p-cores.

They are not supposed to make gaming ALONE better; they are supposed to run background stuff that would otherwise slow down your game if the p-cores had to do everything.

If Intel had made the 12900K a 10-P-core design (and Raptor Lake a 12-P-core one), it would have been as good as, if not better than, it is now for 100% of gamers, and would just have lost to AMD in MT tasks while beating them handily in everything else. I'm not saying the E-cores are inherently bad, but the sacrifices they made are a bit dumb IMO: the ring bus, Gear settings for the RAM and the asymmetric ISA. I mean, losing AVX512 is not a trivial thing and no one is really talking about that, which is kind of ironic. I wonder if Intel will give the E-cores full AVX512 support soon. Then again, they'd get really fat. I just don't think they can allow AMD to get AVX512 support while they still have issues with their "smart" scheduler. It is not a good outlook, regardless of what you think about AVX512.

Anyway, point is: Intel knows that 8 cores are really enough for 100% of gamers for a good while, so they just want to beat AMD and slapped the E-cores in there to do just that.

Regards.

Brian D Smith

Commendable

Wolverine2349

Commendable

Don't eat all the marketing fluff that Intel and AMD push down your throat, though.

https://www.youtube.com/watch?v=Nd9-OtzzFxs

If Intel had made the 12900K a 10-P-core design (and Raptor Lake a 12-P-core one), it would have been as good as, if not better than, it is now for 100% of gamers, and would just have lost to AMD in MT tasks while beating them handily in everything else. I'm not saying the E-cores are inherently bad, but the sacrifices they made are a bit dumb IMO: the ring bus, Gear settings for the RAM and the asymmetric ISA. I mean, losing AVX512 is not a trivial thing and no one is really talking about that, which is kind of ironic. I wonder if Intel will give the E-cores full AVX512 support soon. Then again, they'd get really fat. I just don't think they can allow AMD to get AVX512 support while they still have issues with their "smart" scheduler. It is not a good outlook, regardless of what you think about AVX512.

Anyway, point is: Intel knows that 8 cores are really enough for 100% of gamers for a good while, so they just want to beat AMD and slapped the E-cores in there to do just that.

Regards.

It would have beaten AMD even in multithreaded workloads that scale up to 12 cores, as the 2-core deficit would have been made up for by the 12% IPC increase and the much faster clocks of Golden Cove cores compared with Zen 3 ones. And that is better than adding a bunch of e-waste cores to no end while staying at 8 P-cores. It would only have lost to the 5950X.

They came up with e-waste cores because they could not compete on strong-core count due to the heat, power consumption and die space of LGA 1700 chips on their current manufacturing process.

But they should still make a 10-P-core design and a 12-P-core Raptor Lake in addition to their 8+8 and 8+16 counterparts, as there are markets for both. They have driven many enthusiasts to AMD as a result, including myself.

And yes, I agree it's not a good look, as the ring clock goes down and scheduling is a nightmare in an SMP world. I never saw the e-cores get used for background tasks, even on Windows 11; instead, those tasks were relegated to the P-cores, and the e-cores did nothing except run Cinebench, so they were useless and just dragged the ring clock down. Again, not a good look, as you mentioned.

And yeah, Intel thinks 8 cores are enough for gamers for a long while.

Well, everyone thought 4 cores was more than enough for gaming in 2014-2016 as well. The Core i7-7700K was touted as all you would need. Well, it has not aged well despite being released only 5.5 years ago. The Core i7-8700K, a 6-core/12-thread chip, is much, much better in gaming today despite being released less than a year later with the same Skylake/Kaby Lake IPC, just a couple more cores. I have heard it takes a lot of effort just to get games to use 8 threads. Though it seems that back in the Core 2 Duo and Quad days, eventually getting a quad really helped. Then came stagnation, where more than 4 cores didn't benefit games for a long while.

It concerns me, as for gaming today 8 cores/16 threads seems to be what 4 cores/8 threads was in 2014-2016, and that started to really struggle in 2019. Are things different today, such that games will be harder to develop beyond 8 cores than they were beyond 4? Like a law of diminishing returns for scaling games to more cores??

Wolverine2349

Commendable

That's not how that works, if one thread gets allocated to the second CCX then it will have to do all of its cache access through the increased latency.

Game threads are not one-and-done, where they load things once and then just work on that data; if that were the case, we would have games that are only a few MB in size.

Yeah, so game threads are not just one-and-done. Though many games benefit from the 5900X over the 5800X due to its 64 MB of L3 cache instead of 32 MB. But it has to cross CCXs to access that cache, so how much of a penalty is there?

Still, it seems better for a game thread to land on another good core close in performance than on an e-core, which has much worse IPC and latency and is clocked much lower, compared with another identical Zen 3 core with only slight clock variations.

What if dual-socket LGA 1700 motherboards existed and you could get 12 or 16 P-cores on Alder Lake by buying two 12600Ks or 12700Ks, creating a 12- or 16-P-core Alder Lake system and shutting off the e-cores?? Would crossing between the physical CPUs carry a bad penalty like crossing Infinity Fabric between chiplets? Or worse, or not as bad?

TerryLaze

Titan

Did you even watch that video?!

Don't eat all the marketing fluff that Intel and AMD push down your throat, though.

He shows that his multitasking only uses 1-5% CPU...

and you are comparing that to encoding the video of a running game in real time.

They don't have to actively help gaming performance to be helpful. By that logic, an 8-core Ryzen with the same clock speed as the bigger models would be the best Ryzen gaming CPU... which is kinda the case. And even when leaving out the 5800X3D, the 5900X is actually the better gaming CPU compared to the 5950X. Almost as if those last couple of cores do jack for gaming and there are other factors at work, too... huh. Expecting the e-cores to be good for something they were never meant to be good for is illusory.

However, the dude that "discussion" was with actively claimed that Ryzen CPUs are better in every regard because they don't have e-cores, and that Intel should have just made p-cores and been done. But by the same logic, Ryzen CPUs are bad because they aren't monolithic, and accidentally pushing a game thread to a core in the wrong chiplet can cause bad drops... that are worse than anything the e-cores could do. Why the heck do I have to listen to someone who is comparing so one-sidedly? That is pure nonsense. And I honestly could have passed on rekindling this bs.

Besides, as I also mentioned, I have yet to run into a situation where the e-cores would be detrimental instead of unutilized at worst; and again, I noticed streaming really using the e-cores, which is a benefit in my book since it keeps my precious p-cores free for gaming. Who cares about niche cases when they also exist with Ryzen CPUs? How come nothing is ever wrong with them even when you can prove that they do have issues, while Intel chips always have issues that make them unusable for gamers? Seriously, I'm sick and tired of the double standards. Especially when it's based on old articles describing issues that are long fixed.

Not arguing real life, just the fact that several well-respected and knowledgeable reviewers and enthusiasts have shown that the e-cores currently do not help gaming performance at all and that, in some niche cases, they may actually be a detriment. Intel's 12th gen P-cores are spectacular - no arguments there.

I also won't argue the known limitations of AMD's Ryzen 5000 series - this is a known and accepted occurrence, due to architecture limitations.

Also, again: I place my own experiences and those of my friends higher than some random YouTuber on the internet.

alceryes

Splendid

And yet, as shown in a couple of different examples from reputable reviewers/enthusiasts, e-cores taking that 'background stuff' doesn't benefit gaming performance at all.

That's not how that works: if one thread gets allocated to the second CCX, then it will have to do all of its cache access through the increased latency.

Game threads are not one-and-done, where they load things once and then just work on that data; if that were the case, we would have games that are only a few MB in size.

Intel didn't call them g-cores (gaming cores); they are for efficiency and to take any background load that would otherwise fall to the p-cores.

They are not supposed to make gaming ALONE better; they are supposed to run background stuff that would otherwise slow down your game if the p-cores had to do everything.

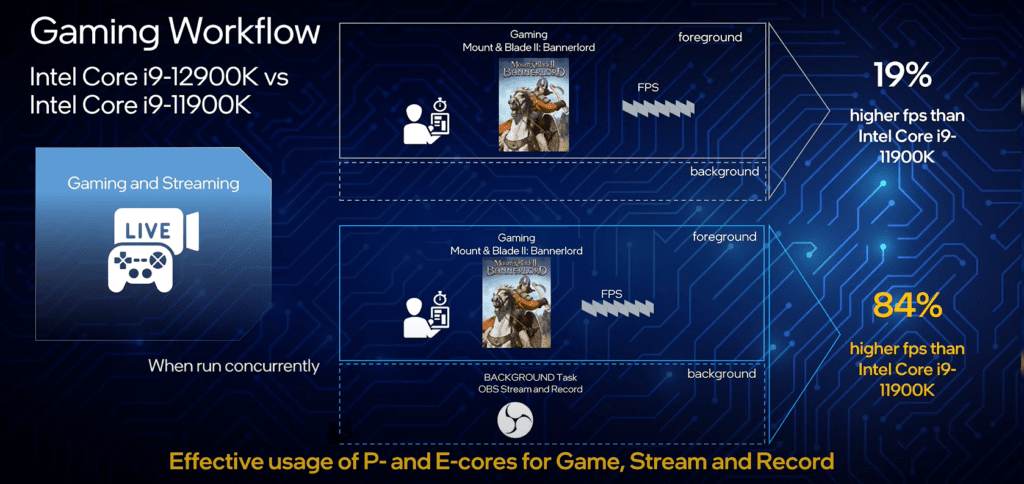

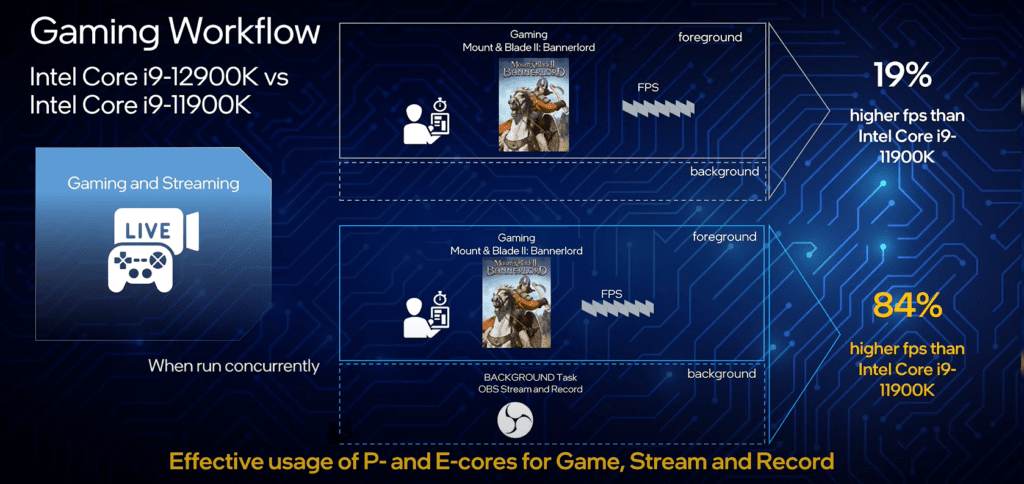

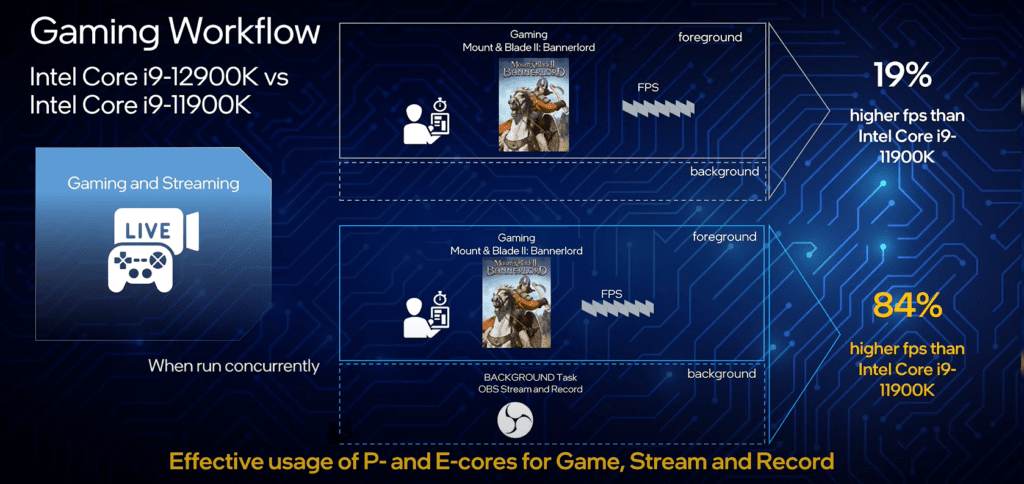

Intel's slide is at odds with every review/article that I've seen showing any kind of performance benefit/loss from e-cores, outside of synthetic benchmarks and specific, non-gaming workloads.

I'd love for this NOT to be the case. I'd love for the e-cores to show some gaming benefit. Again, if you can find me a review that backs up Intel's 'marketing slide' above on the performance increase, I and a TON of other people would be very interested. But as far as I know, the marketing slide above is a lie and no actual evidence to back up the claim exists.

kookykrazee

Distinguished

I personally will be waiting quite a while. I have a 5950X and a nice high-end board. I just bought, and will move to, a new full-tower case. The only thing I MIGHT buy in the next year will be a 4xxx GPU when one comes out. I have my two 3090s, which are paid off via mining and were purchased at or below MSRP due to discounts I got. I might get a new 45" screen, but otherwise, it's all good.

The question is whether Socket AM5 will be able to attract Socket AM4 users, and I don't think it will. Let's say it's on average 25% faster per thread than the Ryzen 5000 series. The cost of a new motherboard and RAM in addition to the CPU makes that 25% cost an awful lot. Even a Zen 2 or Zen 1 user can drop a Zen 3 CPU into their existing setup for a large to enormous performance gain at a relatively small price.

Also assuming that AMD is going to attempt to keep the same pace as Intel, the next 3 or 4 years should bring relatively decent to large increases in performance and efficiency across all segments each year, and the last thing most people want is to buy in at the start only to see each generation bring a large performance increase, as those of us who were Socket AM4 early adopters saw.

Combine this with increasing GPU supply and more people finally being able to see what their existing Socket AM4 setup can actually do, and I predict quite sluggish sales of AM5 systems.

-Fran-

Glorious

I mean, I know for a fact I can encode a video and game with only a little loss of performance; it's called streaming. You clearly missed the point.

Did you even watch that video?!

He shows that his multitasking only uses 1-5% CPU...

and you are comparing that to encoding the video of a running game in real time.

Ask yourself, honestly, who is going to do heavy video encoding (think VirtualDub, Sony Vegas, Premiere or any other video editing tool) and play games (even demanding ones) at the same time on a platform with only dual-channel memory and limited CPU bus bandwidth? I'm scratching my head at the premise...

EDIT: Just to clarify that marketing slide: Intel is telling you hardware encoders don't exist, and they're probably using software encoding to illustrate how "big" of a jump it is. I can't check the fine print, but I can tell you for a fact that using x264 with the "fast" preset and some other tweaks, you get at most a 5% performance hit without dropping frames. There's more to streaming/encoding than what that slide lets you "understand"; hence: don't eat the marketing fluff so easily.

Regards.

Correction is needed for display support. AMD's slide clearly shows that the RDNA2 iGP on 7000-series CPUs will support DisplayPort 2.0, at minimum with a UHBR10 link at 40 Gbps.

Here's the Ryzen 7000 release , benchmarks, specifications, pricing, and all we know about AMD's Zen 4 architecture.

AMD Zen 4 Ryzen 7000 Specs, Release Date Window, Benchmarks, and More

This is what Rembrandt APUs have recently been certified for by VESA, and Raphael CPUs will follow suit.
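As a rough sanity check on the 40 Gbps figure: UHBR10 runs four lanes at 10 Gbps each, and DisplayPort 2.0 uses 128b/132b channel coding, so the usable payload is a bit under 40 Gbps. The Python sketch below only accounts for that coding step; it deliberately ignores FEC and other protocol overhead (an assumption), so treat the result as an upper bound rather than a spec value.

```python
# Rough DisplayPort 2.0 UHBR10 link budget.
# Assumption: only 128b/132b channel coding is counted; FEC and other
# protocol overhead are ignored, so the payload figure is an upper bound.

LANES = 4
UHBR10_GBPS_PER_LANE = 10.0
CODING_EFFICIENCY = 128 / 132  # 128b/132b channel coding


def uhbr10_bandwidth(lanes: int = LANES) -> tuple[float, float]:
    """Return (raw, payload) bandwidth in Gbps for a UHBR10 link."""
    raw = lanes * UHBR10_GBPS_PER_LANE
    payload = raw * CODING_EFFICIENCY
    return raw, payload


if __name__ == "__main__":
    raw, payload = uhbr10_bandwidth()
    print(f"UHBR10 x{LANES}: raw {raw:.1f} Gbps, payload ~{payload:.2f} Gbps")
```

With four lanes this gives 40 Gbps raw and roughly 38.8 Gbps after coding, which is why "40 Gbps" is best read as the link rate, not the usable video bandwidth.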

TerryLaze

Titan

You are missing the forest for the trees...

I mean, I know for a fact I can encode a video and game with only a little loss of performance; it's called streaming. You clearly missed the point.

Ask yourself, honestly, who is going to do heavy video encoding (think VirtualDub, Sony Vegas, Premiere or any other video editing tool) and play games (even demanding ones) at the same time on a platform with only dual-channel memory and limited CPU bus bandwidth? I'm scratching my head at the premise...

EDIT: Just to clarify that marketing slide: Intel is telling you hardware encoders don't exist, and they're probably using software encoding to illustrate how "big" of a jump it is. I can't check the fine print, but I can tell you for a fact that using x264 with the "fast" preset and some other tweaks, you get at most a 5% performance hit without dropping frames. There's more to streaming/encoding than what that slide lets you "understand"; hence: don't eat the marketing fluff so easily.

Regards.

Yes the marketing slide shows an extreme, a niche that not many people will use.

Intel is clearly telling you that if you don't use any CPU heavy software alongside your game then the e-cores aren't going to give you anything extra.

How is that a bad thing? They are telling you when it makes sense to get a CPU with e-cores instead of a CPU without them.

Also, most live streamers still prefer CPU encoding at a somewhat higher quality level because they get a better quality/bandwidth ratio than with hardware encoding, especially if they would have to use the GPU for it, since that reduces the FPS the GPU can deliver.

If you are just recording, you can use hardware acceleration and a very high bitrate to get very good results, but if you had to upload those in real time, you would have a big problem.

Yes, ask yourself: why did the reputable reviewers not exactly recreate what Intel claimed, to see if it's true or not? Shouldn't that be the first thing a reputable source would do?

And yet, as shown in a couple of different examples from reputable reviewers/enthusiasts, e-cores taking that 'background stuff' doesn't benefit gaming performance at all.

No. He meant what he said. There are 24+4 PCIe 5.0 lanes; x4 are for the chipset. The chipset is wired as PCIe 4.0 x4 because ASMedia did not have enough time to validate a 5.0 chipset, so it will run at 64 Gbps.

EDIT?

"The Socket AM5 motherboards will expose up to 24 lanes of PCIe 5.0 to the user......, and leverage an additional four lanes of PCIe 5.0 to connect to the chipset"

AMBIGUITY? i.e. 8 LANES FOR CHIPSET? hmm?

I think you mean an additional 4 lanes (28 lanes total)...

...."(less expensive motherboards can use PCIe 5.0 to the chipset)."

You mean PCIe 4.0?
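The lane math in this exchange can be sketched in a few lines. PCIe 4.0 signals at 16 GT/s per lane and PCIe 5.0 at 32 GT/s, both with 128b/130b encoding. The lane counts below (24 user lanes plus 4 chipset lanes) are the ones quoted in the thread, not independently confirmed, and packet/protocol overhead is ignored, so the "usable" figures are upper bounds.

```python
# Rough PCIe link-bandwidth arithmetic for the AM5 lane split discussed above.
# Assumptions: lane counts come from the thread (24 user + 4 chipset);
# only 128b/130b line encoding is counted, not TLP/DLLP protocol overhead.

GT_PER_LANE = {4: 16.0, 5: 32.0}  # PCIe generation -> GT/s per lane
ENCODING = 128 / 130               # 128b/130b, used by PCIe 3.0 and later

USER_LANES = 24
CHIPSET_LANES = 4


def link_gbps(gen: int, lanes: int) -> tuple[float, float]:
    """Return (raw GT/s, usable Gbps after encoding) for a PCIe link."""
    raw = GT_PER_LANE[gen] * lanes
    return raw, raw * ENCODING


if __name__ == "__main__":
    print("CPU lanes in total:", USER_LANES + CHIPSET_LANES)
    for gen in (4, 5):
        raw, usable = link_gbps(gen, CHIPSET_LANES)
        print(f"Chipset link PCIe {gen}.0 x{CHIPSET_LANES}: "
              f"{raw:.0f} GT/s raw, ~{usable:.1f} Gbps (~{usable / 8:.2f} GB/s)")
```

On these assumptions, "64 Gbps" for a Gen4 x4 chipset link is the raw figure; after encoding it is about 63 Gbps (roughly 7.9 GB/s), half of what a Gen5 x4 link would offer.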

Space.com is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.