I recently upgraded from a GTX 1050 Ti / Ryzen 3 2200G to an RTX 3070 / i5-12400F. Ever since the upgrade, it has never felt like a new PC. Games stutter, take a long time to load, and sometimes even crash. I thought that might be normal because I use an old SATA SSD, but now I really don't think it is. Only a few games have actually run well since I upgraded: God of War, which runs beautifully in 4K with DLSS Quality, and RDR2, also in 4K with DLSS Quality. I never had any problems with either of those.

But in Fortnite, my framerate is hell. I can hit 150 fps, and shortly afterwards it drops massively to the low 100s, and constantly to 70 or even 30. The same goes for Spider-Man Remastered: my GPU usage never climbs much above my CPU usage unless I use higher resolutions. Shouldn't I get great FPS at 1080p with this GPU and CPU? I've tried every setting and nothing seems to work. Even at 1080p the game swings from 60 to 40 fps in an instant. There's constant stutter, textures load late and pop in right in front of me, and the frametimes are horrible.

Almost every game is like this on my PC. And now, with the launch of Hogwarts Legacy, I'm getting about 40 fps with 50% GPU usage and 30% CPU usage. GPU usage can reach 60%, but that's it. It never goes higher. I know the game is horribly optimized for PC, but my cousin has an RTX 2060 and an i7-10700F and runs the game beautifully. I don't know what to do anymore. I've reinstalled Windows and every driver three times, and the problem still persists.

GeForce RTX 3070 GALAX

Intel Core i5 12400F

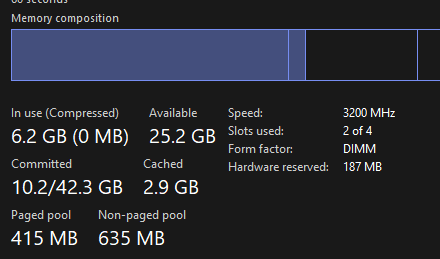

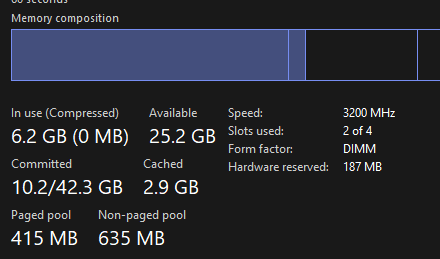

RAM Memory Kingston Fury Beast 2x16 (3200mhz x 2400mhz both XMP'd to 3200mhz)

Motherboard B660 TUF Gaming Intel LGA 1700

Fonte Corsair CV650W

SSD SATA 128GB Sandisk

HD 1TB Seagate

Note: I previously had a problem from using two older RAM sticks together, a 1x8 GB 2111 MHz and a 1x16 GB 3600 MHz. With that setup my CPU usage always sat at 70% and my GPU usage at 20%.

I switched to two 16 GB sticks, one rated at 3200 MHz and one at 2400 MHz. Both have XMP enabled and are running at 3200 MHz.

Could the RAM still be the problem here?