Talk about missing the point...

Obviously the CPU doesn't always run all cores at the same clock. My point was that if it did, the power draw of a 16-core Raptor Cove chip would be enormous. And if it's not going to run all 16 cores at max clock, because that would be inefficient, then (and this will really blow your mind!) why not add more efficient cores that run at lower clocks and use a lot less power? We could even call these things something like "E-cores"!

Hypothetically, 16 Zen 4 cores all running at 5.1 GHz would draw around 352W, way more than the TDP limit. And if they were running 'stock' at up to 5.7 GHz, it would be the 688W you speak of, which is about four times the Ryzen 9 7950X's 170W TDP. Of course, those numbers aren't actually accurate, because that chart isn't showing you what you think it's showing.
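To spell out the arithmetic: those totals are just a single-threaded reading multiplied by 16. Here's a minimal Python sketch of that naive extrapolation. The per-reading values (22W at 5.1 GHz, 43W at 5.7 GHz) are assumptions back-calculated from 352W / 16 and 688W / 16, not numbers read off the chart, and the 170W TDP is used as the reference limit.

```python
# Naive extrapolation: treat a single-threaded package-power reading as if it
# were per-core power, then multiply by the core count.
# ASSUMPTION: 22 W and 43 W are back-calculated as 352 W / 16 and 688 W / 16.
CORES = 16
TDP_7950X_W = 170  # Ryzen 9 7950X rated TDP, used here as the reference limit

single_thread_package_w = {5.1: 22.0, 5.7: 43.0}  # clock in GHz -> watts

def naive_all_core_power(per_core_w: float, cores: int = CORES) -> float:
    """Return the (flawed) all-core estimate: 'per-core' watts times core count."""
    return per_core_w * cores

for ghz, watts in single_thread_package_w.items():
    total = naive_all_core_power(watts)
    print(f"{ghz} GHz: {total:.0f} W, {total / TDP_7950X_W:.1f}x TDP")
```

This prints 352W (2.1x TDP) and 688W (4.0x TDP), which is exactly the kind of number you get when package power is mistaken for per-core power.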

It's not "per core power," it's "package power running a single-threaded workload." BIG difference! We can reasonably assume that a single CPU core is using less than what the chart shows, but all the other stuff in the package will still be using power. How much power? That's difficult to say.
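One way to see why multiplying by 16 overshoots: if single-threaded package power is one busy core plus the non-core parts of the package, the naive method counts those non-core parts 16 times. A toy Python model, assuming idle cores draw roughly nothing and sweeping hypothetical "uncore" values (the real split is unknown):

```python
# Toy model: single-threaded package power = one busy core + non-core parts of
# the package (e.g. the I/O die). ASSUMPTIONS: idle cores draw ~0 W, and the
# uncore share is unknown, so we sweep hypothetical values.

def all_core_estimate(st_package_w: float, uncore_w: float, cores: int = 16) -> float:
    """Scale only the core portion by the core count; count the uncore once."""
    core_w = st_package_w - uncore_w  # hypothetical per-core share
    if core_w < 0:
        raise ValueError("uncore share cannot exceed package power")
    return cores * core_w + uncore_w

ST_PACKAGE_W = 43.0  # back-calculated as 688 W / 16

for uncore in (0.0, 10.0, 20.0):  # hypothetical uncore draws in watts
    print(f"uncore {uncore:>4.0f} W -> {all_core_estimate(ST_PACKAGE_W, uncore):.0f} W")
```

With no uncore you get the naive 688W; with a hypothetical 10W or 20W uncore it falls to 538W or 388W. It's still a linear model that ignores lower all-core clocks and voltages, so it only shows the direction of the error, not the real figure.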

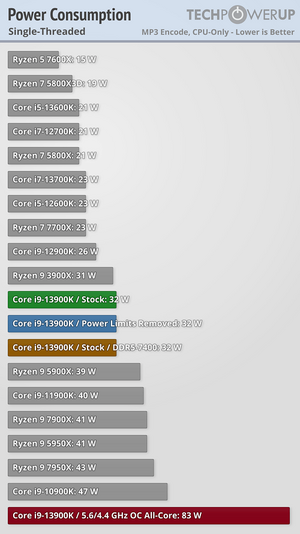

But what's funny is you don't give a chart that shows where Raptor Lake would land. Let me do that for you, and for TheHerald, because he keeps missing the point.

View attachment 313

Running 'normally', a single loaded Raptor Cove core puts the package at around 32W, which means the chip is obviously clocking down quite a bit. How do we know? Because when all the P-cores are forced to 5.6 GHz (and the E-cores to 4.4 GHz), package power jumps to 83W.

Again, how much of that is for a single core in practice? Well, presumably less than 32W. I suspect Intel is better than AMD about putting idle cores into a deep sleep state where power use drops close to zero, because when you force all the cores to 5.6 GHz / 4.4 GHz, package power skyrockets. Even a fully idle core sitting at 5.6 GHz uses more power than that same core idling at 1.4 GHz, or whatever the 'sleeping' value is for Intel these days.
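The size of that jump is simple to put a number on. A small Python sketch using the two package-power readings above, assuming the workload is the same in both cases so the whole difference comes from the locked clocks on otherwise-idle cores (some of it could also be the busy core or the ring clocking higher):

```python
# Package-power readings quoted above for a single-threaded load on Raptor Lake.
# ASSUMPTION: same workload in both cases, so the difference is attributed to
# the locked clocks rather than to extra work being done.
NORMAL_W = 32.0  # default behavior: idle cores free to clock down / sleep
LOCKED_W = 83.0  # all P-cores locked at 5.6 GHz, E-cores at 4.4 GHz

def idle_clock_overhead(normal_w: float, locked_w: float) -> float:
    """Extra package power from keeping otherwise-idle cores at high clocks."""
    return locked_w - normal_w

overhead = idle_clock_overhead(NORMAL_W, LOCKED_W)
print(f"Overhead: {overhead:.0f} W ({LOCKED_W / NORMAL_W:.1f}x the normal reading)")
```

That works out to about 51W extra, or roughly 2.6x the normal reading, just from not letting idle cores drop their clocks.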

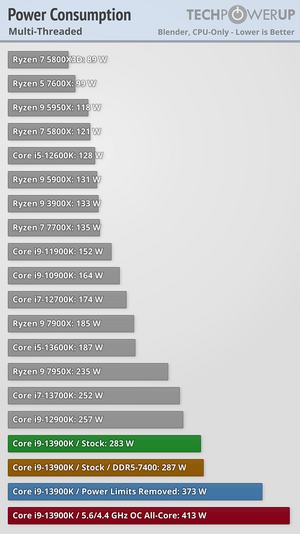

Which is why looking at a fully loaded multi-threaded workload is so important, and that's where TPU gives this:

View attachment 314

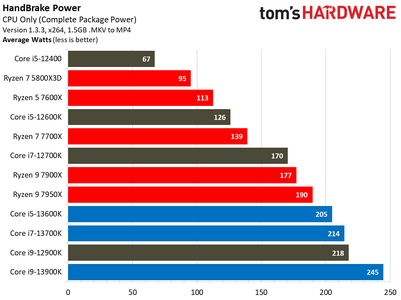

Or alternatively, our own testing:

View attachment 315