Wow, that's high praise, considering the source.

Congrats Jarred on this article! Very nice work!

I love your PSU articles. Seriously.

I didn't bother putting them into the table, but I may change that. Here are the numbers:

| GPU | Efficiency |
| --- | --- |
| R9 390 | 31.8% |
| R9 Fury X | 39.1% |
| GTX 970 | 51.1% |
| GTX 980 | 52.2% |
| GTX 980 Ti | 51.3% |

We can't have icing at THOSE temps! 😆

Thanks. I had a good laugh seeing the R9 390 and Fury X top the FurMark power charts. I was somewhat expecting it, but it's still a bit of a shock to see how far out Fury X places.

It would've been the icing on the cake to see them in the efficiency rankings table, as well.

I think you're missing my meaning. The quote is exactly what I say: the MSI RX 570 Gaming X 4G can draw up to 180W over the 8-pin PEG connector. (Peak was 182.8W.) The Powenetics hardware collects data for the PCIe x16 slot, as well as up to three 8-pin PEG connectors. The MSI card uses only one 8-pin connector.

"The RX 570 4GB (an MSI Gaming X model) actually exceeds the official power spec for an 8-pin PEG connector with FurMark, pulling nearly 180W."

The 570 can draw an additional 75W from the PCIe slot, for a total of 225W. My 4GB RX 570 is plugged into a crap proprietary power supply via an 8-pin connector; IIRC it's a single 14A rail. It runs over 150W stable for hours, OC'd to 1400 with, IIRC, the memory at 2000. Folding@home puts a beatdown on the CPU and GPU. I've actually drawn more power and made more heat with Folding@home than with FurMark or other CPU burn-in tools.
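The connector arithmetic in this exchange can be sketched in a few lines of Python. This is only an illustration: the 75W slot and 150W 8-pin figures are the commonly cited PCIe limits, the 12V rail voltage is an assumption about the power supply described above, and the function names are made up for the example.

```python
# Commonly cited PCIe power limits (watts).
PCIE_SLOT_LIMIT_W = 75.0
EIGHT_PIN_LIMIT_W = 150.0

# Assumption: the "single 14A rail" mentioned above is a 12V rail.
RAIL_VOLTAGE_V = 12.0


def board_power_budget(num_eight_pin: int) -> float:
    """In-spec power for a card: slot power plus its 8-pin connectors."""
    return PCIE_SLOT_LIMIT_W + num_eight_pin * EIGHT_PIN_LIMIT_W


def rail_capacity_w(amps: float, volts: float = RAIL_VOLTAGE_V) -> float:
    """Power a single rail can deliver (P = V * I)."""
    return amps * volts


def exceeds_eight_pin_spec(measured_w: float) -> bool:
    """True if a measured 8-pin draw is above the 150W connector limit."""
    return measured_w > EIGHT_PIN_LIMIT_W


if __name__ == "__main__":
    print(f"Budget with one 8-pin: {board_power_budget(1):.0f}W")
    print(f"14A rail capacity:     {rail_capacity_w(14):.0f}W")
    print(f"182.8W peak over spec: {exceeds_eight_pin_spec(182.8)}")
```

Under these assumptions a single 8-pin card has a 225W in-spec budget, a 14A rail tops out at 168W, and the 182.8W peak measured on the 8-pin connector alone is above that connector's 150W limit.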

I can only test with the cards I have, and that meant an MSI RX 570 Gaming X 4G in this case. What's interesting is that the card is right at the expected 150W TDP (TBP) when running Metro Exodus. The problem is that FurMark blows right through all the power and throttling restrictions. I'm not quite sure why, but I suspect any RX 570 or 580 is likely to exceed TBP. Some might not go as high as 225W, but the other AMD cards tested indicate there's something about FurMark-type workloads that will exceed typical power use.

Inconsistent. Looks like you are using a high-end RX 570, with power consumption similar to an RX 590. I know it is not impossible. I have RX 570s here that have a consumption close to my Vega 56, while some RX 580s equal the Vega

FurMark is infamous for this. I believe it achieves very high shader occupancy and is compute-bound, rather than being memory-bottlenecked.

"...the other AMD cards tested indicate there's something about FurMark-type workloads that will exceed typical power use."

This is true, though in practice the difference between most games isn't very large unless a game is specifically limited by something other than the GPU at some settings. I used Metro Exodus, and I noticed that some GPUs (especially lower end models) use more power at 1080p medium than at 1440p ultra -- and while I didn't explicitly state this, I did test at the settings that resulted in higher power use. I also checked a few cards in several different games, and the difference for an RTX 2080 Ti, as an example, between 1440p medium and 1440p ultra was mostly about 5-10W.

To my understanding, this only tells how much power a GPU will consume when you play a game with certain settings. Which is a different job for every GPU, since they produce a different amount of detail per second (pixels, voxels, etc.) based on varying fps.

I think the more interesting set would be how many watt-hours are needed to finish a certain task, like encoding a video or rendering a 3D object.

Since my eGPU will not do heavy work for 99% of the time it's in use and will spend a lot of time idle, I'm most interested in GPU power efficiency at idle and in light use.

I also don't want extra heat in the room and want to save on electricity.

How much do these GPUs use when you do nothing? Like showing the desktop on a 4K monitor, or just scrolling a few pages in a browser.
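The watt-hours-per-task idea raised above comes down to average power multiplied by time. A minimal sketch, assuming made-up power and duration figures for two hypothetical cards (none of these numbers come from the article's testing):

```python
def energy_wh(avg_power_w: float, duration_s: float) -> float:
    """Energy used by a task in watt-hours: average watts * hours."""
    return avg_power_w * duration_s / 3600.0


if __name__ == "__main__":
    # Hypothetical example: a faster, hungrier card vs. a slower, leaner one
    # finishing the same encode or render job.
    fast_card = energy_wh(avg_power_w=200.0, duration_s=600.0)
    slow_card = energy_wh(avg_power_w=120.0, duration_s=900.0)
    print(f"Fast card: {fast_card:.1f} Wh")
    print(f"Slow card: {slow_card:.1f} Wh")
```

The point of the metric is that the card with the lower power draw is not automatically the one that uses less energy for a job; it depends on how much longer it takes.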

Idle power for most of the latest cards is around 15W, give or take. A few might go as low as 9W (when the display is in sleep mode), some might get close to 20W, but a few watts isn't a major concern I don't think. Once running a graphics workload, you'll get performance and power as shown in these charts. You can reduce power use by reducing performance, basically. Also, for an external GPU box, you probably won't get maximum performance anyway and I wouldn't go above the ~$500 GPU mark. The PCIe x4 link equivalent is going to be a bottleneck.

I'm looking for a card for my eGPU with the smallest idle power use, since 99% of the time I'd be using it only to produce more screen real estate.

Then 1% might be gaming or encoding & rendering.

So I'd like it to use as little energy as possible and also produce as little heat as possible.

How big a difference is there in the current AMD lineup?
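For a 99% idle, 1% load usage pattern like the one described, the average draw is dominated by the idle figure. A rough sketch, using the 9W and 20W idle range mentioned in the reply and an assumed 150W load figure (the load value and the function names are illustrative only):

```python
def average_power_w(idle_w: float, load_w: float, load_fraction: float) -> float:
    """Time-weighted average power for a card that is under load
    for `load_fraction` of the time and idle for the rest."""
    if not 0.0 <= load_fraction <= 1.0:
        raise ValueError("load_fraction must be between 0 and 1")
    return idle_w * (1.0 - load_fraction) + load_w * load_fraction


def energy_kwh(avg_power_w: float, hours: float) -> float:
    """Energy in kilowatt-hours over a number of hours of use."""
    return avg_power_w * hours / 1000.0


if __name__ == "__main__":
    ASSUMED_LOAD_W = 150.0  # hypothetical load draw, not a measured value
    for idle_w in (9.0, 20.0):
        avg = average_power_w(idle_w, ASSUMED_LOAD_W, load_fraction=0.01)
        print(f"idle {idle_w:.0f}W -> average {avg:.2f}W, "
              f"{energy_kwh(avg, hours=1000):.1f} kWh per 1000 hours")
```

With these inputs the load term contributes only 1.5W to the average, so the gap between a low-idle and a high-idle card carries over almost unchanged into the average draw.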

Thanks for the info.

This is more of a question that belongs in its own thread.

I guess my choice is now between 5500, 570 and 580.

What gives the best price-performance-power usage...?

I have one old Eizo, which would benefit from having DVI, but I could also use that with some other setup...

In terms of performance per watt, the 5500 XT wins easily: the 5500 XT 8GB is basically tied with the RX 590 in performance (it's about 2% slower is all) and uses 126W vs. 214W. The RX 580 8GB is about 4% slower than the 5500 XT (more if it's a card that's not as overclocked as the Sapphire Nitro+ I used for testing) and has power use of around 208W (less with some 580 models).

Factoring in price, the RX 590 is now selling for $210+ ($195 on eBay), RX 580 8GB is selling for $170 (possibly $130 on eBay), and RX 5500 XT 8GB is selling for $190 (same or higher on eBay). Given pricing is currently pretty close, RX 5500 XT 8GB wins out in my book, but if you can find a cheap RX 580 (meaning, around $140 or less) that would still be a reasonable option.
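The comparison above can be put into numbers with a short script. This is a sketch rather than the article's methodology: performance is normalized to the RX 5500 XT 8GB, the relative performance values are derived from the "about 2% slower" and "about 4% slower" statements, and the prices are the retail figures quoted in the post. The `Card` class and `best_by` helper are names invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Card:
    name: str
    relative_perf: float  # normalized so the RX 5500 XT 8GB is 1.00
    power_w: float
    price_usd: float

    @property
    def perf_per_watt(self) -> float:
        return self.relative_perf / self.power_w

    @property
    def perf_per_dollar(self) -> float:
        return self.relative_perf / self.price_usd


CARDS = [
    Card("RX 5500 XT 8GB", 1.00, 126.0, 190.0),
    # The 5500 XT is about 2% slower than the RX 590.
    Card("RX 590", 1.00 / 0.98, 214.0, 210.0),
    # The RX 580 8GB is about 4% slower than the 5500 XT.
    Card("RX 580 8GB", 0.96, 208.0, 170.0),
]


def best_by(cards: Iterable[Card], metric: Callable[[Card], float]) -> Card:
    """Return the card with the highest value of the given metric."""
    return max(cards, key=metric)


if __name__ == "__main__":
    for card in CARDS:
        print(f"{card.name:15s} perf/W={card.perf_per_watt:.5f} "
              f"perf/$={card.perf_per_dollar:.5f}")
    print("Best perf/W:", best_by(CARDS, lambda c: c.perf_per_watt).name)
    print("Best perf/$:", best_by(CARDS, lambda c: c.perf_per_dollar).name)
```

With these inputs the 5500 XT is far ahead on performance per watt, while the RX 580 at $170 comes out slightly ahead on performance per dollar, which is the trade-off being weighed in the reply.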

I think all the necessary hardware is present on current GPUs to support DVI, but it's not usually done because it's viewed as a dying / dead interface. Or maybe only single-link is supported? Anyway, this PowerColor 5500 XT 8GB has a DVI-D connector, but the specs say it's single-link. There are also multiple GTX 1650/1660 Super cards that have DVI-D connectors, but none explicitly say they're dual-link. Of these, I'd personally go for the Asus 1660 or 1660 Super, unless you insist on using an AMD GPU. But DL DVI-D support seems unlikely on Navi while being almost certain with Turing.

Thanks for the advice!

The only factor I have for older cards is that dual-DVI Eizo.

I'm not sure if I could use the Apple DP -> dual-DVI adapter that I have between the DP switch (which I'm planning to buy) and the Eizo.

I understand that DVI connectors have nothing to do with this thread, but I have (Tim Cook's) courage to ask:

Does a display adapter with a 5500 and a DVI connector exist?

Or were they wiped out by last gen? Is this a technical decision by AMD?

My spreadsheets show ~14W 'idle' power use (with the monitor still powered up) on the Sapphire RX 580 8GB Nitro+ LE I used for my testing. That's a higher performance 580, so I suspect other 580 cards will potentially use a bit less power (1-2W). But that's only with a single display. Let me see if I can get some quick figures for what happens when two 4K monitors are connected...

Found this thread:

https://www.techpowerup.com/forums/...e-rx580-power-consumption-30w-at-idle.263656/

Sapphire's 580 idling at 30W, others at 10W...

This is the difference I'm looking for.

Are there any charts anywhere for idle power use...?

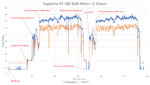

I just ran some tests, and the results are very interesting -- and again illustrate the problem with 'trusting' whatever power numbers software tells you. This is an image showing the Sapphire RX 580 Nitro+ LE at idle with a single 4K display connected, then with a second 4K display, then launching Horizon Zero Dawn and the built-in benchmark. Then I exit to the desktop, disconnect the second display, and launch HZD again and run the test a second time.

My Eizo is an SX3031 and I don't want to sell it. The price wouldn't be good, and there aren't Eizos at that price-quality point today, used or new.

What Eizo display are you using? Might be time to sell it and just upgrade to a modern DisplayPort monitor, though I know you're unlikely to get a good value out of going that route. Or you can try one of those 1650 Super or 1660 Super cards, or even the PowerColor RX 5500 and see if the DVI link is actually dual-link.

PowerColor explicitly says "SL DVI-D" but the connector looks like a standard DL DVI-D. (See: https://upload.wikimedia.org/wikipedia/commons/f/fb/DVI_Connector_Types.svg ) I'm 95-ish percent sure the GTX 16-series parts with DVI-D are dual-link as well -- there's at least one place (PCMag) that says the standard configuration for the cards is 1x DL DVI-D, 1x HDMI, and 1x DP.