juanrga :

That review uses a different concept of efficiency for its final graph. Using the ordinary definition of efficiency (performance per watt), the i7-8700K was more efficient than the R7-1800X, because the 1800X was 7.93% faster in Blender but consumed 9.15% more power.
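To make the arithmetic explicit, here is a minimal sketch that takes efficiency to mean performance divided by power and plugs in the two percentages quoted above. The function name `relative_efficiency` is just illustrative, not something from the review.

```python
def relative_efficiency(perf_gain_pct, power_gain_pct):
    """Performance-per-watt of chip A relative to chip B (1.0 means equal).

    Both arguments are chip A's advantage over chip B, in percent.
    """
    return (1 + perf_gain_pct / 100) / (1 + power_gain_pct / 100)


# Figures quoted above: the 1800X is 7.93% faster but draws 9.15% more power.
ratio_1800x = relative_efficiency(7.93, 9.15)

print(f"1800X perf/W relative to 8700K: {ratio_1800x:.4f}")
print(f"8700K perf/W advantage: {(1 / ratio_1800x - 1) * 100:.2f}%")
```

The ratio comes out slightly below 1, so with these numbers the 8700K is ahead by roughly 1%, which is a narrow margin.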

Also, he only tested the top i7-8700K model, which will have worse efficiency than other lower-clocked Coffee Lake models. That is the same reason the 1700 is more efficient than the 1800X.

Performance varies linearly with frequency, but power consumption varies nonlinearly. So reducing frequency increases efficiency with everything else the same.

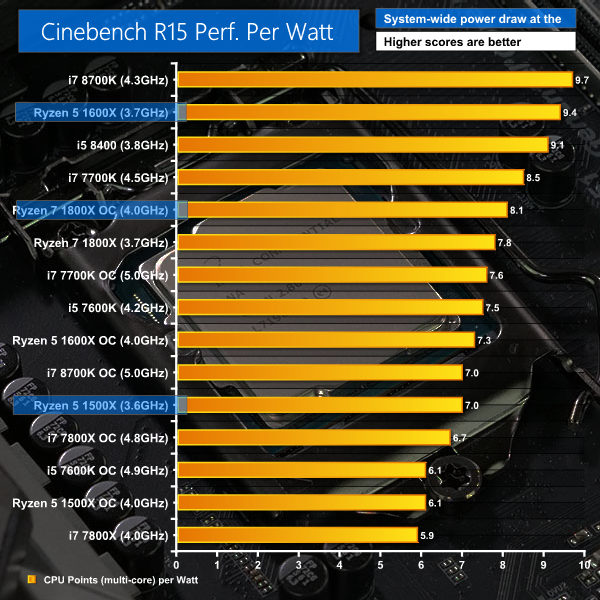
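A toy model of the frequency argument, as a rough sketch only. It assumes performance scales linearly with clock and that power scales with roughly the cube of the clock (dynamic power goes as C·V²·f, and voltage is assumed here to rise about linearly with frequency). The exponent and the clock values are illustrative assumptions, not measurements from any review.

```python
def performance(freq_ghz):
    # Assumption: performance is proportional to clock frequency.
    return freq_ghz


def power(freq_ghz, exponent=3.0):
    # Assumption: power grows as frequency ** exponent (about 3 if
    # voltage has to rise roughly in step with frequency).
    return freq_ghz ** exponent


def efficiency(freq_ghz, exponent=3.0):
    # Performance per unit of power, in arbitrary units.
    return performance(freq_ghz) / power(freq_ghz, exponent)


# Hypothetical clocks, chosen only to show the trend.
for f in (3.0, 3.5, 4.0):
    print(f"{f:.1f} GHz: relative efficiency {efficiency(f) / efficiency(3.0):.2f}")
```

Under these assumptions efficiency falls as the clock rises, which is the qualitative point being made; real chips also have static power and voltage floors, so the actual curve will differ.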

Other reviews measured efficiency. KitGuru used CB15 and found that the i7-8700K was 24% more efficient than the R7-1800X.

https://www.kitguru.net/wp-content/uploads/2017/10/Power-per-Cinebench.png

HFR measured efficiency using an x264 workload. Again, the i7-8700K was more efficient than the 1800X.

http://www.hardware.fr/getgraphimg.php?id=609&n=1

The most efficient chip was the i5-8400, again due to lower clocks.

Here's the interesting page, since the image is not being displayed: https://www.kitguru.net/components/leo-waldock/intel-core-i7-8700k-and-core-i5-8400-with-z370-aorus-gaming-7/6/

They don't test the R7-1700 in there, but given how the 1600X is right next to the i7-8700K, I would imagine the 1700 is up there as well. Keep in mind both have similar ranges: 3.6GHz base, 3.7GHz all-core, 4.0GHz 2-core and 4.1GHz XFR for the 1600X, and 3.0GHz base, 3.1GHz all-core (not 100% sure on this one), 3.7GHz 2-core and 3.75GHz XFR for the 1700. The interesting part is when the software is heavily multi-threaded. The 1700 will have monster efficiency and there's no way around it. Intel will have parity (or way better efficiency) with the i7-8700 instead, but until then, the R7-1700 is just better. Deal with it.

Also, interesting that in that same link they prove you wrong with the i5-8400. It's right *after* the 1600X.

As for the French site, well, look at the R7-1700 being more efficient than the i7-8700K. Funny how the two pieces of evidence you provide contradict each other. What gives?