Quite the opposite: AMD isn't being rational. They just have a low-power server part that they are desperately trying to make work as a desktop part.

With an EKWB EK-AIO Elite 360 D-RGB 360mm,

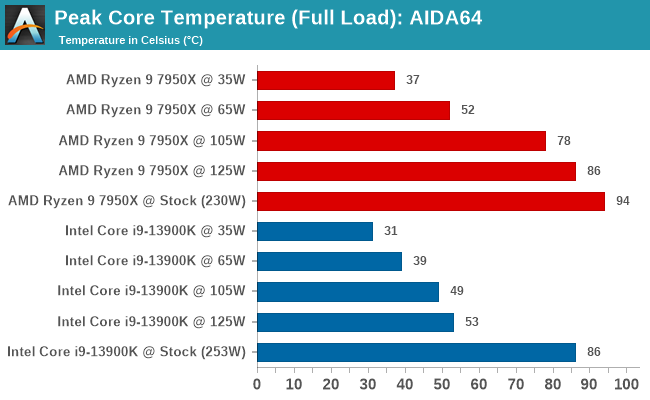

the 7950X can't even reach the power limit set by AMD because it hits the ~95 °C thermal limit first.

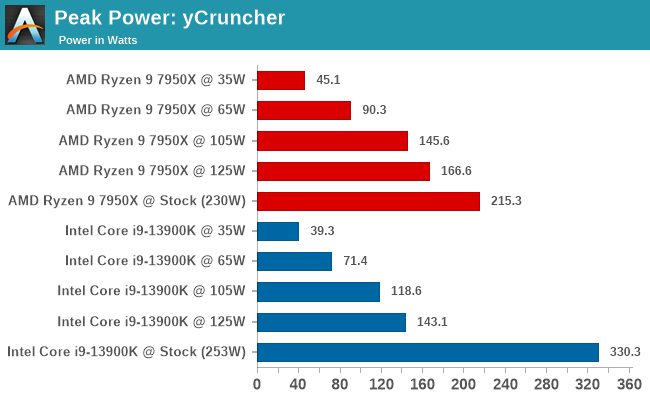

The 13900K reaches 330 W and stays well below its temperature limit, at 86 °C.

So which company pushes their CPUs more?

The one that tries to make its CPUs use more power than they can handle, or the one that leaves a good 30% of power headroom?

(If people would actually respect the limit that Intel sets.)

You are not supposed to use a desktop part for server workloads that would make your CPU run at 100% power all the time anyway.

Professionals like designers, architects, video editors and so on do need the grunt for the final renders, but they also have to deal with effects or calculations that only run single-threaded. If those can go even a little bit faster, that's a good reason for them to get a KS.