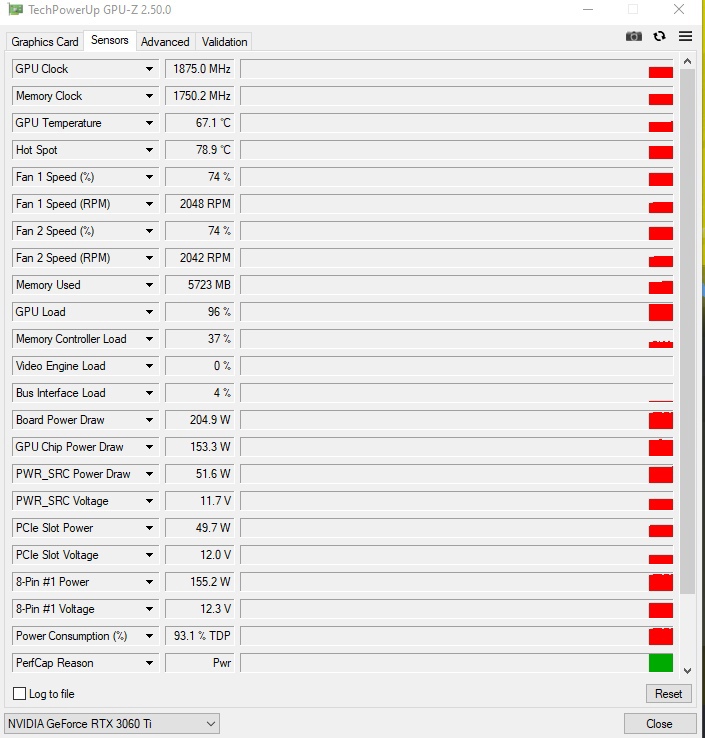

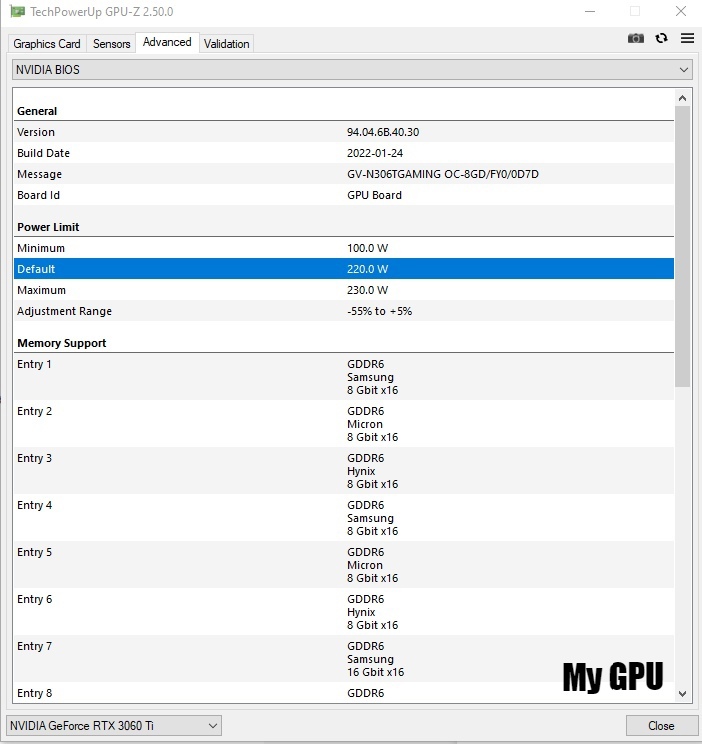

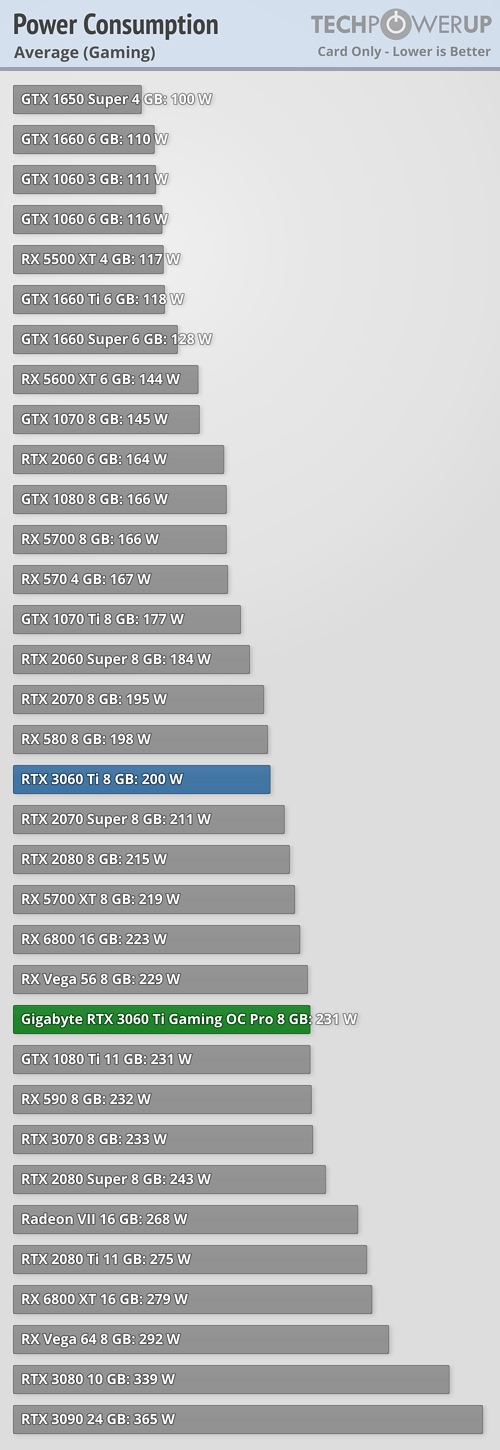

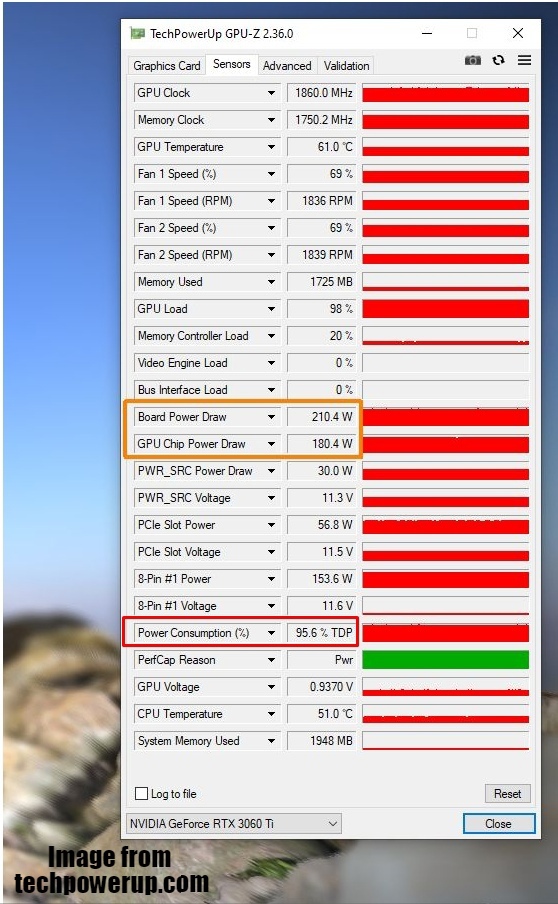

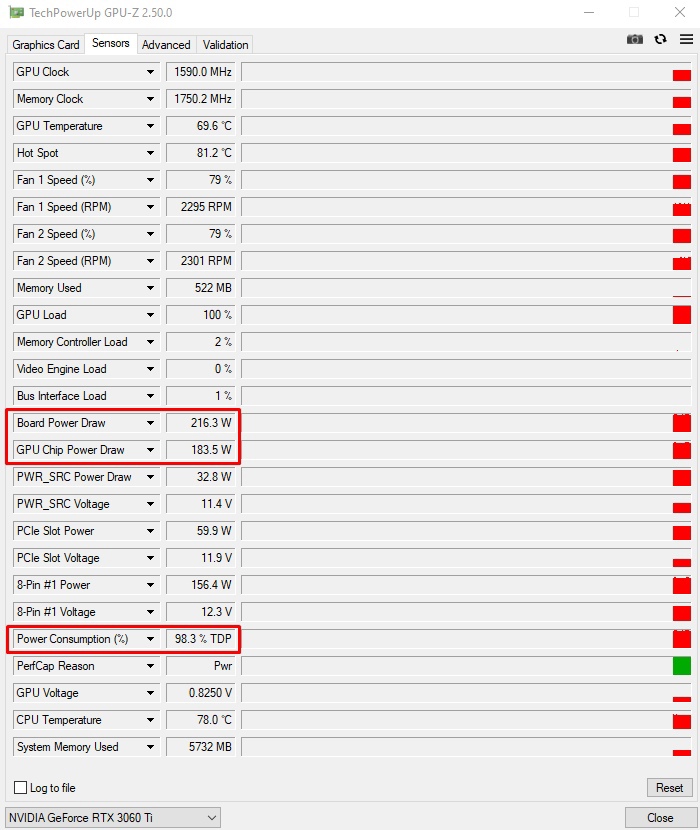

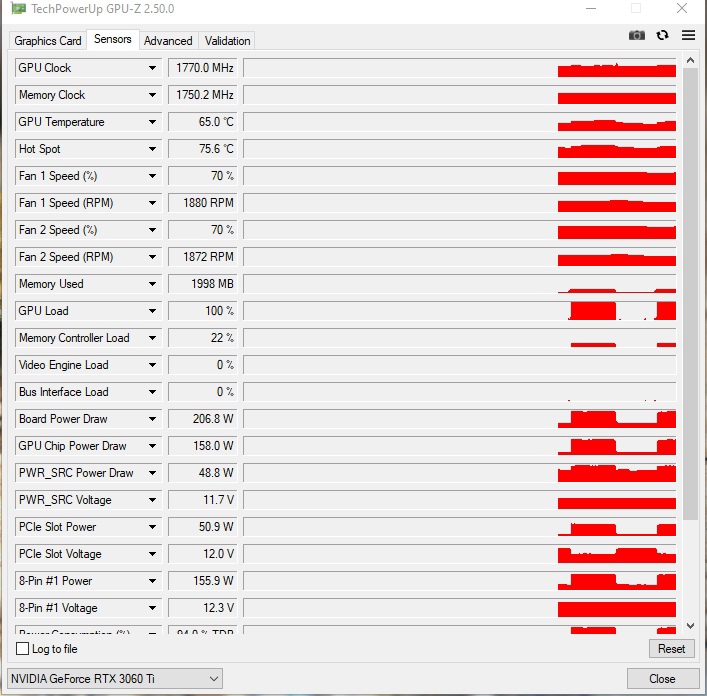

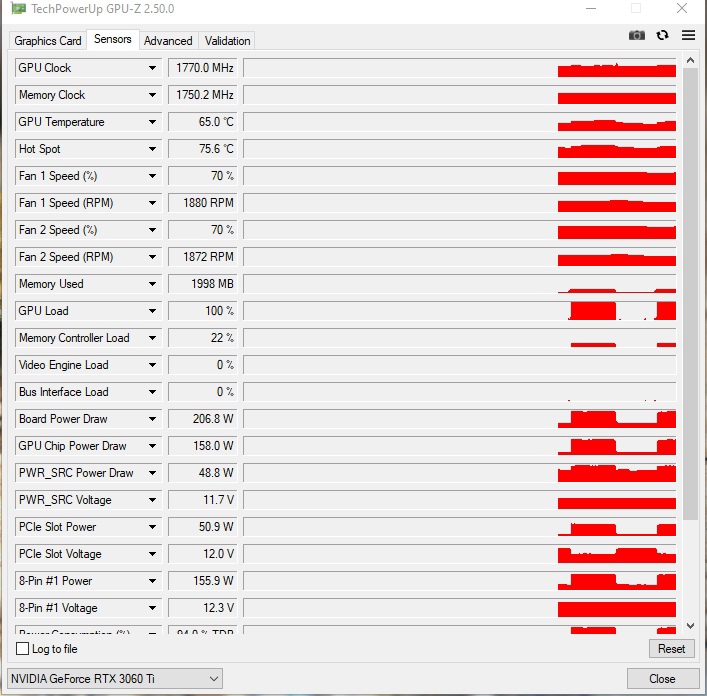

In FurMark, I get an average of 169 FPS in the 1080p GPU benchmark. Judging by the graph, the GPU frequency during the FurMark test does not exceed 1410 MHz. In GPU-Z, I can see the frequency drop from 1740 to 1410 MHz. If I run the 720p test instead, the frequency rises to 1515 MHz. In MSI Kombustor at 1080p, the GPU frequency rises to 1780-1800 MHz with an average of 62 FPS.

What confuses me is that in all test scenarios the reported power draw does not exceed 93-94% of TDP. Sometimes it goes up to 96% for a second. I've tried raising the power limit in Afterburner to 104%, but that didn't help. I also set the power management mode to prefer maximum performance in the NVIDIA settings, and that did not help either. When I look at other people's test results, they reach a maximum of 100 to 102% TDP.

I have a 650 W MasterWatt power supply. I checked the voltage on the 12 V line in AIDA64 (I know this is not entirely accurate): with the video card under load, the line reads 11.800 V, which is normal. Measured with a multimeter, the value could differ by 0.1-0.2 V. So what could be the reason?

Just in case, my GPU is a Gigabyte 3060 Ti Gaming OC LHR (ver 2.0).

Perhaps this is a glitch in GPU-Z itself. Thinking it through logically, in games my card draws 198-208 W, which is right at the maximum TDP of the 3060 Ti.
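A quick sanity check of that arithmetic, as a minimal sketch: it assumes the 200 W reference board power for the 3060 Ti (this particular card's default limit may be set differently in its BIOS), and `power_percent` is just an illustrative helper name.

```python
# Assumption: 200 W reference board power for the RTX 3060 Ti.
# A factory-overclocked card may use a different default limit.
REFERENCE_TDP_WATTS = 200.0


def power_percent(watts: float, tdp_watts: float = REFERENCE_TDP_WATTS) -> float:
    """Convert a power draw in watts to a percentage of the TDP."""
    return watts / tdp_watts * 100.0


# In-game draw range reported above.
for watts in (198, 208):
    print(f"{watts} W is {power_percent(watts):.1f}% of {REFERENCE_TDP_WATTS:.0f} W")
```

If the 200 W assumption holds, 198-208 W works out to roughly 99-104% of TDP, which would not match a 93-94% reading and is why I suspect the percentage shown rather than the card.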
