Cost per mm^2 is not really a great metric to use seeing as multiple SKUs use the same die (ex: 4070 and 4070ti use AD104).

If we assume that the 4070 Ti and 4090 both enable about the same % of their respective dies, then it's a fine metric.

Cost per GB is also nebulous seeing as they're using fewer memory chips per tier than the 30 series counterpart which impacts board cost.

The number of memory chips and data bus width scales linearly between the three SKUs I mentioned.

I think you're assuming that I'm talking about 4000-series vs. 3000-series, but I'm not. I'm only comparing 3 products: RTX 4090, RTX 4080, RTX 4070 Ti. What I'm saying is that if you plot the 3 of them in terms of GB/$ or mm^2/$, then the 4080 is the outlier. It's overpriced by about $150.
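To make that comparison concrete, here is a rough sketch of the arithmetic. The launch MSRPs, memory sizes, and rounded die areas below are public figures as I recall them, so treat them as assumptions rather than authoritative data. The `implied_price` helper is hypothetical, just for illustration. It also leans on the earlier assumption that each card enables a similar fraction of its die.

```python
# Launch MSRP (USD), memory (GB), and approximate die area (mm^2).
# Assumed public figures, rounded; not taken from the thread itself.
CARDS = {
    "RTX 4090":    {"price": 1599, "mem_gb": 24, "die_mm2": 609},  # AD102
    "RTX 4080":    {"price": 1199, "mem_gb": 16, "die_mm2": 379},  # AD103
    "RTX 4070 Ti": {"price": 799,  "mem_gb": 12, "die_mm2": 295},  # AD104
}

def implied_price(target, metric, refs=("RTX 4090", "RTX 4070 Ti")):
    """Price the target would carry at the reference cards' average $ per unit of metric."""
    rate = sum(CARDS[r]["price"] / CARDS[r][metric] for r in refs) / len(refs)
    return rate * CARDS[target][metric]

for metric in ("mem_gb", "die_mm2"):
    fair = implied_price("RTX 4080", metric)
    premium = CARDS["RTX 4080"]["price"] - fair
    print(f"{metric}: implied ${fair:.0f}, premium ${premium:.0f}")
```

With these inputs, the 4080's premium over the line drawn through the other two cards lands somewhere between $100 and $200 on either metric, which is where the "about $150" figure comes from.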

Their costs are not much higher than they were with the 30 series which is the point.

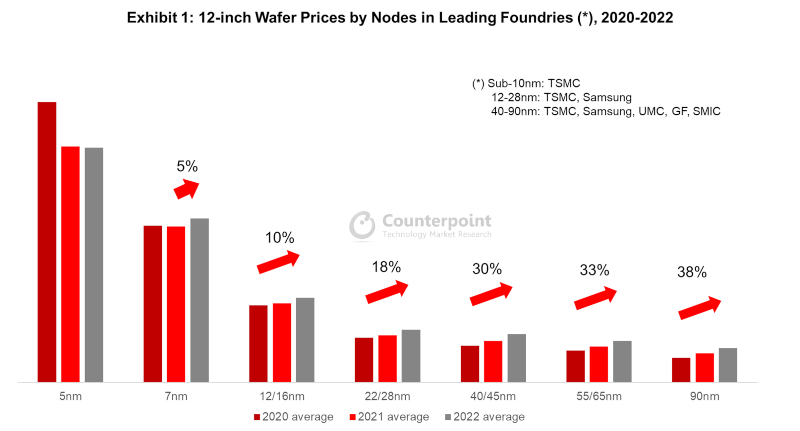

I thought we were in agreement that the 4000-series GPU dies appear to cost considerably more. If you don't see that, then you should review the data.

The 4060ti for example is only PCIe x8 which cuts down on board cost further not to mention the die size is less than half that of the 3060ti.

I'm skeptical just how much difference it makes, at either the die or board level. Still, even a couple $ is probably worth it.

As for why they decided to go with x8 now, I think we're seeing the impact of greater PCIe 4.0 penetration. I think they're betting that a card of that tier just doesn't need full x16. It won't make much difference on a PCIe 4.0 motherboard. It will matter more on a PCIe 3.0 board, but still not a deal-breaker.
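For a sense of scale, here is a quick back-of-the-envelope sketch of link bandwidth. The per-lane numbers are the usual approximate figures for usable throughput per direction after 128b/130b encoding, and `link_bandwidth` is just an illustrative helper, not anything from a real library.

```python
# Approximate usable bandwidth per lane, per direction, in GB/s.
# PCIe 3.0 runs 8 GT/s and PCIe 4.0 runs 16 GT/s, both with 128b/130b encoding.
GBPS_PER_LANE = {3: 0.985, 4: 1.969}

def link_bandwidth(gen, lanes):
    """Rough one-direction bandwidth in GB/s for a given PCIe generation and lane count."""
    return GBPS_PER_LANE[gen] * lanes

for gen in (3, 4):
    for lanes in (8, 16):
        print(f"PCIe {gen}.0 x{lanes}: ~{link_bandwidth(gen, lanes):.1f} GB/s")
```

So an x8 card in a PCIe 4.0 slot gets about the same bandwidth as an x16 card in a PCIe 3.0 slot, while the same x8 card in a 3.0 slot gets half of that.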

I guess games tend to stress PCIe bandwidth pretty hard, when you're using settings that put too much pressure on GPU memory. At least, that's what I think we saw with the RX 6500XT. With settings turned up well past the point that its meager 4 GB filled up, performance would be greatly affected based on whether it was in a PCIe 3.0 or 4.0 system.

They knew the crypto boom was just that well before the SKUs and pricing for the 40 series was decided upon.

That's impossible. I mean, everyone knew the risk of the crypto boom ending, but the GPU dies' specs were finalized probably 2 years before launch. You have to know what your price floor is and the approximate wafer costs, at that point in the development process. You have to build the right product for the market you expect to launch it into.

Once you've committed to the chip specs, about the only choice you have left is to cancel any that wouldn't be commercially viable, and that means all the development costs get flushed down the toilet and you get zero return-on-investment. So, it's critical to get the pricing right, years before launch.

The lesson they seem to have taken from the crypto boom was certainly people would pay more,

That's what I'm saying.

but that doesn't mean that their costs went up accordingly.

You just showed their GPU price at least doubled. Nvidia needs to make its profits, so they probably do about a 100% markup. Not only that, but a larger, hotter, higher-power board requires more expensive VRMs and a more expensive cooling solution. On top of that, their board partners have costs and need to make a profit.

The design costs for these chips are starting to stretch into the $billions. Also, people tend to be dismissive of software costs. Something like 90% of Nvidia's engineers are software. They need to get paid, too! Traditionally, you can ignore these NRE costs, but I think they're actually big enough now that you simply cannot look at chip fabrication costs alone to establish a cost basis for them.