I play enough older games that I also lean away from Intel. I guess I'm just hanging on to Pascal for a bit longer.

If you're considering the A770 16GB, I bought one just to show support for a 3rd player in the GPU game. I'm happy to report Intel has made huge strides in the older games with their latest drivers....

Review Nvidia GeForce RTX 4060 Ti Review: 1080p Gaming for $399


I game very little, but when I do it's using a 3600 at 1440p. This 4060ti has nearly double the performance of mine, and in fact has the best performance at that resolution of any sub-$400 card -- and the headline is meant to imply it's wholly unsuitable for 1440p gaming? Rather shameful, Jarred.

I don't know what review you read but the one I read has the 3060 at an average of 38 fps while the 4060ti averages 56 fps. That's not nearly double the performance.
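For anyone who wants to check that arithmetic, here is a minimal sketch using only the two averages quoted above (38 fps and 56 fps). The function names are made up for illustration, and which cards and settings produced those averages is whatever the review being cited used.

```python
def relative_uplift(old_fps: float, new_fps: float) -> float:
    """Fractional improvement of new_fps over old_fps (0.5 means +50%)."""
    return new_fps / old_fps - 1.0


def fraction_of_doubling(old_fps: float, new_fps: float) -> float:
    """How close new_fps gets to twice old_fps (1.0 means exactly double)."""
    return new_fps / (2.0 * old_fps)


# Averages quoted in the post above.
uplift = relative_uplift(38, 56)
toward_double = fraction_of_doubling(38, 56)

print(f"Uplift: {uplift:.1%}")                      # Uplift: 47.4%
print(f"Share of a doubling: {toward_double:.1%}")  # Share of a doubling: 73.7%
```

On those numbers the newer card is roughly 47% faster, which is a solid gain but well short of "nearly double".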

D

Deleted member 2838871

Guest

I think Gamers Nexus said it best...

"2016 called and they want their resolution back."

It's hard to put into words how bad 8GB VRAM is. A small drop in FPS is one thing, but texture pop-in is incredibly immersion breaking.

I don't know what review you read but the one I read has the 3060 at an average of 38 fps while the 4060ti averages 56 fps. That's not nearly double the performance.

To be pedantic, it's just over 2/3 of the way there. And the point remains. 30 fps is playable; 58 fps eminently so. This nonsense about a game at any frame rate below 60 looking like "all the characters are drunk" is just that. Your average Hollywood film runs just below 30fps, and -- Channing Tatum aside -- the actors rarely appear perpetually intoxicated.

The downside of console is the game costs. PC gaming tends to be cheaper.

The 4060 Ti's only cool factor is its power consumption.

From what I see for now, it's good for someone like me who has a 1920x1080 monitor with FreeSync at 75Hz maximum...

IT'S HARD TO SAY! THIS IS A SHAME! Nvidia shame for everybody


You telling me you don't wanna play your games as if your character was drunk?

It's just to answer a hypothetical that Nvidia might be counting on improved DLSS performance to make up for the lack in raw speed improvements.

Whatever your personal opinions of DLSS, I think you can probably set them aside for long enough to hopefully understand what Nvidia might or might not have been thinking.

BTW, I did say exclusive of frame generation. That's a whole other topic and not a simple apples-to-apples comparison like what I'd wish to see.

D

Deleted member 2838871

Guest

It's hard to put into words how bad 8GB VRAM is. A small drop in FPS is one thing, but texture pop-in is incredibly immersion breaking.

Not sure why anyone would even consider 8GB VRAM in 2023. My 1080 Ti from 2017 had 11GB for crying out loud.

Nvidia must be drunk. Even if the 4090 had 30% less performance I'd still buy it for the 24GB VRAM alone.

The downside of console is the game costs. PC gaming tends to be cheaper.

I actually just sold my Series X for $320 yesterday. I only used it for sports titles... and when I found out I can stream via Game Pass Ultimate onto the PC that was the deal maker... right now I'm playing MLB the Show 23 and it looks great even in stream mode.

I'm keeping the Switch though... I use it for the retro NES/SNES titles from when I was a kid.

Haven't owned a Playstation since the PS1 and PS2.

PC is still MasterRace!

To be pedantic, it's just over 2/3 of the way there. And the point remains. 30 fps is playable; 58 fps eminently so. This nonsense about a game at any frame rate below 60 looking like "all the characters are drunk" is just that. Your average Hollywood film runs just below 30fps, and -- Channing Tatum aside -- the actors rarely appear perpetually intoxicated.

You have no idea what you are talking about. 58 vs 60 fps means tearing, which looks terrible and causes me nausea. 67% vs 100% is not being pedantic; you just got called on your BS. Just because you're fine with garbage visuals doesn't mean the rest of us are.

Watched the GN review and seeing this basically equal or even LOSE to the 3060 ti that costs less?

Nvidia is smoking some potent stuff.

The 4060 non ti is basically all but guaranteed to be xx50 tier performance with a 60 tier nametag.

The 4060 ti REQUIRES a DLSS 3.0 use case to come out ahead by anything even meaningful.

99% of stuff does NOT support that. (It's early-days RTX all over again.)

This is a joke of a card.


3060Ti is faster than this gpu in some cases...

I have to agree with some other posts I saw that Nvidia must be leaning hard on frame generation.

Seriously, screw these walled gardens... but some are going to bend over/kneel/get on all fours for it anyway.


You have no idea what you are talking about 58 vs 60 fps means tearing, which looks terrible and causes me nausea. 67% vs 100% is not being pedantic, you just got called on your BS. Just because you're fine with garbage visuals doesn't mean the rest of us are.

Wow, such hatred. And a vast technical misconception too. If the card averaged 60 instead of 58 fps, it'd still have just as much tearing -- unless of course you have a syncing monitor, in which case it won't tear in either case. A multi-game average of "58 fps" doesn't mean that even one game runs precisely at 58 fps all the time, much less all of them ... and running slightly above your monitor's refresh rate causes tearing just as slightly below it does.
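The "slightly above tears just as slightly below does" point can be illustrated with a toy model. This is only a sketch under loud assumptions: perfectly constant frame times, a fixed 60 Hz display with no VSync or variable refresh, and a swap counted as "torn" whenever it lands anywhere other than exactly on a refresh boundary. The function name is made up for illustration.

```python
from fractions import Fraction


def torn_swaps(fps: int, refresh_hz: int = 60, seconds: int = 1) -> int:
    """Count buffer swaps that land mid-scanout in an idealized model.

    Assumes a constant frame time of 1/fps and no syncing of any kind.
    A swap is 'clean' only if it falls exactly on a refresh boundary.
    """
    torn = 0
    for k in range(1, fps * seconds + 1):
        swap_time = Fraction(k, fps)          # exact time of the k-th swap
        refreshes_elapsed = swap_time * refresh_hz
        if refreshes_elapsed.denominator != 1:  # not on a refresh boundary
            torn += 1
    return torn


print(torn_swaps(58))  # 56 of 58 swaps land mid-scanout
print(torn_swaps(62))  # 60 of 62 swaps land mid-scanout
```

In this model, 58 fps and 62 fps both tear on nearly every frame. Only a frame rate locked exactly to the refresh rate comes out clean, and a multi-game average is never that.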

D

Deleted member 2838871

Guest

3060Ti is faster than this gpu in some cases...

I have to agree with some other posts I saw that Nvidia must be leaning hard on frame generation.

Seriously, screw these walled gardens... but some are going to bend over/kneel/get on all fours for it anyway.

I read a comment somewhere yesterday that DLSS was implemented to give gamers that extra bit of performance... but developers are using it to save a buck by cheesing optimization. 🤣🤣

I do think a lot of the recent complaints regarding titles like Last of Us, Hogwarts and Jedi Survivor are hardware related because I don't have any issues with native 4K on Ultra settings and I don't use DLSS but I do think all PCs should be able to achieve solid performance on AAA titles and that's on the developers.

This is just embarrassing for Nvidia. The 4090 is the only really decent card so far. Everything else is over priced and under performs for its segment IMHO.

Yep this pretty much hits the nail on the head.

It's really a shame, because if this card was properly named "4060" and came in at $300 like it was meant to, it would be a killer product even with the downsides.

Oof... That's all I have to say......

Yep this pretty much hits the nail on the head.

Very underwhelming, although I guess we've known that for a few weeks.

Couldn't agree more.

Anyone on a 3xxx series card should ignore anything up to a 4080.

Well, they do. A 3060ti beats even a 2080 super for the most part. I think the performance should be above the 2080/S/TI at least!

This gen is a reach for profits from Nvidia. This segmentation sucks.


The only excuse for this card I have seen is..."It's fine for 1080".

But this is not the case, several reviews have pointed out that setting textures on high causes a VRAM bottleneck in 1080p in many games on the 8GB card.

It is not actually increasing resolution that causes these VRAM bottlenecks, it is simply setting textures to high, regardless of resolution.

Secondly...this thing costs $400. This is supposed to be at least a 1440p card. In fact last time I was looking for a 1080p monitor, I had difficulty even finding a decent one. 1440p is simply the new minimum on many screens.
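A rough back-of-the-envelope sketch of why texture quality, not output resolution, tends to dominate VRAM use. The assumptions are loud ones: uncompressed RGBA8 data at 4 bytes per texel, a full mip chain, and a single 4096x4096 texture picked purely for illustration. Real games use block compression and many render targets, so treat the numbers as an order-of-magnitude comparison only.

```python
def texture_bytes(width: int, height: int,
                  bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """Size of one uncompressed texture, optionally with its full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)  # next mip level is half size
    return total


def render_target_bytes(width: int, height: int,
                        bytes_per_pixel: int = 4) -> int:
    """Size of one screen-sized buffer at the given output resolution."""
    return width * height * bytes_per_pixel


MIB = 1024 * 1024
print(f"4096x4096 texture + mips: {texture_bytes(4096, 4096) / MIB:.1f} MiB")
print(f"1080p render target:      {render_target_bytes(1920, 1080) / MIB:.1f} MiB")
print(f"1440p render target:      {render_target_bytes(2560, 1440) / MIB:.1f} MiB")
```

Going from 1080p to 1440p adds a few MiB per screen-sized buffer, while a single large texture under these assumptions costs several times that, and a scene holds many textures. That fits the reports of 8GB cards hitting VRAM limits at 1080p once textures are set to high.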


It also costs more

No it doesn't, it's around $50 less at ebay right now

FunSurfer

Distinguished

SomeGuyonTHW

Reputable

Nvidia really making DLSS 3.0 exclusive to 4000 series just to be able to sell this thing.

Barely an improvement from previous gen without frame generation.


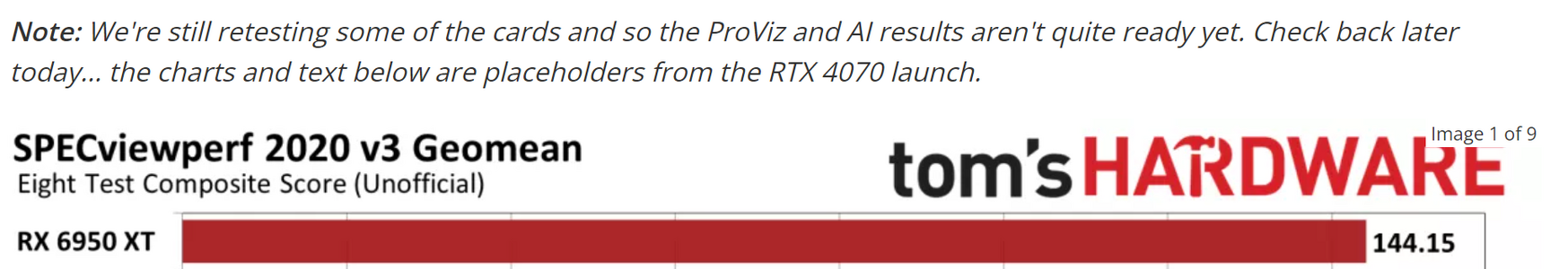

FYI, the professional/content creation portion of the review is for the 4070. Looks like you forgot to replace it with the 4060 Ti's data in the template.

I ran out of time, and ran out of steam. Sorry!

I still need to test/retest a few other cards so I can make the ProViz charts. I'm doing that now.

bourgeoisdude

Distinguished

No it doesn't, it's around $50 less at ebay right now

I was referring to new. At Newegg and Amazon prices, the 6800 is about $50 more. But point taken; I should have specified.

Personally, I want a little less power consumption than the 6800 would do so hoping the rumors about the Radeon 7600 are wrong and it is a decent card for the price. Not holding my breath though.

I read a comment somewhere yesterday that DLSS was implemented to give gamers that extra bit of performance...

But why - oh, that's right: DLSS is solution selling/marketing for RTX On performance impact.

[Strong IMO here] Raster looks plenty fine on GPUs; someone has to really nitpick in favor of RT and PT. Some of these visuals I could hardly give a duck about. "Ooh, the shadows! Ooh, the reflections!", etc - meanwhile, I'm more concerned about gameplay, or a good story:

-if my party of 3 can dps down this boss before I run out of my limited stock of 3 revival potions

-there's a visual novel giving me the feels

-playing a futuristic racer, watching for hazards on the track, attacks from opponents, while trying to keep the top spot

-can't forget some older emulated titles

I guess I'm just not the target audience for those technologies... I don't see the need for this hard push towards realism; some games are good without this, and can be worse with it. Art should come in many forms.

I do think a lot of the recent complaints regarding titles like Last of Us, Hogwarts and Jedi Survivor are hardware related because I don't have any issues with native 4K on Ultra settings and I don't use DLSS but I do think all PCs should be able to achieve solid performance on AAA titles and that's on the developers.

I think they're upper management related. They have the final say on all these projects.

-not enough allotted time, which happens a lot, even with the crunch

-sometimes making devs use engines not well suited for a particular genre, because the licensing fees

-pushing for projects built around macrotransactions (there's nothing 'micro' about these things anymore)

Some folks tend to point fingers/pitchforks at the developer, and I'd understand that, IF they weren't under a publisher.

Look at all the complaints towards Activision-Blizzard-King.

Basically, ABK's response: I can't hear you over the sound of all my money!!!

I doubt they will change direction until folks hit 'em where it hurts most: money.

In your case, you've brute forced your way around the problems with that hardware.

Maybe I missed it, but @JarredWaltonGPU, why no other models included in the DLSS benchmarks? In particular, why not the RTX 3060 Ti? Because, a big question I have is how much they're counting on improvements in hardware support for DLSS to make up for the otherwise lackluster improvement. Does DLSS (without frame-generation) provide a bigger benefit for the RTX 4060 Ti than its predecessor?

The problem with doing DLSS / FSR2 everywhere is just time. So my standard, like with overclocking, is to show just the card under review with a reference point. In this case, DLSS 2 Quality upscaling improves performance (in the games that support it) by an average of around 50%: 69% is the geometric mean for the ray tracing games, but the non-RT games show anywhere from an 8% improvement (Forza Horizon 5) to a 38% improvement.

Frame Generation improves "performance" by 95% when combined with Quality upscaling, at 1440p and in the games that supported it from my test suite. So that's an additional ~40% boost to frames to monitor. Like I said in the review, however, that 40% feels more like ~15% because they're not "real" frames.
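For readers trying to reconcile the 95% and ~40% figures: percentage uplifts stack multiplicatively, not additively. The sketch below assumes (this is a reading of the post, not something it states outright) that the "additional ~40%" is measured on top of Quality upscaling alone, in the subset of games that support Frame Generation. The function name is made up for illustration.

```python
def implied_factor(combined_uplift_pct: float, known_uplift_pct: float) -> float:
    """Given a combined uplift and one of its two multiplicative parts,
    return the other part as a speed factor (1.0 means no change)."""
    combined = 1 + combined_uplift_pct / 100
    known = 1 + known_uplift_pct / 100
    return combined / known


# +95% combined and ~+40% from Frame Generation, both taken from the post above.
upscaling_factor = implied_factor(95, 40)
print(f"Implied upscaling-only uplift: {upscaling_factor - 1:.1%}")  # 39.3%
```

Under that assumption, upscaling alone would account for roughly +39% in those particular games, with Frame Generation multiplying that by about 1.4 to reach +95%. Subtracting the percentages instead (95 - 40 = 55) would overstate the upscaling share.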

I game very little, but when I do it's using a 3600 at 1440p. This 4060ti has nearly double the performance of mine, and in fact has the best performance at that resolution of any sub-$400 card -- and the headline is meant to imply it's wholly unsuitable for 1440p gaming? Rather shameful, Jarred.

Cut the shame crap, first off. The headline is just a headline, not the full story. Others think I scored it too high, some think it deserves a 5-star review, whatever.

Depending on the game, resolution, settings, and desired FPS, the 4060 Ti can handle 1440p. My point (in the headline) is that it's more of a 1080p gaming card. And it still costs $399. Nvidia said as much, and I quote from an email:

"I think the sweet spot for this card is 1080p. Abd that is what A LOT of people play at."

I even left in the typo. That was in response to an email I sent the other day saying:

"Fundamentally, I think the 8GB VRAM capacity and 128-bit bus are going to be a bit of an issue at 1440p in some modern games. DLSS can help out, but really I can't help but feel that the 30-series memory interfaces were better sized than the 40-series (except for the 4090). I'm not alone in that, I know, but it would have been better from a features standpoint to have 384-bit and 24GB on the 4090, then 320-bit and 20GB on 4080, 256-bit and 16GB on the 4070-class, 192-bit and 12GB on the 4060-class, and presumably 128-bit and 8GB on the 4050-class. Obviously that ship has long since left port, and the 4060 Ti 16GB will try to overcome the VRAM limitation to some extent (when it ships), but it's still stuck with a 128-bit interface."
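The bus-width and capacity pairings in that email follow from how GDDR6/GDDR6X is wired: each memory chip has a 32-bit interface, and 2GB (16Gb) chips were the largest in common use on these cards. A minimal sketch of that relationship follows; the function name and the `clamshell` flag are made up for illustration, and the clamshell layout (two chips sharing each 32-bit channel) is mentioned as one way a 128-bit card can reach 16GB.

```python
GDDR6_CHIP_BUS_BITS = 32  # each GDDR6/GDDR6X chip exposes a 32-bit interface


def vram_capacity_gb(bus_width_bits: int, chip_capacity_gb: int = 2,
                     clamshell: bool = False) -> int:
    """VRAM capacity implied by a memory bus width.

    clamshell=True models two chips sharing each 32-bit channel,
    doubling capacity without widening the bus.
    """
    if bus_width_bits % GDDR6_CHIP_BUS_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // GDDR6_CHIP_BUS_BITS
    if clamshell:
        chips *= 2
    return chips * chip_capacity_gb


for bus in (384, 320, 256, 192, 128):
    print(f"{bus}-bit -> {vram_capacity_gb(bus)} GB")

print(f"128-bit clamshell -> {vram_capacity_gb(128, clamshell=True)} GB")
```

This reproduces the 24/20/16/12/8GB ladder from the email. It also shows why a 16GB card on a 128-bit bus gets more capacity but no more bandwidth: the extra chips share the same channels.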

D

Deleted member 2838871

Guest

This is just embarrassing for Nvidia. The 4090 is the only really decent card so far. Everything else is over priced and under performs for its segment IMHO.

Yep. I've said this from the beginning. The 4090 is the only 40 series card worth purchasing... even if it is overpriced. I don't know what I'd rather have... the 70-80% improvement over my previous 3090 for $1700.... or the normal 30% improvement you get from gen to gen for $1000.

Probably the former if you are talking about paying for performance.

Some of these visuals I could hardly give a duck about. "Ooh, the shadows! Ooh, the reflections!", etc - meanwhile, I'm more concerned about gameplay, or a good story:

I'm a big eye candy fan...

From The Last of Us... max settings with RT.

Now I can't tell you where the RT begins or ends... but it sure does look amazing. These screenshots don't do it justice.

In your case, you've brute forced your way around the problems with that hardware.

... as planned when I went with AM5. Might as well go all in and sit pretty for a few years.
