Review Nvidia GeForce RTX 4070 Ti Review: A Costly 70-Class GPU


- Status

- Not open for further replies.

PEnns

Reputable

What a surprise!! Another card for scalpers and whales... made palatable by your friendly reviewers at certain tech websites. And those are REALLY trying to make everyone believe this obscene nonsense of a pricing scheme is OK, because, you know, it's not bad on Ultra settings (now some are using the pathetically named "Epic" setting) for a card that starts at $799 (good luck finding such a price!!)

I find it really offensive and borderline unethical for a bunch of tech writers to peddle these cards and make it sound like it's perfectly normal for rubes to buy such overpriced toys.

But yeah, the fat cats at NGreedia still want their fat bonuses, although that gravy train, aka cryptomania, was sunk a long time ago.


rDigital

Distinguished

I don't blame the scalpers, they are simply seeing a supply side market opportunity and embracing it. GPUs and computer components (along with most luxury consumer commodities) are one of the most free markets of all. People simply do not need these things to live, work (rare) or eat.

Nvidia and AMD see the writing on the wall. The market WILL bear much higher prices than they were charging. This means they were leaving a lot of meat on the table. I'd rather the maker or retailer get that money than an opportunistic scalper.

GPU mining of crypto did not help anything. People are quite wary of used GPUs being sold by the ton on eBay now that the bottom has fallen out of proof-of-work crypto. Buying a used GPU now is like buying a used car after Hurricane Katrina. The shoddy used GPUs will only push more people towards the 4000 and 7900 series respectively, thus keeping the market a little higher still.

This pricing is better for everyone in the long run. The scalpers are demoralized. They are still buying up all of the high-end cards, but it seems the only ones that they can still make money on are the 4090s. 4080s on eBay go for at or a little less than retail and the scalpers are eating the tax. Without the market pressure from mining, prices may start to slowly go down over time, or at least freeze and let inflation lighten the blow over time. If Nvidia had released the 4070 Ti at $500, there would effectively be ZERO available at retail.

Prices will always meet demand. If you don't like it, don't buy it. Vote with your wallet. For those who really want to get something to play at 1080p for the next few years, the RX 6600 is an amazing and efficient value right around $200.


LTT has a nice image showing how insanely these new GPUs are priced compared to previous gens.

Nvidia has basically doubled the price of GPUs since the 1070.

(and this assumes that the 4070 Ti will actually be sold at $799; some board partners are already selling at $899+)

(and this assumes you own $; in many parts of the world you can tack on another 20%-30% in price due to currency losses against the $)

Last I checked, people haven't doubled the amount of money they have in a few years' time. It's no wonder GPU sales are in the gutter and Nvidia and AMD are cutting orders at TSMC. Not sure what they think they'll achieve by making GPUs flat-out unaffordable.


rDigital

Distinguished

Microcenter has them pre-listed with a few $800 models (that will never be restocked after the initial drop) and models ranging all the way up to $1050. Completely unacceptable pricing for a -70 part.

Woof.

AgentBirdnest

Respectable

> LTT has a nice image showing how insanely these new GPUs are priced compared to previous gens.
>
> Nvidia has basically doubled the price of GPUs since the 1070.

And just to drive that point home - the 2070 Super is only 3.5 years old.

The price of a x070 Ti has gone from $450 to $800 in under 4 years. :-/
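For anyone who wants to put a number on that jump, here is a minimal sketch of the percentage calculation. It uses only the two prices quoted in the post above ($450 and $800); the function name `percent_increase` is just an illustrative choice, and the prices are the poster's figures rather than verified launch MSRPs.

```python
def percent_increase(old_price: float, new_price: float) -> float:
    """Return the relative change from old_price to new_price, in percent."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (new_price - old_price) / old_price * 100.0


# Prices as quoted in the post (USD); not independently verified.
print(f"{percent_increase(450, 800):.1f}% increase")
```

On these two figures the increase works out to a bit under 78%, so it is a large jump but not quite a doubling.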

ohio_buckeye

Illustrious

> Microcenter has them pre-listed with a few $800 models (that will never be restocked after the initial drop) and models ranging all the way up to $1050. Completely unacceptable pricing for a -70 part.

Probably right. My local Microcenter shows 2 models at 799. A PNY and a Zotac for that. The rest of them go over 800 and up over $1000. For that price they can keep them. They've got 7900XT sitting for 900. Why would I pay over 1000 for 800 dollar cards??? I'll keep my 3080.

hannibal

Distinguished

> Probably right. My local Microcenter shows 2 models at 799. A PNY and a Zotac for that. The rest of them go over 800 and up over $1000. For that price they can keep them. They've got 7900XT sitting for 900. Why would I pay over 1000 for 800 dollar cards??? I'll keep my 3080.

Because it is an Nvidia GPU it will sell like hotcakes, because people cannot afford the 4080 and 4090, so this is the "affordable" high-end option in Nvidia's GPU offering...

And if that is too high... the 4050 at $500 will save those who don't have money for the 4070 Ti...

I think that upgrading a GPU every 4 years is too short a time period... Have to keep the GPU longer than that. So Nvidia is making a green solution to the electronic waste problem! People keep GPUs longer, less electronic waste! Thanks Nvidia for saving the earth!

😉

rDigital

Distinguished

> Probably right. My local Microcenter shows 2 models at 799. A PNY and a Zotac for that. The rest of them go over 800 and up over $1000. For that price they can keep them. They've got 7900XT sitting for 900. Why would I pay over 1000 for 800 dollar cards??? I'll keep my 3080.

Agree. Same at the Mayfield, OH Microcenter. They have 25+ 7900XT sitting there doing nothing. Most are the reference cooler design, so people in the know are probably avoiding them like the plague with the current issues AMD is having.

Your 3080 should be kicking ass and taking names for a long time. I just finally retired my GTX 1070 this year, to my kids; it still plays games pretty well on reasonable settings. Over 100 fps in COD: MW2 Warzone at 1080p with a capable CPU.

> They're only "wrong" when everyone refuses to pay them. Unfortunately, we're being shown time and time again that there are apparently enough people willing to spend $800 for this level of performance that we'll continue to see them. As stated in the conclusion, if Nvidia had tried to sell this as a $600 card, or a $500 card, and then scalpers just snapped them all up and asked for $800 or more, we'd be right back where we started. Except then we'd have scalpers contributing nothing and taking a chunk of the profits.
>
> So yeah, don't buy a $800 card if you don't want to spend that much. Wait for prices to come down, or go with a cheaper and slower alternative. But if others keep paying a lot more than you're willing to pay, nothing is going to change.

Don't try to legitimize this, it makes you look like a shill.


ohio_buckeye

Illustrious

> Agree. Same at the Mayfield, OH Microcenter. They have 25+ 7900XT sitting there doing nothing. Most are the reference cooler design, so people in the know are probably avoiding them like the plague with the current issues AMD is having.
>
> Your 3080 should be kicking ass and taking names for a long time. I just finally retired my GTX 1070 this year, to my kids; it still plays games pretty well on reasonable settings. Over 100 fps in COD: MW2 Warzone at 1080p with a capable CPU.

If memory serves, that 1070 is equivalent to a 1660 Super. I think in games that support it you may be able to turn on FreeSync as well. I know that, at least on my 3080, I played with that and could enable it in Gotham Knights.

----------------

Moving to a Mac??? As someone who was once Apple certified.....WHY?!?!?!?!? I still don't care for them.


Deleted member 431422

Guest

I own a 21-year-old Volvo V40. I'd rather spend that $800 on new parts so I can drive to work safely than on a new GPU that gives me nothing.

hjominbonrun

Distinguished

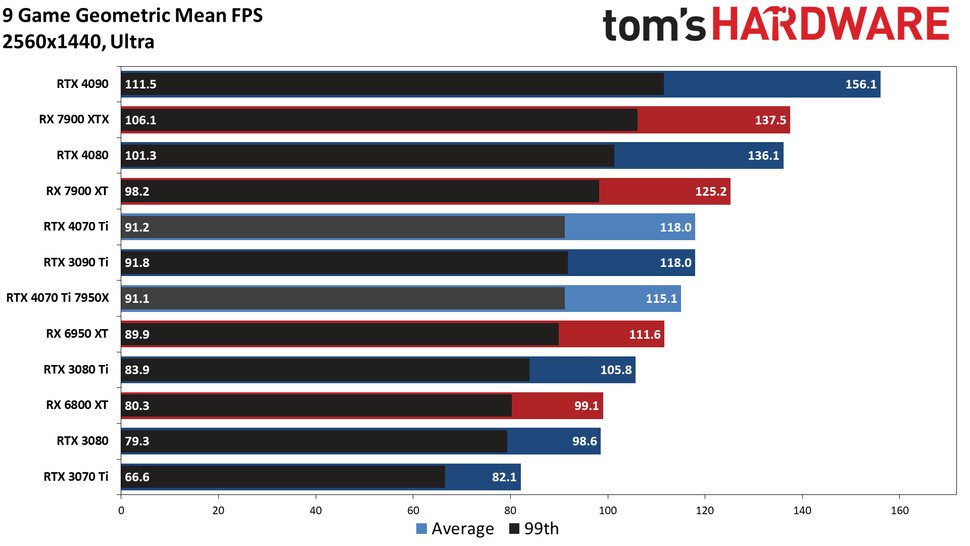

What is causing the average / mean frames to change?

I notice that the 4090 RT average was 103.4 and is now 104.9 at 1440p ultra RT, and the non-RT average was 153.8 before and is now 156.1.

Do you rerun the tests for new versions of the drivers?

I have them in my personal price to performance spreadsheet and they seem to be changing.


Good GPU but way overpriced, same as the 4080. Yes, there is high inflation, but it is nowhere near the amount prices have increased since the 30 series. Inflation in the last 2 years is, say, 15-20%; the 4070 Ti is about 45% more and the 4080 is almost 100% more.

They may have looked at what people paid before at scalped prices, but that was when you could mine Ethereum and make a return on it. Now you can't, so good luck with those prices! The 4080 FE seems to have been always in stock this last month, which shows the demand is not there.
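The inflation comparison above can be made a little more concrete. The sketch below strips cumulative inflation out of a nominal price increase; the 45% and 100% increases and the 15-20% inflation range are the poster's rough estimates, not verified figures, and `real_increase` is a hypothetical helper name.

```python
def real_increase(nominal_pct: float, inflation_pct: float) -> float:
    """Price increase in percent after removing cumulative inflation."""
    nominal = 1.0 + nominal_pct / 100.0
    inflation = 1.0 + inflation_pct / 100.0
    return (nominal / inflation - 1.0) * 100.0


# Poster's estimates: nominal increases vs. the previous generation.
for card, nominal_pct in [("4070 Ti", 45.0), ("4080", 100.0)]:
    for inflation_pct in (15.0, 20.0):
        real = real_increase(nominal_pct, inflation_pct)
        print(f"{card}: {nominal_pct:.0f}% nominal, "
              f"{inflation_pct:.0f}% inflation -> {real:.1f}% real")
```

Even at the high end of that inflation range, the estimates still leave a real increase of roughly 21% for the 4070 Ti and roughly 67% for the 4080, which is the point the post is making.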


JarredWaltonGPU

Splendid

> It would be interesting to see power consumption with and without dlss 3

I'm not going to try to make things pretty, but I do have the data. (1) = 1080p medium, (2) = 1080p Ultra, (3) = 1440p Ultra, and (4) = 4K Ultra (in the file names on the left). Here are the DLSS numbers:

And here are the non-DLSS numbers:

Without spending a ton of time analyzing things, it looks like DLSS3 in general uses a bit more power than DLSS2 while delivering higher (generated) FPS. Depending on the game, enabling DLSS2 can drop power use, particularly if you hit CPU bottlenecks — which makes sense as in that case the GPU isn't working as hard to render 1440p upscaled to 4K instead of native 4K, as an example.

JarredWaltonGPU

Splendid

> Ultra doesn't do much for visual quality and slows down the FPS.
>
> If people knock this default setting down, they're going to have much better experiences. It's somewhat amusing when I see 3060 Ti users can enjoy the heck out of a game, then a guy with a 4090 posts about his fury that he can't enjoy the same game because it won't run at 144Hz at 4K with ultra RTX on.
>
> This 4070 Ti looks like it's at the low-end borderline where you can start to push over 60fps at 4K (with 'high', not 'ultra', and definitely not with RTX), and the requisite supporting components (high-end CPU and 4K 144+Hz monitor) to support that performance cost a whole lot more than what you need to accompany a cheap 3060 Ti paired with a 1440p 60-144Hz monitor.

Ultra removes the CPU bottleneck more, and while today's games may not always look all that different at ultra vs. high, in the long run it's just a number and things will inevitably trend downward. You can see the 1080p medium results, where everything becomes CPU limited. 1440p at high would generally perform close to 1080p at ultra. Basically, I have to choose a setting and stick with it, and since I'm showing what a GPU is potentially capable of handling, maxing out (mostly) the settings does that better in my book.

For CPU reviews, you can make the argument that testing at high makes more sense, because you want to shift the bottleneck back to the CPU if possible. This is also why Paul tests with a 4090 now, and conversely why I test with a 13900K. Put the focus on the component you're testing as much as possible. In an ideal world, with infinite time and systems and parts, you'd test each GPU on each CPU. But just gathering the data for GPUs from one CPU can take weeks, depending on how many GPUs you want to test.

Of course in Canada the launch prices are considerably higher even after currency conversions. Whatever.

JarredWaltonGPU

Splendid

> MSRP for the RTX 3090 Ti is $2000 USD.

Nvidia "unofficially" dropped the price of the 3090 Ti FE to $1,099 at Best Buy. Other companies followed; this was one of the things that pissed off EVGA's CEO. Then those cards mostly sold out, they're no longer being manufactured (AFAIK), and prices have crept back up. But the number of units for 3090/3090 Ti has dropped substantially. Plus, suggesting anyone with half a brain would pay $2000 for a 3090 Ti that is provably slower and worse in virtually every scenario than the $1,200 4080 is silly. So, like I said in the text, I punted a bit on the prices for FPS/$.

> What is causing the average / mean frames to change?
>
> I notice that the 4090 RT average was 103.4 and is now 104.9 at 1440p ultra RT, and the non-RT average was 153.8 before and is now 156.1.
>
> Do you rerun the tests for new versions of the drivers?
>
> I have them in my personal price to performance spreadsheet and they seem to be changing.

I've retested some of the cards on a different PC, with different drivers, and a slightly different gaming suite. I suppose it depends on what you're looking at. The original 4090 and 4080 launch reviews used a 12900K. Then the 13900K and 7950X arrived (technically before the 4080) and I got new test hardware, so I switched to the 13900K on the AMD 7900 reviews. Now I'm still using the 13900K with the 4070 Ti, and I had to also retest every other GPU in these charts on that system, generally using the latest drivers.

I also dropped Fortnite from the benchmarks, because the latest update totally broke my test sequence and RT / Lumen don't work in Creative mode, so I literally cannot test the latest GPUs on the previous Fortnite benchmark. I added Spider-Man: Miles Morales as a replacement, because it's easy enough to benchmark and also supports DLSS 2/3, FSR 2, and XeSS. I added A Plague Tale: Requiem to the list of benchmarks as well, again because it's easy enough to test, has DLSS 2/3 support, and represents a modern game engine. Besides the above, with the 7900 review, I noticed Forza Horizon 5 performance had dropped quite a bit on Nvidia cards. I pinged my Nvidia contacts and they said they were aware of the issue and it was being fixed in a future driver. The launch (preview) driver for the 4070 Ti includes the fixes, so I retested all of the Nvidia cards in Forza.

TL;DR: I routinely retest some games on newer drivers or with new patches if I notice a discrepancy, and switching to a new test bed (plus a secondary AMD test bed) also adds to the "fun."

Brubbler

Prominent

I, like many others, was excited for the 4000 series for a multitude of reasons. Now, I'm not.

People calling to not support Nvidia just isn't going to happen given how people are: new builders, rubes, impulsive spenders, FOMO, desperation, etc. These overpriced GPUs will sell enough to send a message to Nvidia that they can get away with duping enough of the general public to turn a profit and satisfy investors.

All of this b*tching and moaning, while justified and IMO required, will accomplish little. All we can do is wait a year or two for a potential price drop, which most likely won't be significant anyway, so we wait for the 5000 series, which will be more of the same BS fleecing and false marketing.

Gotta have the latest and greatest to play poorly optimized games? It's little more than bragging rights and it's preposterous. RT, while pretty, is still a heavy performance hit, and buggy DLSS is still only piecemeal in games. Again, paying a hefty premium for very little.

And here we are: overpriced pap which will steer people back to the 3000 series, which will now more than ever continue to sell well above MSRP. This is a disaster for enthusiasts with no end in sight. Nvidia will attempt to lure people back by perhaps dropping prices next year(?), but this drop will be a mere pittance of what it should be. I'm sure not holding my breath.

Screw this gen from AMD and Nvidia, they can suck it. Sticking with my trusty 1080 Ti, it plays everything just fine.


hjominbonrun

Distinguished

> Do you not know what "almost" means? And while some would take "palatable" to mean really tasty, that's not the way I normally use it. I use it more as "acceptable but not awesome." I wouldn't call an excellent dinner "palatable," I'd say it was delicious or some other word that means I really like it. Taco Bell is palatable, for example. So is Wendy's. But neither is great, just like an $800 replacement that's only moderately faster than the outgoing $800 cards.

I did the math and for me, with a 6700XT, if I were to prioritise RT at 1440p, and assuming a starting price of £800 for the 4070TI, as ugly as the price is, this is the best value-for-money upgrade for me, as my 1440p RT frames will increase from 21 (mean) to 62.7, resulting in me paying £37.81 per frame of increase over and above what I already have.

If I were to prioritise non-RT frames, then for me the RX7900XT at £890 would be the best value, as I would be paying £18.16 per extra frame going from my current average of 76.20 to 125.20 on the 7900.

So the 4070TI is the best value from the RT point of view, if you have my GPU.

Also, I just checked, and if you have a new build, then the 4070TI also has the most frames for your buck.

As ugly as it is, because of the high prices of everything else, the 4070TI is the best value for money. (EDITED: for ray tracing)
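The "price per extra frame" figure in this post can be sketched as below. This is only an illustration of the calculation as described (card price divided by the FPS gained over the card already owned); the function name `cost_per_added_frame` is hypothetical, and the poster's actual spreadsheet is not shown, so it may use other inputs.

```python
def cost_per_added_frame(price: float, current_fps: float, new_fps: float) -> float:
    """Card price divided by the FPS gained over the card already owned."""
    gained = new_fps - current_fps
    if gained <= 0:
        raise ValueError("new card must be faster than the current one")
    return price / gained


# Non-RT figures from the post: RX 7900 XT at £890, 76.20 -> 125.20 fps.
print(f"£{cost_per_added_frame(890, 76.20, 125.20):.2f} per added frame")
```

With the non-RT figures this reproduces the £18.16 quoted in the post. Applying the same formula to the RT figures as quoted (£800, 21 to 62.7 fps) gives roughly £19.18 rather than £37.81, so the RT number presumably comes from inputs that are not shown here.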

hjominbonrun

Distinguished

> Nvidia "unofficially" dropped the price of the 3090 Ti FE to $1,099 at Best Buy. Other companies followed; this was one of the things that pissed off EVGA's CEO. Then those cards mostly sold out, they're no longer being manufactured (AFAIK), and prices have crept back up. But the number of units for 3090/3090 Ti has dropped substantially. Plus, suggesting anyone with half a brain would pay $2000 for a 3090 Ti that is provably slower and worse in virtually every scenario than the $1,200 4080 is silly. So, like I said in the text, I punted a bit on the prices for FPS/$.
>
> I've retested some of the cards on a different PC, with different drivers, and a slightly different gaming suite. I suppose it depends on what you're looking at. The original 4090 and 4080 launch reviews used a 12900K. Then the 13900K and 7950X arrived (technically before the 4080) and I got new test hardware, so I switched to the 13900K on the AMD 7900 reviews. Now I'm still using the 13900K with the 4070 Ti, and I had to also retest every other GPU in these charts on that system, generally using the latest drivers.
>
> I also dropped Fortnite from the benchmarks, because the latest update totally broke my test sequence and RT / Lumen don't work in Creative mode, so I literally cannot test the latest GPUs on the previous Fortnite benchmark. I added Spider-Man: Miles Morales as a replacement, because it's easy enough to benchmark and also supports DLSS 2/3, FSR 2, and XeSS. I added A Plague Tale: Requiem to the list of benchmarks as well, again because it's easy enough to test, has DLSS 2/3 support, and represents a modern game engine. Besides the above, with the 7900 review, I noticed Forza Horizon 5 performance had dropped quite a bit on Nvidia cards. I pinged my Nvidia contacts and they said they were aware of the issue and it was being fixed in a future driver. The launch (preview) driver for the 4070 Ti includes the fixes, so I retested all of the Nvidia cards in Forza.
>
> TL;DR: I routinely retest some games on newer drivers or with new patches if I notice a discrepancy, and switching to a new test bed (plus a secondary AMD test bed) also adds to the "fun."

You must be the most hardworking person in the building.

miroslavhm

Distinguished

From my experience with video accelerators, pretty much since this tech was created, regardless of the video card generation, if the memory bus is less than 256-bit, the card is useless and depends on software magic. This has been proven on any brand, be it AMD, NVidia, SunSystem, etc. NVidia this generation pretty much did the same marketing as during the 9600 series some 15ish years ago: narrow bus, a lot of data compression, and this time AI added on top of that, simply because the data compression is not enough anymore. Well, these chips are great for scientific research and AI, but somehow I don't think they are even close to being worth it for graphics. And it's 12GB RAM... Anyhow, at this point in time I personally would not buy a gaming card with less than 16GB VRAM and a bus and ROPs that can utilize it properly. Let's see what AMD will pull out. If they turn their back on the gaming market, my guess is it will create an opportunity for Intel to grab some share. NVidia and AMD are pretty much designing VERY expensive chips for AI, cars IO, big data. Nothing worthwhile for gaming here.
