LokkenJP


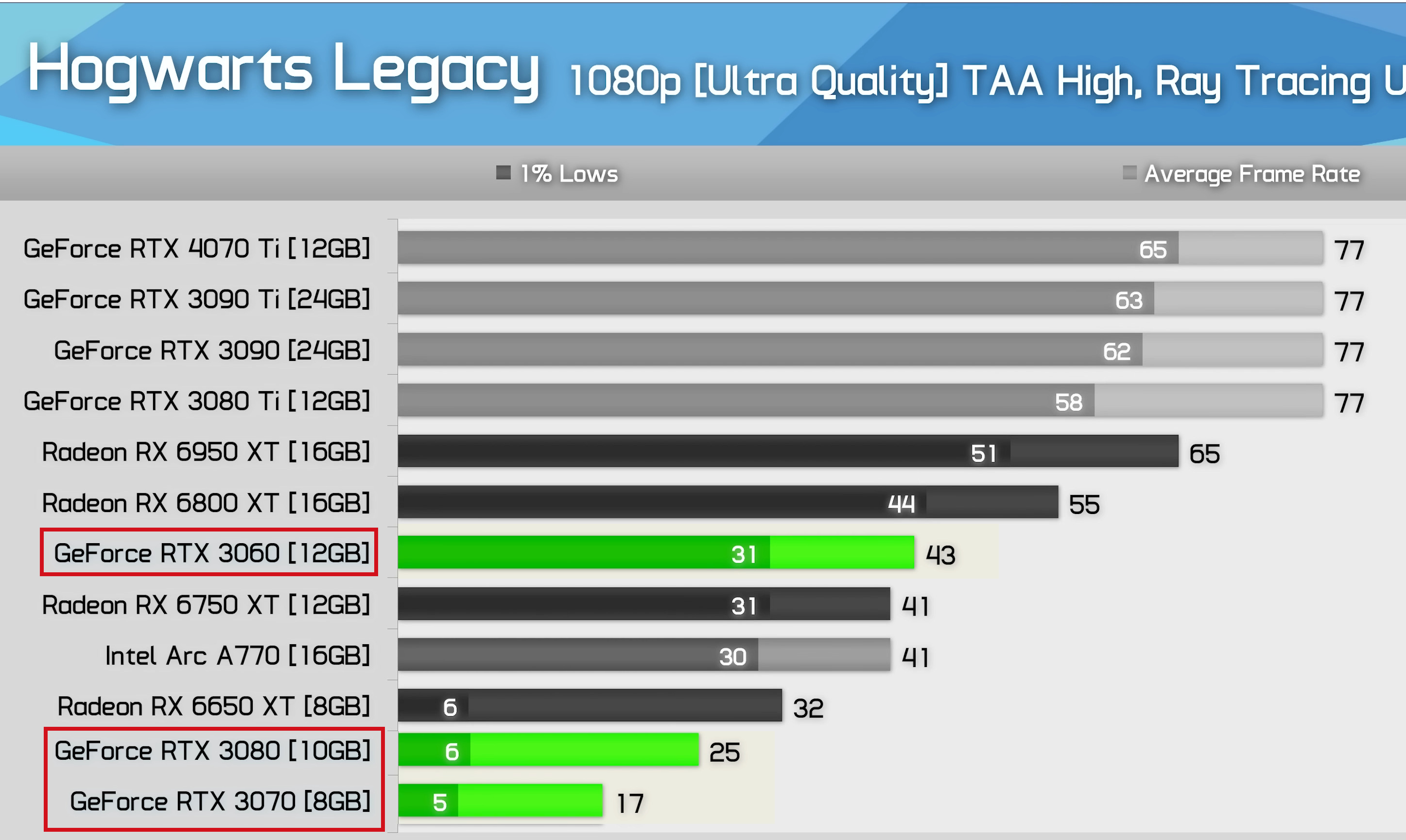

Well, the GTX/RTX X060 lineup was always meant to be the lower-midrange segment, so I'm perfectly fine with these changes IF it means we're back to having a decent sub-$250 GPU option.

Of course, these changes won't make any sense if we keep the current pricing tiers, but if the efficiency (FPS per dollar spent) improves substantially over the current 3060, then the tradeoff will be worth it.
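To put the FPS-per-dollar idea into numbers, here's a quick sketch. The figures are made up purely for illustration (not real benchmarks or prices), and `fps_per_dollar` is just a hypothetical helper. The point is that a slightly slower card can still be the better deal if its price drops enough.

```python
def fps_per_dollar(avg_fps: float, price_usd: float) -> float:
    """Average frames per second delivered per US dollar of card price."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return avg_fps / price_usd


# Hypothetical figures for illustration only; not real benchmarks or prices.
current_card = fps_per_dollar(avg_fps=60.0, price_usd=300.0)  # 0.2 FPS/$
new_card = fps_per_dollar(avg_fps=54.0, price_usd=240.0)      # 0.225 FPS/$

# Relative change in value: positive means more FPS for every dollar spent,
# even though the hypothetical new card is 10% slower in absolute terms.
gain = new_card / current_card - 1
print(f"current: {current_card:.3f} FPS/$, new: {new_card:.3f} FPS/$")
```

With these made-up numbers, the new card gives up about 10% of the raw frame rate but delivers roughly 12.5% more FPS per dollar, which is the kind of tradeoff I'd be happy with.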

Right now the low-end segment has almost no decent GPU options, even more so in Nvidia's corner. Maybe this will change with the 40xx series.