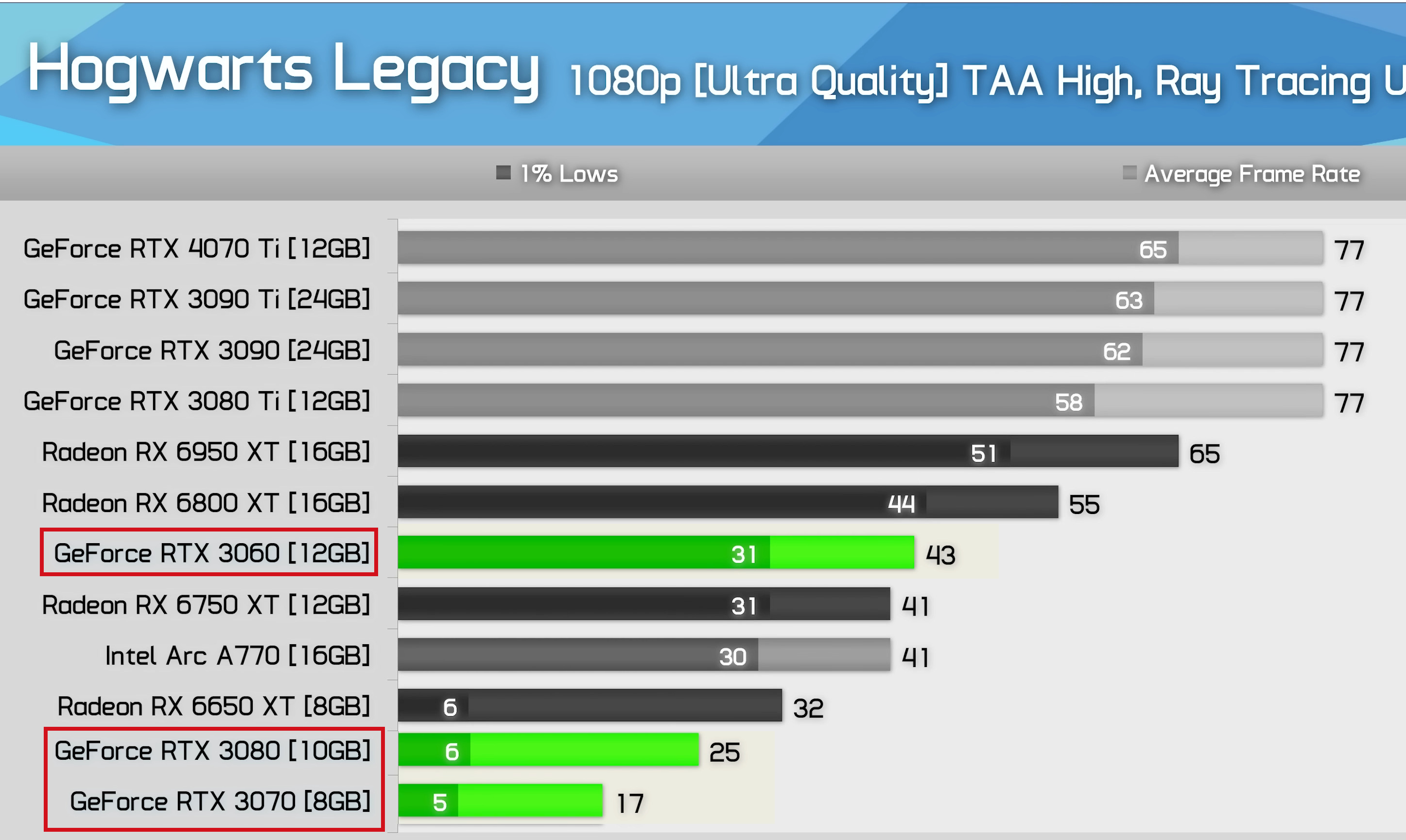

A reputable hardware leaker shares the potential specifications for Nvidia's GeForce RTX 4060 gaming graphics card.

Nvidia RTX 4060 Specs Leak Claims Fewer CUDA Cores, VRAM Than RTX 3060 : Read more
