newtechldtech


Maybe it is a nice idea for all SSD makers to allow the users to underclock the SSD controller for lower power consumption... I will send them all emails for this.

It would be interesting if there were a standard NVMe command set for querying & configuring SSD power levels.

To be fair, if they stopped making only PCIe x4 SSDs the problem would solve itself. Not every SSD has to max everything out, and a good chunk of the reason the P31 is so good is that the controller uses half the channels of the competition. If they weren't all pushing maximum performance and were instead shooting for efficiency, I'd imagine there would be even better drives efficiency-wise than the P31.

> Idle, average daily work, and fully loaded temperatures and power consumption (with and without a heatsink) would be really good to know.

Yeah, I am looking for one for a laptop, so temperature and power usage are what I care about most.

> How do you mount SSDs on motherboards with their own integrated heatsink for the slot? Do you use a thermal pad, a blob of heatsink compound, or just rely on there being enough contact for adequate heat conduction?

The mobos I've used always have a thermal pad between the heatsink and the M.2 slot, often on both the top and bottom to give increased cooling to both sides. It's not a perfect solution, as the airflow to the bottom of the SSD will be negligible at best, but again you tend to need to hit the SSD really hard to heat it up.

> You list the 4TB TeamGroup A440 Pro, but I believe you tested the A440 Pro Special Series. I don't believe the non-special series performs the same as the special series. Can you confirm, since there is about a 30 or 40 USD difference?

I believe that when we reviewed them, Teamgroup only had the A440 Pro, called "Pro" on the 1TB/2TB variants and "Pro Special" only on the 4TB model. So I guess we need "Special" on our 4TB model, yes. I'll go fix that. Thanks for the heads up.

One thing I look at is whether an SSD is single-sided or double-sided. In the case of the 2 TB Samsung 990 Pro that I just got at Amazon for $120, it appears to be single-sided. That should largely avoid the need for much in the way of "underside" cooling.

Yeah, the Phison E26 drives are a concern, along with other double-sided solutions. I think most of the heat is in the controller rather than the NAND, though, so if the controller is on top it should be good.

> Again, no Optane 905p or p5800x? Sure they are discontinued but they are readily available and should stack up well against other SSDs. Glad I got a p44 pro for my game drive...

Fine, I've added the two Optane drives where we have test results. They're in the 1TB table and have incredibly high QD1 random IO.

I think it was fair to leave them out, since both are discontinued, but I guess the people have spoken!

Another reason why I will only buy Samsung drives. Their low-power mode is wonderful in notebooks, and it can be just as quick as the normal power mode.

Thank you!

> While I'm certain you spent a lot of time testing these M.2s, synthetic tests are pretty meaningless to consumers and real world use. No different than GPU testing and only publishing synthetic results. The following from Tech Power Up is an example of some of the best testing I've seen from anyone and certainly is relatable to most people for their purchasing.
>
> Neo Forza NFP495 4 TB Review - The new TenaFE SSD Controller (www.techpowerup.com)
>
> The Neo Forza NFP495 is available at an astonishingly low cost of only $175 for the 4 TB model, and it's the first SSD we've reviewed that utilizes the new TenaFE TC2201 controller. Price/performance is topping our charts and real-life numbers are really good, too.

I happen to really like the synthetics in Tom's SSD testing, since they show things like how IOPS scale with queue depth and give you plots showing how that and sustained write performance directly compare between competing drives. They also provide average & peak power usage + power-efficiency. That's the good part.

| Title | Total Spread | NVMe Spread | SATA Spread | Slowest NVMe v Fastest SATA |
|---|---|---|---|---|
| Age of Empires IV | 36.4% | 21.5% | 11.7% | 0.5% |
| Deathloop | 21.7% | 3.9% | 14.6% | 2.2% |
| Doom Eternal | 65.4% | 11.5% | 14.3% | 29.8% |
| F1 2022 | 10.8% | 4.8% | 5.1% | 0.5% |
| Red Dead Redemption 2 | 14.9% | 5.8% | 7.1% | 1.4% |
| Unreal Engine 5 | 43.2% | 23.4% | 12.0% | 3.6% |
| Watch Dogs Legion | 36.2% | 23.8% | 10.0% | 0.0% |

| Title | Total Spread |
|---|---|
| Cyberpunk 2077 | 10.3% |
| Far Cry 6 | 14.0% |

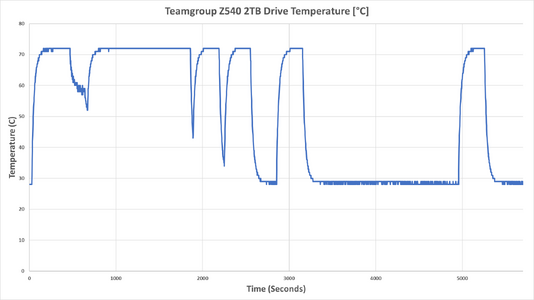

> Here are the results of our testing of the Teamgroup Z540 2TB as an example (this is during the 30-minute sustained write test, which is followed by increasingly long idle periods to let the SSD recover).
>
> The maximum temperature is consistently 72C, nowhere near the throttling point.

Uh, whether they say so or not, that perfectly-flat ceiling sure looks like throttling to me! It would be interesting to line it up with a performance graph, because I'll bet you'd see performance fall off whenever it hits that ceiling.

> It takes 10 minutes of futzing about with the data for each drive, but if you really find it useful, let me know. I can work on making them for future reviews at least.

I'll tell you what I think is important, and then perhaps you can think about the best way to address these concerns in your reviews.

> But again, it's a bit more one of those anecdotal evidence things and an indication of SSD temps in our particular test PC rather than a true indication of how the drive runs everywhere. Stick them in a mini-ITX case with restricted airflow and temps would naturally be a lot higher.

Understood, but you know that's exactly how some people are going to use them - especially if they don't know otherwise! Or in laptops, for drives that don't come with their own heatsink.

There's a sustained load, writing data as fast as possible, and the bottleneck at this point is a combination of NAND and controller. Initially, these Phison E26 drives write in pSLC mode, so the Z540 spits out nearly 12 GB/s of data to the NAND for about 20 seconds (~250 GB written, give or take). Then the cache fills up and it writes directly to the NAND in TLC mode at around 3.7 GB/s. This goes on for about seven minutes, or ~420 more seconds, and writes another ~1,500 GB.
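As a quick sanity check on the write-volume figures for the Z540, the arithmetic can be sketched in a few lines of Python. The rates and durations are the rounded numbers quoted in the post, so the totals only approximate the ~250 GB and ~1,500 GB figures; the constant and function names are purely illustrative.

```python
# Rounded figures quoted in the post for the Z540's sustained write test.
PSLC_RATE_GB_S = 12.0   # "nearly 12 GB/s" while the pSLC cache lasts
PSLC_SECONDS = 20       # "about 20 seconds"
TLC_RATE_GB_S = 3.7     # direct-to-TLC rate once the cache is full
TLC_SECONDS = 420       # "about seven minutes"


def gb_written(rate_gb_s, seconds):
    """Data written at a constant rate, in GB."""
    return rate_gb_s * seconds


pslc_gb = gb_written(PSLC_RATE_GB_S, PSLC_SECONDS)
tlc_gb = gb_written(TLC_RATE_GB_S, TLC_SECONDS)
total_gb = pslc_gb + tlc_gb

print(f"pSLC phase: ~{pslc_gb:.0f} GB")
print(f"TLC phase:  ~{tlc_gb:.0f} GB")
print(f"Total:      ~{total_gb:.0f} GB")
```

With these rounded inputs the two phases work out to roughly 240 GB and 1,554 GB, which is in line with the "give or take" figures in the post and adds up to most of a 2TB drive.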

> I'll tell you what I think is important, and then perhaps you can think about the best way to address these concerns in your reviews.
>
> Heat affects both drive performance and longevity. Therefore, readers will likely want to know how drives compare in their sensitivity to heat and how much they need good airflow. Even though your setup differs from theirs, they'll at least know which drives are more sensitive to throttling and which drives want a better heatsink and/or airflow.
>
> I guess another reason to look for signs of throttling is that it suggests paying yet more attention to cooling could likely unleash even more performance.

I'm actually not sure what the "ideal" temperatures are for NAND. I know HDD studies (Backblaze and others) have shown that components that are too cold can be just as detrimental as ones that are too hot. Granted, HDDs have moving parts, but I think there may be some correlation between temperatures and NAND program/erase cycles. I suspect there's a pretty wide range of about 30C to 60~70C where everything is fine.

I hear you, but it's too flat and always shoots up until it hits that exact threshold, then holds it, never going over. That really looks like temperature throttling. It takes a control system to achieve such precise behavior, and it sure looks like temperature is the variable they're controlling for. I'll bet plots of any other variable aren't as level as those plateaus, during those same time periods.

> All of the E26 drives (with proper cooling) behave this way. Take away the cooling and run a bare drive, and the steady state performance collapses to around 400 MB/s or something. I don't have the numbers right here, but it's really bad and the controller hits 85C before you see the throttling kick in.

Pull off the heatsink from an Intel i9 CPU and it'll throttle down to a few hundred MHz. That doesn't mean it's not throttling even with the heatsink - just that the throttling isn't nearly as bad.

I haven't exactly seen the claim about "too cold", but I did see an interesting table showing that you get better retention from writing the data at higher temperatures and then storing the drive at lower temperatures. There's certainly an upper limit, though; it's not like you want the drive to be as hot as possible during writes. Plus, heating it up affects the other cells in the drive, causing them to lose charge faster.

"fine" = warranty-covered. However, if you want to get the most longevity, then you'd have to control conditions more tightly. The NAND makers know the details and tell the drive developers. We just need to look for bits of that information that get published or leak out.

> Except, all of the above temperatures are actually just the controller temp.

If true, I'll bet the NAND chips have embedded temperature sensors - it's just a question of the drives exposing them.

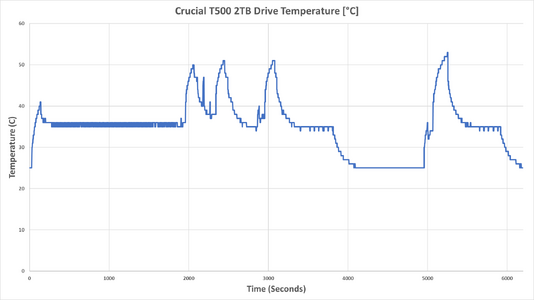

> all indications are that any SSD running at ~75C or lower on the controller is nowhere near throttling and should be perfectly fine.

If we had the temperature + performance plots lined up, it would be much more convincing. I know it's work for you guys, so it's not like I'm demanding that you do it. My point is just that it'd be better if you could show us, rather than tell us.
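For anyone wanting to line up temperature and performance themselves, here is a minimal sketch of one way to do it, assuming temperature and write-speed samples logged at the same intervals. The sample values are made up for illustration and are not from any review; `find_plateaus`, `mean_speed_on_and_off_plateau`, and the 1C margin are hypothetical choices. It flags stretches where the temperature is pinned at its ceiling and compares write speed inside and outside those stretches.

```python
from statistics import mean


def find_plateaus(temps, margin=1.0, min_samples=3):
    """Return (start, end) index pairs, end exclusive, for runs of at least
    min_samples readings within `margin` degrees of the hottest reading."""
    ceiling = max(temps)
    plateaus = []
    start = None
    for i, t in enumerate(temps):
        pinned = t >= ceiling - margin
        if pinned and start is None:
            start = i                      # a run at the ceiling begins
        elif not pinned and start is not None:
            if i - start >= min_samples:   # keep only runs long enough to matter
                plateaus.append((start, i))
            start = None
    if start is not None and len(temps) - start >= min_samples:
        plateaus.append((start, len(temps)))  # run lasted until the final sample
    return plateaus


def mean_speed_on_and_off_plateau(speeds, plateaus):
    """Average write speed inside vs. outside the plateau runs (None if empty)."""
    pinned = {i for start, end in plateaus for i in range(start, end)}
    on = [s for i, s in enumerate(speeds) if i in pinned]
    off = [s for i, s in enumerate(speeds) if i not in pinned]
    return (mean(on) if on else None, mean(off) if off else None)


# Made-up samples, one per logging interval (NOT measurements from any drive).
temps_c = [45, 55, 65, 72, 72, 72, 72, 60, 50, 66, 72, 72, 72, 58]
speeds_mbps = [3700, 3700, 3700, 3100, 2900, 3000, 2950,
               3700, 3700, 3700, 3000, 2900, 3050, 3700]

plateaus = find_plateaus(temps_c)
on_mean, off_mean = mean_speed_on_and_off_plateau(speeds_mbps, plateaus)
print("plateaus (sample index ranges):", plateaus)
print("mean MB/s on plateau:", on_mean, "| off plateau:", off_mean)
```

If the on-plateau speed comes out clearly lower, that would be consistent with throttling, though it would not prove it, since a filled cache also lowers write speed. If the two are about the same, the flat ceiling looks more like plain thermal equilibrium.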

It may not line up with what was shown above, but the snippet in the review seems like it would match the beginning of the temperature graph (I'm making this guess based on the timing of the write hole mentioned in the review and the first temp graph above).

> I hear you, but it's too flat and always shoots up until it hits that exact threshold, then holds it, never going over. That really looks like temperature throttling. It takes a control system to achieve such precise behavior, and it sure looks like temperature is the variable they're controlling for. I'll bet plots of any other variable aren't as level as those plateaus, during those same time periods.
>
> If it were a byproduct of some other energy-intensive activity, then you'd expect to see temperature continuing to build, gradually. Or gradually approach the plateau with a nice, long rolloff and maybe undulate a bit.

I wonder if I can attach a CSV here? Hmmm... Seems like I can!

So much of this discussion could've been avoided if I could just see the data for myself. That said, the NAND vs. controller temperature is a good point and something I wish we could get confirmation of.

Thanks for all your testing & taking the time to reply. I do genuinely respect & appreciate your dedication.

> For it to match the onboard thermal reporting, it should mean the green line is the controller's temperature and the red line is the hottest NAND chip's temperature.

It is this; W1zzard forgot to add a line about the two thermal sensors in that review.

Unlike most other SSDs, the Samsung 980 Pro has two thermal sensors, one inside the controller (green line) and another that measures the temperature of the flash chips (red line).