When do you think hardware and software development first shifted onto an exponential cadence, imperceptibly at first and later massively and boldly?

Say, in the early 1990s there was a lot of software doing much the same things as the software of today. Yet you could not play good-quality video or games, or create complex, lifelike animations, on the average home computer.

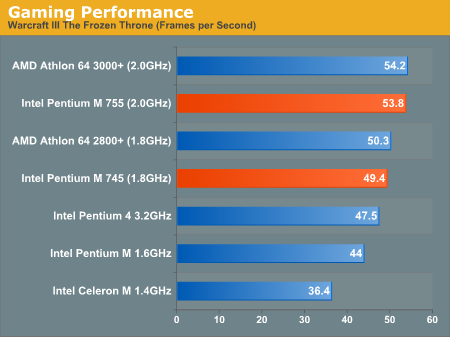

Around 2003-2005, I suppose, came the time when hardware began accelerating massively, with some personal computers having up to 1 GB of RAM, plenty of storage, and processors exceeding 3 GHz. I believe that was the point at which hardware began climbing the ladder of ever faster development and started to outpace the requirements of software. Only a decade earlier it was precisely the other way around.

Now, what we are facing today, at the beginning of the 2020s, is hardware that is extremely powerful, more powerful than it has ever been. There are home computers with graphics cards carrying some 16,000 cores, and CPUs with 32 cores running at speeds that are incredible for the average user. A new phenomenon has appeared, however. Software has been getting greedy, incredibly greedy, with this increase in performance. Programs which could run on a 386 with 1 MB of RAM in the 90s now require many gigabytes of RAM. That is because, instead of writing software smartly and cleanly, programmers build impossibly big structures which are fully understood by only a few people and which produce innumerable errors if any one part of them is touched. Now, the old timers, the good old programmers, know that this comes from impractical thinking and programming; the same software could easily be written better and maintained more easily, with the core left untouched by the kind of peripheral changes that, as just described, prove so injurious. This is all because the generations have shifted and new programmers haven't the capacity and capabilities of the ones born in the 70s and before.

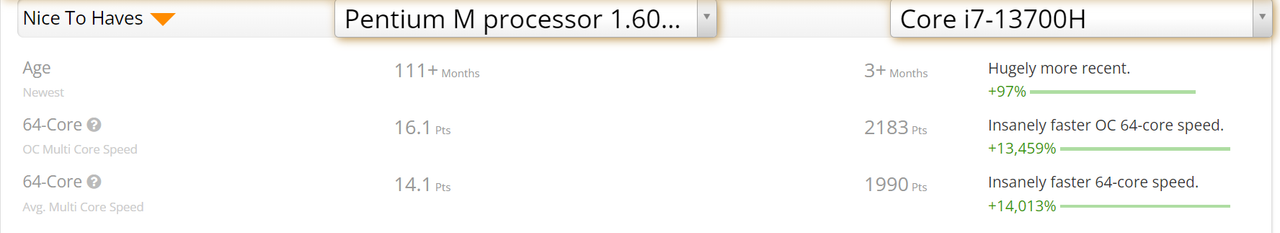

So we've gone from software running on painfully slow hardware to immensely fast hardware running badly written software that takes up hundreds of times more resources than it should. And where does this leave us? What's bound to happen in the future? Going from 386 CPUs up to the Core i9-13900KS took us quite some time; so did going from practically written software to software written in a way which proves we've forgotten the very basics, the root from which knowledge springs.

Do write up and

Thank you!

It surely rests on a good foundation of learning from previous years.
