Sorry to say, but most OEM life-cycles with big Corp are around 2 years for laptops/PCs and 5 years for Servers.

My rant was specifically about consumer/retail motherboards. Corporations rarely buy retail motherboards; they buy pre-made PCs from Dell, Lenovo and others, which typically use proprietary or exclusive motherboard designs.

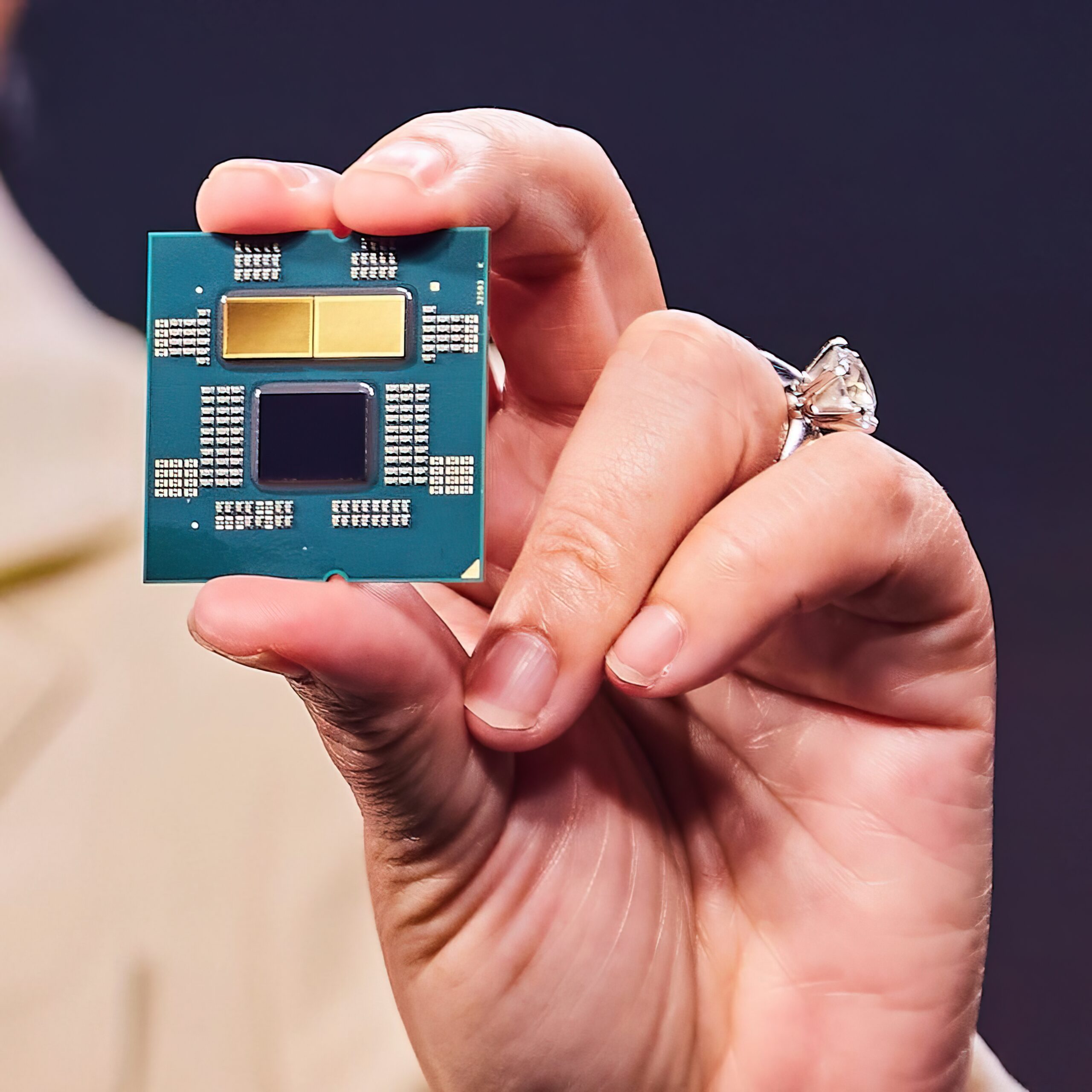

News AMD Intros Zen 4 Ryzen 7000 CPUs and 600-Series Chipset: Up to 5.5 GHz, 15%+ Performance, RDNA 2 iGPUs, PCIe 5, DDR5


My boyfriend actually dealt with people asking if the 6550XT would fit into their old prebuilt as an upgrade quite frequently for a while after it came out.

Honestly, I'm thinking they're beyond lucky if a card with a new architecture works at all on an old prebuilt with whatever finicky limited version of a BIOS they have.

2Be_or_Not2Be

Distinguished

Sorry to say, but most OEM life-cycles with big Corp are around 2 years for laptops/PCs and 5 years for Servers.

Well, I don't know many businesses, especially ones with large-scale deployments, that have a lifecycle of only 2 years for laptops/PCs. Two years might not even be enough time to replace the whole fleet when you have tens of thousands of machines to swap out. I've more commonly encountered 4-5 years for business PCs. Especially with more powerful CPUs and SSDs, performance was already more than the average office user needed, so the usable lifecycle tended to be longer.
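To put rough numbers on the rollout problem, here is a minimal sketch. The 20,000-machine fleet and the 250 working days per year are illustrative assumptions, and the function name is made up for this example.

```python
def replacements_per_workday(fleet_size: int, lifecycle_years: float,
                             workdays_per_year: int = 250) -> float:
    """Average number of machines to swap per working day so that
    every machine in the fleet is replaced once per lifecycle."""
    return fleet_size / (lifecycle_years * workdays_per_year)


# Hypothetical fleet of 20,000 machines under different refresh targets.
for years in (2, 4, 5):
    rate = replacements_per_workday(20_000, years)
    print(f"{years}-year cycle: {rate:.0f} machines per workday")
```

Under those assumptions a 2-year cycle means swapping about 40 machines every working day, versus about 16 on a 5-year cycle.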

I've more commonly encountered 4-5 years for business PCs. Especially with more powerful CPUs and SSDs, performance was already more than the average office user needed, so the usable lifecycle tended to be longer.

I've worked at a couple of places that had mostly 5+ year old systems too. One of them even still had UltraSparc III boxes for most people in the engineering department to remote into and run scripts from, to interact with the server farm that did the actual heavy lifting.

hannibal

Distinguished

The only thing we have from AMD themselves is this:

If you look at mobos today, they are advertised as supporting up to 105W CPUs, not up to 140W.

So common sense would be that 170 is the new 105.

The thing from tom's saying that the 170 is the new 140 is out of the blue, unless they have a quote from AMD that they didn't care to show us.

But until clarified both could be true.

Now it has been confirmed. TDP 125W, PPT 170W.

edited to correct the TDP...

AMD's Robert Hallock & Frank Azor Talk AMD Ryzen 7000 CPUs & AM5 Platform Features: 170W Socket Power Limits, 5.5 GHz Stock Clock Speeds, Smart Access Storage & More

AMD Ryzen 7000 CPUs & AM5 platform features have been detailed by Robert Hallock and Frank Azor during the PCWorld 'The Full Nerd' show.
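For reference, the 125W / 170W pair is consistent with the 1.35x PPT-to-TDP ratio AMD used on AM4, where 105W TDP parts had a 142W PPT. Whether AM5 keeps that ratio is an assumption here, not something the thread confirms, and the function names are made up for illustration.

```python
# Assumption: AM4's PPT-to-TDP ratio (1.35x) also applies to AM5.
AM4_PPT_RATIO = 1.35


def ppt_from_tdp(tdp_watts: float) -> float:
    """Package power limit implied by a given TDP under the assumed ratio."""
    return tdp_watts * AM4_PPT_RATIO


def tdp_from_ppt(ppt_watts: float) -> float:
    """TDP implied by a given package power limit under the assumed ratio."""
    return ppt_watts / AM4_PPT_RATIO


for tdp in (105, 125):
    print(f"TDP {tdp} W -> PPT {ppt_from_tdp(tdp):.2f} W")
print(f"PPT 170 W -> TDP {tdp_from_ppt(170):.1f} W")
```

With that ratio, a 170W PPT works out to a TDP of roughly 125W, and a 105W TDP to a PPT of roughly 142W, which matches the "105 vs 140" board ratings mentioned earlier.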


TerryLaze

Titan

Now it has been confirmed. TDP is 145w. 170 is what socket can handle.

Well "TDP" is 145, real TDP (as in the power the socket provides to the CPU under normal "TDP") is 170, and the socket can handle much more depending on the quality of the mobo, otherwise there would be no overclocking.

-Fran-

Glorious

My rant was specifically about consumer/retail motherboards. Corporations rarely buy retail motherboards; they buy pre-made PCs from Dell, Lenovo and others, which typically use proprietary or exclusive motherboard designs.

The point was about the socket refresh cycle you mentioned: AMD, Intel and nVidia all do follow OEM cycles to a big degree. I can't quantify how significant that is, but I'm willing to say it's not a trivial amount of "influence" on how much longevity they give to the platforms. If AMD wants to support the same socket config for more than 2 years, then Dell and HP and others will also need to do that, to a degree. That's the context of mentioning OEM refresh cycles for big Corp. Since AMD is now more, let's say, "alluring" for OEMs, they'll have to concede to a degree on these cycles. To me, at first glance, it makes more sense to keep a socket alive for longer, but with the big economies of scale involved, it may not make sense. I'd love to know more about that from the OEMs' side, but I do believe I'm not off the mark.

Well, I don't know many businesses, especially ones with large-scale deployments, that have a lifecycle of only 2 years for laptops/PCs. Two years might not even be enough time to replace the whole fleet when you have tens of thousands of machines to swap out. I've more commonly encountered 4-5 years for business PCs. Especially with more powerful CPUs and SSDs, performance was already more than the average office user needed, so the usable lifecycle tended to be longer.

Well, but most of them do just that. It is not cheap. Also, it's not like they buy, say, 20000 laptops in one go. You have to consider hire rate and internal shuffling of machines and all that. So there's the rollout speed and the refresh speed. The target average, I can confidently say, is now ~2 years for employees and about 5 years for servers.

Regards.

If AMD wants to support the same socket config for more than 2 years, then Dell and HP and others will also need to do that, to a degree. That's the context of mentioning OEM refresh cycles for big Corp.

Having a socket that lasts 10 years wouldn't stop manufacturers from doing platform refreshes and selling fundamentally the same things with a bigger model number. Between major new IO additions, all chipsets look mostly the same and provide few to no benefits other than disambiguating what CPUs motherboards should be compatible with out-of-the-box.

Little Cat

Reputable

Having a socket that lasts 10 years wouldn't stop manufacturers from doing platform refreshes and selling fundamentally the same things with a bigger model number. Between major new IO additions, all chipsets look mostly the same and provide few to no benefits other than disambiguating what CPUs motherboards should be compatible with out-of-the-box.

Is it bad that it has an old socket?

Is it bad that it has an old socket?

At some point, old sockets become a hindrance to deploying new standards either from running out of spare pins for additional power/signalling requirements or the socket design not having the required electrical properties needed to support higher speeds. PCBs themselves may also need design and layout changes to meet tighter requirements on new standards.

-Fran-

Glorious

Having a socket that lasts 10 years wouldn't stop manufacturers from doing platform refreshes and selling fundamentally the same things with a bigger model number. Between major new IO additions, all chipsets look mostly the same and provide few to no benefits other than disambiguating what CPUs motherboards should be compatible with out-of-the-box.

That is exactly my point: OEMs don't want that since it's harder to justify "full system upgrades". Why would HP or Dell want to upgrade just the CPUs of a whole server farm instead of the full racks? That's mostly money going to AMD/Intel and very little to the OEMs. That is where they influence AMD and Intel to do refreshes, even when the socket itself can still be used. As I said, AMD and Intel have had long-lived sockets with stupid small differences due to "reasons". What AMD did now is a huge anomaly because they needed the mindshare over getting OEMs' attention. I guess they're now in a better position and OEMs may start offering AMD now, even if Intel doesn't want that to happen.

Regards.

That is exactly my point: OEMs don't want that since it's harder to justify "full system upgrades". Why would HP or Dell want to upgrade just the CPUs of a whole server farm instead of the full racks? That's mostly money going to AMD/Intel and very little to the OEMs.

In most production environments I've seen, PCs and servers retire pretty much as-built with few to no upgrades, since they were deployed with everything needed for the foreseeable future and left as close to untouched as possible to avoid unnecessary downtime from upgrades gone wrong. Racks of new stuff get put in, workloads from the oldest racks that are either defective or no longer cost-effective to run get migrated to some of the newer stuff, then the old/problematic stuff is dumped once the migration is confirmed successful to make room for the next round.

Hell, until 2021, we were using Haswell-based workstations for the developers. A few had Xeon 4c/8t CPUs, but others were 4c/4t. Then there were some i5 workstations, also Haswell.

I think there were one or two Sandy Bridge based machines still being used. Optiplex 3010, small desktop form factor.

The developer machines definitely had more RAM than they started with, though.


Is it bad that it has an old socket?

It's not much of an issue physically. A socket is nothing more than contact points for the CPU so it can communicate with the motherboard and other devices on it.

It is entirely possible to keep the same physical socket but change the layout electrically. Of course, doing so means it's no longer compatible with older CPUs. And quite often, you might get some folks trying to put an old CPU into a new socket and vice versa. So changing the socket physically prevents that.

It is a deal breaker when companies like AMD decide to launch things like the RX6500 that suffer massive performance bottlenecking from having excessively limited bus width which is almost certainly going to get worse as entry-level GPUs get faster.

If you are in the "I wish CPU manufacturers kept the same sockets for 100 years" club, then you should also want to have the most future-proof IOs available from components installed on the board so you don't end up having to upgrade the board just because the IO suddenly got old.

Most people I know including myself keep their PCs for 10+ years when we include backup/secondary use life. Pretty sure most of the IO bandwidth they may not need today will be very handy at some point down the line. Most people couldn't imagine needing SATA3 until SSDs came along, couldn't be bothered with USB3 until speedy USB3 thumb-drives and SATA3 enclosures for SSDs became affordable, and PCIe 3.0 in x16 flavor is a significant contributor to how the 4GB RX580 and GTX1650 Super can hold their ground against newer GPUs with truncated PCIe interfaces. I didn't need any of the new IO available on my i5-3470/h77 at the time I put it together but needed all of it by the 5th year out of 9 years as my primary PC. Having reasonably future-proof IO for things I was in no hurry to get into contributed to doubling its useful life.

In the case of the 6500, I have to ask: is it a problem with the mainboard or the graphics card?

Btw, regarding SSD performance, is it fast because it's an SSD or because of its NVMe interface? There are still many people using the SATA3 interface. It's only a bottleneck under sequential read/write, not normal usage. The serial nature of I/O means there is hardly a chance to saturate the interface unless you are transferring huge files.
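A rough sketch of why SATA3 mostly shows up as a limit on big sequential transfers. The 6 Gb/s line rate and 8b/10b encoding are the SATA III figures; the 10,000 IOPS workload and the 50 GB file are illustrative assumptions, and the helper names are hypothetical.

```python
def sata3_ceiling_mb_s() -> float:
    """Payload ceiling of SATA III: 6 Gb/s line rate with 8b/10b encoding."""
    return 6e9 * 8 / 10 / 8 / 1e6


def random_io_mb_s(iops: float, block_bytes: int = 4096) -> float:
    """Throughput of a small-block random workload, in MB/s."""
    return iops * block_bytes / 1e6


ceiling = sata3_ceiling_mb_s()
light = random_io_mb_s(10_000)  # hypothetical desktop-style workload

print(f"SATA III ceiling: {ceiling:.0f} MB/s")
print(f"10k IOPS at 4 KiB: {light:.2f} MB/s ({light / ceiling:.0%} of the ceiling)")
print(f"50 GB sequential copy at the ceiling: {50_000 / ceiling:.0f} s")
```

Under these assumptions the small-block workload uses well under a tenth of the link, while the large copy is pinned at the interface limit for over a minute.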

This is not me being or playing "devil's advocate", but there is a point at which you just can't fight "stupid". AMD, Intel and nVidia can only do so much and they won't ever say publicly "this is a bad product, don't buy it". That's, well, a stupid expectation and it won't happen in any way. You can only read the fine print and do your very best to help people understand what it means. After that, there's nothing anyone can do.

We can debate for hours to no end what each Company has done and how egregious it has been for the world in terms of misleading or dubious marketing, but the TL;DR will always be the same: if someone is convinced they know better then there's little to nothing you can do and whatever the outcome, it'll be on them and their "advisors".

Again, the point of bringing the 6500XT into the discussion wasn't whether it was a good or bad video card, but the I/O support and its longevity. The arbitrary 10 year number is because of the original post I quoted.

Regards.

Regarding the 6500XT: I will say that most people who buy it are not even running it on PCIe 4.0, they are running it on PCIe 3.0.
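A rough sketch of the per-direction bandwidth numbers behind the x4 complaint. The transfer rates (8, 16 and 32 GT/s for PCIe 3.0, 4.0 and 5.0) and the 128b/130b encoding come from the PCIe specs; the helper name is hypothetical, and protocol overhead beyond line encoding is ignored.

```python
# Raw transfer rate per lane in GT/s for each PCIe generation.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 through 5.0


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a PCIe link."""
    return PCIE_GT_PER_S[gen] * ENCODING / 8 * lanes


for label, gen, lanes in (("4.0 x4", 4, 4), ("3.0 x4", 3, 4), ("3.0 x16", 3, 16)):
    print(f"PCIe {label}: {pcie_gb_per_s(gen, lanes):.2f} GB/s")
```

So an x4 card dropped into a PCIe 3.0 slot gets about half of its native 4.0 x4 bandwidth, and about a quarter of what a 3.0 x16 card has to work with.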

-Fran-

Glorious

In most production environments I've seen, PCs and servers retire pretty much as-built with few to no upgrades, since they were deployed with everything needed for the foreseeable future and left as close to untouched as possible to avoid unnecessary downtime from upgrades gone wrong. Racks of new stuff get put in, workloads from the oldest racks that are either defective or no longer cost-effective to run get migrated to some of the newer stuff, then the old/problematic stuff is dumped once the migration is confirmed successful to make room for the next round.

That's... What I said... Yes...

Regards,

That's... What I said... Yes...

Companies (OEM customers) cycling their equipment isn't the same as your allegation that OEMs want different sockets every two years to force all-new purchases. Most corporate clients aren't going to mess with a system beyond maintenance once deployed until it is retired. How long the socket may last is irrelevant since the system will be replaced regardless.

-Fran-

Glorious

Companies (OEM customers) cycling their equipment isn't the same as your allegation that OEMs want different sockets every two years to force all-new purchases. Most corporate clients aren't going to mess with a system beyond maintenance once deployed until it is retired. How long the socket may last is irrelevant since the system will be replaced regardless.

It is tied to it. Why would an OEM client say "yes, please replace all of these with the same PC"? It makes no sense. There has to be a reason to upgrade/replace units. Also, you're assuming companies don't try to repair/upgrade their laptops or PCs in a mid-cycle refresh. That also happens. RAM and disk replacements happen quite often. Dell and HP, at least, do have technicians on site at big clients to manage all of that, including OS upgrades. Long story short, my point still stands: OEMs do have an influence, whether you like it or not.

On another note, this is very timely:

Regards.

It is tied to it. Why would an OEM client say "yes, please replace all of these with the same PC"? It makes no sense.

They wouldn't be the same PCs since the new stock coming in would have 3-4 generations newer chipsets and CPUs even if the socket remains unchanged and forward/backward compatible.

I don't disagree with that premise, but that's a different issue you're talking about: "not knowing". If you know, then you won't get a "bottom of the barrel" GPU if you want to game with a certain level of quality; if you don't care about quality, then... while it may sound a bit heartless, then it's a non-issue for the buyer? Also, if you're assembling your own PC, then you at the very least need to ask someone else when you don't know. If you don't, then that's on you and you alone. We can all agree the 6500XT is a bad product because the specs are there for anyone to read about them, so the issue you're talking about has a lot of potential avenues of solution. If you're in the unfortunate position in which the 6500XT is your only option, then nothing can be done unless you buy a new PC or upgrade other things like the PSU and/or case, etc.

The reason why the 6500XT was used in that context was the PCIe x4 restriction. If you buy a PC now and 10 years later the bottom of the barrel GPU blows your 10 year old GPU out of the water, but it's just x4 and PCIe10 (humour me), then your choices, as stated above, are quite simple: upgrade everything, deal with the x4 restriction, or just buy the best you can which is x16 and carry it over when you can upgrade the rest. In terms of what the future holds, no one can be 100% certain. Who knows, maybe PCIe5 will be used for another ~7 years like PCIe3 even when PCIe6 spec is out there because it's not economically viable for the consumer market. We could even move to a completely different standard and it would matter even less. Maybe you're old enough to remember ISA -> PCI -> AGP -> PCIe.

Regards.

You forgot Vesa 🤪🥸
